XCP-ng 8.3 betas and RCs feedback 🚀

-

@john-c No UEFI on HP DL Gen8.... it too bad because they work just fine. HP DL Gen9 servers support UEFI boot. By the time XCP 9.x rolls around it will be time to trash the G8 machines anyway. But lack of BIOS boot will limit cheap lab/home machines...

-

@john-c

HP says Gen9 has UEFI, and probably those are now in the $200 shipped range with 40 threads and 128gb of RAM like the gen8 were when I bought them.

I'll have to upgrade at some point, probably going to be a mini system next time.

As an FYI, I think Vsphere8 products require UEFI, I saw a notice in the instructions about this, haven't installed yet. Yes testing the competition and mostly for experience migrating off of VMware.

-

@Greg_E said in XCP-ng 8.3 betas and RCs feedback

:

:@john-c

HP says Gen9 has UEFI, and probably those are now in the $200 shipped range with 40 threads and 128gb of RAM like the gen8 were when I bought them.

I'll have to upgrade at some point, probably going to be a mini system next time.

As an FYI, I think Vsphere8 products require UEFI, I saw a notice in the instructions about this, haven't installed yet. Yes testing the competition and mostly for experience migrating off of VMware.

Bargain Hardware carries a HPE DL360 Gen9 for only £80, a HPE DL380 Gen9 for £80 and a HPE DL560 Gen9 for £237. Check them out! https://www.bargainhardware.co.uk

I used them for my Home Lab's Dell PowerEdge servers, they are really good. I haven't had problems with them or their products if I do they are very good at helping out. Also are a good source for access to components and upgrades which are hard to get!

-

@Greg_E I saw a similar thing and dealt with it thusly:

Upgrade the master in BIOS to preserve the configuration

Upgrade second (only other, in this case) host in the pool using UEFI boot

Migrate 'master' status to the second host

re-install the first host with UEFI, re-join it to the pool.Before doing this take careful note of the

xe pool-enable-tls-verificationmentioned in the release notes. You won't be able to join the cleanly reinstalled host without it.Typing 'master' so many times has me wishing we had a better word for the pool coordinator.

-

Thanks. I will eventually need to do this on my production system, thankfully it is new hardware and should be good until I retire (next guy's problem).

-

not sure is it XO or Xen issue.

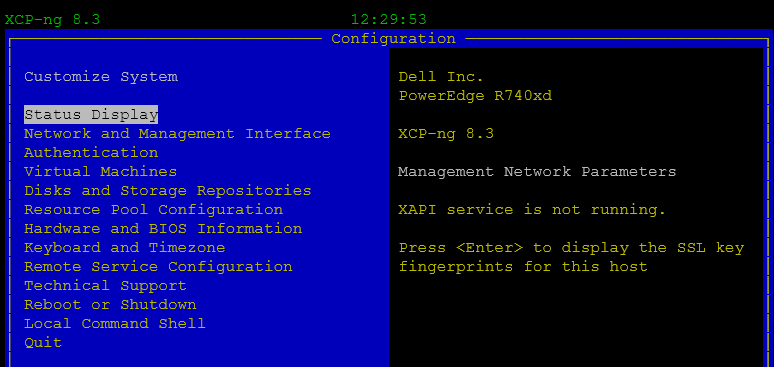

8.3 release. i changed pool master and now it unavailable.master

hosts

Oct 11 12:12:00 rpsrv-cas-caph SM: [420301] lock: opening lock file /var/lock/sm/5cceb02b-bda5-85d1-10c1-b5dfe4fbf66a/sr Oct 11 12:12:00 rpsrv-cas-caph SM: [420301] Setting LVM_DEVICE to /dev/disk/by-scsid/36e43ec6100f01acf292713f000000006 Oct 11 12:12:00 rpsrv-cas-caph SM: [420301] lock: opening lock file /var/lock/sm/845ef0aa-7561-9253-b9fa-0b862c145c54/sr Oct 11 12:12:00 rpsrv-cas-caph SM: [420301] LVMCache created for VG_XenStorage-845ef0aa-7561-9253-b9fa-0b862c145c54 Oct 11 12:12:00 rpsrv-cas-caph fairlock[3895]: /run/fairlock/devicemapper acquired Oct 11 12:12:00 rpsrv-cas-caph fairlock[3895]: /run/fairlock/devicemapper sent '420301 - 77059.294148651hz#006��^?' Oct 11 12:12:00 rpsrv-cas-caph SM: [420301] ['/sbin/vgs', '--readonly', 'VG_XenStorage-845ef0aa-7561-9253-b9fa-0b862c145c54'] Oct 11 12:12:00 rpsrv-cas-caph SM: [420301] pread SUCCESS Oct 11 12:12:00 rpsrv-cas-caph fairlock[3895]: /run/fairlock/devicemapper released Oct 11 12:12:00 rpsrv-cas-caph SM: [420301] Entering _checkMetadataVolume Oct 11 12:12:00 rpsrv-cas-caph SM: [420301] LVMCache: will initialize now Oct 11 12:12:00 rpsrv-cas-caph SM: [420301] LVMCache: refreshing Oct 11 12:12:00 rpsrv-cas-caph fairlock[3895]: /run/fairlock/devicemapper acquired Oct 11 12:12:00 rpsrv-cas-caph fairlock[3895]: /run/fairlock/devicemapper sent '420301 - 77059.464449994hz#006��^?' Oct 11 12:12:00 rpsrv-cas-caph SM: [420301] ['/sbin/lvs', '--noheadings', '--units', 'b', '-o', '+lv_tags', '/dev/VG_XenStorage-845ef0aa-7561-9253-b9fa-0b862c145c54'] Oct 11 12:12:00 rpsrv-cas-caph SM: [420301] pread SUCCESS Oct 11 12:12:00 rpsrv-cas-caph fairlock[3895]: /run/fairlock/devicemapper released Oct 11 12:12:00 rpsrv-cas-caph SM: [420301] Targets 1 and iscsi_sessions 1 Oct 11 12:12:00 rpsrv-cas-caph SM: [420301] lock: closed /var/lock/sm/5cceb02b-bda5-85d1-10c1-b5dfe4fbf66a/sr Oct 11 12:12:00 rpsrv-cas-caph SM: [420301] lock: closed /var/lock/sm/845ef0aa-7561-9253-b9fa-0b862c145c54/sr Oct 11 12:22:19 rpsrv-cas-caph SM: [423642] Raising exception [150, Failed to initialize XMLRPC connection] Oct 11 12:22:19 rpsrv-cas-caph SM: [423642] Unable to open local XAPI session -

- Is that on the released version of XCP-ng 8.3?

- What steps did you follow to change the pool master and why? Was it in the middle of the pool upgrade?

- check xensource.log around the time of the XAPI startup.

-

- release version.

- fresh pool installation. no tasks, just master change via XO.

$ Oct 11 13:26:52 rpsrv-cas-navi xcp-rrdd: [ info||7 ||rrdd_main] GC live_words = 1213142 Oct 11 13:26:52 rpsrv-cas-navi xcp-rrdd: [ info||7 ||rrdd_main] GC heap_words = 1772032 Oct 11 13:26:52 rpsrv-cas-navi xcp-rrdd: [ info||7 ||rrdd_main] GC free_words = 558699 Oct 11 13:29:38 rpsrv-cas-navi xapi: [debug||0 |bringing up management interface D:56aeb415b10f|stunnel] client cert verification 172.29.1.153:443: SNI=pool path=/etc/stun$ Oct 11 13:29:38 rpsrv-cas-navi xapi: [debug||0 |bringing up management interface D:56aeb415b10f|master_connection] stunnel: stunnel start\x0A Oct 11 13:29:38 rpsrv-cas-navi xapi: [debug||0 |bringing up management interface D:56aeb415b10f|stunnel] Started a client (pid:45316): -> 172.29.1.153:443 Oct 11 13:29:38 rpsrv-cas-navi xapi: [ info||0 |bringing up management interface D:56aeb415b10f|master_connection] stunnel disconnected fd_closed=true proc_quit=false Oct 11 13:29:38 rpsrv-cas-navi xapi: [error||0 |bringing up management interface D:56aeb415b10f|master_connection] Caught Xapi_database.Master_connection.Goto_handler Oct 11 13:29:38 rpsrv-cas-navi xapi: [debug||0 |bringing up management interface D:56aeb415b10f|master_connection] Sleeping 256.000000 seconds before retrying master conne$ Oct 11 13:29:52 rpsrv-cas-navi xcp-rrdd: [ info||7 ||rrdd_main] GC live_words = 854843 Oct 11 13:29:52 rpsrv-cas-navi xcp-rrdd: [ info||7 ||rrdd_main] GC heap_words = 880128 Oct 11 13:29:52 rpsrv-cas-navi xcp-rrdd: [ info||7 ||rrdd_main] GC free_words = 25284 Oct 11 13:32:52 rpsrv-cas-navi xcp-rrdd: [ info||7 ||rrdd_main] GC live_words = 1571266 Oct 11 13:32:52 rpsrv-cas-navi xcp-rrdd: [ info||7 ||rrdd_main] GC heap_words = 1772032 Oct 11 13:32:52 rpsrv-cas-navi xcp-rrdd: [ info||7 ||rrdd_main] GC free_words = 200761 Oct 11 13:33:54 rpsrv-cas-navi xapi: [debug||0 |bringing up management interface D:56aeb415b10f|stunnel] client cert verification 172.29.1.153:443: SNI=pool path=/etc/stun$ Oct 11 13:33:54 rpsrv-cas-navi xapi: [debug||0 |bringing up management interface D:56aeb415b10f|master_connection] stunnel: stunnel start\x0A Oct 11 13:33:54 rpsrv-cas-navi xapi: [debug||0 |bringing up management interface D:56aeb415b10f|stunnel] Started a client (pid:46379): -> 172.29.1.153:443 Oct 11 13:33:54 rpsrv-cas-navi xapi: [ info||0 |bringing up management interface D:56aeb415b10f|master_connection] stunnel disconnected fd_closed=true proc_quit=false Oct 11 13:33:54 rpsrv-cas-navi xapi: [error||0 |bringing up management interface D:56aeb415b10f|master_connection] Caught Xapi_database.Master_connection.Goto_handler Oct 11 13:33:54 rpsrv-cas-navi xapi: [debug||0 |bringing up management interface D:56aeb415b10f|master_connection] Sleeping 256.000000 seconds before retrying master conne$ Oct 11 13:35:52 rpsrv-cas-navi xcp-rrdd: [ info||7 ||rrdd_main] GC live_words = 935099 Oct 11 13:35:52 rpsrv-cas-navi xcp-rrdd: [ info||7 ||rrdd_main] GC heap_words = 1012224 Oct 11 13:35:52 rpsrv-cas-navi xcp-rrdd: [ info||7 ||rrdd_main] GC free_words = 77123 Oct 11 13:38:10 rpsrv-cas-navi xapi: [debug||0 |bringing up management interface D:56aeb415b10f|stunnel] client cert verification 172.29.1.153:443: SNI=pool path=/etc/stun$ Oct 11 13:38:10 rpsrv-cas-navi xapi: [debug||0 |bringing up management interface D:56aeb415b10f|master_connection] stunnel: stunnel start\x0A Oct 11 13:38:10 rpsrv-cas-navi xapi: [debug||0 |bringing up management interface D:56aeb415b10f|stunnel] Started a client (pid:47395): -> 172.29.1.153:443 Oct 11 13:38:10 rpsrv-cas-navi xapi: [ info||0 |bringing up management interface D:56aeb415b10f|master_connection] stunnel disconnected fd_closed=true proc_quit=false Oct 11 13:38:10 rpsrv-cas-navi xapi: [error||0 |bringing up management interface D:56aeb415b10f|master_connection] Caught Xapi_database.Master_connection.Goto_handler Oct 11 13:38:10 rpsrv-cas-navi xapi: [debug||0 |bringing up management interface D:56aeb415b10f|master_connection] Sleeping 256.000000 seconds before retrying master conne$ Oct 11 13:38:52 rpsrv-cas-navi xcp-rrdd: [ info||7 ||rrdd_main] GC live_words = 1213031 Oct 11 13:38:52 rpsrv-cas-navi xcp-rrdd: [ info||7 ||rrdd_main] GC heap_words = 1772032 Oct 11 13:38:52 rpsrv-cas-navi xcp-rrdd: [ info||7 ||rrdd_main] GC free_words = 558790 -

@Tristis-Oris i tried to remove host from pool or change master, but can't execute even

xe host-listwith endless timeoutdone with

xe pool-emergency-transition-to-master xe pool-recover-slavesall vms at dead master counts as running, so i can't run them again.

and can't forget host

"code": "HOST_HAS_RESIDENT_VMS",upd

need to reset vms with

xe vm-reset-powerstate uuid=*** --forcethen forget host. -

@Tristis-Oris is the

xapidaemon still running?daemon.logandxensource.logaround the time you changed the master could give more insight? -

Oct 11 16:05:51 rpsrv-cas-caph xapi-nbd[16958]: main: Failed to log in via xapi's Unix domain socket: Unix.Unix_error(Unix.ECONNREFUSED, "connect", ""); retrying in 4.0000$ Oct 11 16:05:55 rpsrv-cas-caph xapi-nbd[16958]: Caught: Unix.Unix_error(Unix.ECONNREFUSED, "connect", "") Oct 11 16:05:55 rpsrv-cas-caph xapi-nbd[16958]: main: Failed to log in via xapi's Unix domain socket: Unix.Unix_error(Unix.ECONNREFUSED, "connect", ""); retrying in 4.0000$ Oct 11 16:05:59 rpsrv-cas-caph xapi-nbd[16958]: Caught: Unix.Unix_error(Unix.ECONNREFUSED, "connect", "") Oct 11 16:05:59 rpsrv-cas-caph xapi-nbd[16958]: main: Failed to log in via xapi's Unix domain socket: Unix.Unix_error(Unix.ECONNREFUSED, "connect", ""); retrying in 4.0000$ Oct 11 16:06:03 rpsrv-cas-caph xapi-nbd[16958]: Caught: Unix.Unix_error(Unix.ECONNREFUSED, "connect", "") Oct 11 16:06:03 rpsrv-cas-caph xapi-nbd[16958]: main: Failed to log in via xapi's Unix domain socket: Unix.Unix_error(Unix.ECONNREFUSED, "connect", ""); retrying in 4.0000$ Oct 11 16:06:07 rpsrv-cas-caph xapi-nbd[16958]: Caught: Unix.Unix_error(Unix.ECONNREFUSED, "connect", "") Oct 11 16:06:07 rpsrv-cas-caph xapi-nbd[16958]: main: Failed to log in via xapi's Unix domain socket: Unix.Unix_error(Unix.ECONNREFUSED, "connect", ""); retrying in 4.0000$ Oct 11 16:06:11 rpsrv-cas-caph systemd[1]: Created slice User Slice of root. Oct 11 16:06:11 rpsrv-cas-caph systemd[1]: Starting User Slice of root. Oct 11 16:06:11 rpsrv-cas-caph systemd[1]: Started Session c9 of user root. Oct 11 16:06:11 rpsrv-cas-caph systemd[1]: Starting Session c9 of user root. Oct 11 16:06:11 rpsrv-cas-caph xapi-nbd[16958]: Caught: Unix.Unix_error(Unix.ECONNREFUSED, "connect", "") Oct 11 16:06:11 rpsrv-cas-caph xapi-nbd[16958]: main: Failed to log in via xapi's Unix domain socket: Unix.Unix_error(Unix.ECONNREFUSED, "connect", ""); retrying in 4.0000$ Oct 11 16:06:15 rpsrv-cas-caph xapi-nbd[16958]: Caught: Unix.Unix_error(Unix.ECONNREFUSED, "connect", "") Oct 11 16:06:15 rpsrv-cas-caph xapi-nbd[16958]: main: Failed to log in via xapi's Unix domain socket: Unix.Unix_error(Unix.ECONNREFUSED, "connect", ""); retrying in 4.0000$ Oct 11 16:06:19 rpsrv-cas-caph xapi-nbd[16958]: Caught: Unix.Unix_error(Unix.ECONNREFUSED, "connect", "") Oct 11 16:06:19 rpsrv-cas-caph xapi-nbd[16958]: main: Failed to log in via xapi's Unix domain socket: Unix.Unix_error(Unix.ECONNREFUSED, "connect", ""); retrying in 4.0000$Oct 11 16:02:49 rpsrv-cas-caph xapi: [error||0 |server_init D:b092c49f7d0d|backtrace] 14/20 xapi Called from file ocaml/libs/xapi-stdext/lib/xapi-stdext-pervasives/pervasi$ Oct 11 16:02:49 rpsrv-cas-caph xapi: [error||0 |server_init D:b092c49f7d0d|backtrace] 15/20 xapi Called from file ocaml/libs/xapi-stdext/lib/xapi-stdext-pervasives/pervasi$ Oct 11 16:02:49 rpsrv-cas-caph xapi: [error||0 |server_init D:b092c49f7d0d|backtrace] 16/20 xapi Called from file ocaml/xapi/server_helpers.ml, line 67 Oct 11 16:02:49 rpsrv-cas-caph xapi: [error||0 |server_init D:b092c49f7d0d|backtrace] 17/20 xapi Called from file ocaml/xapi/server_helpers.ml, line 94 Oct 11 16:02:49 rpsrv-cas-caph xapi: [error||0 |server_init D:b092c49f7d0d|backtrace] 18/20 xapi Called from file ocaml/libs/xapi-stdext/lib/xapi-stdext-pervasives/pervasi$ Oct 11 16:02:49 rpsrv-cas-caph xapi: [error||0 |server_init D:b092c49f7d0d|backtrace] 19/20 xapi Called from file ocaml/libs/xapi-stdext/lib/xapi-stdext-pervasives/pervasi$ Oct 11 16:02:49 rpsrv-cas-caph xapi: [error||0 |server_init D:b092c49f7d0d|backtrace] 20/20 xapi Called from file ocaml/libs/log/debug.ml, line 250 Oct 11 16:02:49 rpsrv-cas-caph xapi: [error||0 |server_init D:b092c49f7d0d|backtrace] Oct 11 16:02:49 rpsrv-cas-caph xapi: [ warn||0 |server_init D:b092c49f7d0d|startup] task [Synchronising host configuration files] exception: Pool_role.This_host_is_broken Oct 11 16:02:49 rpsrv-cas-caph xapi: [error||0 ||backtrace] server_init D:b092c49f7d0d failed with exception Pool_role.This_host_is_broken Oct 11 16:02:49 rpsrv-cas-caph xapi: [error||0 ||backtrace] Raised Pool_role.This_host_is_broken Oct 11 16:02:49 rpsrv-cas-caph xapi: [error||0 ||backtrace] 1/15 xapi Raised at file ocaml/libs/log/debug.ml, line 267 Oct 11 16:02:49 rpsrv-cas-caph xapi: [error||0 ||backtrace] 2/15 xapi Called from file ocaml/libs/xapi-stdext/lib/xapi-stdext-pervasives/pervasiveext.ml, line 24 Oct 11 16:02:49 rpsrv-cas-caph xapi: [error||0 ||backtrace] 3/15 xapi Called from file ocaml/libs/xapi-stdext/lib/xapi-stdext-pervasives/pervasiveext.ml, line 39 Oct 11 16:02:49 rpsrv-cas-caph xapi: [error||0 ||backtrace] 4/15 xapi Called from file ocaml/xapi/server_helpers.ml, line 186 Oct 11 16:02:49 rpsrv-cas-caph xapi: [error||0 ||backtrace] 5/15 xapi Called from file ocaml/xapi/startup.ml, line 95 Oct 11 16:02:49 rpsrv-cas-caph xapi: [error||0 ||backtrace] 6/15 xapi Called from file ocaml/xapi/startup.ml, line 103 Oct 11 16:02:49 rpsrv-cas-caph xapi: [error||0 ||backtrace] 7/15 xapi Called from file list.ml, line 110 Oct 11 16:02:49 rpsrv-cas-caph xapi: [error||0 ||backtrace] 8/15 xapi Called from file ocaml/libs/xapi-stdext/lib/xapi-stdext-pervasives/pervasiveext.ml, line 24 Oct 11 16:02:49 rpsrv-cas-caph xapi: [error||0 ||backtrace] 9/15 xapi Called from file ocaml/libs/xapi-stdext/lib/xapi-stdext-pervasives/pervasiveext.ml, line 39 Oct 11 16:02:49 rpsrv-cas-caph xapi: [error||0 ||backtrace] 10/15 xapi Called from file ocaml/xapi/xapi.ml, line 1081 Oct 11 16:02:49 rpsrv-cas-caph xapi: [error||0 ||backtrace] 11/15 xapi Called from file ocaml/xapi/server_helpers.ml, line 67 Oct 11 16:02:49 rpsrv-cas-caph xapi: [error||0 ||backtrace] 12/15 xapi Called from file ocaml/xapi/server_helpers.ml, line 94 Oct 11 16:02:49 rpsrv-cas-caph xapi: [error||0 ||backtrace] 13/15 xapi Called from file ocaml/libs/xapi-stdext/lib/xapi-stdext-pervasives/pervasiveext.ml, line 24 Oct 11 16:02:49 rpsrv-cas-caph xapi: [error||0 ||backtrace] 14/15 xapi Called from file ocaml/libs/xapi-stdext/lib/xapi-stdext-pervasives/pervasiveext.ml, line 39 Oct 11 16:02:49 rpsrv-cas-caph xapi: [error||0 ||backtrace] 15/15 xapi Called from file ocaml/libs/log/debug.ml, line 250 Oct 11 16:02:49 rpsrv-cas-caph xapi: [error||0 ||backtrace] Oct 11 16:02:49 rpsrv-cas-caph xapi: [debug||0 ||xapi] xapi top-level caught exception: INTERNAL_ERROR: [ Pool_role.This_host_is_broken ] Oct 11 16:02:49 rpsrv-cas-caph xapi: [error||0 ||backtrace] Raised Pool_role.This_host_is_broken Oct 11 16:02:49 rpsrv-cas-caph xapi: [error||0 ||backtrace] 1/1 xapi Raised at file (Thread 0 has no backtrace table. Was with_backtraces called?, line 0 Oct 11 16:02:49 rpsrv-cas-caph xapi: [error||0 ||backtrace] Oct 11 16:04:12 rpsrv-cas-caph xcp-rrdd: [ info||7 ||rrdd_main] GC live_words = 743602 Oct 11 16:04:12 rpsrv-cas-caph xcp-rrdd: [ info||7 ||rrdd_main] GC heap_words = 1795584 Oct 11 16:04:12 rpsrv-cas-caph xcp-rrdd: [ info||7 ||rrdd_main] GC free_words = 1051903 -

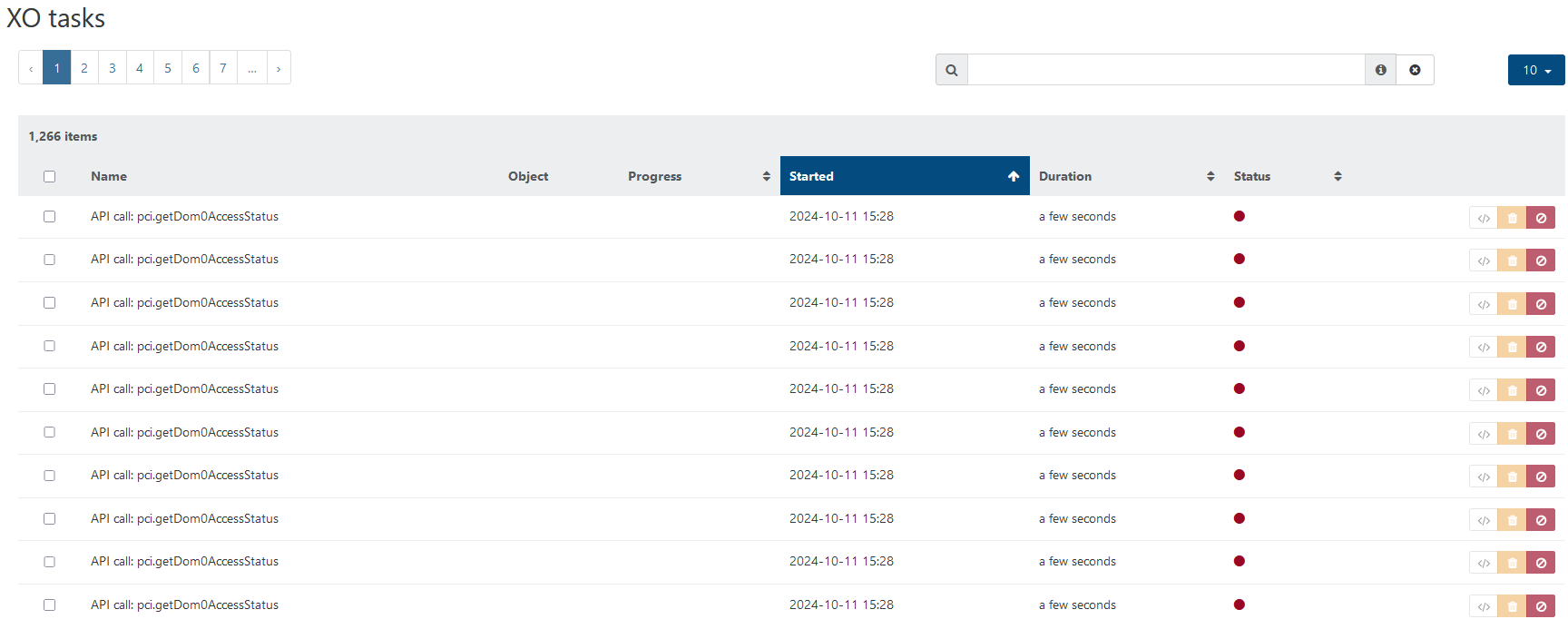

also with two 8.3 pools, i have tons of logs everywhere.

that for last 1 hour.

-

@Tristis-Oris

Pool_role.This_host_is_brokenis a bit scary, and more logs will be needed to understand how it got there. I'm trying to follow the same procedure as you to request the right lines - how exactly did you perform the master change? -

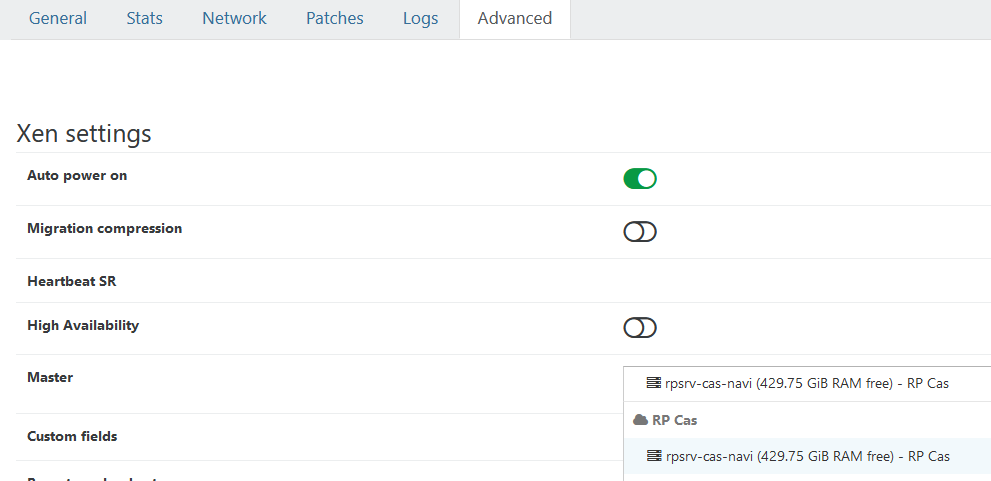

@yann pool>advanced>master.

Fresh xen installation, wait some time after joining last host, then changed master. Balancer disabled, no updates, few VMs runing on all hosts.only one thing, master change don't work at 1st attempt - just nothing happens. Looks like task been canceled , so i repeat it again after some time.

i don't think it totaly broken, maybe something happens at same time. but never got that issue before.

-

@Tristis-Oris said in XCP-ng 8.3 betas and RCs feedback

:

:then changed master

Could you please detail that part a bit more? I understand you used XO, but which screen and which action?

-

-

@Greg_E I forgot to mention a potentially important step just before the reimage from BIOS -> UEFI.

After you've drained the host disconnect it from the pool.

so:

reimage all hosts in whatever mode they're in

migrate all VMs from the target to be changed to UEFI

disconnect said target from the pool

reimage target with UEFI boot

reconnect target to the poolnomad

-

@Tristis-Oris thanks!

I realize one thing is not clear to me: is the "unreachable master" in your situation the one you switched to, or the original master? And how many hosts in total are in this pool when you launch this operation?

The logs we'll need to analyze will be the xensource.log from the first occurrence of

designate_new_masteron both old and new master, until the time of that exception. -

@yann new one.

new part1 https://pastebin.com/ij0B7KHy

new part2 https://pastebin.com/0tQyvesY

old https://pastebin.com/dvAkEEbY -

on 8.3 pool more and more VMs became attached to Control Domain. Backups fail due VDI_IN_USE.

can't unplug vdi:

xe vbd-list vm-uuid=***

xe vbd-unplug uuid=***

The server failed to handle your request, due to an internal error. The given message may give details useful for debugging the problem.

message: Expected 0 or 1 VDI with datapath, had 5can't migrate to 8.2 pool due incompatible versions.

and already got coalesce on VM without backup.

upd

removed all stuck vdi, but sr coalesce won't move.