Migrating an offline VM disk between two local SRs is slow

-

It's mostly CPU-bound (a single-disk migration isn't multithreaded). The higher your CPU frequency, the faster the migration.

-

@olivierlambert Thanks, that's good to know. I appreciate your taking the time to discuss. I don't suppose there are any settings we can fiddle with that would speed up the single-disk scenario? Or some workaround approach that might get closer to the hardware's native speed? (In one experiment, someone did something that caused the system to transfer the OS disk with the log message "Cloning VDI" rather than "Creating a blank remote VDI", and the effective throughput was higher by a factor of 20 ...)

-

Can you describe exactly which steps were taken, so we can double-check, compare, and understand why?

Edit: also, are you comparing a live migration with an offline copy? They're very different: in a live migration, the blocks have to be replicated while the VM on top is still running.

-

@olivierlambert This is all offline. Unfortunately I can't describe exactly what was done, since someone else was doing the work and they were trying a bunch of different things in a row. I suspect the apparently fast migration is a red herring (maybe a previous attempt left a copy of the disk on the destination SR, and the system noticed that and skipped the actual I/O?), but if there turns out to be a magical fast path, I wouldn't complain!

-

You can also try warm migration, which can go a lot faster.

-

Using the XOA "Disaster Recovery" backup method can be a lot faster than a normal offline migration.

The one time I tried it, it took approximately 10 minutes instead of 2 hours.

-

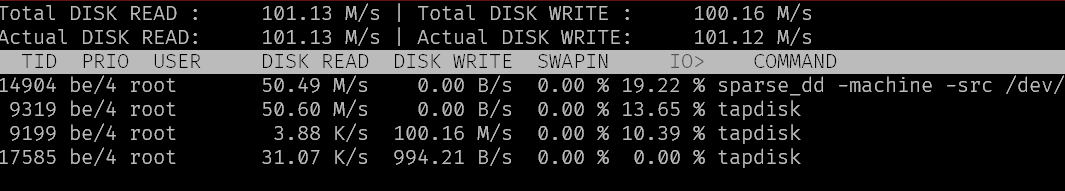

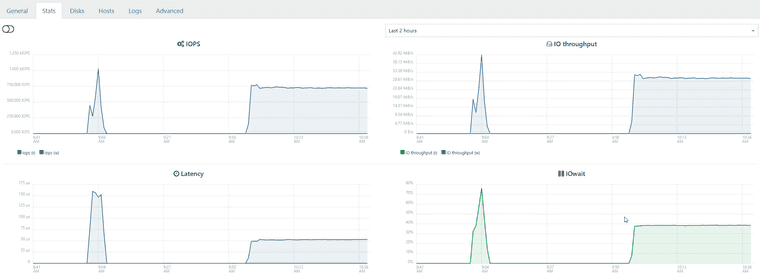

I think I'm seeing a similar issue: a copy from a RAID 1 NVMe array to a RAID 10 array of 4 × 2 TB HDDs on the same host.

A 300 GB transfer is estimated at 7 hours (11% done after 50 minutes).
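The progress figures above can be turned into an average throughput, which is easier to compare against what the arrays should be able to sustain. A quick sketch, assuming progress is roughly linear and using decimal units (1 GB = 1000 MB); `estimate` is just an illustrative helper name:

```python
def estimate(total_gb: float, fraction_done: float, elapsed_min: float):
    """Return (projected total minutes, average throughput in MB/s).

    Assumes the copy proceeds at a constant rate.
    """
    total_min = elapsed_min / fraction_done
    copied_mb = total_gb * 1000 * fraction_done  # decimal units: 1 GB = 1000 MB
    throughput_mb_s = copied_mb / (elapsed_min * 60)
    return total_min, throughput_mb_s


# 11% of a 300 GB disk copied in 50 minutes
total_min, mb_s = estimate(300, 0.11, 50)
print(f"projected total: {total_min / 60:.1f} h, throughput: {mb_s:.1f} MB/s")
# prints: projected total: 7.6 h, throughput: 11.0 MB/s
```

So the reported progress corresponds to an average of about 11 MB/s over the first 50 minutes.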

The VM is live.

According to the stats, almost nothing is happening on this server or on either of the two storage arrays.

-

@olivierlambert

Is the CPU on the sending host or the receiving host the limiting factor for single-disk migrations?

I can't really tell. My gut feeling is the sending host, but I have no numbers to confirm it.

-

@Davidj-0 In my case, CPU activity is minimal. I think something is wrong with the software RAID 10 setup. On an identical setup, a warm migration between hosts to the RAID 10 array shows horrible iowait, similar to the SR-to-SR transfer on the other host.
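One way to put numbers on both questions (is a single core saturated, and how much time goes to iowait) is to sample `/proc/stat` twice in dom0 on each host and compare. This is a minimal sketch, assuming a Linux dom0 with the standard `/proc/stat` layout; the helper names are illustrative. A single-threaded, CPU-bound copy would typically show one `cpuN` line near 100% busy while the aggregate `cpu` line stays low, whereas a storage bottleneck tends to show up as high iowait instead.

```python
import time


def parse_proc_stat(text):
    """Map each 'cpu' / 'cpuN' line of /proc/stat to its first 8 counters."""
    stats = {}
    for line in text.splitlines():
        fields = line.split()
        if fields and fields[0].startswith("cpu"):
            # Counters: user nice system idle iowait irq softirq steal.
            # guest/guest_nice are already included in user/nice, so skip them.
            stats[fields[0]] = [int(x) for x in fields[1:9]]
    return stats


def usage(before, after):
    """Return {cpu: (busy %, iowait %)} for the interval between two samples."""
    result = {}
    for cpu, old in before.items():
        deltas = [new - prev for new, prev in zip(after[cpu], old)]
        total = sum(deltas)
        if total == 0:
            continue
        idle = deltas[3] + deltas[4]  # idle + iowait both count as "not busy"
        result[cpu] = (100.0 * (total - idle) / total, 100.0 * deltas[4] / total)
    return result


def sample(interval=5.0, path="/proc/stat"):
    """Read /proc/stat, wait `interval` seconds, read it again, and compare."""
    with open(path) as f:
        before = parse_proc_stat(f.read())
    time.sleep(interval)
    with open(path) as f:
        after = parse_proc_stat(f.read())
    return usage(before, after)


# Example usage (run during a migration, on both the sending and receiving host):
# for cpu, (busy, iowait) in sorted(sample().items()):
#     print(f"{cpu}: busy {busy:.0f}%, iowait {iowait:.0f}%")
```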

-

Maybe the IO scheduler is not the right one?
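To check, the active scheduler for each block device can be read from sysfs in dom0, where the kernel lists the available schedulers and brackets the active one (for example `mq-deadline kyber [bfq] none`). A minimal sketch, assuming a Linux dom0 with the usual `/sys/block` layout; the function names are illustrative. For an md software-RAID device the file typically just reads `none`, so the member disks' schedulers are the ones worth comparing between the two setups.

```python
import glob
import os
import re


def active_scheduler(contents: str) -> str:
    """Extract the bracketed (active) scheduler from a sysfs scheduler file.

    Falls back to the raw contents when there is no bracket, e.g. "none".
    """
    match = re.search(r"\[([^\]]+)\]", contents)
    return match.group(1) if match else contents.strip()


def list_schedulers(sys_block: str = "/sys/block") -> dict:
    """Return {device: active scheduler} for every block device found."""
    result = {}
    pattern = os.path.join(sys_block, "*", "queue", "scheduler")
    for path in sorted(glob.glob(pattern)):
        device = path.split(os.sep)[-3]  # .../<device>/queue/scheduler
        with open(path) as f:
            result[device] = active_scheduler(f.read())
    return result


# Example usage in dom0:
# for dev, sched in list_schedulers().items():
#     print(dev, sched)
```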