XCP-ng 8.3 updates announcements and testing

-

Updates published: https://xcp-ng.org/blog/2025/10/23/october-2025-security-and-maintenance-update-for-xcp-ng-8-3-lts/

Thank you for the tests!

-

@gduperrey Updated 2 pools/2 servers @home, updated 3 pools/5 servers @ office with RPU.

All fine. -

Updates 3 pools. All fine except for the last host on the last pool ran into a yum mirror error and failed. Manually running yum update and rebooting the host worked. Sadly i then had to move VMs back around since the RPU failed and the process of migrating VMs back did not kick off as a result.

Error was

One of the configured repositories failed (Unknown), and yum doesn't have enough cached data to continue. At this point the only safe thing yum can do is fail. There are a few ways to work "fix" this: 1. Contact the upstream for the repository and get them to fix the problem. 2. Reconfigure the baseurl/etc. for the repository, to point to a working upstream. This is most often useful if you are using a newer distribution release than is supported by the repository (and the packages for the previous distribution release still work). 3. Run the command with the repository temporarily disabled yum --disablerepo=<repoid> ... 4. Disable the repository permanently, so yum won't use it by default. Yum will then just ignore the repository until you permanently enable it again or use --enablerepo for temporary usage: yum-config-manager --disable <repoid> or subscription-manager repos --disable=<repoid> 5. Configure the failing repository to be skipped, if it is unavailable. Note that yum will try to contact the repo. when it runs most commands, so will have to try and fail each time (and thus. yum will be be much slower). If it is a very temporary problem though, this is often a nice compromise: yum-config-manager --save --setopt=<repoid>.skip_if_unavailable=true xcp-ng-base: Check uncompressed DB failed -

Updated AMD Ryzen pool at home and update two Intel Dell r660 and r640 pools at work. No issues to report back.

-

updated my two hosts without issues.

-

IMPORTANT NOTICE!

IMPORTANT NOTICE!After publishing the updates, we discovered a very nasty bug when using the UEFI certificates that we distribute. Long story short, they're too big, and there's only limited space (57K), and combined to a preexisting bug in varstored, this will cause the VM to stop booting after Windows or any other OS attempts to append to the DBX (revocation database).

We pulled the

varstoredupdate, but those who updated can be affected.There are conditions for the issue:

- Existing VMs are not affected, unless you propagated the new certs to them

- New VMs are affected only if you never installed UEFI certs to the pool yourself (through XOA or

secureboot-certs install), or cleared them usingsecureboot-certs clearin order to use our default certificates.

If you have the affected version of

varstored(rpm -q varstoredyieldsvarstored-1.2.0-3.1.xcpng8.3) :- on every host, downgrade it with

yum downgrade varstored-1.2.0-2.3.xcpng8.3. No reboot or toolstack restart required. - if you have affected UEFI VMs, that is VMs that meet the conditions above but are not broken yet, don't install updates, turn them off, and fix them by deleting their DBX database: https://docs.xcp-ng.org/guides/guest-UEFI-Secure-Boot/#remove-certificates-from-a-vm. This has to be done when the VM is off. Your OS will add its own DBX afterwards.

- If you already have broken VMs (this warning reaching you too late), revert to a snapshot or backup. Other ways to fix them will require a patched

varstoredcurrently in the making.

-

@stormi Downgraded all hosts @home and @office. I have not done anything to running Windows Server VM's or touch anything regarding certs. So I guess I'm all good.

-

@flakpyro Thanks for letting us know. I suppose there was a mirror that was not ready yet, or had a transient issue, and unfortunately XOA's rolling pool update feature is not very resilient to that at the moment.

-

I reverted the package however i initially followed the directions provided by vates in the release blog post and ran "secureboot-certs clear" then on each VM with Secure boot enabled i clicked "Copy the pools default UEFI Certificates to the VM".

After reverting the updates and running secureboot-certs install again i went back and clicked "Copy the pools default UEFI Certificates to the VM" again thinking it would put the old certs back.

It sounds like this may not be enough and i need to remove the dbx record from each of these VMs. Am i correct or was that enough to fix these VMs?

Per the docs:

varstore-rm <vm-uuid> d719b2cb-3d3a-4596-a3bc-dad00e67656f dbx Note that the GUID may be found by using varstore-ls <vm-uuid>.When i run the command i see

Exmaple:

varstore-ls f9166a11-3c3f-33f1-505c-542ce8e1764d 8be4df61-93ca-11d2-aa0d-00e098032b8c SecureBoot 8be4df61-93ca-11d2-aa0d-00e098032b8c DeployedMode 8be4df61-93ca-11d2-aa0d-00e098032b8c AuditMode 8be4df61-93ca-11d2-aa0d-00e098032b8c SetupMode 8be4df61-93ca-11d2-aa0d-00e098032b8c SignatureSupport 8be4df61-93ca-11d2-aa0d-00e098032b8c PK 8be4df61-93ca-11d2-aa0d-00e098032b8c KEK d719b2cb-3d3a-4596-a3bc-dad00e67656f db d719b2cb-3d3a-4596-a3bc-dad00e67656f dbx 605dab50-e046-4300-abb6-3dd810dd8b23 SbatLevel fab7e9e1-39dd-4f2b-8408-e20e906cb6de HDDP e20939be-32d4-41be-a150-897f85d49829 MemoryOverwriteRequestControl bb983ccf-151d-40e1-a07b-4a17be168292 MemoryOverwriteRequestControlLock 9d1947eb-09bb-4780-a3cd-bea956e0e056 PPIBuffer 9d1947eb-09bb-4780-a3cd-bea956e0e056 Tcg2PhysicalPresenceFlagsLock eb704011-1402-11d3-8e77-00a0c969723b MTC 8be4df61-93ca-11d2-aa0d-00e098032b8c Boot0000 8be4df61-93ca-11d2-aa0d-00e098032b8c Timeout 8be4df61-93ca-11d2-aa0d-00e098032b8c Lang 8be4df61-93ca-11d2-aa0d-00e098032b8c PlatformLang 8be4df61-93ca-11d2-aa0d-00e098032b8c ConIn 8be4df61-93ca-11d2-aa0d-00e098032b8c ConOut 8be4df61-93ca-11d2-aa0d-00e098032b8c ErrOut 9d1947eb-09bb-4780-a3cd-bea956e0e056 Tcg2PhysicalPresenceFlags 8be4df61-93ca-11d2-aa0d-00e098032b8c Key0000 8be4df61-93ca-11d2-aa0d-00e098032b8c Key0001 5b446ed1-e30b-4faa-871a-3654eca36080 0050569B1890 937fe521-95ae-4d1a-8929-48bcd90ad31a 0050569B1890 9fb9a8a1-2f4a-43a6-889c-d0f7b6c47ad5 ClientId 8be4df61-93ca-11d2-aa0d-00e098032b8c Boot0003 8be4df61-93ca-11d2-aa0d-00e098032b8c Boot0004 8be4df61-93ca-11d2-aa0d-00e098032b8c Boot0005 4c19049f-4137-4dd3-9c10-8b97a83ffdfa MemoryTypeInformation 8be4df61-93ca-11d2-aa0d-00e098032b8c Boot0006 8be4df61-93ca-11d2-aa0d-00e098032b8c BootOrder 8c136d32-039a-4016-8bb4-9e985e62786f SecretKey 8be4df61-93ca-11d2-aa0d-00e098032b8c Boot0001 8be4df61-93ca-11d2-aa0d-00e098032b8c Boot0002So the command would be:

varstore-rm f9166a11-3c3f-33f1-505c-542ce8e1764d d719b2cb-3d3a-4596-a3bc-dad00e67656f dbx correct? Does "d719b2cb-3d3a-4596-a3bc-dad00e67656f " indicate the old certs have been re-installed? -

@flakpyro It's in the middle. But the "d719b2cb-3d3a-4596-a3bc-dad00e67656f dbx" part is always the same across all VMs.

After reverting the updates and running secureboot-certs install again

This will create a pool-level dbx which does not have the problem seen in varstored-1.2.0-3.1. So as long as you propagated the variables to all affected VMs without any errors, there's no need to delete them manually. But you can always delete them again if you're not sure.

-

@dinhngtu Ah okay, i was wondering if "d719b2cb-3d3a-4596-a3bc-dad00e67656f" indicated i was back on the safe certs since it is the same on all VMs since reverting and clicking "Copy the pools default UEFI Certificates to the VM"

So i need to run

varstore-rm f9166a11-3c3f-33f1-505c-542ce8e1764d d719b2cb-3d3a-4596-a3bc-dad00e67656f dbxwhile powered off to be safe?

-

Awesome thanks for the response. I took a snapshot and tried rebooting a VM and it booted back up without issue simply by clicking the propagate button on each affecting VM after reverting and running "secureboot-certs install"

-

-

@acebmxer Looks like it. You can recover by installing a preliminary test update here:

wget https://koji.xcp-ng.org/repos/user/8/8.3/xcpng-users.repo -O /etc/yum.repos.d/xcpng-users.repo yum update --enablerepo=xcp-ng-ndinh1 varstored varstored-tools secureboot-certs clearThen propagate SB certs to make sure that subsequent dbx updates will run normally.

Sorry for the inconvenience.

-

I was just getting ready to go update my pool... So is it safe now? And do these certs only affect Secure Boot VMs or UEFI VMs as well?

-

@dinhngtu Thank you for that reply.

Just to clarify I have 4 host. 2 host i updated to latest update once they became available to public via pool updates. The other 2 host I had to push the second beta release to get command secureboot-certs install to work. Then I pushed the rest of the update again once they became public.

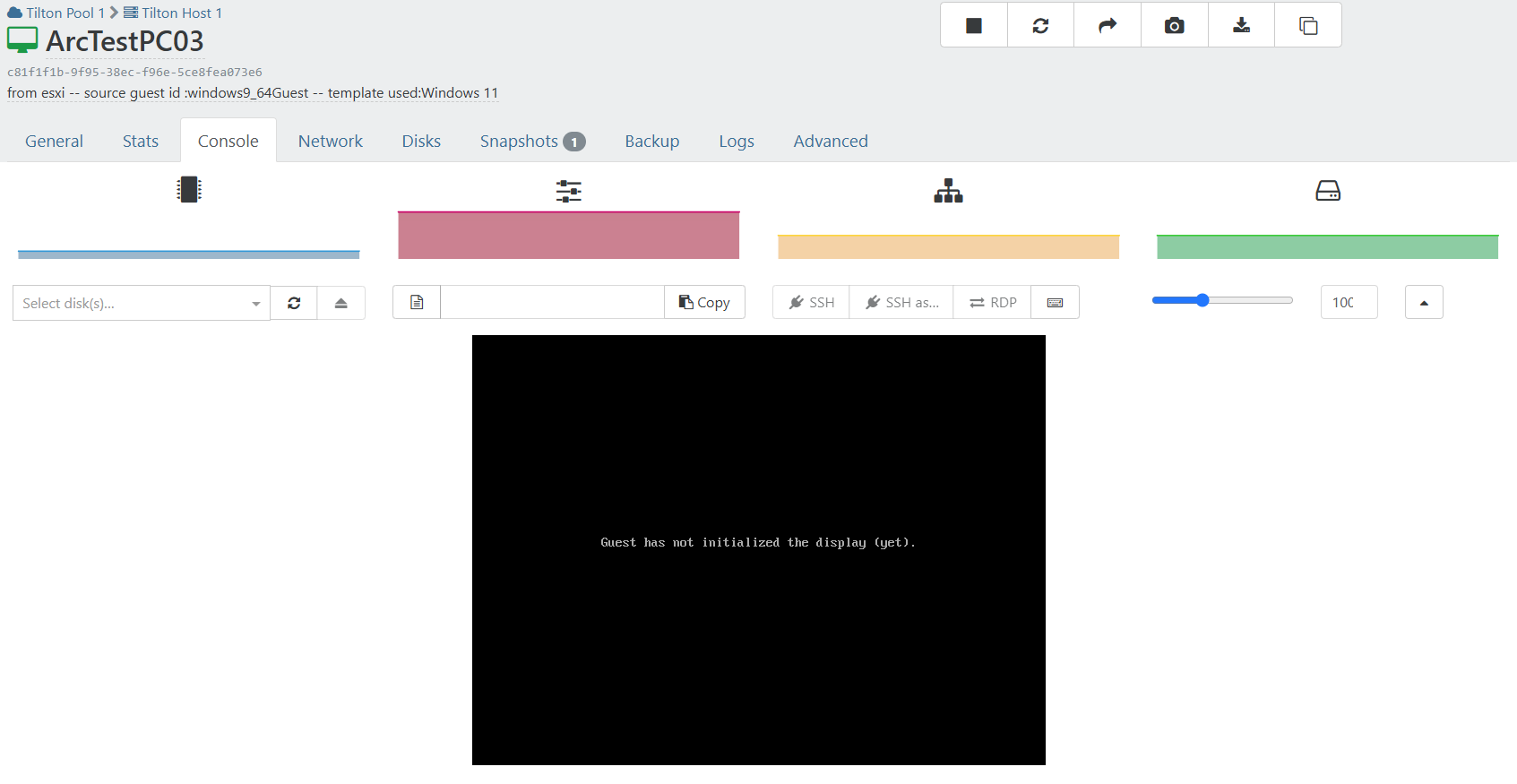

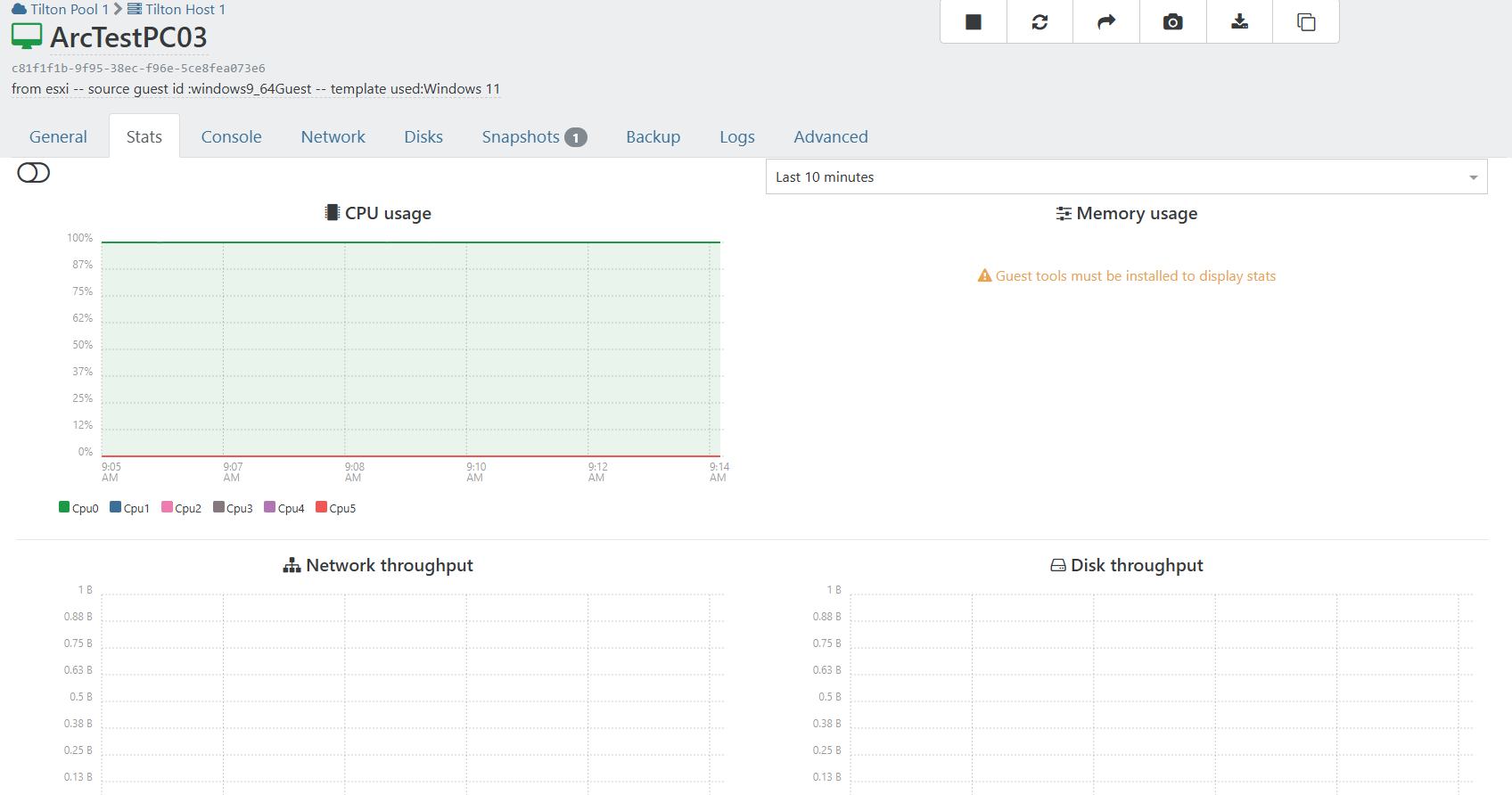

So far im only seeing 1 vm having the issue on the later 2 host the had the beta updates installed. There that test PC and 1 windows server (Printsrver), xoproxy, and xoce.

Should i run those commands on all 4 host or just the later 2 with the troubled vm?

-

@Greg_E said in XCP-ng 8.3 updates announcements and testing:

I was just getting ready to go update my pool... So is it safe now? And do these certs only affect Secure Boot VMs or UEFI VMs as well?

This problem will affect UEFI VMs. See the announcement above for more details.

In short, if you haven't updated, you should be safe; the offending update has been withdrawn and no longer available from

yum update(as long as you're not using the testing branch).@acebmxer said in XCP-ng 8.3 updates announcements and testing:

@dinhngtu Thank you for that reply.

Just to clarify I have 4 host. 2 host i updated to latest update once they became available to public via pool updates. The other 2 host I had to push the second beta release to get command secureboot-certs install to work. Then I pushed the rest of the update again once they became public.

So far im only seeing 1 vm having the issue on the later 2 host the had the beta updates installed. There that test PC and 1 windows server (Printsrver), xoproxy, and xoce.

Should i run those commands on all 4 host or just the later 2 with the troubled vm?

I haven't tested using different versions of varstored within the same pool.

-

All 4 host have the same version. They were just not updated the same way at the same time was the point i was making and that so far only 1 vm seems to be affected.

But i guess to play it self i should downgrade all 4 host.

Edit - ran the following commands on master host of pool with the vm with issue. VM now boots up.

Sorry for all the questions.

wget https://koji.xcp-ng.org/repos/user/8/8.3/xcpng-users.repo -O /etc/yum.repos.d/xcpng-users.repo yum update --enablerepo=xcp-ng-ndinh1 varstored varstored-tools secureboot-certs clearShould run that above commands on all the remaining host?

Or should i run this command on any of the four host?

yum downgrade varstored-1.2.0-2.3.xcpng8.3 -

@acebmxer You can confirm if there are other affected VMs using this command:

xe vm-list --minimal | xargs -d, -Ix sh -c "echo Scanning x 1>&2; varstore-ls x" > /dev/nullIf there are still affected VMs, you must fix them on varstored-1.2.0-3.2 by

secureboot-certs clear+ propagating before downgrading to 2.3 (if you wish).Note that downgrading to 2.3 with pool certs cleared means you'll need to set up guest Secure Boot again. So I recommend staying on 3.2 if it's possible for you.

-

@dinhngtu The output of that command seems to result in the output of "Scanningn every UUID in my pool", most of which do not use SB. Im guessing that normal and i do not have any affected VMs any longer?