Migrating an offline VM disk between two local SRs is slow

-

Hi!

I had to migrate a VM from one local SR (SSD) to another SR (4x HDD HW-RAID0 with cache) and it is very slow. It is not often I do this, but I think that in the past this migration was a lot faster.

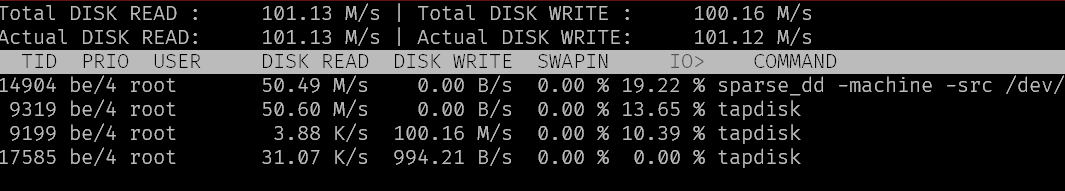

When I look in iotop I can see roughly 80-100 MiB/s.

Could the issue be that sparse_dd connects to the local host (10.12.9.2) over NBD/IP, and that this makes it slow?

/usr/libexec/xapi/sparse_dd -machine -src /dev/sm/backend/55dd0f16-4caf-xxxxx2/46e447a5-xxxx -dest http://10.12.9.2/services/SM/nbd/a761cf8a-c3aa-6431-7fee-xxxx -size 64424509440 -good-ciphersuites ECDHE-RSA-AES256-SHA384:ECDHE-RSA-AES256-GCM-SHA384:AES256-SHA256:AES128-SHA256 -prezeroed

I know I have migrated disks before on this server at multiple hundreds of MB/s, so I am curious what the difference is.
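To put the numbers in perspective, here is a small sketch that turns the -size value from the command line into an expected copy time. The 400 MiB/s figure is only an illustrative stand-in for "multiple hundreds of MB/s", and the calculation assumes the full 60 GiB is actually transferred, which a sparse copy may not do.

```python
MIB = 1024 ** 2
GIB = 1024 ** 3

def transfer_seconds(size_bytes: int, rate_mib_s: float) -> float:
    """Seconds needed to move size_bytes at a sustained rate given in MiB/s."""
    return size_bytes / (rate_mib_s * MIB)

size = 64424509440  # value passed to -size in the sparse_dd command line
print(f"disk size: {size / GIB} GiB")

# 80 and 100 are the observed iotop rates; 400 is an assumed "fast" rate.
for rate in (80, 100, 400):
    minutes = transfer_seconds(size, rate) / 60
    print(f"{rate} MiB/s -> {minutes:.1f} min")
```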

This is XOA stable channel on XCP-ng 8.2.

-

80/100MiB/s for one storage migration is already pretty decent. You might go faster by migrating more disks at once.

I'm not sure I understand what difference you are referring to. It has always been in that ballpark, per disk.

-

@Forza you're getting about 0.8 Gbps, which doesn't seem unreasonable. Are the SRs attached to 1 Gbps networking?
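The comparison with gigabit networking can be checked with a quick conversion. This is only a unit-conversion sketch; it treats the iotop figures as MiB/s (2^20 bytes) and network speed as decimal gigabits.

```python
def mib_s_to_gbit_s(rate_mib_s: float) -> float:
    """Convert MiB/s (2**20 bytes per second) to Gbit/s (10**9 bits per second)."""
    return rate_mib_s * 2**20 * 8 / 1e9

for rate in (80, 100):
    print(f"{rate} MiB/s = {mib_s_to_gbit_s(rate):.2f} Gbit/s")
# 80 MiB/s = 0.67 Gbit/s
# 100 MiB/s = 0.84 Gbit/s
```

So the observed rate does sit just under what a 1 Gbit/s link could carry, which is why the number looks like a network limit even though the disks are local.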

Separate question, why are you opting to use RAID 0, purely for the performance gain?

-

@DustinB, no, these are local disks, not networked disks. I used to get around 400-500 MB/s or so for plain migration between the two SRs.

-

@DustinB said in Migrating an offline VM disk between two local SRs is slow:

Separate question, why are you opting to use RAID 0, purely for the performance gain?

Yes, performance for bulk/temp data stuff.

-

@olivierlambert said in Migrating an offline VM disk between two local SRs is slow:

80/100MiB/s for one storage migration is already pretty decent. You might go faster by migrating more disks at once.

I'm not sure I understand what difference you are referring to. It has always been in that ballpark, per disk.

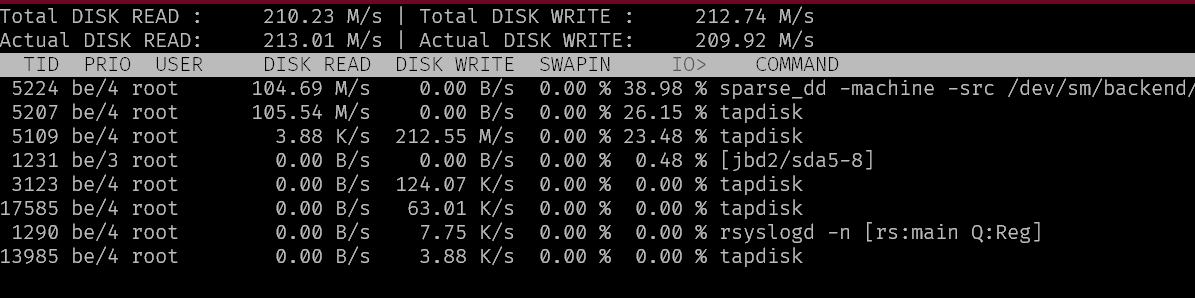

This is not over a network, only between local ext4 SRs on the same server. I tried the same migration using XCP-ng Center and at the moment it is twice as fast.

I can't really see any difference, though. It is the same sparse_dd and NBD connection.

Perhaps it's a fragmentation issue. Though, doing a copy of the same VHD file gives close to 500MB/s.
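For anyone who wants to repeat the plain-copy comparison, a minimal timing sketch follows. The function name and chunk size are my own choices, not anything from XCP-ng. The result is only indicative: reads can be served from the page cache, and the fsync at the end is there so that buffered writes are counted.

```python
import os
import shutil
import time
from pathlib import Path

def timed_copy(src: Path, dst: Path, chunk: int = 4 * 1024 * 1024) -> float:
    """Copy src to dst and return the throughput in MB/s (10**6 bytes per second)."""
    start = time.monotonic()
    with src.open("rb") as fin, dst.open("wb") as fout:
        shutil.copyfileobj(fin, fout, chunk)
        fout.flush()
        os.fsync(fout.fileno())  # wait until the data has reached the device
    elapsed = max(time.monotonic() - start, 1e-9)  # guard against a zero interval
    return src.stat().st_size / 1e6 / elapsed
```

Calling `timed_copy(Path("/path/to/source.vhd"), Path("/path/on/other/sr/copy.vhd"))` with real paths (the ones here are placeholders) gives a baseline to compare against the rate seen during migration.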

-

What generally limits the migration speed? My installation is seeing a phenomenon that might be similar — when migrating between SRs we're only getting about 20 MB/s, while everything is wired together with 10 Gbps links.

-

20 MiB/s is rather slow. Are you migrating one disk at a time?

-

@olivierlambert No, this is an array of SSDs on a PowerVault, and we have evidence that the raw throughput that we can get out of the system is much, much higher. I'm not 100% sure, but it seems that some software aspect of the migration framework is really bottlenecking things, although I've poked around and don't see anything that appears to be CPU-bound either.

-

I meant one virtual disk at a time. If you migrate more VMs/disks at once, you should see it scaling.
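As a rough illustration of running several disk transfers at once, here is a sketch that launches copies in parallel from a script. It assumes the `xe vdi-copy uuid=... sr-uuid=...` CLI form, which should be checked against your version, and note that it copies a VDI rather than migrating it, so it is not a drop-in replacement for the Xen Orchestra migration. The UUIDs are placeholders and the helper names are hypothetical.

```python
import subprocess
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List, Sequence

def copy_command(vdi_uuid: str, sr_uuid: str) -> List[str]:
    """Build the (assumed) xe command line for copying one VDI to another SR."""
    return ["xe", "vdi-copy", f"uuid={vdi_uuid}", f"sr-uuid={sr_uuid}"]

def copy_all(vdi_uuids: Sequence[str], sr_uuid: str,
             run: Callable = subprocess.run, workers: int = 2) -> list:
    """Run up to `workers` copies concurrently; results come back in input order."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(
            lambda vdi: run(copy_command(vdi, sr_uuid), check=True),
            vdi_uuids,
        ))

# Example call with placeholder UUIDs (not executed here):
# copy_all(["<vdi-uuid-1>", "<vdi-uuid-2>"], "<destination-sr-uuid>")
```

Each copy is still a single stream, so the gain comes only from having more than one stream running.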

-

@olivierlambert Ah, yes, that is true. We are migrating one extremely large VM, so there isn't much to parallelize for us, unfortunately. But it is true that someone tried something that caused the system to migrate two disks at once, and the total throughput did double.

(Specifics: the machine is 4 TiB spread across 2 big disks and 1 small OS disk, and the way that we're attempting to migrate right now it all goes serially, so the task is taking multiple days and hitting various timeouts. We think we can solve the timeouts, and we don't expect to need to do this kind of migration at all frequently, but I'd still like to understand why the single-disk throughput is so much lower than what we believe the hardware is capable of.)
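A back-of-the-envelope check of why this takes multiple days, as a sketch: it assumes the full 4 TiB is transferred at a steady 20 MB/s, and the two-stream case assumes throughput scales perfectly, as in the doubling reported above.

```python
TIB = 1024 ** 4

def days_at(size_bytes: int, rate_mb_s: float, streams: int = 1) -> float:
    """Days needed to move size_bytes at rate_mb_s (10**6 bytes/s) per stream."""
    return size_bytes / (rate_mb_s * 1e6 * streams) / 86400

print(f"serial:      {days_at(4 * TIB, 20):.1f} days")
print(f"two streams: {days_at(4 * TIB, 20, streams=2):.1f} days")
# serial:      2.5 days
# two streams: 1.3 days
```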

-

It's mostly CPU bound (a single-disk migration isn't multithreaded). The higher your CPU frequency, the faster the migration.
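One way to test the CPU-bound theory is to measure how much of a single core a given process uses while the migration runs. The sketch below reads /proc on Linux; which process to watch (sparse_dd, tapdisk, or something else) is an assumption you would need to verify on your host, and the function names are my own.

```python
import os
import time

def cpu_ticks(stat_line: str) -> int:
    """Return utime + stime (in clock ticks) from the contents of /proc/<pid>/stat."""
    # The command name is parenthesised and may contain spaces,
    # so split on the last ')' before tokenising the rest.
    fields = stat_line.rsplit(")", 1)[1].split()
    # fields[0] is the state (field 3 in proc(5)); utime and stime are fields 14 and 15.
    return int(fields[11]) + int(fields[12])

def core_share(pid: int, interval: float = 1.0) -> float:
    """Fraction of one core used by `pid` over `interval` seconds."""
    hz = os.sysconf("SC_CLK_TCK")
    with open(f"/proc/{pid}/stat") as f:
        before = cpu_ticks(f.read())
    time.sleep(interval)
    with open(f"/proc/{pid}/stat") as f:
        after = cpu_ticks(f.read())
    return (after - before) / hz / interval
```

A value close to 1.0 for one process while overall host CPU usage looks low would be consistent with a single-threaded bottleneck.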

-

@olivierlambert Thanks, that's good to know. I appreciate your taking the time to discuss. I don't suppose there are any settings we can fiddle with that would speed up the single-disk scenario? Or some workaround approach that might get closer to the hardware's native speed? (In one experiment, someone did something that caused the system to transfer the OS disk with the log message "Cloning VDI" rather than "Creating a blank remote VDI", and the effective throughput was higher by a factor of 20 ...)

-

Can you describe exactly the steps that were done so we can double check/compare and understand the why?

edit: also, are you comparing a live migration vs an offline copy? It's very different, since in live you have to replicate the blocks while the VM on top is running.

-

@olivierlambert This is all offline. Unfortunately I can't describe exactly what was done, since someone else was doing the work and they were trying a bunch of different things all in a row. I suspect that the apparently fast migration is a red herring (maybe a previous attempt left a copy of the disk on the destination SR, and the system noticed that and avoided the actual I/O?) but if there turned out to be a magical fast path, I wouldn't complain!

-

You can also try warm migration, which can go a lot faster.

-

Using XOA "Disaster Recovery" backup method can be a lot faster than normal offline migration.

One time I did it, it took approx 10 minutes instead of 2 hours...

-

I think I am seeing a similar issue: a copy from RAID 1 NVMe to RAID 10 (4x 2 TB HDD) on the same host.

A 300 GB transfer is estimated at 7 hours (11% done in 50 minutes).
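The estimate can be sanity-checked from the progress figures. This sketch assumes progress is linear and that the 300 figure means decimal gigabytes.

```python
def eta(total_gb: float, fraction_done: float, minutes_elapsed: float):
    """Return (total hours at the current pace, average rate in MB/s)."""
    total_hours = minutes_elapsed / fraction_done / 60
    rate_mb_s = total_gb * 1000 * fraction_done / (minutes_elapsed * 60)
    return total_hours, rate_mb_s

hours, rate = eta(300, 0.11, 50)
print(f"{hours:.1f} h total, {rate:.0f} MB/s")
# 7.6 h total, 11 MB/s
```

That works out to roughly 11 MB/s, which is broadly in line with the 7-hour estimate.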

The VM is live.

According to the stats, almost nothing is happening on this server or on the two SRs.

-

@olivierlambert

Is the CPU on the sending host or the receiving host the limiting factor for single-disk migrations?

-

I can't really tell. My gut feeling is the sending host, but I have no numbers to confirm it.