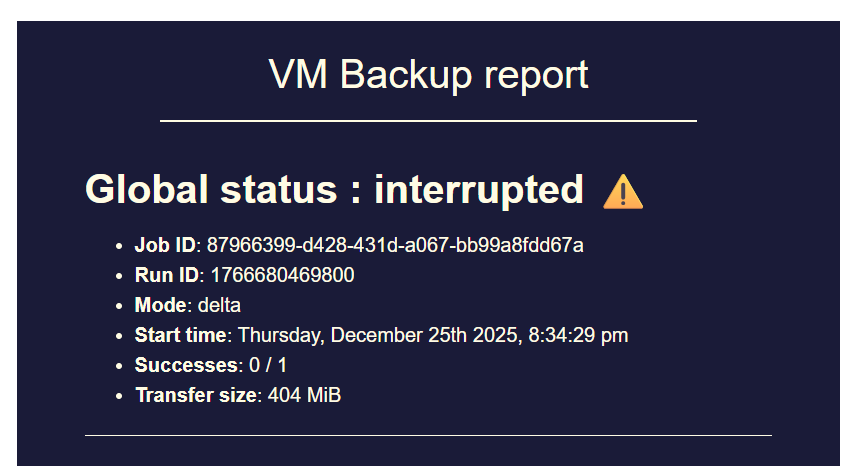

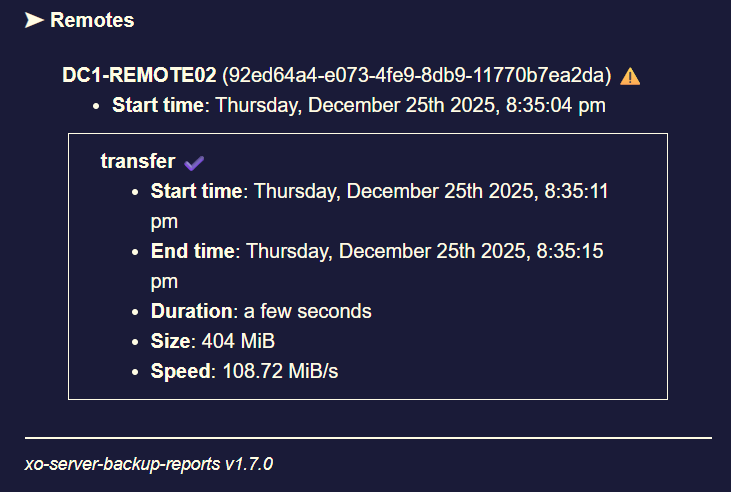

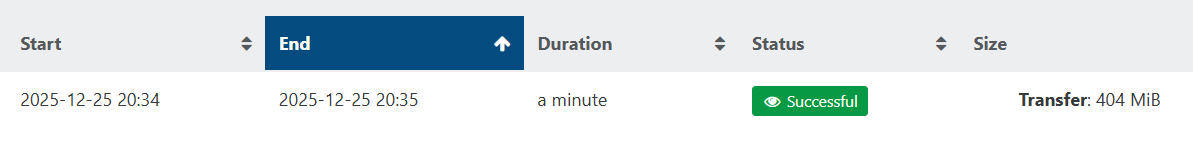

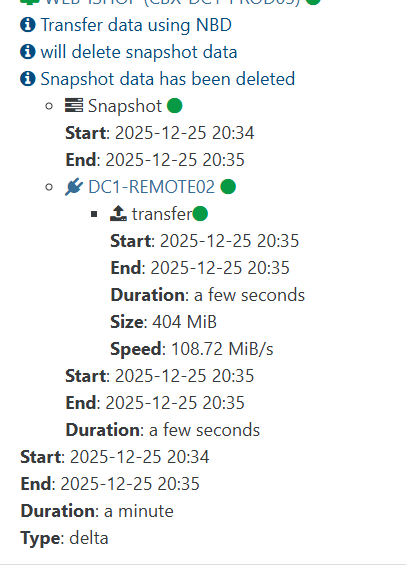

backup mail report says INTERRUPTED but it's not ?

-

@MajorP93 you say you have 8 GB of RAM on XO, but it gets OOM-killed at 5 GB of used RAM.

Did you do those additional steps in your XO config?

You can increase the memory allocated to the XOA VM (from 2GB to 4GB or 8GB). Note that simply increasing the RAM for the VM is not enough. You must also edit the service file (/etc/systemd/system/xo-server.service) to increase the memory allocated to the xo-server process itself.

You should leave ~512MB for the debian OS itself. Meaning if your VM has 4096MB total RAM, you should use 3584 for the memory value below.

    - ExecStart=/usr/local/bin/xo-server
    + ExecStart=/usr/local/bin/node --max-old-space-size=3584 /usr/local/bin/xo-server

The last step is to refresh and restart the service:

    $ systemctl daemon-reload
    $ systemctl restart xo-server

-

@Pilow Interesting!

I did not know that it is recommended to set "--max-old-space-size=" as a startup parameter for Node.js, with a value of (total system RAM - 512 MB).
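For reference, the arithmetic behind that value can be sketched in shell. This is only an illustration: `heap_limit_mb` is a made-up helper name, not something XO ships, and the 512 MB reserve is the figure from the documentation excerpt quoted earlier in the thread.

```shell
# Hypothetical helper (not part of XO): value for --max-old-space-size,
# i.e. total VM RAM in MB minus a reserve left for the OS (512 MB by default).
heap_limit_mb() {
  total_mb=$1
  reserve_mb=${2:-512}
  echo $((total_mb - reserve_mb))
}

heap_limit_mb 4096   # prints 3584, the value from the documentation example
heap_limit_mb 8192   # prints 7680 for an 8 GB VM
```

Note that this flag caps V8's old-generation heap, so memory the process allocates outside that heap is not covered by the limit.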

I added that and restarted XO and my backup job. I will test whether that gives my backup jobs more stability.

Thank you very much for taking the time and recommending the parameter.

-

Can you remind me which Node version you have that is exhibiting the problem?

-

@olivierlambert Right now I am using Node JS version 20, as I saw that XOA uses that version as well. I thought it might be best to use all dependencies at the versions that XOA uses.

I was having the issue with the backup job "interrupted" status on Node JS 24 as well, as documented in this thread.

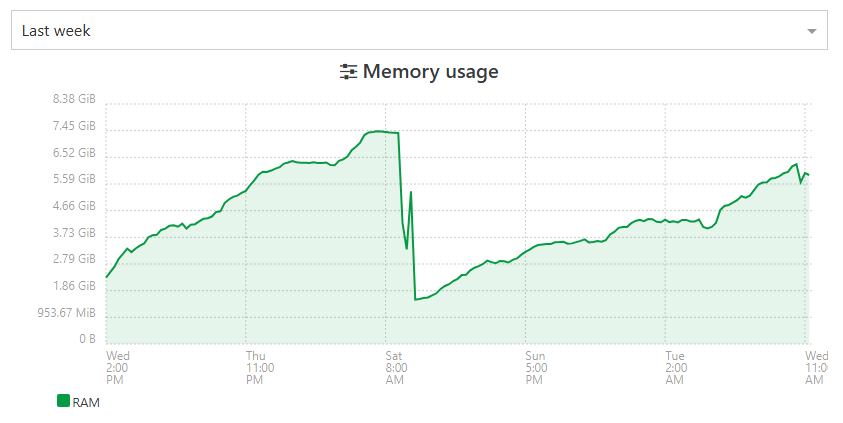

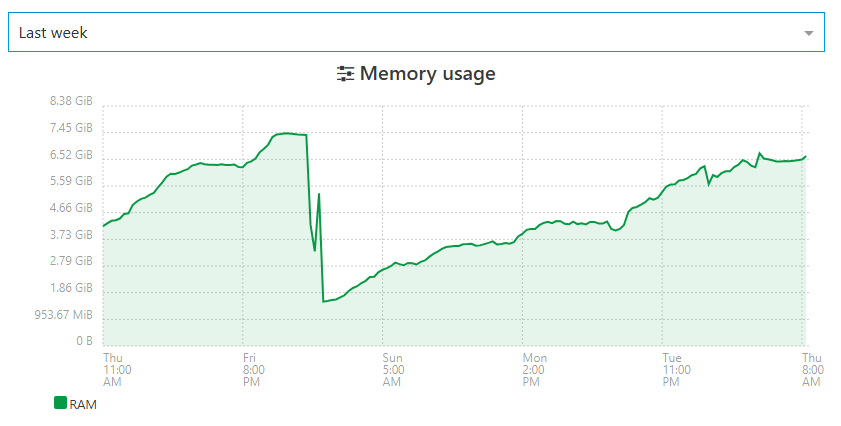

Actually, since I downgraded to Node 20, total system RAM usage seems to have decreased by a fair bit, which can be seen by comparing the 2 screenshots that I posted in this thread. In the first screenshot I was using Node 24, and in the second screenshot Node 20.

Despite that, the issue re-occurred after a few days of XO running. I hope that the --max-old-space-size Node parameter as suggested by @pilow solves my issue.

I will report back.

Best regards

-

Okay, weird. So the very different RAM usage with Node 24 should be checked when we update the XOA Node version.

-

@MajorP93 this was found in the troubleshooting section of the documentation when I tried to optimise my xoa/xoproxies deployments.

-

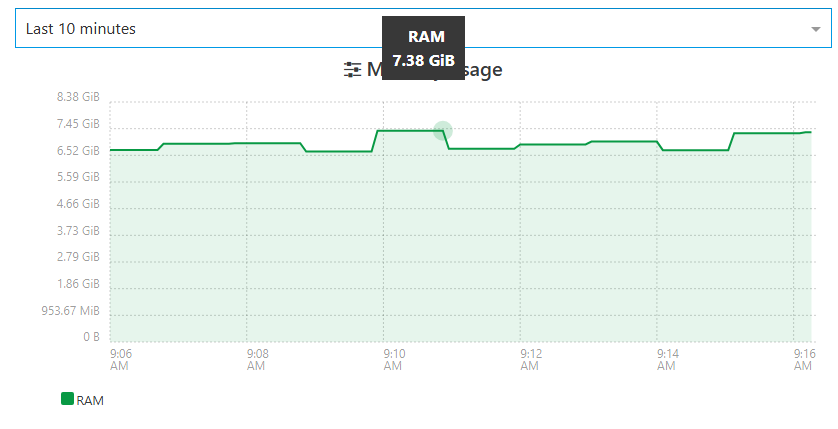

Not looking better today. Still not crashed.

But before the patches, at 48h max, I topped the 8 GB and got the process OOM-killed.

-

Before disruption, I prefer to patch/reboot my XOA.

We got to the limit.

Still some memory leak somewhere, guys!

-

@Pilow we are still working on it, but for now we haven't found a solution.

-

After implementing the --max-old-space-size Node parameter as recommended by @pilow, it took longer for the VM to hit the issue.

Still: backups went into interrupted status.

The memory leak still seems to be there.

With each subsequent backup run the memory usage rises and rises. After a backup run the memory usage does not fully go back to "normal".
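One way to put numbers on that growth is to log the resident memory of the xo-server process before and after each backup run. A rough sketch, Linux only: `rss_kb` is a hypothetical helper, and it is called on the current shell here just so the snippet runs anywhere.

```shell
# Hypothetical helper: print the resident set size (in kB) of a process,
# read from the VmRSS line of /proc/<pid>/status (Linux only).
rss_kb() {
  awk '/^VmRSS:/ {print $2}' "/proc/$1/status"
}

# Demo: sample the current shell once.
rss_kb "$$"
```

For xo-server, pass its PID instead of `$$`, for example the one that `systemctl show -p MainPID xo-server` reports, and compare the values between runs.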

After adding the Node parameter there was no heap size error on Node anymore, since the heap size got increased. Instead, the system logged various OOM errors in the kernel log (dmesg), despite not all RAM (8 GB) being used.
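For anyone who wants to check their own VM for the same thing, the kernel log can be filtered for OOM-killer messages roughly like this. It is a sketch: `oom_lines` is a made-up helper name, the pattern only covers the usual message wordings, and the demo input is two invented sample lines rather than real log output from this system.

```shell
# Hypothetical helper: keep only lines that look like kernel OOM-killer messages.
oom_lines() {
  grep -i -E 'out of memory|oom-kill|killed process'
}

# Typical use on the XO VM (may need root): dmesg -T | oom_lines
# Demo on two made-up sample lines so the snippet runs without kernel log access:
printf '%s\n' 'eth0: link up' 'Out of memory: Killed process 1234 (node)' | oom_lines
```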

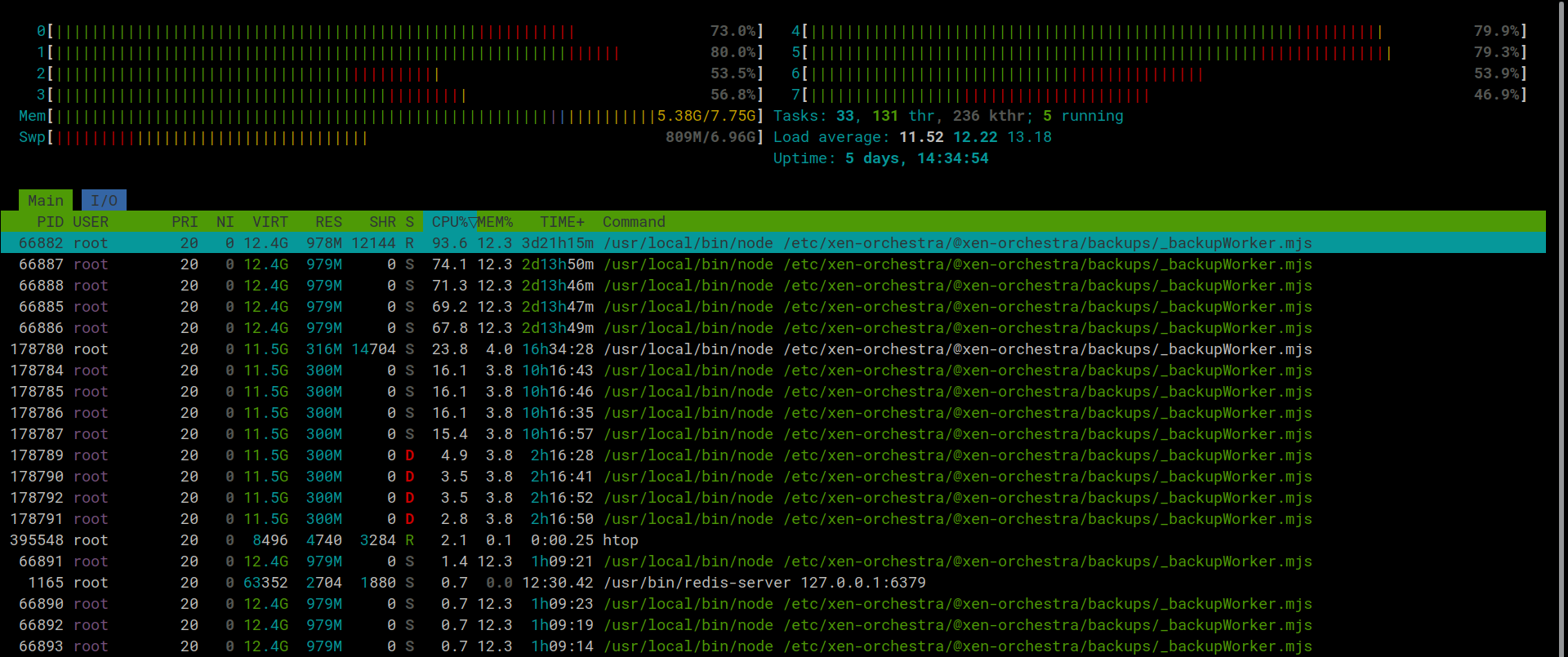

This is what htop looks like with 3 backup jobs running:

-

While working last night I noticed one of my backups/pools did this. I got the email that it was interrupted, but when I looked, the tasks were still running and moving data until it had processed all VMs in that backup job.