VDI_IO_ERROR(Device I/O errors) when you run scheduled backup

-

@olivierlambert So chances of resolving are minimal to none. Ugh. I'll keep digging around, it's proving a right pain in the @£$%

-

Well, if you spot something or able to reproduce it easily with some protocol other people could try and also reproduce, that would increase the chances a lot

-

Some more insight - for past couple of weeks I've been running a CR backup simultaneously to two SRs on different boxes. One consistently fails (remote to an identical XCP-ng box, but over the web on a 1gbps link) with the usual error, but the other succeeds (inside the firewall, 1gbps link, identical XCP-ng box), which would point to the problem not being at the source but the use of a wide area transit.

So...here is SMlog at the time of the failure on the target:

SM: [24325] vdi_detach {'sr_uuid': 'cf2dbaa3-21f3-903b-0fd1-fbe68539f897', 'subtask_of': 'DummyRef:|b73e41cc-a667-4ac0-b0f9-545dcd88b08a|VDI.detach', 'vdi_ref': 'OpaqueRef:3c20a324-2c22-4977-a63e-5baee37f9372', 'vdi_on_boot': 'persist', 'args': [], 'o_direct': False, 'vdi_location': '923acf0b-03bf-44ba-a846-f468141910f3', 'host_ref': 'OpaqueRef:d1eeb523-7938-4d80-a465-68ba2f149846', 'session_ref': 'OpaqueRef:a73dd5d5-2115-4bff-9d56-5cec26e267d8', 'device_config': {'device': '/dev/disk/by-id/scsi-3600605b010276170276bb1850660dd94-part3', 'SRmaster': 'true'}, 'command': 'vdi_detach', 'vdi_allow_caching': 'false', 'sr_ref': 'OpaqueRef:0cb18a22-646b-4dab-b08e-21ca36628f62', 'local_cache_sr': 'cf2dbaa3-21f3-903b-0fd1-fbe68539f897', 'vdi_uuid': '923acf0b-03bf-44ba-a846-f468141910f3'} SM: [24325] lock: opening lock file /var/lock/sm/923acf0b-03bf-44ba-a846-f468141910f3/vdi SM: [24325] lock: released /var/lock/sm/cf2dbaa3-21f3-903b-0fd1-fbe68539f897/sr SM: [24380] lock: opening lock file /var/lock/sm/cf2dbaa3-21f3-903b-0fd1-fbe68539f897/sr SM: [24380] lock: acquired /var/lock/sm/cf2dbaa3-21f3-903b-0fd1-fbe68539f897/sr SM: [24380] ['/usr/sbin/td-util', 'query', 'vhd', '-vpfb', '/var/run/sr-mount/cf2dbaa3-21f3-903b-0fd1-fbe68539f897/923acf0b-03bf-44ba-a846-f468141910f3.vhd'] SM: [24380] pread SUCCESS SM: [24380] vdi_delete {'sr_uuid': 'cf2dbaa3-21f3-903b-0fd1-fbe68539f897', 'subtask_of': 'DummyRef:|6236329b-d253-4da6-bc9b-d5498fd02069|VDI.destroy', 'vdi_ref': 'OpaqueRef:3c20a324-2c22-4977-a63e-5baee37f9372', 'vdi_on_boot': 'persist', 'args': [], 'o_direct': False, 'vdi_location': '923acf0b-03bf-44ba-a846-f468141910f3', 'host_ref': 'OpaqueRef:d1eeb523-7938-4d80-a465-68ba2f149846', 'session_ref': 'OpaqueRef:b8fbaaeb-221f-4956-9088-f8565ba34d0a', 'device_config': {'device': '/dev/disk/by-id/scsi-3600605b010276170276bb1850660dd94-part3', 'SRmaster': 'true'}, 'command': 'vdi_delete', 'vdi_allow_caching': 'false', 'sr_ref': 'OpaqueRef:0cb18a22-646b-4dab-b08e-21ca36628f62', 'local_cache_sr': 'cf2dbaa3-21f3-903b-0fd1-fbe68539f897', 'vdi_uuid': '923acf0b-03bf-44ba-a846-f468141910f3'} SM: [24380] lock: unlinking lock file /var/lock/sm/923acf0b-03bf-44ba-a846-f468141910f3/vdi SM: [24380] lock: removing lock dir /var/lock/sm/923acf0b-03bf-44ba-a846-f468141910f3 SM: [24380] lock: opening lock file /var/lock/sm/cf2dbaa3-21f3-903b-0fd1-fbe68539f897/running SM: [24380] lock: tried lock /var/lock/sm/cf2dbaa3-21f3-903b-0fd1-fbe68539f897/running, acquired: True (exists: True) SM: [24380] lock: released /var/lock/sm/cf2dbaa3-21f3-903b-0fd1-fbe68539f897/running SM: [24380] Kicking GC SMGC: [24380] === SR cf2dbaa3-21f3-903b-0fd1-fbe68539f897: gc === SMGC: [24405] Will finish as PID [24406] SMGC: [24380] New PID [24405] SM: [24406] lock: opening lock file /var/lock/sm/cf2dbaa3-21f3-903b-0fd1-fbe68539f897/running SM: [24406] lock: opening lock file /var/lock/sm/cf2dbaa3-21f3-903b-0fd1-fbe68539f897/gc_active SM: [24406] lock: opening lock file /var/lock/sm/cf2dbaa3-21f3-903b-0fd1-fbe68539f897/sr SM: [24380] lock: released /var/lock/sm/cf2dbaa3-21f3-903b-0fd1-fbe68539f897/sr SMGC: [24406] Found 0 cache files SM: [24406] lock: tried lock /var/lock/sm/cf2dbaa3-21f3-903b-0fd1-fbe68539f897/gc_active, acquired: True (exists: True) SM: [24406] lock: tried lock /var/lock/sm/cf2dbaa3-21f3-903b-0fd1-fbe68539f897/sr, acquired: True (exists: True) SM: [24406] ['/usr/bin/vhd-util', 'scan', '-f', '-m', '/var/run/sr-mount/cf2dbaa3-21f3-903b-0fd1-fbe68539f897/*.vhd'] SM: [24406] pread SUCCESS SMGC: [24406] SR cf2d ('Xen12 SATA') (18 VDIs in 16 VHD trees): SMGC: [24406] b1392757(5.000G/1.589G) SMGC: [24406] *d1789c22(150.000G/87.188G) SMGC: [24406] 71e7c935(150.000G/313.500K) SMGC: [24406] aae21e12(150.000G/1.218G) SMGC: [24406] 7b458e2e(10.000G/3.106G) SMGC: [24406] 0fd9483c(25.000G/15.661G) SMGC: [24406] ae6739bf(10.000G/4.730G) SMGC: [24406] d25b8134(20.000G/3.759G) SMGC: [24406] 265ed8d8(150.000G/16.609G) SMGC: [24406] 32474df8(100.000G/98.434G) SMGC: [24406] 5ae3d2e6(50.000G/46.990G) SMGC: [24406] 7fc5f335(250.000G/16.885G) SMGC: [24406] ba7c92a3(25.000G/15.661G) SMGC: [24406] d6de6348(20.000G/9.978G) SMGC: [24406] 24c6b7e8(20.000G/9.978G) SMGC: [24406] ab433fff(50.000G/49.595G) SMGC: [24406] 6750bf45(10.000G/3.704G) SMGC: [24406] f9e3cd7e(10.000G/5.949G) SMGC: [24406] SM: [24406] lock: released /var/lock/sm/cf2dbaa3-21f3-903b-0fd1-fbe68539f897/sr SMGC: [24406] Got sm-config for *d1789c22(150.000G/87.188G): {'vhd-blocks': 'eJzt1kESgiAUBmC5QTfgqB2Fq9QJOsKzCzTjqlooFakjkwjiQ5jm/xculHkf0MPUesil0zeNIAiCzOaa0T7rrhKBY4ld76pP3H7LLjr8Z2LI6Yv67cvEDLl9s/7UvjP9+nPx3v4L7PnoNk2x/7TKF13Z++8+mhzvhr7/FEOpSN+//rkQmy+ifK4M65fZfPEoYf35fHEqYv1NZj8wxOQOdYrZ/3/xxz5SrH7s5+Hd65vfX64qqszVPaVvNQopFbv/x9m7/ZSUDp6A5TcU+xZQv7fkdEZh/ups/wLZ//zZW5z//z+lT/4hpbz/1HCHFka7joc0V7sbl+qY58ryJ1FzVmCry7BhY3z9b8+iPVSsEb2/uU5mPzbw4cOHDx8+fPjw4cOHD38vv05U+QVg667e'} SMGC: [24406] No work, exiting SMGC: [24406] GC process exiting, no work left SM: [24406] lock: released /var/lock/sm/cf2dbaa3-21f3-903b-0fd1-fbe68539f897/gc_active SMGC: [24406] In cleanup SMGC: [24406] SR cf2d ('Xen12 SATA') (18 VDIs in 16 VHD trees): no changesThe only thing I can see in there is the garbage collection.

Given that the issue seems to be something to do with the wide area network (same speed as inside the firewall, but obviously more hops), I wonder if this may be a similar problem as we're seeing in the S3 backups over the WAN? Could it be that some packets are being delayed in transit, or that there is an internal time limit on a backup chunk completing? Would also explain why backing up smaller VMs has a higher chance of success than the larger ones, since the % chance of a temporary timeout increases the longer a backup runs?

I've kept the set up as (finally) I now have 100% repeatability on the CR backup to the remote SR failure, so if someone wants to come in and have a look around it can be arranged. Others seem to have the same problem so hopefully this can help get to the root cause, as this the first time I've managed to get it to consistently fail every time. Appreciate that this doesn't sound like a success, but it beats an intermittent failure!

-

Out of curiosity, can you do a:

dmesg | grep -i cut -C10(on your master host) -

@olivierlambert Target or source?

-

Try both.

-

Source:

# dmesg | grep -i cut -C10 [ 0.000000] Xen: [mem 0x0000000079a66000-0x0000000079f6efff] ACPI NVS [ 0.000000] Xen: [mem 0x0000000079f6f000-0x000000008fffffff] reserved [ 0.000000] Xen: [mem 0x00000000c7ffc000-0x00000000c7ffcfff] reserved [ 0.000000] Xen: [mem 0x00000000fbffc000-0x00000000fbffcfff] reserved [ 0.000000] Xen: [mem 0x00000000fec00000-0x00000000fec01fff] reserved [ 0.000000] Xen: [mem 0x00000000fec40000-0x00000000fec40fff] reserved [ 0.000000] Xen: [mem 0x00000000fed1c000-0x00000000fed44fff] reserved [ 0.000000] Xen: [mem 0x00000000fee00000-0x00000000feefffff] reserved [ 0.000000] Xen: [mem 0x00000000ff000000-0x00000000ffffffff] reserved [ 0.000000] Xen: [mem 0x0000000100000000-0x0000000270f0efff] usable [ 0.000000] NX (Execute Disable) protection: active [ 0.000000] SMBIOS 3.0 present. [ 0.000000] DMI: Supermicro X10DRi/X10DRi, BIOS 2.1 09/13/2016 [ 0.000000] Hypervisor detected: Xen PV [ 0.047663] tsc: Fast TSC calibration using PIT [ 0.047664] tsc: Detected 2399.968 MHz processor [ 0.047665] tsc: Detected 2400.010 MHz TSC [ 0.049570] e820: update [mem 0x00000000-0x00000fff] usable ==> reserved [ 0.049572] e820: remove [mem 0x000a0000-0x000fffff] usable [ 0.049578] last_pfn = 0x270f0f max_arch_pfn = 0x400000000 [ 0.049579] DisabledTarget:

dmesg | grep -i cut -C10 [ 0.000000] Xen: [mem 0x00000000fec10000-0x00000000fec10fff] reserved [ 0.000000] Xen: [mem 0x00000000fec18000-0x00000000fec18fff] reserved [ 0.000000] Xen: [mem 0x00000000fec20000-0x00000000fec20fff] reserved [ 0.000000] Xen: [mem 0x00000000fec28000-0x00000000fec28fff] reserved [ 0.000000] Xen: [mem 0x00000000fec30000-0x00000000fec30fff] reserved [ 0.000000] Xen: [mem 0x00000000fec38000-0x00000000fec38fff] reserved [ 0.000000] Xen: [mem 0x00000000fed20000-0x00000000fed44fff] reserved [ 0.000000] Xen: [mem 0x00000000fee00000-0x00000000feefffff] reserved [ 0.000000] Xen: [mem 0x00000000ff000000-0x00000000ffffffff] reserved [ 0.000000] Xen: [mem 0x0000000100000000-0x00000004a52a5fff] usable [ 0.000000] NX (Execute Disable) protection: active [ 0.000000] efi: EFI v2.50 by American Megatrends [ 0.000000] efi: SMBIOS=0x6f0bc000 SMBIOS 3.0=0x6f0bb000 ACPI 2.0=0x6ca22000 ACPI=0x6ca22000 ESRT=0x64ba8f18 [ 0.000000] SMBIOS 3.1.1 present. [ 0.000000] DMI: Supermicro SYS-6029P-TR/X11DPi-N, BIOS 2.0b 02/28/2018 [ 0.000000] Hypervisor detected: Xen PV [ 0.000480] tsc: Detected 2200.030 MHz processor [ 0.002610] e820: update [mem 0x00000000-0x00000fff] usable ==> reserved [ 0.002613] e820: remove [mem 0x000a0000-0x000fffff] usable [ 0.002624] last_pfn = 0x4a52a6 max_arch_pfn = 0x400000000 [ 0.002625] Disabled -

Hmm nothing obvious here…

-

This got my attention:

Jan 15 19:17:40 xcp-ng-xen12-lon2 xapi: [error||623653 INET :::80||import] Caught exception in import handler: VDI_IO_ERROR: [ Device I/O errors ] Jan 15 19:17:40 xcp-ng-xen12-lon2 xapi: [error||623653 INET :::80||backtrace] VDI.import D:378e6880299b failed with exception Unix.Unix_error(Unix.EPIPE, "single_write", "") Jan 15 19:17:40 xcp-ng-xen12-lon2 xapi: [error||623653 INET :::80||backtrace] Raised Unix.Unix_error(Unix.EPIPE, "single_write", "")This Unix.EPIPE error on the remote target means that the pipe stream is being closed before VDI.Import receives all the data. The outcome is a VDI I/O error due to a broken, partial sent/received VDI.

Since a remote-over-the-internet link can be more prone to latency/intermittency issues, it might be needed to adjust the remote NFS soft timeout/retries or mounting the target with hard option.

I would also check if the remote target is running out-of-space during the backup process.

-

@tuxen Target has plenty of space, over 5Tb free or about 20x the cumulative VM sizes. No NFS involved, it’s a locally mounted ext4 raid 1 array on the target box.

If same backup takes place behind the firewall it runs successfully 95% of the time, across the WAN it fails 95% of the time. Both over a 1gbps link.

Sometimes the failures clean themselves up, sometimes end up with a VM/disk marked [importing.....<backup name><VM name>] that need to be manually removed.

Any help hugely appreciated.

-

Interesting. It's like the data stream is interrupted somehow for a bit and that's enough to trigger the issue.

-

i have similar issue like this too. I'm using ext4 and it was perfectly fine when i'm using 7.6. After upgrading to ext4 and 8.2 fresh install. The CR dont work anymore.

-

I just tried installing Xen Orchestra from the sources on Debian 11. The same CR works well.

My previous Xen Orchestra from the sources ubuntu 18 having issue with VDI ERROR. Will do more testing.

-

Note: XOA is only the version distributed by Vates. Everything else is "Xen Orchestra from the sources"

-

@olivierlambert Thanks Corrected. I'm so happy for XCP-NG. I'm one of the early backers in Kickstarter. Hang the shirt up in our office.

-

Good Morning guys,

it looks like I'm having the same issue on a host that I'm doing replications. On the normal Delta Backups I'm not getting this error. But I'm getting it on the schedule Replication for only 1 of the vm's. Here is what comes on the log for that VM.

"id": "1679486747187", "message": "transfer", "start": 1679486747187, "status": "failure", "end": 1679487904889, "result": { "code": "VDI_IO_ERROR", "params": [ "Device I/O errors" ], "url": "https://192.168.2.11/import_raw_vdi/?format=vhd&vdi=OpaqueRef%3Aa5f60c35-64c2-497e-ae15-77aa63d14274&session_id=OpaqueRef%3A1593dbd0-4347-4dee-ad02-58ed84f6dbf6&task_id=OpaqueRef%3Aced050e9-1f49-4bbe-8b74-ef787d536d62", "task": { "uuid": "a7e3ba73-9d02-c211-48be-6a246828211c", "name_label": "[XO] Importing content into VDI HEALmycroft 0", "name_description": "", "allowed_operations": [], "current_operations": {}, "created": "20230322T12:05:52Z", "finished": "20230322T12:25:03Z", "status": "failure", "resident_on": "OpaqueRef:259273e3-6fe1-4f9a-b688-ab0847cb81f8", "progress": 1, "type": "<none/>", "result": "", "error_info": [ "VDI_IO_ERROR", "Device I/O errors" ], "other_config": {}, "subtask_of": "OpaqueRef:NULL", "subtasks": [], "backtrace": "(((process xapi)(filename ocaml/xapi/vhd_tool_wrapper.ml)(line 77))((process xapi)(filename lib/xapi-stdext-pervasives/pervasiveext.ml)(line 24))((process xapi)(filename lib/xapi-stdext-pervasives/pervasiveext.ml)(line 35))((process xapi)(filename lib/xapi-stdext-pervasives/pervasiveext.ml)(line 24))((process xapi)(filename lib/xapi-stdext-pervasives/pervasiveext.ml)(line 35))((process xapi)(filename ocaml/xapi/import_raw_vdi.ml)(line 170)))" -

Device I/O error isn't a good sign. Check

dmesgand the disk health. -

@olivierlambert sorry to ask, but where should I do this at?

-

@rauly94 Hi !

You can type "dmesg" directly in your host.

You can check your disk's health with the command "smartctl", directly on the host too -

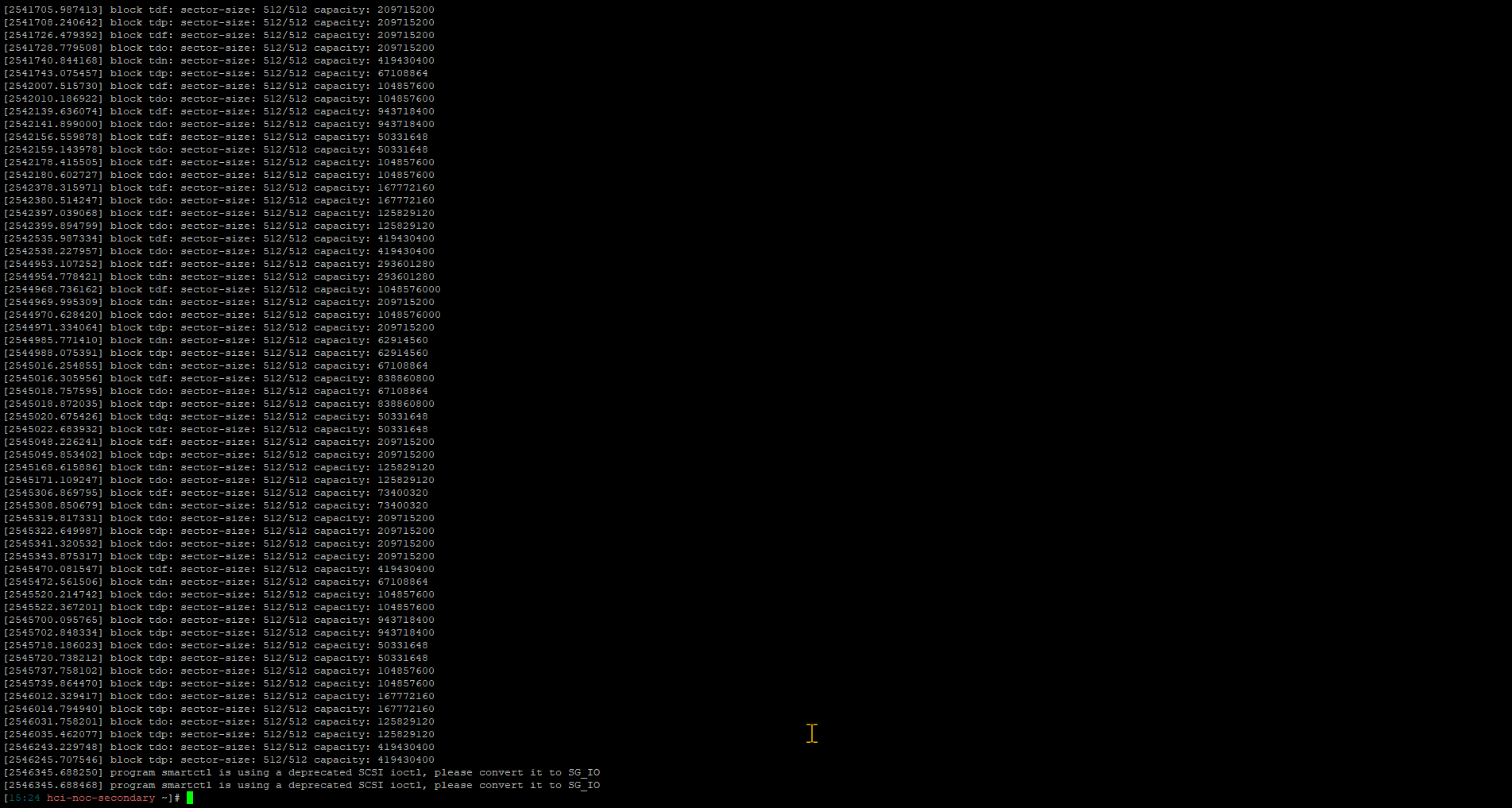

@olivierlambert said in VDI_IO_ERROR(Device I/O errors) when you run scheduled backup:

dmesg

that's what i got. I'm I doing it correctly.

The issue is happening on 1 VM. i tried doing a copy of the same vm and same error. just FYI