XO fails to back up 1 specific VM due to: Error: HTTP connection has timed out

-

Hi Everyone,

Problem: XO fails to back up a specific VM

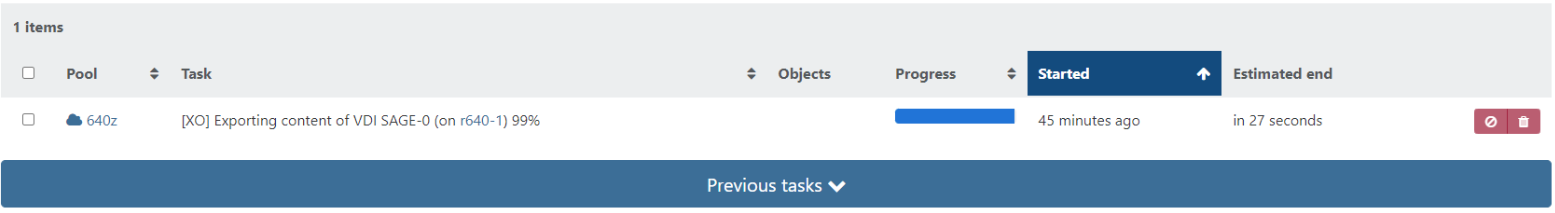

xo-server: 5.87.0

I've been trying to back up a specific VM using delta backups, and I get HTTP timeouts only when the backup is about to finish. The backup page shows the job as failed, but the backup task is still running:

Here's a little info about my setup (the things I've tried to fix/isolate the issue are listed after):

VM setup:

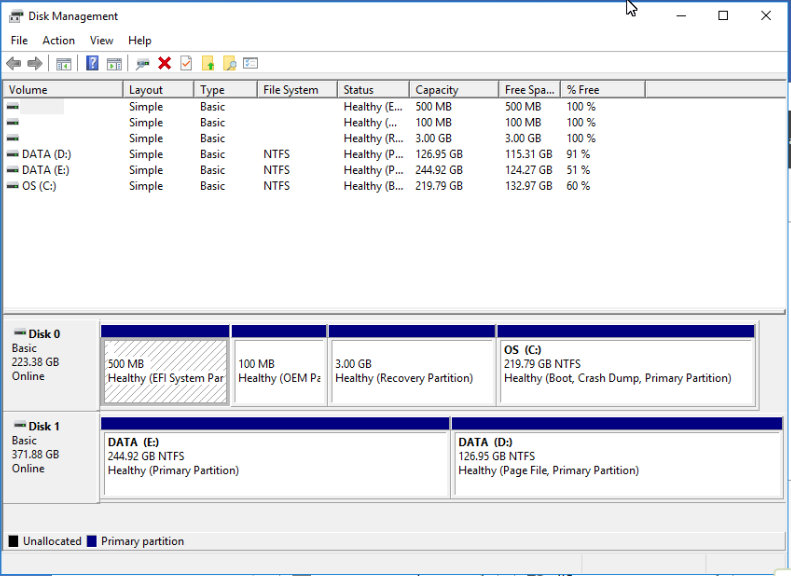

- OS: Windows Server 2016 Standard (guest tools installed)

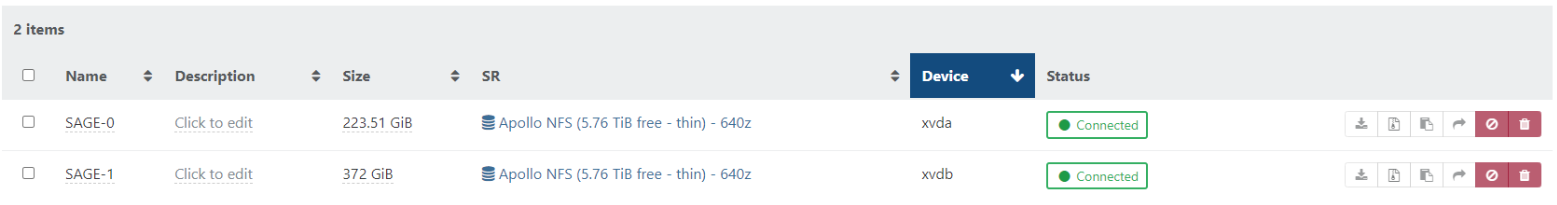

- Disks: 2

XCP-NG Hosts:

- Version: 8.2.1

- 2 Hosts (2x Dell R640 with Xeon Silver 4108 & 64GB RAM)

- 1 Resource Pool

- Shared NFS SSD Storage connected to hosts at 10G on separate LAN

Things tried:

- I've tried backing up to a remote over 1G NFS & SMB

- I've created a separate backup task for this specific VM

- I've set a timeout of 72 hours

- I've tried changing management interfaces from 1G network to a separate 10G network

- I've tried backing up to another remote over 10G NFS & SMB

- I've updated XO (built from sources) and the OS, since updating seemed to resolve the problem in this thread by @lawrencesystems, but it didn't resolve my issue

- I've tried the quick deploy XO VM

In every case above, two other VMs back up successfully while the specific VM in question fails to back up.

Here is the log:

{ "data": { "mode": "delta", "reportWhen": "failure" }, "id": "1646328525726", "jobId": "933a38ad-48d8-48ed-bbec-e037639c9d13", "jobName": "Sage", "message": "backup", "scheduleId": "c7da8664-c4a3-41f3-82a6-c065da75b3db", "start": 1646328525726, "status": "failure", "infos": [ { "data": { "vms": [ "13e70ae1-3223-7b94-dc59-c31f7416af82" ] }, "message": "vms" } ], "tasks": [ { "data": { "type": "VM", "id": "13e70ae1-3223-7b94-dc59-c31f7416af82" }, "id": "1646328526912", "message": "backup VM", "start": 1646328526912, "status": "failure", "tasks": [ { "id": "1646328527437", "message": "snapshot", "start": 1646328527437, "status": "success", "end": 1646328533606, "result": "0dd66066-38bd-247b-88fc-613c99c7fe91" }, { "data": { "id": "14021778-5160-457b-b949-a38c6e84ccc8", "isFull": true, "type": "remote" }, "id": "1646328533607", "message": "export", "start": 1646328533607, "status": "failure", "tasks": [ { "id": "1646328533647", "message": "transfer", "start": 1646328533647, "status": "failure", "end": 1646331954922, "result": { "canceled": false, "method": "GET", "url": "https://10.131.200.3/export_raw_vdi/?format=vhd&vdi=OpaqueRef%3A0ccbbba4-1449-4184-be31-409b9266ba62&session_id=OpaqueRef%3A1f4f5498-b540-40b9-8ada-809a5937b90c&task_id=OpaqueRef%3Ab4256fe3-de2f-498e-9539-aed9a2f56159", "timeout": true, "message": "HTTP connection has timed out", "name": "Error", "stack": "Error: HTTP connection has timed out\n at IncomingMessage.emitAbortedError (/usr/local/lib/node_modules/xo-server/node_modules/xen-api/node_modules/http-request-plus/index.js:83:19)\n at Object.onceWrapper (node:events:509:28)\n at IncomingMessage.emit (node:events:390:28)\n at IncomingMessage.patchedEmit (/usr/local/lib/node_modules/xo-server/node_modules/@xen-orchestra/log/configure.js:118:17)\n at IncomingMessage.emit (node:domain:475:12)\n at IncomingMessage._destroy (node:_http_incoming:179:10)\n at _destroy (node:internal/streams/destroy:102:25)\n at IncomingMessage.destroy 
(node:internal/streams/destroy:64:5)\n at TLSSocket.socketCloseListener (node:_http_client:407:11)\n at TLSSocket.emit (node:events:402:35)" } } ], "end": 1646331954922, "result": { "canceled": false, "method": "GET", "url": "https://10.131.200.3/export_raw_vdi/?format=vhd&vdi=OpaqueRef%3A0ccbbba4-1449-4184-be31-409b9266ba62&session_id=OpaqueRef%3A1f4f5498-b540-40b9-8ada-809a5937b90c&task_id=OpaqueRef%3Ab4256fe3-de2f-498e-9539-aed9a2f56159", "timeout": true, "message": "HTTP connection has timed out", "name": "Error", "stack": "Error: HTTP connection has timed out\n at IncomingMessage.emitAbortedError (/usr/local/lib/node_modules/xo-server/node_modules/xen-api/node_modules/http-request-plus/index.js:83:19)\n at Object.onceWrapper (node:events:509:28)\n at IncomingMessage.emit (node:events:390:28)\n at IncomingMessage.patchedEmit (/usr/local/lib/node_modules/xo-server/node_modules/@xen-orchestra/log/configure.js:118:17)\n at IncomingMessage.emit (node:domain:475:12)\n at IncomingMessage._destroy (node:_http_incoming:179:10)\n at _destroy (node:internal/streams/destroy:102:25)\n at IncomingMessage.destroy (node:internal/streams/destroy:64:5)\n at TLSSocket.socketCloseListener (node:_http_client:407:11)\n at TLSSocket.emit (node:events:402:35)" } } ], "end": 1646332004582, "result": { "canceled": false, "method": "GET", "url": "https://10.131.200.3/export_raw_vdi/?format=vhd&vdi=OpaqueRef%3A0ccbbba4-1449-4184-be31-409b9266ba62&session_id=OpaqueRef%3A1f4f5498-b540-40b9-8ada-809a5937b90c&task_id=OpaqueRef%3Ab4256fe3-de2f-498e-9539-aed9a2f56159", "timeout": true, "message": "HTTP connection has timed out", "name": "Error", "stack": "Error: HTTP connection has timed out\n at IncomingMessage.emitAbortedError (/usr/local/lib/node_modules/xo-server/node_modules/xen-api/node_modules/http-request-plus/index.js:83:19)\n at Object.onceWrapper (node:events:509:28)\n at IncomingMessage.emit (node:events:390:28)\n at IncomingMessage.patchedEmit 
(/usr/local/lib/node_modules/xo-server/node_modules/@xen-orchestra/log/configure.js:118:17)\n at IncomingMessage.emit (node:domain:475:12)\n at IncomingMessage._destroy (node:_http_incoming:179:10)\n at _destroy (node:internal/streams/destroy:102:25)\n at IncomingMessage.destroy (node:internal/streams/destroy:64:5)\n at TLSSocket.socketCloseListener (node:_http_client:407:11)\n at TLSSocket.emit (node:events:402:35)" } } ], "end": 1646332004583 }

My apologies for the lengthy post, but I've tried just about every avenue I can think of. I'm hoping you may see something that I'm not. Thanks.
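For anyone reading a log like this one, a small script can make the nesting easier to follow. The sketch below is not part of XO: it assumes only the structure visible in the log (nested `tasks` with `start`/`end` in epoch milliseconds), uses an abbreviated copy of the log with values taken from the post, and the helper names `walk` and `duration_ms` are made up for illustration. It prints how long each task ran and decodes the export URL of the deepest failed task.

```python
import json
from urllib.parse import parse_qs, urlsplit

# Abbreviated copy of the log above: timestamps, statuses and the export URL
# are taken from the post; every other field is omitted for brevity.
LOG = json.loads("""
{
  "message": "backup", "status": "failure",
  "start": 1646328525726, "end": 1646332004583,
  "tasks": [{
    "message": "backup VM", "status": "failure",
    "start": 1646328526912, "end": 1646332004582,
    "tasks": [
      {"message": "snapshot", "status": "success",
       "start": 1646328527437, "end": 1646328533606},
      {"message": "export", "status": "failure",
       "start": 1646328533607, "end": 1646331954922,
       "tasks": [{
         "message": "transfer", "status": "failure",
         "start": 1646328533647, "end": 1646331954922,
         "result": {
           "message": "HTTP connection has timed out",
           "url": "https://10.131.200.3/export_raw_vdi/?format=vhd&vdi=OpaqueRef%3A0ccbbba4-1449-4184-be31-409b9266ba62&session_id=OpaqueRef%3A1f4f5498-b540-40b9-8ada-809a5937b90c&task_id=OpaqueRef%3Ab4256fe3-de2f-498e-9539-aed9a2f56159"
         }
       }]}
    ]
  }]
}
""")


def walk(task, depth=0):
    """Yield (depth, task) for a task and all of its nested subtasks."""
    yield depth, task
    for sub in task.get("tasks", []):
        yield from walk(sub, depth + 1)


def duration_ms(task):
    """Elapsed time between the task's start and end timestamps (epoch ms)."""
    return task["end"] - task["start"]


for depth, task in walk(LOG):
    minutes = duration_ms(task) / 60_000
    print(f"{'  ' * depth}{task['message']}: {task['status']} after {minutes:.1f} min")

# The deepest failed task is the one whose error bubbled up to its parents.
failed = [(d, t) for d, t in walk(LOG) if t["status"] == "failure"]
_, leaf = max(failed, key=lambda pair: pair[0])

# Decode the export URL to see which host and VDI reference were involved.
url = urlsplit(leaf["result"]["url"])
params = {key: values[0] for key, values in parse_qs(url.query).items()}
print(url.hostname, url.path, params)
```

On this log, the deepest failed task is the `transfer`, which lasted 3,421,275 ms (about 57 minutes) before the connection closed. The decoded `vdi` and `task_id` values are opaque references that can presumably be matched against the export task still shown as running on the pool.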

-

@northportio Is this XO (from source) or XOA?

Which version of XCP?

Try upgrading XO and running again.

-

@Andrew

It's happening on both built from source and XOA.

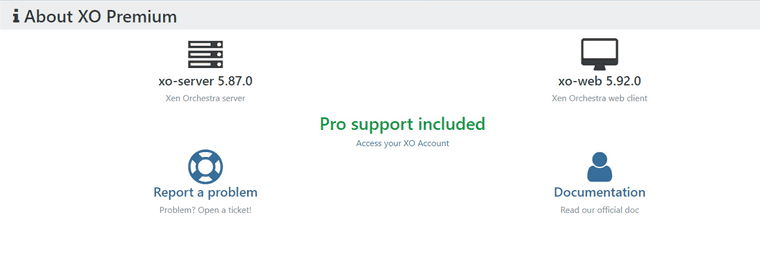

xo-server 5.87.0

xo-web 5.92.0

XCP-NG 8.2.1

I'm as up-to-date as possible as of 3-3-2022 1400h.

I'd also like to add that I tried with different hosts as the master role of the pool. Still yields the error.

-

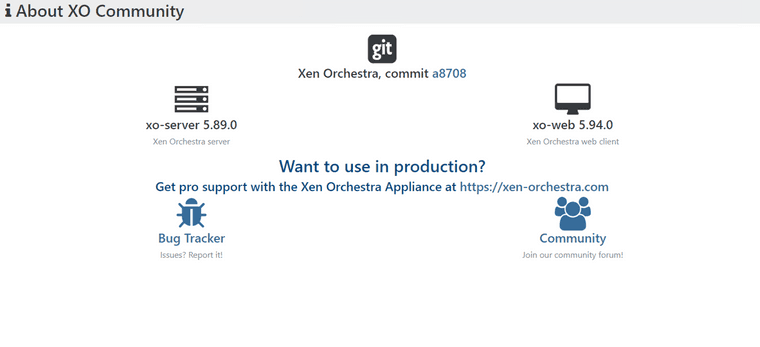

@northportio XO Built from source (commit a8708) is XO-server 5.89.0 and XO-web 5.94.0

I don't know if it will solve the issue but it's worth a try. Do you have enough space for the snapshots?

-

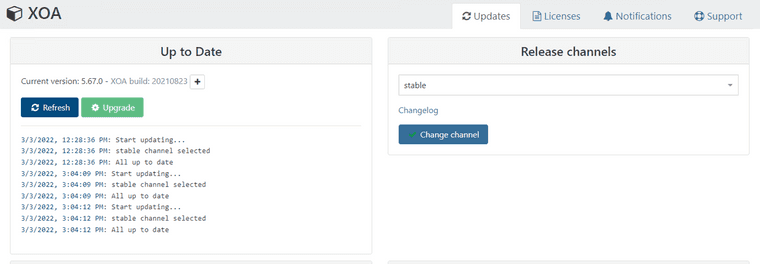

My apologies for the mix up. Here are the versions:

XOA:

Built from source:

And I have plenty of space for snapshots. I have a rolling snapshot on that VM that works.

-

@northportio You could try switching to the `latest` release channel.

-

@Danp Failed again on `latest`. Another thing to note: the `Exporting content of VDI SAGE-0` task keeps going forever, and the only way to clear it out is to restart the toolstack.

-

@northportio Please could you try this and report back:

Create this file, `/etc/xo-server/config.httpInactivityTimeout.toml`:

    # XOA Support - Work-around HTTP timeout issue during backups
    [xapiOptions]
    httpInactivityTimeout = 1800000 # 30 mins

And rerun the backup job.

-

@Darkbeldin I did that and the job still failed.

{ "data": { "mode": "delta", "reportWhen": "failure" }, "id": "1646508682255", "jobId": "933a38ad-48d8-48ed-bbec-e037639c9d13", "jobName": "Sage", "message": "backup", "scheduleId": "c7da8664-c4a3-41f3-82a6-c065da75b3db", "start": 1646508682255, "status": "failure", "infos": [ { "data": { "vms": [ "13e70ae1-3223-7b94-dc59-c31f7416af82" ] }, "message": "vms" } ], "tasks": [ { "data": { "type": "VM", "id": "13e70ae1-3223-7b94-dc59-c31f7416af82" }, "id": "1646508683162", "message": "backup VM", "start": 1646508683162, "status": "failure", "tasks": [ { "id": "1646508683673", "message": "snapshot", "start": 1646508683673, "status": "success", "end": 1646508688331, "result": "fd17d98f-1f25-4671-7321-6416fb3de129" }, { "data": { "id": "35f70147-809e-4bec-9531-927d187fce7c", "isFull": true, "type": "remote" }, "id": "1646508688332", "message": "export", "start": 1646508688332, "status": "failure", "tasks": [ { "id": "1646508688372", "message": "transfer", "start": 1646508688372, "status": "failure", "end": 1646513475586, "result": { "canceled": false, "method": "GET", "url": "https://10.131.200.3/export_raw_vdi/?format=vhd&vdi=OpaqueRef%3Ac9d9f6be-fb17-4993-bc3a-c11405ba7f6e&session_id=OpaqueRef%3A5fb25acb-a5e3-4f3c-9dc1-49f55a527d74&task_id=OpaqueRef%3Af73a85cd-33ae-4b8e-bfad-c9139f035e06", "timeout": true, "message": "HTTP connection has timed out", "name": "Error", "stack": "Error: HTTP connection has timed out\n at IncomingMessage.emitAbortedError (/usr/local/lib/node_modules/xo-server/node_modules/http-request-plus/index.js:83:19)\n at Object.onceWrapper (node:events:509:28)\n at IncomingMessage.emit (node:events:390:28)\n at IncomingMessage.patchedEmit (/usr/local/lib/node_modules/xo-server/node_modules/@xen-orchestra/log/configure.js:118:17)\n at IncomingMessage.emit (node:domain:475:12)\n at IncomingMessage._destroy (node:_http_incoming:179:10)\n at _destroy (node:internal/streams/destroy:102:25)\n at IncomingMessage.destroy (node:internal/streams/destroy:64:5)\n 
at TLSSocket.socketCloseListener (node:_http_client:407:11)\n at TLSSocket.emit (node:events:402:35)" } } ], "end": 1646513475586, "result": { "canceled": false, "method": "GET", "url": "https://10.131.200.3/export_raw_vdi/?format=vhd&vdi=OpaqueRef%3Ac9d9f6be-fb17-4993-bc3a-c11405ba7f6e&session_id=OpaqueRef%3A5fb25acb-a5e3-4f3c-9dc1-49f55a527d74&task_id=OpaqueRef%3Af73a85cd-33ae-4b8e-bfad-c9139f035e06", "timeout": true, "message": "HTTP connection has timed out", "name": "Error", "stack": "Error: HTTP connection has timed out\n at IncomingMessage.emitAbortedError (/usr/local/lib/node_modules/xo-server/node_modules/http-request-plus/index.js:83:19)\n at Object.onceWrapper (node:events:509:28)\n at IncomingMessage.emit (node:events:390:28)\n at IncomingMessage.patchedEmit (/usr/local/lib/node_modules/xo-server/node_modules/@xen-orchestra/log/configure.js:118:17)\n at IncomingMessage.emit (node:domain:475:12)\n at IncomingMessage._destroy (node:_http_incoming:179:10)\n at _destroy (node:internal/streams/destroy:102:25)\n at IncomingMessage.destroy (node:internal/streams/destroy:64:5)\n at TLSSocket.socketCloseListener (node:_http_client:407:11)\n at TLSSocket.emit (node:events:402:35)" } } ], "end": 1646513527913, "result": { "canceled": false, "method": "GET", "url": "https://10.131.200.3/export_raw_vdi/?format=vhd&vdi=OpaqueRef%3Ac9d9f6be-fb17-4993-bc3a-c11405ba7f6e&session_id=OpaqueRef%3A5fb25acb-a5e3-4f3c-9dc1-49f55a527d74&task_id=OpaqueRef%3Af73a85cd-33ae-4b8e-bfad-c9139f035e06", "timeout": true, "message": "HTTP connection has timed out", "name": "Error", "stack": "Error: HTTP connection has timed out\n at IncomingMessage.emitAbortedError (/usr/local/lib/node_modules/xo-server/node_modules/http-request-plus/index.js:83:19)\n at Object.onceWrapper (node:events:509:28)\n at IncomingMessage.emit (node:events:390:28)\n at IncomingMessage.patchedEmit (/usr/local/lib/node_modules/xo-server/node_modules/@xen-orchestra/log/configure.js:118:17)\n at IncomingMessage.emit 
(node:domain:475:12)\n at IncomingMessage._destroy (node:_http_incoming:179:10)\n at _destroy (node:internal/streams/destroy:102:25)\n at IncomingMessage.destroy (node:internal/streams/destroy:64:5)\n at TLSSocket.socketCloseListener (node:_http_client:407:11)\n at TLSSocket.emit (node:events:402:35)" } } ], "end": 1646513527914 }

-

2022-07-04T10_53_26.656Z - backup NG.txt

I have the same problem now, did this ever get resolved?

From source (today):

Xen Orchestra, commit 34c84

xo-server 5.98.0

xo-web 5.99.0

-

@Meth0d The error itself only means that the VM can't be downloaded, but there can be several root causes behind it, so you need to investigate all the parameters of the backup. For example, do you have enough space to make the snapshot? Is your remote accessible, with enough space?
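The free-space part of that checklist can be scripted. This is a rough sketch, not an XO feature: `free_gib` and `has_room` are hypothetical helper names, and the path and size are placeholders to replace with the directory where the remote is mounted and the size of the VM's disks.

```python
import shutil

GIB = 1024 ** 3


def free_gib(path):
    """Free space, in GiB, on the filesystem that holds `path`."""
    return shutil.disk_usage(path).free / GIB


def has_room(path, needed_gib):
    """True if the filesystem holding `path` has at least `needed_gib` GiB free."""
    return free_gib(path) >= needed_gib


# Placeholder values: point this at the mounted remote and use the real disk size.
REMOTE_MOUNT = "."
NEEDED_GIB = 200
print(f"{REMOTE_MOUNT}: {free_gib(REMOTE_MOUNT):.1f} GiB free, "
      f"room for {NEEDED_GIB} GiB: {has_room(REMOTE_MOUNT, NEEDED_GIB)}")
```

If the remote is unreachable, `shutil.disk_usage` will typically raise an `OSError` or hang on a stale mount, which is itself a useful signal.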

-

@Darkbeldin There is enough space for the snapshot and on the remote. How can I investigate the root cause further?

-

Have you tried to extend the HTTP timeout?

-

@olivierlambert I have created `config.httpInactivityTimeout.toml` in `/opt/xen-orchestra/packages/xo-server` with the content from above. I'm not sure how I can check whether that's actually set.

BTW: this is not a CR backup problem; I also don't see tasks running for my "normal" backup job. I can't check "how far" the actual backup runs and whether that differs.