Error: couldn't instantiate any remote

-

I can confirm the same thing has happened to my lab setup as is happening to Screame1.

I have three different servers that run a continuous replication, one every 10 minutes. Everything has been working perfectly for a couple weeks. Yesterday, at around 3:50 PM, I pulled down the latest XO update, 3d3b6, and my continuous replications stopped working from that point forward. The replication prior to the update (that ran at 3:40 PM) worked perfectly... the next one to run at 4:00 PM failed, and all have continued to fail from that point forward.

Like Screame1, I am also running XCP-ng 8.2.1 (GPLv2), with XO from sources update 3d3b6. Prior to updating yesterday, I was on XO update ed78d. It looks like one of the six commits that were pushed out between that version and the latest version has somehow broken the ability to run continuous replications.

I have a mix of hardware involved in the test lab... some are simple single drive workstations acting as hosts, and I have one HP server with standard SATA drives in a RAID 5 array.

Normal backups to an NFS share are working... only the continuous replications between hosts are broken.

-

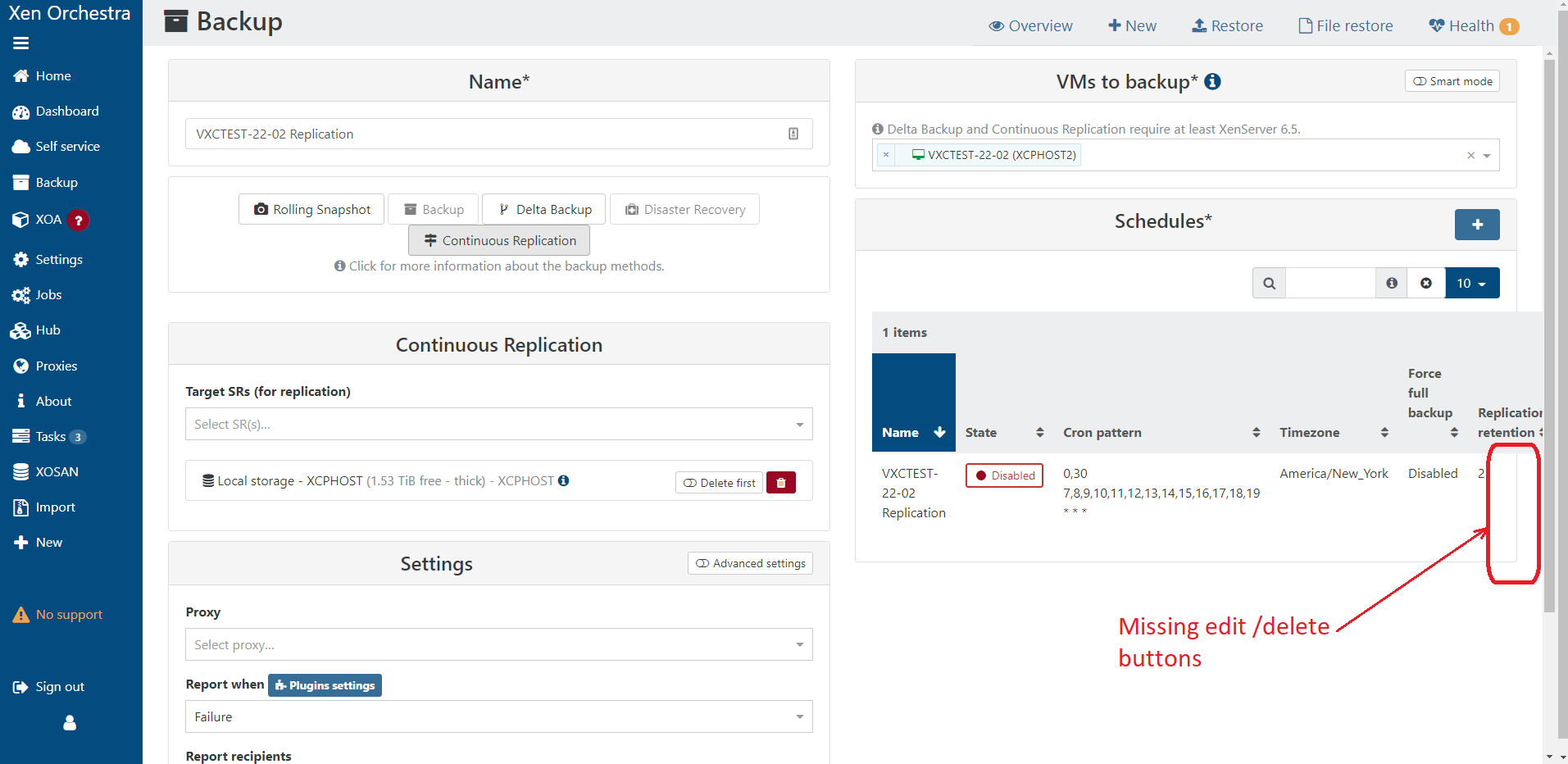

Just another bit of additional information. In my continuous replication jobs, the buttons that allow you to delete or edit the schedule for the jobs are missing, as well. Those same buttons are available for my Delta Backup jobs.

I even deleted the job and built a new continuous replication job from scratch, and I have the same issue with the new job as with the originals.

Hopefully this extra info helps. If not, I'll also be happy to provide anything you ask for... hopefully since you now have more than one instance, combining the two will help!

Thanks!

-

@JamfoFL The buttons are likely there, just off screen. Can you try expanding the window or scrolling to the right?

-

@Danp Yuuuup! I'm a flippin' idiot on that front. Thanks, Danp! I don't know why my screen is now reformatted in those windows (it hadn't been like this previously, and doesn't look like this for the Delta Backups), but if it works and only looks funny, I can live with that. You can see how the border that usually surrounds all the fields for the scheduler is now "too short" and doesn't show stuff off of the screen.

Regardless... as I said, I can live with a slightly off-kilter screen. It's the complete loss of the ability to run continuous replications that is of the greatest concern. I just want to throw in as much detail as possible.

Thanks again!!

-

Just for completeness... here is the log for the failure of one of the continuous replications jobs that had been working for quite some time until after the update:

{ "data": { "mode": "delta", "reportWhen": "failure" }, "id": "1664289600023", "jobId": "ab9ec0fb-43d5-4489-9f1b-b38e39b80e1a", "jobName": "VXCTEST-22-03 Replication", "message": "backup", "scheduleId": "99e24d76-1455-4e18-9f47-bc569cfe74e4", "start": 1664289600023, "status": "failure", "end": 1664289600028, "result": { "errors": {}, "message": "couldn't instantiate any remote", "name": "Error", "stack": "Error: couldn't instantiate any remote\n at executor (file:///root/xen-orchestra/packages/xo-server/src/xo-mixins/backups-ng/index.mjs:233:27)\n at file:///root/xen-orchestra/packages/xo-server/src/xo-mixins/jobs/index.mjs:263:30\n at Jobs._runJob (file:///root/xen-orchestra/packages/xo-server/src/xo-mixins/jobs/index.mjs:292:22)\n at Jobs.runJobSequence (file:///root/xen-orchestra/packages/xo-server/src/xo-mixins/jobs/index.mjs:332:7)" } }

And here is the log for the brand-new continuous replication job I set up just now to see if that would make a difference:

backupNg.runJob { "id": "905e5413-f550-40af-afbb-027cfc2133f7", "schedule": "23b7fbac-77f0-4a0a-99cf-bb39ddb514ab" } { "errors": {}, "message": "couldn't instantiate any remote", "name": "Error", "stack": "Error: couldn't instantiate any remote at executor (file:///root/xen-orchestra/packages/xo-server/src/xo-mixins/backups-ng/index.mjs:233:27) at file:///root/xen-orchestra/packages/xo-server/src/xo-mixins/jobs/index.mjs:263:30 at Jobs._runJob (file:///root/xen-orchestra/packages/xo-server/src/xo-mixins/jobs/index.mjs:292:22) at Jobs.runJobSequence (file:///root/xen-orchestra/packages/xo-server/src/xo-mixins/jobs/index.mjs:332:7) at Api.#callApiMethod (file:///root/xen-orchestra/packages/xo-server/src/xo-mixins/api.mjs:394:20)" }

-

One last bit of information to add, then I'll shut up and let the professionals go at it!

As I have been running these jobs successfully for some time before this issue popped up, I did have a number of VMs sitting ready from prior continuous replication jobs. I can confirm that I was able to successfully power on and boot these already existing continuous replication VMs, so whatever the issue is, it is only affecting the ability to complete continuous replication jobs going forward, but has not affected the ability to access and utilize the results of previous jobs.

-

@JamfoFL Nice catch!

I can't help any further since I'm not a great dev, but @florent or @olivierlambert will be better than me at diagnosing this! ^^

-

@JamfoFL What commit is showing under the XO About screen?

Error is likely related to this commit

-

@Danp Current commit is 3d3b6.

xo-server: 5.103.0

xo-web: 5.104.0

Yes... as I had mentioned, everything worked until I updated yesterday at around 3:50 PM. I noticed there were five different commits that were released yesterday, and the one you linked to is one of those. So, as I figured, one of the commits from yesterday "broke" the ability to run continuous replications.

Now that it looks like you've focused on a cause, I'm sure it's only a matter of time until a new commit is published to fix the issue. Once it's released, I'll get it installed and, hopefully, we can put this behind us.

Thanks!!

-

Seems that the issue is on the latest version. I had the same issue and rolled back to:

xo-server 5.102.3

xo-web 5.103.0

Xen Orchestra, commit 9d09a

Rolling back fixed the issue. Guess I'll wait a bit for the issue to be resolved in a later patch.
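
For anyone else who needs to roll back while waiting, this is roughly what it looks like as a small shell helper. It's only a sketch: the function name `xo_rollback` is made up for illustration, it assumes a git remote named `origin`, and the short commit hash shown in the About screen may need to be longer if git reports it as ambiguous.

```shell
# Roll an XO-from-sources checkout back to a known-good commit and rebuild.
# Hypothetical helper; the remote name "origin" is an assumption.
xo_rollback() (
  xo_dir=$1   # path to the xen-orchestra checkout
  commit=$2   # known-good commit, e.g. 9d09a
  [ -n "$xo_dir" ] && [ -n "$commit" ] || {
    echo "usage: xo_rollback <xo_dir> <commit>" >&2
    exit 2
  }
  # Each step only runs if the previous one succeeded.
  cd "$xo_dir" &&
  git fetch origin &&
  git checkout "$commit" &&   # leaves the checkout on a detached HEAD
  yarn &&
  yarn build
)
```

It would be called as something like `xo_rollback /root/xen-orchestra 9d09a` (that path is the one in the stack traces above; yours may differ), followed by restarting xo-server however your install does that. Because the checkout ends up on a detached HEAD, you'll need to check out your usual branch again before pulling future updates.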

-

@JamfoFL Same issue here, it seems to be a version problem.

-

Just to be sure: it's when doing BOTH CR and delta backup?

-

Hello,

We could reproduce it with a job that has only continuous replication. The PR with the fix is here: https://github.com/vatesfr/xen-orchestra/pull/6437/files

Can you confirm it fixes the problem?

Regards

Florent

-

@olivierlambert No... only with CR. Delta backups still work like a champ!

-

@florent Thanks for the update, Florent!

Unfortunately, my office is in the direct path of Hurricane Ian, so we are going to be closed for the next day or two, and I won't have an opportunity to try the patch until after we return from the storm!

Hopefully Screame1 (who originally submitted the error) will be able to test and verify, and I will do the same once I am back to the office.

Thanks again for your post, and I'll be sure to update you as soon as I can!

-

@JamfoFL I've been watching the coverage of Ian from here in Indiana. You and everyone in the path are in my thoughts. Stay safe!

-

@screame1 You can do something like this:

cd your_folder_where_xo_is

git checkout fix_continuous_replication

git pull -r

yarn

yarn build

(I haven't used XO from sources in a long time, so maybe I'm wrong ^^)
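
The same steps as a small shell function, in case it helps. This is a sketch, not an official procedure: the name `xo_try_branch` is made up, and the extra `git fetch origin` is my assumption (a remote named `origin`, and a branch that may not be known to your local checkout yet).

```shell
# Switch an XO-from-sources checkout to a fix branch and rebuild.
# Hypothetical helper; the remote name "origin" is an assumption.
xo_try_branch() (
  xo_dir=$1   # path to the xen-orchestra checkout
  branch=$2   # e.g. fix_continuous_replication
  [ -n "$xo_dir" ] && [ -n "$branch" ] || {
    echo "usage: xo_try_branch <xo_dir> <branch>" >&2
    exit 2
  }
  # Each step only runs if the previous one succeeded.
  cd "$xo_dir" &&
  git fetch origin &&        # make a newly pushed branch visible locally
  git checkout "$branch" &&
  git pull --rebase &&       # long form of "git pull -r"
  yarn &&
  yarn build
)
```

Call it with your own folder, for example `xo_try_branch your_folder_where_xo_is fix_continuous_replication`, then restart xo-server so the rebuilt code is actually used.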

-

@AtaxyaNetwork Thanks! I was able to find instructions in another thread, so I was down the path when you replied. I've applied the patch, restarted the server, and it appears to have started the backup correctly. It will obviously take a few minutes to know if the backups work, but this patch has moved me past the error reported.

Thanks again for the efforts @florent @JamfoFL and @AtaxyaNetwork !

-

@florent Thanks, patch applied and CR is working now.