Faulty XCP-ng host after update (but only on XO)

-

@olivierlambert Well, the XO instance is connected to the master, and it's not the master node that shows this problem.

I assumed that rebooting XO already amounted to "disconnecting" and "connecting", but I am going to do it manually. So far I have:

- Rebooted the XO VM

- Rebooted the XCP node

- Rebooted the XCP master

None of these produced a different result.

-

I just did as you suggested and went to Settings > Servers.

I disabled the master, counted to 10, and enabled the master again. The problem persists. (For a moment I thought it was gone, but then I realised I was looking at XOA, not XO.)

-

You only need to connect to the master; no direct connection to the other hosts is needed. You should only have the master server listed there. Is that the case?

-

@olivierlambert Yes, only one server is listed.

-

Well, since the issue doesn't appear in XOA but only in XO, I am going to set it aside for now and see whether it resolves itself by the weekend. If not, I'll try removing this XO build and making a new one.

Thank you for your suggestions. I'll try them and let you know how it goes.

-

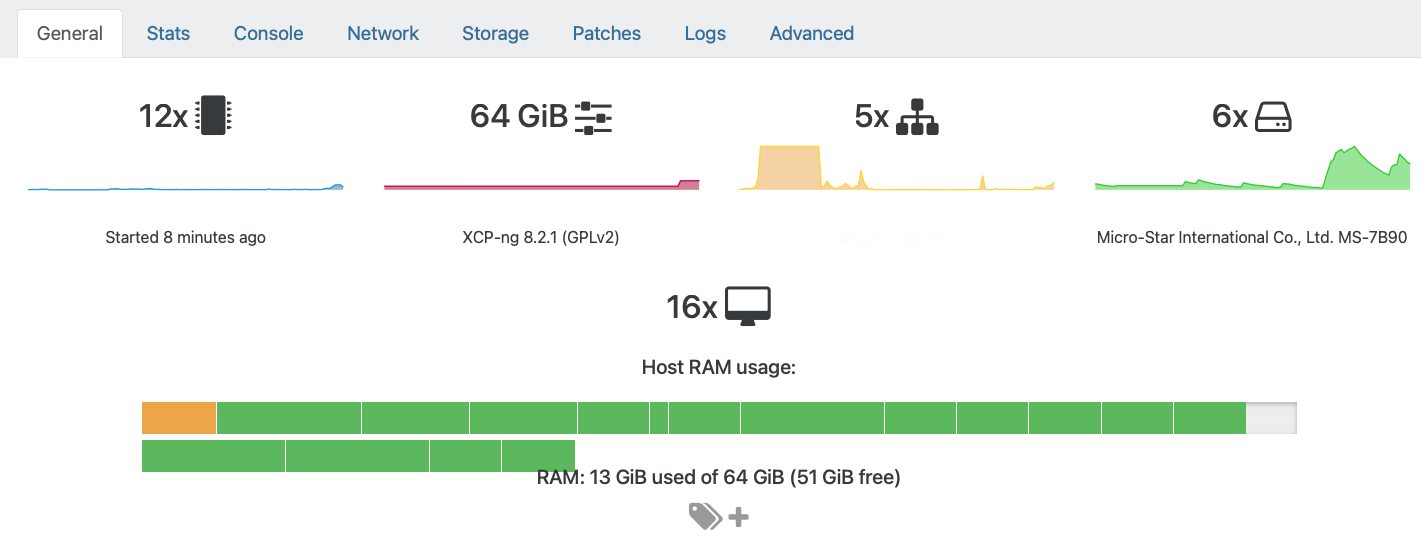

@olivierlambert It now happens to me too on XO from sources (commit 039d5). The graph seems to show the memory usage of ALL VMs, including guests that are NOT running, and ONLY when you have a single host/pool. The actual memory calculation below the graph looks correct.

-

@Andrew Same here.

-

@olivierlambert @Danp @julien-f

Problem caused by XO commit 1718649e0c6e18fd01b6cb3fb3c6f740214decc4

-

Thank you everyone, I'm investigating.

-

Probably because this view previously used $container, which pointed to the host only while the VM was running. Since we changed that logic, the view broke.

-

It should be fixed now. Let me know if there are any other issues.

https://github.com/vatesfr/xen-orchestra/commit/9e5541703b25f6533cd94bdd786a716b6f739615

-

Alright, I updated XO again and the problem is gone.

I would just like to add that, unlike what another user described (it only happening on single-node installs), this was happening to me on 2 out of 4 nodes in a cluster: two hosts displayed normally, and the other two showed the glitch. Anyway, SOLVED!

-

olivierlambert marked this topic as a question on

-

olivierlambert has marked this topic as solved on