CBT: the thread to centralize your feedback

-

Because you are writing faster in the VM than the garbage collector can merge/coalesce.

You could try to change the leaf coalesce timeout value to see if it's better.

-

@manilx we use the values below:

/opt/xensource/sm/cleanup.py :

LIVE_LEAF_COALESCE_MAX_SIZE = 1024 * 1024 * 1024 # bytes

LIVE_LEAF_COALESCE_TIMEOUT = 300 # seconds

Since then we have not seen issues like these. Leaf coalesce is different from normal coalesce when a snapshot is left behind. I think your problem is:

Problem 2: Coalesce due to Timed-out:

Example:

Nov 16 23:25:14 vm6 SMGC: [15312] raise util.SMException("Timed out")

Nov 16 23:25:14 vm6 SMGC: [15312]

Nov 16 23:25:14 vm6 SMGC: [15312] *

Nov 16 23:25:14 vm6 SMGC: [15312] *** UNDO LEAF-COALESCE

This is when the VDI is currently under significant IO stress. If possible, take the VM offline and do an offline coalesce or do the coalesce when the VM has less load. Upcoming versions of XenServer will address this issue more efficiently.

-

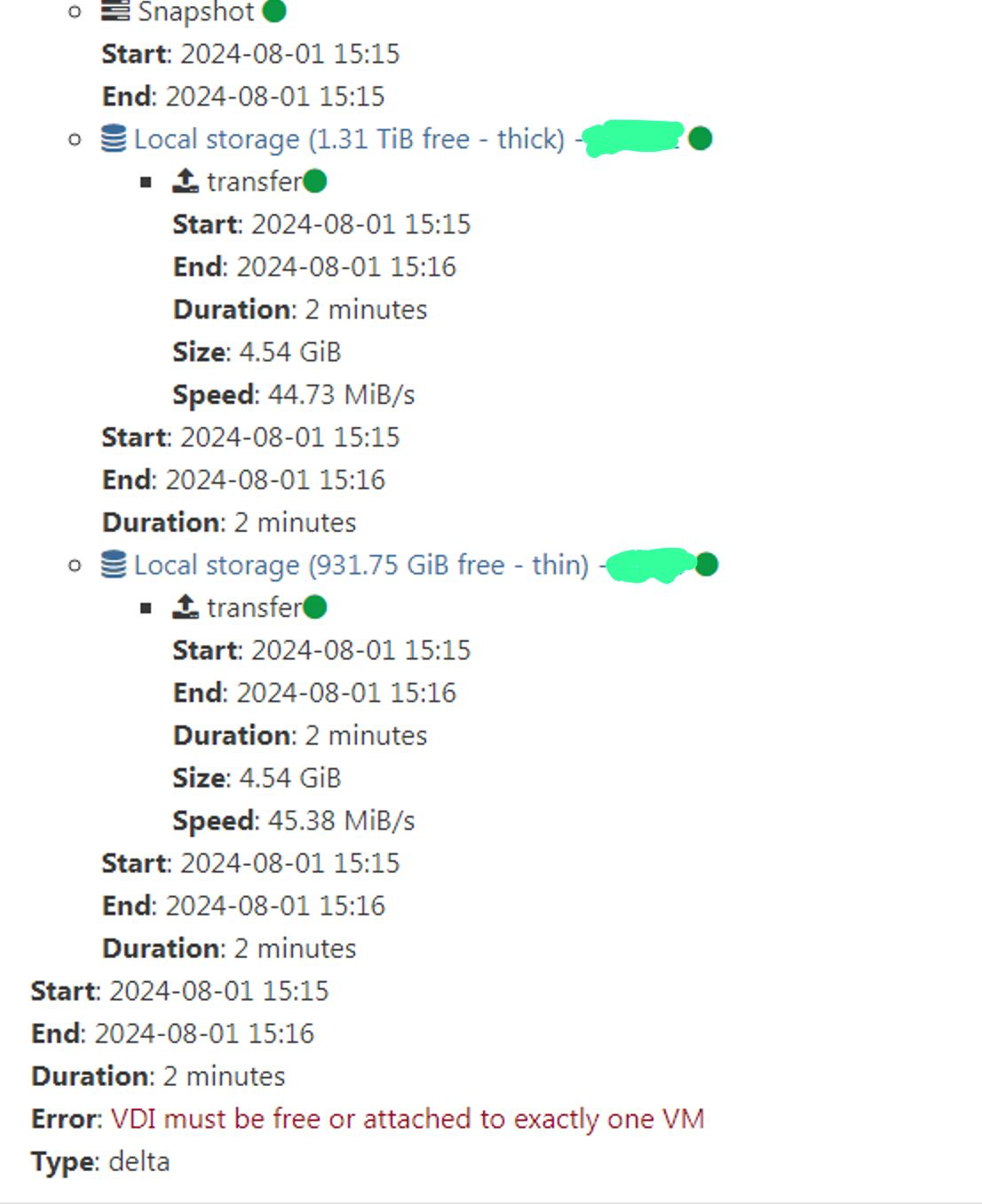

I have been seeing this error recently: "VDI must be free or attached to exactly one VM". When I look at the health dashboard, quite a few snapshots are attached to the control domain. I am not sure if this is related to the CBT backups but wanted to ask. It seems to only be happening on my delta backups that have CBT enabled.

-

@olivierlambert Actually I don't think this is the case. The VMs are completely idle, doing nothing.

-

@rtjdamen VM's are idle (there is no doubt here).

Will try these values and see how it goes.

-

@manilx it did wonders at our setup, hope they will help you as well

-

@rtjdamen THX!

I changed /opt/xensource/sm/cleanup.py as per your settings and rebooted (perhaps not needed). Looks good. Coalesce finished! I wonder why it failed, as there was no load on the hosts/VMs/NAS share...

Will monitor.
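For the monitoring part, a minimal sketch that scans storage manager log lines for the timed-out leaf coalesce signature quoted earlier in this thread. The function name is hypothetical, and the two patterns are taken only from that log excerpt, so other SM versions may word these lines differently.

```python
import re
from typing import Iterable, List

# Signatures copied from the SMGC excerpt quoted in this thread (assumption:
# your SM version logs the timeout with the same wording).
TIMEOUT_PATTERNS = (
    re.compile(r'SMException\("Timed out"\)'),
    re.compile(r"UNDO LEAF-COALESCE"),
)


def find_coalesce_timeouts(lines: Iterable[str]) -> List[str]:
    """Return the SMGC log lines that point to a timed-out leaf coalesce."""
    hits = []
    for line in lines:
        # Only garbage collector entries are of interest here.
        if "SMGC" not in line:
            continue
        if any(pattern.search(line) for pattern in TIMEOUT_PATTERNS):
            hits.append(line.rstrip("\n"))
    return hits
```

To use it, open the SM log on the host (assumed here to be /var/log/SMlog) and pass the file object to `find_coalesce_timeouts`; an empty list means no timeout signature was found in that file.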

-

@manilx P.S.: These mods do not survive a host update, right?

-

@manilx nope, but I have talked with a dev about it and they are looking to make it a setting somewhere. I don’t know the status of that. Good to see this works for you!

-

@manilx I have not tested that, but I would say that's correct. Upgrades are rather destructive for custom changes to system scripts and custom settings. This is to ensure that scripts and settings are set to standard known good values on install or upgrade.

I keep notes on my custom settings/scripts/configs so I can check them after an upgrade or a new install.
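A check like that can be scripted. The sketch below compares the two constants discussed in this thread against the values kept in your notes; the helper names are hypothetical, and it only reads the text of `cleanup.py`, it does not modify anything.

```python
import re
from typing import Dict, Iterable, Optional

# Values quoted earlier in this thread; replace with whatever your notes say.
EXPECTED = {
    "LIVE_LEAF_COALESCE_MAX_SIZE": "1024 * 1024 * 1024",
    "LIVE_LEAF_COALESCE_TIMEOUT": "300",
}


def _norm(expr: Optional[str]) -> Optional[str]:
    """Strip all whitespace so '1024*1024' and '1024 * 1024' compare equal."""
    return None if expr is None else re.sub(r"\s+", "", expr)


def read_constants(source: str, names: Iterable[str]) -> Dict[str, Optional[str]]:
    """Extract the right-hand side (without trailing comment) of each assignment."""
    found: Dict[str, Optional[str]] = {}
    for name in names:
        match = re.search(
            rf"^\s*{re.escape(name)}\s*=\s*([^#\n]+)", source, re.MULTILINE
        )
        found[name] = match.group(1).strip() if match else None
    return found


def drifted(source: str, expected: Dict[str, str] = EXPECTED) -> Dict[str, Optional[str]]:
    """Return the constants whose current value differs from the noted one.

    A value of None means the constant was not found in the file at all.
    """
    current = read_constants(source, expected)
    return {k: v for k, v in current.items() if _norm(v) != _norm(expected[k])}
```

After an update, read /opt/xensource/sm/cleanup.py into a string and pass it to `drifted`; an empty dict means the custom values are still in place.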

-

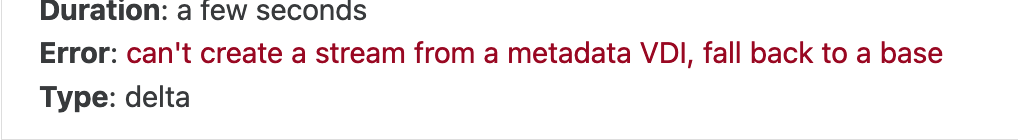

CR failed on all VM's with

Next one was ok.

This happens sometimes, it's not consistent.
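The raw job log below is hard to read at a glance. As a rough sketch, the failed VMs and their error messages can be pulled out of such a log like this. The field names (`tasks`, `status`, `data.name_label`, `result.message`) are taken from the log in this post; the function name is hypothetical, and other XO versions may structure the log differently.

```python
from typing import List, Tuple


def failed_vms(job_log: dict) -> List[Tuple[str, str]]:
    """Return (VM name, error message) for every failed top-level task."""
    failures = []
    for task in job_log.get("tasks", []):
        if task.get("status") != "failure":
            continue
        # Fall back to the task id when the VM name is missing.
        name = task.get("data", {}).get("name_label", task.get("id", "?"))
        result = task.get("result") or {}
        failures.append((name, result.get("message", "").strip()))
    return failures
```

Load the log with `json.loads` and pass the resulting dict to `failed_vms` to get one line per failed VM instead of the full stack traces.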

{ "data": { "mode": "delta", "reportWhen": "failure" }, "id": "1722153616340", "jobId": "4c084697-6efd-4e35-a4ff-74ae50824c8b", "jobName": "CR", "message": "backup", "scheduleId": "b1cef1e3-e313-409b-ad40-017076f115ce", "start": 1722153616340, "status": "failure", "infos": [ { "data": { "vms": [ "52e64134-62e3-9682-4e3f-296a1198db4d", "43a4d905-7d13-85b8-bed3-f6b805ff26ac", "b5d74e0b-388c-019a-6994-e174c9ca7a51", "d6a5d420-72e6-5c87-a3af-b5eb5c4a44dd", "131ee7f6-4d58-31d9-39a8-53727cc3dc68" ] }, "message": "vms" } ], "tasks": [ { "data": { "type": "VM", "id": "52e64134-62e3-9682-4e3f-296a1198db4d", "name_label": "XO" }, "id": "1722153619552", "message": "backup VM", "start": 1722153619552, "status": "failure", "tasks": [ { "id": "1722153619599", "message": "snapshot", "start": 1722153619599, "status": "success", "end": 1722153622486, "result": "6b6036ae-708e-4cb0-2681-12165ba19919" }, { "data": { "id": "0d9ee24c-ea59-e0e6-8c04-a9a65c22f110", "isFull": false, "name_label": "TBS-h574TX", "type": "SR" }, "id": "1722153622486:0", "message": "export", "start": 1722153622486, "status": "interrupted" } ], "infos": [ { "message": "will delete snapshot data" }, { "data": { "vdiRef": "OpaqueRef:f35bea93-45b3-f4bd-2752-3853850ff73a" }, "message": "Snapshot data has been deleted" } ], "end": 1722153637891, "result": { "message": "can't create a stream from a metadata VDI, fall back to a base ", "name": "Error", "stack": "Error: can't create a stream from a metadata VDI, fall back to a base \n at Xapi.exportContent (file:///opt/xo/xo-builds/xen-orchestra-202407261701/@xen-orchestra/xapi/vdi.mjs:202:15)\n at process.processTicksAndRejections (node:internal/process/task_queues:95:5)\n at async file:///opt/xo/xo-builds/xen-orchestra-202407261701/@xen-orchestra/backups/_incrementalVm.mjs:57:32\n at async Promise.all (index 0)\n at async cancelableMap (file:///opt/xo/xo-builds/xen-orchestra-202407261701/@xen-orchestra/backups/_cancelableMap.mjs:11:12)\n at async exportIncrementalVm 
(file:///opt/xo/xo-builds/xen-orchestra-202407261701/@xen-orchestra/backups/_incrementalVm.mjs:26:3)\n at async IncrementalXapiVmBackupRunner._copy (file:///opt/xo/xo-builds/xen-orchestra-202407261701/@xen-orchestra/backups/_runners/_vmRunners/IncrementalXapi.mjs:44:25)\n at async IncrementalXapiVmBackupRunner.run (file:///opt/xo/xo-builds/xen-orchestra-202407261701/@xen-orchestra/backups/_runners/_vmRunners/_AbstractXapi.mjs:369:9)\n at async file:///opt/xo/xo-builds/xen-orchestra-202407261701/@xen-orchestra/backups/_runners/VmsXapi.mjs:166:38" } }, { "data": { "type": "VM", "id": "43a4d905-7d13-85b8-bed3-f6b805ff26ac", "name_label": "Bitwarden" }, "id": "1722153619556", "message": "backup VM", "start": 1722153619556, "status": "failure", "tasks": [ { "id": "1722153619603", "message": "snapshot", "start": 1722153619603, "status": "success", "end": 1722153624616, "result": "58f1ac5b-7de0-8276-3872-b2a7d5a26ec2" }, { "data": { "id": "0d9ee24c-ea59-e0e6-8c04-a9a65c22f110", "isFull": false, "name_label": "TBS-h574TX", "type": "SR" }, "id": "1722153624616:0", "message": "export", "start": 1722153624616, "status": "interrupted" } ], "infos": [ { "message": "will delete snapshot data" }, { "data": { "vdiRef": "OpaqueRef:81a61f30-99a0-25bc-35ec-25cadb323a09" }, "message": "Snapshot data has been deleted" } ], "end": 1722153655152, "result": { "message": "can't create a stream from a metadata VDI, fall back to a base ", "name": "Error", "stack": "Error: can't create a stream from a metadata VDI, fall back to a base \n at Xapi.exportContent (file:///opt/xo/xo-builds/xen-orchestra-202407261701/@xen-orchestra/xapi/vdi.mjs:202:15)\n at process.processTicksAndRejections (node:internal/process/task_queues:95:5)\n at async file:///opt/xo/xo-builds/xen-orchestra-202407261701/@xen-orchestra/backups/_incrementalVm.mjs:57:32\n at async Promise.all (index 0)\n at async cancelableMap (file:///opt/xo/xo-builds/xen-orchestra-202407261701/@xen-orchestra/backups/_cancelableMap.mjs:11:12)\n 
at async exportIncrementalVm (file:///opt/xo/xo-builds/xen-orchestra-202407261701/@xen-orchestra/backups/_incrementalVm.mjs:26:3)\n at async IncrementalXapiVmBackupRunner._copy (file:///opt/xo/xo-builds/xen-orchestra-202407261701/@xen-orchestra/backups/_runners/_vmRunners/IncrementalXapi.mjs:44:25)\n at async IncrementalXapiVmBackupRunner.run (file:///opt/xo/xo-builds/xen-orchestra-202407261701/@xen-orchestra/backups/_runners/_vmRunners/_AbstractXapi.mjs:369:9)\n at async file:///opt/xo/xo-builds/xen-orchestra-202407261701/@xen-orchestra/backups/_runners/VmsXapi.mjs:166:38" } }, { "data": { "type": "VM", "id": "b5d74e0b-388c-019a-6994-e174c9ca7a51", "name_label": "Docker Server" }, "id": "1722153637896", "message": "backup VM", "start": 1722153637896, "status": "failure", "tasks": [ { "id": "1722153637925", "message": "snapshot", "start": 1722153637925, "status": "success", "end": 1722153639557, "result": "d820e4ad-462f-7043-4f1f-ee21ed986e8d" }, { "data": { "id": "0d9ee24c-ea59-e0e6-8c04-a9a65c22f110", "isFull": false, "name_label": "TBS-h574TX", "type": "SR" }, "id": "1722153639558", "message": "export", "start": 1722153639558, "status": "interrupted" } ], "infos": [ { "message": "will delete snapshot data" }, { "data": { "vdiRef": "OpaqueRef:5f21b5e1-8423-3bbc-7361-6319bb25e97d" }, "message": "Snapshot data has been deleted" } ], "end": 1722153675901, "result": { "message": "can't create a stream from a metadata VDI, fall back to a base ", "name": "Error", "stack": "Error: can't create a stream from a metadata VDI, fall back to a base \n at Xapi.exportContent (file:///opt/xo/xo-builds/xen-orchestra-202407261701/@xen-orchestra/xapi/vdi.mjs:202:15)\n at process.processTicksAndRejections (node:internal/process/task_queues:95:5)\n at async file:///opt/xo/xo-builds/xen-orchestra-202407261701/@xen-orchestra/backups/_incrementalVm.mjs:57:32\n at async Promise.all (index 0)\n at async cancelableMap 
(file:///opt/xo/xo-builds/xen-orchestra-202407261701/@xen-orchestra/backups/_cancelableMap.mjs:11:12)\n at async exportIncrementalVm (file:///opt/xo/xo-builds/xen-orchestra-202407261701/@xen-orchestra/backups/_incrementalVm.mjs:26:3)\n at async IncrementalXapiVmBackupRunner._copy (file:///opt/xo/xo-builds/xen-orchestra-202407261701/@xen-orchestra/backups/_runners/_vmRunners/IncrementalXapi.mjs:44:25)\n at async IncrementalXapiVmBackupRunner.run (file:///opt/xo/xo-builds/xen-orchestra-202407261701/@xen-orchestra/backups/_runners/_vmRunners/_AbstractXapi.mjs:369:9)\n at async file:///opt/xo/xo-builds/xen-orchestra-202407261701/@xen-orchestra/backups/_runners/VmsXapi.mjs:166:38" } }, { "data": { "type": "VM", "id": "d6a5d420-72e6-5c87-a3af-b5eb5c4a44dd", "name_label": "Media Server" }, "id": "1722153655156", "message": "backup VM", "start": 1722153655156, "status": "failure", "tasks": [ { "id": "1722153655188", "message": "snapshot", "start": 1722153655188, "status": "success", "end": 1722153656817, "result": "6c572cec-ed70-d994-a88b-bc6066c06b0b" }, { "data": { "id": "0d9ee24c-ea59-e0e6-8c04-a9a65c22f110", "isFull": false, "name_label": "TBS-h574TX", "type": "SR" }, "id": "1722153656818", "message": "export", "start": 1722153656818, "status": "interrupted" } ], "infos": [ { "message": "will delete snapshot data" }, { "data": { "vdiRef": "OpaqueRef:c755b6ed-5d00-397c-62a8-db643c3fbdcd" }, "message": "Snapshot data has been deleted" } ], "end": 1722153660309, "result": { "message": "can't create a stream from a metadata VDI, fall back to a base ", "name": "Error", "stack": "Error: can't create a stream from a metadata VDI, fall back to a base \n at Xapi.exportContent (file:///opt/xo/xo-builds/xen-orchestra-202407261701/@xen-orchestra/xapi/vdi.mjs:202:15)\n at process.processTicksAndRejections (node:internal/process/task_queues:95:5)\n at async file:///opt/xo/xo-builds/xen-orchestra-202407261701/@xen-orchestra/backups/_incrementalVm.mjs:57:32\n at async Promise.all 
(index 0)\n at async cancelableMap (file:///opt/xo/xo-builds/xen-orchestra-202407261701/@xen-orchestra/backups/_cancelableMap.mjs:11:12)\n at async exportIncrementalVm (file:///opt/xo/xo-builds/xen-orchestra-202407261701/@xen-orchestra/backups/_incrementalVm.mjs:26:3)\n at async IncrementalXapiVmBackupRunner._copy (file:///opt/xo/xo-builds/xen-orchestra-202407261701/@xen-orchestra/backups/_runners/_vmRunners/IncrementalXapi.mjs:44:25)\n at async IncrementalXapiVmBackupRunner.run (file:///opt/xo/xo-builds/xen-orchestra-202407261701/@xen-orchestra/backups/_runners/_vmRunners/_AbstractXapi.mjs:369:9)\n at async file:///opt/xo/xo-builds/xen-orchestra-202407261701/@xen-orchestra/backups/_runners/VmsXapi.mjs:166:38" } }, { "data": { "type": "VM", "id": "131ee7f6-4d58-31d9-39a8-53727cc3dc68", "name_label": "Unifi" }, "id": "1722153660312", "message": "backup VM", "start": 1722153660312, "status": "failure", "tasks": [ { "id": "1722153660341", "message": "snapshot", "start": 1722153660341, "status": "success", "end": 1722153662203, "result": "b0dac528-8914-141b-5d37-8b68bdeb7fe0" }, { "data": { "id": "0d9ee24c-ea59-e0e6-8c04-a9a65c22f110", "isFull": false, "name_label": "TBS-h574TX", "type": "SR" }, "id": "1722153662204", "message": "export", "start": 1722153662204, "status": "interrupted" } ], "infos": [ { "message": "will delete snapshot data" }, { "data": { "vdiRef": "OpaqueRef:595f2f1f-ec64-1d43-b2de-574fcd621576" }, "message": "Snapshot data has been deleted" } ], "end": 1722153669757, "result": { "message": "can't create a stream from a metadata VDI, fall back to a base ", "name": "Error", "stack": "Error: can't create a stream from a metadata VDI, fall back to a base \n at Xapi.exportContent (file:///opt/xo/xo-builds/xen-orchestra-202407261701/@xen-orchestra/xapi/vdi.mjs:202:15)\n at process.processTicksAndRejections (node:internal/process/task_queues:95:5)\n at async 
file:///opt/xo/xo-builds/xen-orchestra-202407261701/@xen-orchestra/backups/_incrementalVm.mjs:57:32\n at async Promise.all (index 0)\n at async cancelableMap (file:///opt/xo/xo-builds/xen-orchestra-202407261701/@xen-orchestra/backups/_cancelableMap.mjs:11:12)\n at async exportIncrementalVm (file:///opt/xo/xo-builds/xen-orchestra-202407261701/@xen-orchestra/backups/_incrementalVm.mjs:26:3)\n at async IncrementalXapiVmBackupRunner._copy (file:///opt/xo/xo-builds/xen-orchestra-202407261701/@xen-orchestra/backups/_runners/_vmRunners/IncrementalXapi.mjs:44:25)\n at async IncrementalXapiVmBackupRunner.run (file:///opt/xo/xo-builds/xen-orchestra-202407261701/@xen-orchestra/backups/_runners/_vmRunners/_AbstractXapi.mjs:369:9)\n at async file:///opt/xo/xo-builds/xen-orchestra-202407261701/@xen-orchestra/backups/_runners/VmsXapi.mjs:166:38" } } ], "end": 1722153675901 } -


Yes, a fix is coming in XOA latest tomorrow

-

@olivierlambert unfortunately not!

Got the error "can’t create a stream from a metadata VDI, fall back to a base".

-

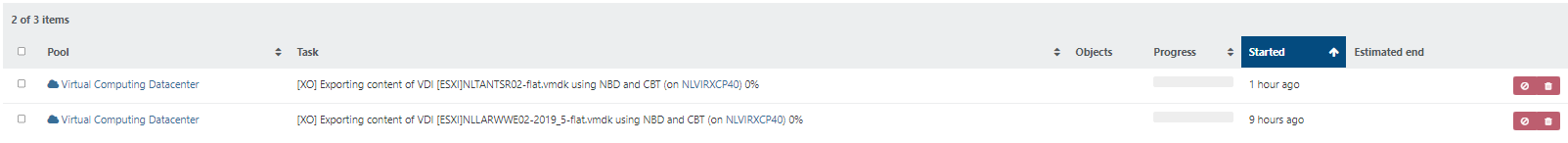

@olivierlambert I updated yesterday to the latest version. Our backups ran during the night, but there were still some errors.

I did not see the stream error. However, the same behavior seems to be occurring as we saw with the stream error, but now with the error "can't create a stream from a metadata VDI".

Some of these do have a hanging export job in XOA.

-

@olivierlambert I updated today to commit cb6cf and also got this error, but only once in multiple backups.

Both servers run version 8.2.1 with the latest updates.

-

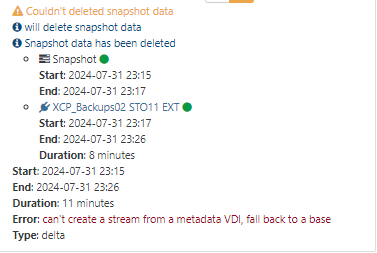

We have upgraded from commit f2188 to cb6cf and have 3 hosts. After the upgrade, backups no longer worked on any of these hosts. When reverting to f2188, everything works again.

We also deleted orphaned VDIs and let the garbage collector do its job, but it did not help.

Hosts/Errors:

1.) XCP-ng 8.2.1: "VDI must be free or attached to exactly one VM"

2.) XCP-ng 8.2.1 (with latest updates): "VDI must be free or attached to exactly one VM"

3.) XenServer 7.1.0: "MESSAGE_METHOD_UNKNOWN(VDI.get_cbt_enabled)"

On the XenServer 7.1.0 host, CBT is not enabled (and cannot be enabled).

-

@Vinylrider @olivierlambert I found that only backups on the host with the latest updates occasionally have problems.

These packages, at the versions listed, were not applied to the other 2 hosts.

xapi-core 1.249.36

xapi-tests 1.249.36

xapi-xe 1.249.36

xen-dom0-libs 4.13.5

xen-dom0-tools 4.13.5

xen-hypervisor 4.13.5

xen-libs 4.13.5

xen-tools 4.13.5

xsconsole 10.1.13

-

@olivierlambert Running XO source master (commit d0bd6) and Delta backup to S3 is looking for an off-line host in the pool, so the backup fails. This host was evacuated and in maintenance mode and being rebooted by XO. The VM being backed up was running on a different host and the master was not on the off-line host. There are several other running hosts in the pool.

There's no reason XO/XCP should be doing anything with this host...

"error": "HOST_OFFLINE(OpaqueRef:65b7a047-094b-4c7a-a503-2823e92b9fe4)"

-

With the latest XO update released this week, I see a new behavior when running a backup after a VM has moved from Host A to Host B (while staying on the same shared NFS SR).

The new error is "Error: can't create a stream from a metadata VDI, fall back to a base ". It then retries and runs a full backup.