XCP-ng 8.3 updates announcements and testing

-

@flakpyro Thanks for letting us know. I suppose there was a mirror that was not ready yet, or had a transient issue, and unfortunately XOA's rolling pool update feature is not very resilient to that at the moment.

-

I reverted the package. However, I had initially followed the directions provided by Vates in the release blog post and ran `secureboot-certs clear`, then, on each VM with Secure Boot enabled, clicked "Copy the pools default UEFI Certificates to the VM".

After reverting the updates and running `secureboot-certs install` again, I went back and clicked "Copy the pools default UEFI Certificates to the VM" again, thinking it would put the old certs back.

It sounds like this may not be enough, and I need to remove the dbx record from each of these VMs. Am I correct, or was that enough to fix these VMs?

Per the docs:

    varstore-rm <vm-uuid> d719b2cb-3d3a-4596-a3bc-dad00e67656f dbx

Note that the GUID may be found by using `varstore-ls <vm-uuid>`.

When I run that command, I see, for example:

    varstore-ls f9166a11-3c3f-33f1-505c-542ce8e1764d
    8be4df61-93ca-11d2-aa0d-00e098032b8c SecureBoot
    8be4df61-93ca-11d2-aa0d-00e098032b8c DeployedMode
    8be4df61-93ca-11d2-aa0d-00e098032b8c AuditMode
    8be4df61-93ca-11d2-aa0d-00e098032b8c SetupMode
    8be4df61-93ca-11d2-aa0d-00e098032b8c SignatureSupport
    8be4df61-93ca-11d2-aa0d-00e098032b8c PK
    8be4df61-93ca-11d2-aa0d-00e098032b8c KEK
    d719b2cb-3d3a-4596-a3bc-dad00e67656f db
    d719b2cb-3d3a-4596-a3bc-dad00e67656f dbx
    605dab50-e046-4300-abb6-3dd810dd8b23 SbatLevel
    fab7e9e1-39dd-4f2b-8408-e20e906cb6de HDDP
    e20939be-32d4-41be-a150-897f85d49829 MemoryOverwriteRequestControl
    bb983ccf-151d-40e1-a07b-4a17be168292 MemoryOverwriteRequestControlLock
    9d1947eb-09bb-4780-a3cd-bea956e0e056 PPIBuffer
    9d1947eb-09bb-4780-a3cd-bea956e0e056 Tcg2PhysicalPresenceFlagsLock
    eb704011-1402-11d3-8e77-00a0c969723b MTC
    8be4df61-93ca-11d2-aa0d-00e098032b8c Boot0000
    8be4df61-93ca-11d2-aa0d-00e098032b8c Timeout
    8be4df61-93ca-11d2-aa0d-00e098032b8c Lang
    8be4df61-93ca-11d2-aa0d-00e098032b8c PlatformLang
    8be4df61-93ca-11d2-aa0d-00e098032b8c ConIn
    8be4df61-93ca-11d2-aa0d-00e098032b8c ConOut
    8be4df61-93ca-11d2-aa0d-00e098032b8c ErrOut
    9d1947eb-09bb-4780-a3cd-bea956e0e056 Tcg2PhysicalPresenceFlags
    8be4df61-93ca-11d2-aa0d-00e098032b8c Key0000
    8be4df61-93ca-11d2-aa0d-00e098032b8c Key0001
    5b446ed1-e30b-4faa-871a-3654eca36080 0050569B1890
    937fe521-95ae-4d1a-8929-48bcd90ad31a 0050569B1890
    9fb9a8a1-2f4a-43a6-889c-d0f7b6c47ad5 ClientId
    8be4df61-93ca-11d2-aa0d-00e098032b8c Boot0003
    8be4df61-93ca-11d2-aa0d-00e098032b8c Boot0004
    8be4df61-93ca-11d2-aa0d-00e098032b8c Boot0005
    4c19049f-4137-4dd3-9c10-8b97a83ffdfa MemoryTypeInformation
    8be4df61-93ca-11d2-aa0d-00e098032b8c Boot0006
    8be4df61-93ca-11d2-aa0d-00e098032b8c BootOrder
    8c136d32-039a-4016-8bb4-9e985e62786f SecretKey
    8be4df61-93ca-11d2-aa0d-00e098032b8c Boot0001
    8be4df61-93ca-11d2-aa0d-00e098032b8c Boot0002

So the command would be:

    varstore-rm f9166a11-3c3f-33f1-505c-542ce8e1764d d719b2cb-3d3a-4596-a3bc-dad00e67656f dbx

Correct? Does "d719b2cb-3d3a-4596-a3bc-dad00e67656f" indicate that the old certs have been reinstalled?

-

@flakpyro It's in the middle. But the "d719b2cb-3d3a-4596-a3bc-dad00e67656f dbx" part is always the same across all VMs.

After reverting the updates and running secureboot-certs install again

This will create a pool-level dbx which does not have the problem seen in varstored-1.2.0-3.1. So as long as you propagated the variables to all affected VMs without any errors, there's no need to delete them manually. But you can always delete them again if you're not sure.
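A minimal sketch, not an official tool, for pulling the dbx GUID out of a `varstore-ls` listing like the one quoted above instead of scanning it by eye. It assumes, based on that listing, that `varstore-ls` prints one `GUID NAME` pair per line; `dbx_guid` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# dbx_guid (hypothetical helper): read varstore-ls output on stdin and print
# the GUID of the variable named exactly "dbx".
# Assumption: each line of varstore-ls output is "<GUID> <NAME>".
dbx_guid() {
  awk '$2 == "dbx" { print $1 }'
}

# Example use on an XCP-ng host (VM UUID taken from the listing in this thread):
#   varstore-ls f9166a11-3c3f-33f1-505c-542ce8e1764d | dbx_guid
```

Every VM in this thread showed the same GUID for `dbx`, so the helper mainly serves as a sanity check before running `varstore-rm`.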

-

@dinhngtu Ah, okay. I was wondering whether "d719b2cb-3d3a-4596-a3bc-dad00e67656f" indicated that I was back on the safe certs, since it has been the same on all VMs since I reverted and clicked "Copy the pools default UEFI Certificates to the VM".

So I need to run

    varstore-rm f9166a11-3c3f-33f1-505c-542ce8e1764d d719b2cb-3d3a-4596-a3bc-dad00e67656f dbx

while the VM is powered off, to be safe?

-

Awesome, thanks for the response. I took a snapshot and tried rebooting a VM, and it booted back up without issue, simply from clicking the propagate button on each affected VM after reverting and running `secureboot-certs install`.

-


@acebmxer Looks like it. You can recover by installing a preliminary test update here:

    wget https://koji.xcp-ng.org/repos/user/8/8.3/xcpng-users.repo -O /etc/yum.repos.d/xcpng-users.repo
    yum update --enablerepo=xcp-ng-ndinh1 varstored varstored-tools
    secureboot-certs clear

Then propagate SB certs to make sure that subsequent dbx updates will run normally.

Sorry for the inconvenience.

-

I was just getting ready to go update my pool... So is it safe now? And do these certs only affect Secure Boot VMs or UEFI VMs as well?

-

@dinhngtu Thank you for that reply.

Just to clarify: I have 4 hosts. I updated 2 of them to the latest updates once those became publicly available via pool updates. On the other 2 hosts, I had to push the second beta release to get the `secureboot-certs install` command to work. Then I pushed the rest of the updates again once they became public.

So far I'm only seeing 1 VM with the issue, on the latter 2 hosts that had the beta updates installed. Those hosts run that test PC, 1 Windows server (Printsrver), xoproxy, and xoce.

Should I run those commands on all 4 hosts, or just on the latter 2 with the troubled VM?

-

@Greg_E said in XCP-ng 8.3 updates announcements and testing:

I was just getting ready to go update my pool... So is it safe now? And do these certs only affect Secure Boot VMs or UEFI VMs as well?

This problem will affect UEFI VMs. See the announcement above for more details.

In short, if you haven't updated, you should be safe; the offending update has been withdrawn and is no longer available from `yum update` (as long as you're not using the testing branch).

@acebmxer said in XCP-ng 8.3 updates announcements and testing:


Should I run those commands on all 4 hosts, or just on the latter 2 with the troubled VM?

I haven't tested using different versions of varstored within the same pool.
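Since mixed varstored versions within one pool haven't been tested, it may help to confirm what each host is actually running. A minimal sketch to run on each host: it uses the standard `rpm -q --qf` query, and assumes release strings follow the pattern in the `yum downgrade varstored-1.2.0-2.3.xcpng8.3` command from this thread, so that the problem build (varstored-1.2.0-3.1) shows up as `1.2.0-3.1.*`. `check_varstored` is a hypothetical name.

```shell
#!/usr/bin/env bash
# check_varstored (hypothetical helper): report the installed varstored
# version-release and flag the 1.2.0-3.1 build discussed in this thread.
# Assumption: release strings look like "3.1.xcpng8.3".
check_varstored() {
  local v
  v=$(rpm -q --qf '%{VERSION}-%{RELEASE}\n' varstored) || return 1
  case "$v" in
    1.2.0-3.1 | 1.2.0-3.1.*) echo "problem build: $v" ;;
    *) echo "other build: $v" ;;
  esac
}

# Run on each host and compare the results:
#   check_varstored
```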

-

All 4 hosts have the same version. The point I was making is that they were not updated the same way at the same time, and that so far only 1 VM seems to be affected.

But I guess, to play it safe, I should downgrade all 4 hosts.

Edit: I ran the following commands on the master host of the pool with the problem VM. The VM now boots up.

Sorry for all the questions.

    wget https://koji.xcp-ng.org/repos/user/8/8.3/xcpng-users.repo -O /etc/yum.repos.d/xcpng-users.repo
    yum update --enablerepo=xcp-ng-ndinh1 varstored varstored-tools
    secureboot-certs clear

Should I run the above commands on all the remaining hosts?

Or should I run this command on any of the four hosts?

    yum downgrade varstored-1.2.0-2.3.xcpng8.3

-

@acebmxer You can check whether there are other affected VMs using this command:

    xe vm-list --minimal | xargs -d, -Ix sh -c "echo Scanning x 1>&2; varstore-ls x" > /dev/null

If there are still affected VMs, you must fix them on varstored-1.2.0-3.2 by running `secureboot-certs clear` and propagating the certs, before downgrading to 2.3 (if you wish). Note that downgrading to 2.3 with the pool certs cleared means you'll need to set up guest Secure Boot again, so I recommend staying on 3.2 if that's possible for you.
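The one-liner above prints a `Scanning` line for every VM, which makes a problem easy to miss in a large pool. A minimal sketch that prints only the UUIDs whose `varstore-ls` run emits an `unserialize_variables` message. It assumes that message goes to stderr (the one-liner discards stdout, yet the message still shows up in the outputs posted in this thread); `list_affected_vms` is a hypothetical name.

```shell
#!/usr/bin/env bash
# list_affected_vms (hypothetical helper): print the UUID of each VM whose
# NVRAM makes varstore-ls emit an "unserialize_variables" message.
# Assumption: that message is written to stderr, as in the scan output in this thread.
list_affected_vms() {
  local vm
  # xe --minimal returns a comma-separated list of UUIDs.
  for vm in $(xe vm-list --minimal | tr ',' ' '); do
    # 2>&1 >/dev/null: stderr goes into the pipe, the variable listing is discarded.
    if varstore-ls "$vm" 2>&1 >/dev/null | grep -q 'unserialize_variables'; then
      echo "$vm"
    fi
  done
}

# Run on any one host; like the one-liner, it covers the whole pool:
#   list_affected_vms
```

An empty result means no VM produced the message.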

-

@dinhngtu That command seems to just output "Scanning" followed by every UUID in my pool, most of which do not use SB. I'm guessing that's normal and I no longer have any affected VMs?

-

I opened a support ticket #7746470

I get the following from different hosts:

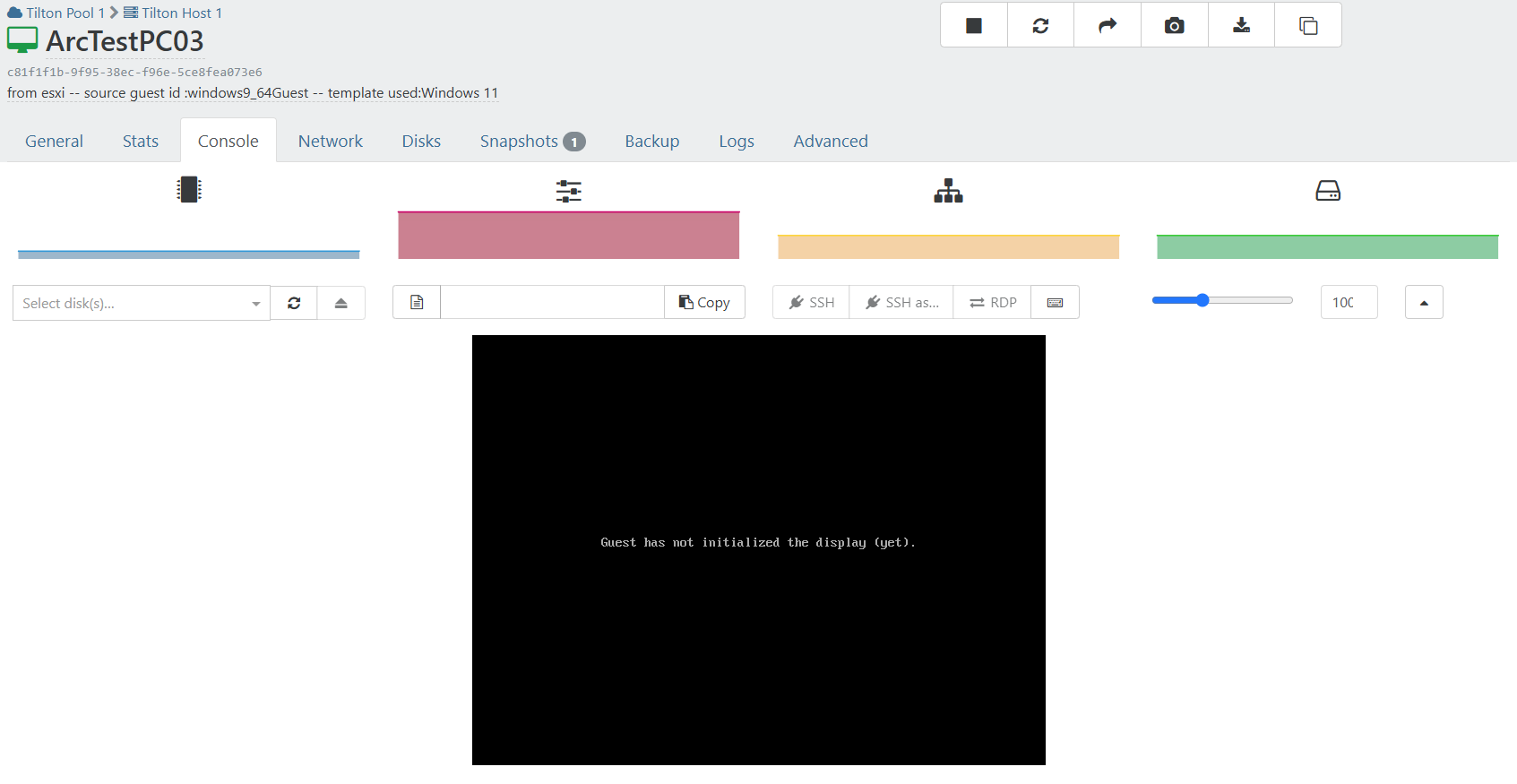

Pool 1 master host:

    [09:48 xcp-ng-vyadytkn ~]# xe vm-list --minimal | xargs -d, -Ix sh -c "echo Scanning x 1>&2; varstore-ls x" > /dev/null
    Scanning ab9e8cde-fad8-5089-29c8-c904a810857a
    Scanning d2025b59-6268-6bb9-28cd-e4812395563c
    Scanning 4567b8da-3a3c-00a4-cf6b-ccdae302a645
    Scanning 291f9d05-88c8-fc37-107a-368a55c649be
    Scanning 88d7ba09-e65a-dd82-8d45-ec3df3e3f9d4
    Scanning bcc182c6-dbb7-33c4-3e8b-c7db9b246e7b
    Scanning 5a2c18ca-e9ce-abfc-8bd7-2794f94c6c3a
    Scanning f4a4669a-2baf-47f2-b16a-08f60963e922
    Scanning d4352870-d73c-5cda-9470-ffd7cd01e251
    Scanning b0c21701-7309-dd9c-fa2a-bdc84a24565e
    Scanning 342b470e-fac9-6579-f82d-2517b8de6112
    Scanning 2c21c005-9230-f3f3-cdf6-2d39407b3456
    Scanning 102a5ac2-ab7f-68ca-9fa1-b549d64ec4a9
    Scanning c9dadc90-6fb8-eee7-d3e5-e1ae1024a77f
    Scanning 5703adef-d804-6b15-ba2f-7b3357a711bb
    Scanning a8858f86-ceab-2d5d-5d46-1b083c3fa399
    Scanning 82b191df-a63c-4357-b618-4467f714008a

Pool 2 master host (problem VM):

    [10:02 localhost ~]# xe vm-list --minimal | xargs -d, -Ix sh -c "echo Scanning x 1>&2; varstore-ls x" > /dev/null
    Scanning ece6db75-533b-5359-1a99-28d5dc1a6912
    Scanning c81f1f1b-9f95-38ec-f96e-5ce8fea073e6
    unserialize_variables: Tolerating oversized data 60440 > 57344
    Scanning 4fb6dee8-c251-93e6-0c20-b1c1c67351ba
    Scanning 8a570993-6522-c837-0931-6fd1812dbd96
    Scanning b31749b8-bc1a-0e8a-a864-50a06391592e
    Scanning bf1b61d5-14ca-9f0e-7040-24c177693cea
    Scanning af6eeff7-5640-28a9-dbdb-87f094a04665
    Scanning 43ba924b-8bf7-4a50-b258-2e977f6f2f0c
    Scanning 5a625c92-3702-2ce6-1790-39497745e83c
    Scanning 9f36a8c5-c988-8546-b0f9-30ac9d0a5015
    Scanning a7f5b1bb-7fe2-d0b5-5187-f29e5d4d15d7

-

My production pool upgraded fine as far as I can tell, but things are not so good with the Windows drivers; I'll make a separate thread about the drivers.

-

@flakpyro said in XCP-ng 8.3 updates announcements and testing:

@dinhngtu That command seems to just output "Scanning" followed by every UUID in my pool, most of which do not use SB. I'm guessing that's normal and I no longer have any affected VMs?

Yes, it'll produce an `unserialize_variables` error message when there's a problem.

@acebmxer Scanning on 1 host will scan the whole pool at once.

In the output you posted, only `c81f1f1b-9f95-38ec-f96e-5ce8fea073e6` needs fixing.

-

@acebmxer Scanning on 1 host will scan the whole pool at once.

In the output you posted, only `c81f1f1b-9f95-38ec-f96e-5ce8fea073e6` needs fixing.

That is the same pool/host I ran the following commands on:

    wget https://koji.xcp-ng.org/repos/user/8/8.3/xcpng-users.repo -O /etc/yum.repos.d/xcpng-users.repo
    yum update --enablerepo=xcp-ng-ndinh1 varstored varstored-tools
    secureboot-certs clear

The VM being called out is the one that would not boot, and it boots now after running the above commands.

Is this the command I need to run?

    varstore-rm c81f1f1b-9f95-38ec-f96e-5ce8fea073e6 d719b2cb-3d3a-4596-a3bc-dad00e67656f dbx

-

@acebmxer Yes, that'll fix the situation.

Note that we're discussing a different approach for the official update, which will involve using a manual repair tool.
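A minimal sketch of the `varstore-rm` fix for a single VM, followed by the same `unserialize_variables` check as the scan one-liner. It hard-codes the dbx GUID quoted throughout this thread and assumes the message goes to stderr; `fix_vm_dbx` is a hypothetical name. The thread only raised the question of doing this with the VM powered off, so shutting the VM down first is a cautious choice rather than a confirmed requirement. This is not the manual repair tool mentioned above.

```shell
#!/usr/bin/env bash
# fix_vm_dbx (hypothetical helper): remove a VM's dbx variable, then re-check
# that varstore-ls no longer reports an "unserialize_variables" problem.
# Assumption: the VM is shut down beforehand, to be safe.
fix_vm_dbx() {
  local vm="$1"
  # dbx GUID as it appears in the varstore-ls listings in this thread.
  local guid="d719b2cb-3d3a-4596-a3bc-dad00e67656f"

  varstore-rm "$vm" "$guid" dbx || return 1

  # Keep only stderr, as in the scan one-liner.
  if varstore-ls "$vm" 2>&1 >/dev/null | grep -q 'unserialize_variables'; then
    echo "still affected: $vm" >&2
    return 1
  fi
  echo "fixed: $vm"
}

# Example, using the VM from this thread:
#   fix_vm_dbx c81f1f1b-9f95-38ec-f96e-5ce8fea073e6
```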

-

@dinhngtu

Thank you for all the help. See the output below. Please let me know if you would like me to test anything on my side.

    [10:02 localhost ~]# xe vm-list --minimal | xargs -d, -Ix sh -c "echo Scanning x 1>&2; varstore-ls x" > /dev/null
    Scanning ece6db75-533b-5359-1a99-28d5dc1a6912
    Scanning c81f1f1b-9f95-38ec-f96e-5ce8fea073e6
    unserialize_variables: Tolerating oversized data 60440 > 57344
    Scanning 4fb6dee8-c251-93e6-0c20-b1c1c67351ba
    Scanning 8a570993-6522-c837-0931-6fd1812dbd96
    Scanning b31749b8-bc1a-0e8a-a864-50a06391592e
    Scanning bf1b61d5-14ca-9f0e-7040-24c177693cea
    Scanning af6eeff7-5640-28a9-dbdb-87f094a04665
    Scanning 43ba924b-8bf7-4a50-b258-2e977f6f2f0c
    Scanning 5a625c92-3702-2ce6-1790-39497745e83c
    Scanning 9f36a8c5-c988-8546-b0f9-30ac9d0a5015
    Scanning a7f5b1bb-7fe2-d0b5-5187-f29e5d4d15d7
    [10:23 localhost ~]# varstore-rm c81f1f1b-9f95-38ec-f96e-5ce8fea073e6 d719b2cb-3d3a-4596-a3bc-dad00e67656f dbx
    unserialize_variables: Tolerating oversized data 60440 > 57344
    [11:40 localhost ~]# xe vm-list --minimal | xargs -d, -Ix sh -c "echo Scanning x 1>&2; varstore-ls x" > /dev/null
    Scanning ece6db75-533b-5359-1a99-28d5dc1a6912
    Scanning c81f1f1b-9f95-38ec-f96e-5ce8fea073e6
    Scanning 4fb6dee8-c251-93e6-0c20-b1c1c67351ba
    Scanning 8a570993-6522-c837-0931-6fd1812dbd96
    Scanning b31749b8-bc1a-0e8a-a864-50a06391592e
    Scanning bf1b61d5-14ca-9f0e-7040-24c177693cea
    Scanning af6eeff7-5640-28a9-dbdb-87f094a04665
    Scanning 43ba924b-8bf7-4a50-b258-2e977f6f2f0c
    Scanning 5a625c92-3702-2ce6-1790-39497745e83c
    Scanning 9f36a8c5-c988-8546-b0f9-30ac9d0a5015
    Scanning a7f5b1bb-7fe2-d0b5-5187-f29e5d4d15d7
    [11:40 localhost ~]#

-

@acebmxer All looks good from the output. There might be some VMs that need fixes for Windows dbx updates to work properly; I'll try to add that in the official version.