@rtjdamen I understand now

Posts

-

RE: CBT: the thread to centralize your feedback

@rtjdamen Thanks for the super fast response!

Just removed the existing snapshots and the task is proceeding.

Did you mean "CBT is enabled", as opposed to "CBT is invalid"?

-

RE: CBT: the thread to centralize your feedback

I was looking to apply some updates to our TrueNAS Scale device, which provides an NFS share to our XCP-ng hosts (8.2.1). We have CBT enabled for backups.

However, when I try to move the Xen Orchestra VDI from TrueNAS to local storage, I receive the following error:

{ "id": "0m261vorl", "properties": { "method": "vdi.migrate", "params": { "id": "f91f81f2-308d-4de9-879e-c1fa84a37d27", "sr_id": "49822b62-3367-7e7c-76ee-1cfc91a262e9" }, "name": "API call: vdi.migrate", "userId": "7b63bade-51f3-4916-9174-f969da17774a", "type": "api.call" }, "start": 1728731129889, "status": "failure", "updatedAt": 1728731132752, "end": 1728731132752, "result": { "code": "VDI_CBT_ENABLED", "params": [ "OpaqueRef:4f16cd0e-fbaf-48c3-aae4-092b9906b9e4" ], "task": { "uuid": "7ce61fba-d6d3-12cb-2585-79d5b69d3857", "name_label": "Async.VDI.pool_migrate", "name_description": "", "allowed_operations": [], "current_operations": {}, "created": "20241012T11:05:31Z", "finished": "20241012T11:05:32Z", "status": "failure", "resident_on": "OpaqueRef:fe0440a3-4a31-44d6-8317-a0e64d0ee01e", "progress": 1, "type": "<none/>", "result": "", "error_info": [ "VDI_CBT_ENABLED", "OpaqueRef:4f16cd0e-fbaf-48c3-aae4-092b9906b9e4" ], "other_config": {}, "subtask_of": "OpaqueRef:NULL", "subtasks": [], "backtrace": "(((process xapi)(filename ocaml/xapi-client/client.ml)(line 7))((process xapi)(filename ocaml/xapi-client/client.ml)(line 19))((process xapi)(filename ocaml/xapi-client/client.ml)(line 12359))((process xapi)(filename lib/xapi-stdext-pervasives/pervasiveext.ml)(line 24))((process xapi)(filename lib/xapi-stdext-pervasives/pervasiveext.ml)(line 35))((process xapi)(filename ocaml/xapi/message_forwarding.ml)(line 134))((process xapi)(filename lib/xapi-stdext-pervasives/pervasiveext.ml)(line 24))((process xapi)(filename lib/xapi-stdext-pervasives/pervasiveext.ml)(line 35))((process xapi)(filename lib/xapi-stdext-pervasives/pervasiveext.ml)(line 24))((process xapi)(filename ocaml/xapi/rbac.ml)(line 205))((process xapi)(filename ocaml/xapi/server_helpers.ml)(line 95)))" }, "message": "VDI_CBT_ENABLED(OpaqueRef:4f16cd0e-fbaf-48c3-aae4-092b9906b9e4)", "name": "XapiError", "stack": "XapiError: VDI_CBT_ENABLED(OpaqueRef:4f16cd0e-fbaf-48c3-aae4-092b9906b9e4)\n at Function.wrap (file:///opt/xo/xo-builds/xen-orchestra-202410111017/packages/xen-api/_XapiError.mjs:16:12)\n at default (file:///opt/xo/xo-builds/xen-orchestra-202410111017/packages/xen-api/_getTaskResult.mjs:13:29)\n at Xapi._addRecordToCache (file:///opt/xo/xo-builds/xen-orchestra-202410111017/packages/xen-api/index.mjs:1041:24)\n at file:///opt/xo/xo-builds/xen-orchestra-202410111017/packages/xen-api/index.mjs:1075:14\n at Array.forEach (<anonymous>)\n at Xapi._processEvents (file:///opt/xo/xo-builds/xen-orchestra-202410111017/packages/xen-api/index.mjs:1065:12)\n at Xapi._watchEvents (file:///opt/xo/xo-builds/xen-orchestra-202410111017/packages/xen-api/index.mjs:1238:14)" } }

I can see a task disabling CBT on the disk, and the UI shows CBT as disabled.

I see the same issue when attempting to migrate other VDIs too.
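Later in this thread the migration went through once the existing snapshots were removed, even though the disk itself showed CBT as disabled. A minimal Python sketch for checking, before migrating, whether the disk or any of its snapshots still reports CBT as enabled. It assumes the XenAPI Python bindings (`session.xenapi`) and only calls `VDI.get_by_uuid`, `VDI.get_snapshots`, `VDI.get_cbt_enabled` and `VDI.get_uuid`; the helper name `cbt_blockers` is hypothetical, and the idea that CBT-enabled snapshots are what trigger `VDI_CBT_ENABLED` is an assumption based on this thread, not on XAPI documentation.

```python
def cbt_blockers(xapi, vdi_uuid):
    """Return UUIDs of the VDI and/or its snapshots that still have CBT enabled.

    `xapi` is assumed to be a XenAPI-style proxy such as `session.xenapi`.
    Hypothetical helper: it only reads state, it does not change anything.
    """
    vdi_ref = xapi.VDI.get_by_uuid(vdi_uuid)
    # Check the disk itself first, then every snapshot taken from it.
    candidates = [vdi_ref] + list(xapi.VDI.get_snapshots(vdi_ref))
    return [
        xapi.VDI.get_uuid(ref)
        for ref in candidates
        if xapi.VDI.get_cbt_enabled(ref)
    ]


# Example usage against a real pool (placeholder host and credentials):
# import XenAPI
# session = XenAPI.Session("https://pool-master.example")
# session.xenapi.login_with_password("root", "password")
# print(cbt_blockers(session.xenapi, "f91f81f2-308d-4de9-879e-c1fa84a37d27"))
```

An empty list means neither the disk nor its snapshots report CBT; any UUIDs returned are the objects worth looking at before retrying the move.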

-

RE: VM must be a snapshot

The problem still occurred on the latest commit as of earlier today.

I removed the VM from the original (Smart Mode) backup job and cleaned up any VDIs and detached backups. As a test, I created a separate backup job for the VM in question, pointing to the same target; this backup was successful.

Hopefully I've worked around the issue; I will try adding the VM back into a Smart Mode job in the coming days.
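When comparing backup runs like the ones logged in this thread, it can help to pull out only the failing tasks. A minimal Python sketch, assuming the JSON layout visible in the logs posted here (each task has `message`, `status`, optional nested `tasks`, and on failure a `result` object with a `message`); `failed_tasks` and `summarize` are hypothetical helpers, not part of Xen Orchestra.

```python
import json


def failed_tasks(log, path=()):
    """Yield (task path, error message) for every failed task in a backup log."""
    for task in log.get("tasks", []):
        here = path + (task.get("message", "?"),)
        if task.get("status") == "failure":
            result = task.get("result")
            # `result` may be missing or not a dict (e.g. a snapshot UUID string).
            message = result.get("message") if isinstance(result, dict) else None
            yield " > ".join(here), message
        # Recurse so failures in subtasks (export, transfer, ...) are reported too.
        yield from failed_tasks(task, here)


def summarize(log_path):
    """Load a backup log saved as JSON and list its failing tasks."""
    with open(log_path) as fh:
        return list(failed_tasks(json.load(fh)))
```

Applied to the "VM must be a snapshot" logs in this thread, it would report the `backup VM` task with that message. In the longer log the snapshot, transfer and clean-vm subtasks all succeed, which suggests the failure comes from the snapshot cleanup step (`_removeUnusedSnapshots` in the stack trace) rather than from the transfer itself.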

-

RE: VM must be a snapshot

With the latest commit on master (72592), here is the resulting complete backup log:

{ "data": { "mode": "delta", "reportWhen": "failure" }, "id": "1724152335898", "jobId": "5e0a3dca-5c7f-4459-9b57-6af74a01d812", "jobName": "Production every other day", "message": "backup", "scheduleId": "1f6ca79c-0e6e-460e-bad5-5e984ec28ef5", "start": 1724152335898, "status": "failure", "infos": [ { "data": { "vms": [ "45df57b9-e2d9-ed2f-e467-345bd6f10296" ] }, "message": "vms" } ], "tasks": [ { "data": { "type": "VM", "id": "45df57b9-e2d9-ed2f-e467-345bd6f10296", "name_label": "sv-uts" }, "id": "1724152337383", "message": "backup VM", "start": 1724152337383, "status": "failure", "tasks": [ { "id": "1724152337409", "message": "clean-vm", "start": 1724152337409, "status": "success", "end": 1724152339005, "result": { "merge": false } }, { "id": "1724152339972", "message": "snapshot", "start": 1724152339972, "status": "success", "end": 1724152344659, "result": "3d4f7f45-753f-6f58-464a-53dc1d63c054" }, { "data": { "id": "179129c4-10c2-40f8-ba64-534ff4dc7da4", "isFull": true, "type": "remote" }, "id": "1724152344660", "message": "export", "start": 1724152344660, "status": "success", "tasks": [ { "id": "1724152350897", "message": "transfer", "start": 1724152350897, "status": "success", "end": 1724158256392, "result": { "size": 80103683584 } }, { "id": "1724158257369", "message": "clean-vm", "start": 1724158257369, "status": "success", "end": 1724158259162, "result": { "merge": false } } ], "end": 1724158259163 } ], "end": 1724158259163, "result": { "generatedMessage": false, "code": "ERR_ASSERTION", "actual": false, "expected": true, "operator": "strictEqual", "message": "VM must be a snapshot", "name": "AssertionError", "stack": "AssertionError [ERR_ASSERTION]: VM must be a snapshot\n at Array.<anonymous> (file:///opt/xo/xo-builds/xen-orchestra-202408201207/@xen-orchestra/backups/_runners/_vmRunners/_AbstractXapi.mjs:245:20)\n at Function.from (<anonymous>)\n at asyncMap (/opt/xo/xo-builds/xen-orchestra-202408201207/@xen-orchestra/async-map/index.js:23:28)\n at Array.<anonymous> (file:///opt/xo/xo-builds/xen-orchestra-202408201207/@xen-orchestra/backups/_runners/_vmRunners/_AbstractXapi.mjs:233:13)\n at Function.from (<anonymous>)\n at asyncMap (/opt/xo/xo-builds/xen-orchestra-202408201207/@xen-orchestra/async-map/index.js:23:28)\n at IncrementalXapiVmBackupRunner._removeUnusedSnapshots (file:///opt/xo/xo-builds/xen-orchestra-202408201207/@xen-orchestra/backups/_runners/_vmRunners/_AbstractXapi.mjs:219:11)\n at IncrementalXapiVmBackupRunner.run (file:///opt/xo/xo-builds/xen-orchestra-202408201207/@xen-orchestra/backups/_runners/_vmRunners/_AbstractXapi.mjs:384:18)\n at process.processTicksAndRejections (node:internal/process/task_queues:95:5)\n at async file:///opt/xo/xo-builds/xen-orchestra-202408201207/@xen-orchestra/backups/_runners/VmsXapi.mjs:166:38" } } ], "end": 1724158259164 }

-

RE: VM must be a snapshot

@olivierlambert Just checked: we are one commit behind on master.

I will look to update now.

-

VM must be a snapshot

We are using XO from sources (latest) against a pool of two XCP-ng 8.2.1 hosts (latest).

One VM appears to be failing its delta backup to an offsite S3 target with the error 'VM must be a snapshot'. We checked both the backup health and the main pool health; no warnings are shown for snapshots or VDIs.

We did try removing the existing snapshot and migrating the VM between hosts, but the error reappears.

Log file:

{ "data": { "mode": "delta", "reportWhen": "failure" }, "id": "1724150430187", "jobId": "5e0a3dca-5c7f-4459-9b57-6af74a01d812", "jobName": "Production every other day", "message": "backup", "scheduleId": "1f6ca79c-0e6e-460e-bad5-5e984ec28ef5", "start": 1724150430187, "status": "failure", "infos": [ { "data": { "vms": [ "45df57b9-e2d9-ed2f-e467-345bd6f10296" ] }, "message": "vms" } ], "tasks": [ { "data": { "type": "VM", "id": "45df57b9-e2d9-ed2f-e467-345bd6f10296", "name_label": "server" }, "id": "1724150431672", "message": "backup VM", "start": 1724150431672, "status": "failure", "tasks": [ { "id": "1724150431700", "message": "clean-vm", "start": 1724150431700, "status": "success", "end": 1724150433318, "result": { "merge": false } }, { "id": "1724150434097", "message": "clean-vm", "start": 1724150434097, "status": "success", "end": 1724150435469, "result": { "merge": false } } ], "end": 1724150435471, "result": { "generatedMessage": false, "code": "ERR_ASSERTION", "actual": false, "expected": true, "operator": "strictEqual", "message": "VM must be a snapshot", "name": "AssertionError", "stack": "AssertionError [ERR_ASSERTION]: VM must be a snapshot\n at Array.<anonymous> (file:///opt/xo/xo-builds/xen-orchestra-202408191446/@xen-orchestra/backups/_runners/_vmRunners/_AbstractXapi.mjs:245:20)\n at Function.from (<anonymous>)\n at asyncMap (/opt/xo/xo-builds/xen-orchestra-202408191446/@xen-orchestra/async-map/index.js:23:28)\n at Array.<anonymous> (file:///opt/xo/xo-builds/xen-orchestra-202408191446/@xen-orchestra/backups/_runners/_vmRunners/_AbstractXapi.mjs:233:13)\n at Function.from (<anonymous>)\n at asyncMap (/opt/xo/xo-builds/xen-orchestra-202408191446/@xen-orchestra/async-map/index.js:23:28)\n at IncrementalXapiVmBackupRunner._removeUnusedSnapshots (file:///opt/xo/xo-builds/xen-orchestra-202408191446/@xen-orchestra/backups/_runners/_vmRunners/_AbstractXapi.mjs:219:11)\n at IncrementalXapiVmBackupRunner.run (file:///opt/xo/xo-builds/xen-orchestra-202408191446/@xen-orchestra/backups/_runners/_vmRunners/_AbstractXapi.mjs:354:16)\n at process.processTicksAndRejections (node:internal/process/task_queues:95:5)\n at async file:///opt/xo/xo-builds/xen-orchestra-202408191446/@xen-orchestra/backups/_runners/VmsXapi.mjs:166:38" } } ], "end": 1724150435472 }

-

Backup of Windows VM failing Health Check [Solved]

Issue Encountered:

A backup of a Windows VM failed a health check overnight, with the error message:

waitObjectState: timeout reached before OpaqueRef

Manual Check:

Performed a manual backup and health check, which resulted in the same error.

Diagnosis:

Connected to the VM using Xen Orchestra and monitored the console. Discovered that a Windows update was being applied during the VM boot process, which prevented the VM from booting correctly from the perspective of Xen Orchestra (XO).

Resolution:

Applying the outstanding Windows update and rebooting the VM resolved the issue; the backup and health check succeeded afterward.

The problem was essentially due to the VM being in an update state during the health check, which prevented XO from properly detecting and managing the VM's state. Applying the update and rebooting ensured the VM was in a proper state for backup and health check operations.
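As we understand it, a health check boots the restored VM and waits, with a time limit, for it to reach an expected state; a VM busy installing updates never gets there in time. The Python sketch below only illustrates that generic poll-until-timeout pattern. The function name, parameters and state strings are hypothetical, and this is not Xen Orchestra's actual `waitObjectState` code.

```python
import time


def wait_for_state(get_state, predicate, timeout=60.0, interval=1.0):
    """Poll get_state() until predicate(state) is true or `timeout` seconds pass.

    Hypothetical helper illustrating why a slow boot surfaces as a timeout.
    """
    deadline = time.monotonic() + timeout
    while True:
        state = get_state()
        if predicate(state):
            return state
        if time.monotonic() >= deadline:
            # Mirrors the shape of a "timeout reached before ..." error.
            raise TimeoutError(f"timeout reached before expected state (last: {state!r})")
        time.sleep(interval)
```

A VM that reports "running" within the limit returns normally, while one stuck on "installing updates" raises `TimeoutError`, which is why applying the update first made the health check pass.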

-

RE: VMware migration tool: we need your feedback!

@danp

I was having a similar issue with OVA imports in Chrome; after switching to Firefox, I was able to import the OVA. I then went back to the reverse proxy setup and tried again in Firefox, and I currently have two VMs importing directly from VMware.

-

RE: VMware migration tool: we need your feedback!

@Danp No error messages, and nothing in either Settings > Logs or the CLI (journalctl).

It could be due to the age of the ESXi host, or the fact that I am using an SSL reverse proxy to cope with the legacy ciphers.

I'll look to export the VMs as OVAs and import them that way.

-

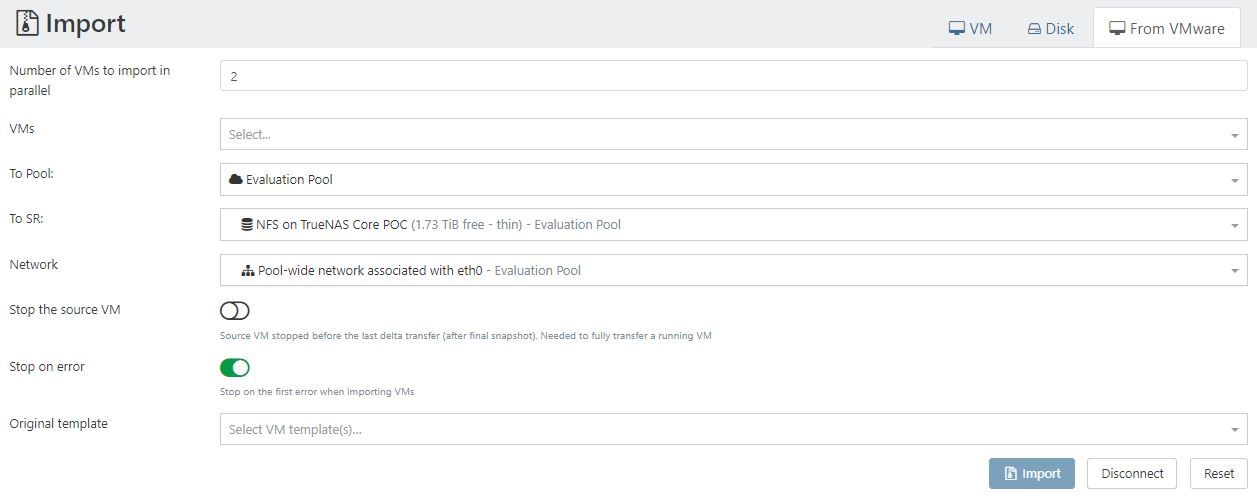

RE: VMware migration tool: we need your feedback!

Could anyone explain what we should be setting for 'Original Template' in the Import VM screen?

Selecting a template appears to create a new empty VM with no apparent attempt to perform the migration.

Using Xen Orchestra from Sources (commit 636c8) against an ESXi 5.1 host, via an nginx reverse proxy (to work around legacy ciphers).

-

RE: Restoring from backup error: self-signed certificate

According to a closed issue on ronivay's installer repository, the problem appears to be resolved:

https://github.com/ronivay/XenOrchestraInstallerUpdater/issues/227

I've just updated to latest from Branch="Master" and successfully performed a health check.

-

RE: VMware migration tool: we need your feedback!

Gave this a try earlier with some changes to my openssl.cnf.

Sadly, I am still hitting the same error. Is there a minimum ESXi version that the import tool will connect to?

-

RE: VMware migration tool: we need your feedback!

@florent I still appear to be receiving the same error after updating to 0f0c0ec:

write EPROTO C0D7ADA7B77F0000:error:0A000102:SSL routines:ssl_choose_client_version:unsupported protocol:../deps/openssl/openssl/ssl/statem/statem_lib.c:1987:

Any further thoughts?

-

RE: VMware migration tool: we need your feedback!

@florent Just updated from sources, but my latest commit appears to be 0f0c0ec0d. Have I missed something?

-

RE: VMware migration tool: we need your feedback!

Thanks for the quick response; the same error appears to persist.

Running the curl command gives:

* Trying 192.168.xx.yy:443... * Connected to 192.168.xx.yy (192.168.xx.yy) port 443 (#0) * ALPN, offering h2 * ALPN, offering http/1.1 * successfully set certificate verify locations: * CAfile: /etc/ssl/certs/ca-certificates.crt * CApath: /etc/ssl/certs * TLSv1.3 (OUT), TLS handshake, Client hello (1): * TLSv1.3 (IN), TLS handshake, Server hello (2): * TLSv1.3 (OUT), TLS alert, protocol version (582): * error:1425F102:SSL routines:ssl_choose_client_version:unsupported protocol * Closing connection 0 curl: (35) error:1425F102:SSL routines:ssl_choose_client_version:unsupported protocol

Performing the same check with --tlsv1.0 gives:

* Trying 192.168.xx.yy:443... * Connected to 192.168.xx.yy (192.168.xx.yy) port 443 (#0) * ALPN, offering h2 * ALPN, offering http/1.1 * successfully set certificate verify locations: * CAfile: /etc/ssl/certs/ca-certificates.crt * CApath: /etc/ssl/certs * TLSv1.3 (OUT), TLS handshake, Client hello (1): * TLSv1.3 (IN), TLS handshake, Server hello (2): * TLSv1.0 (IN), TLS handshake, Certificate (11): * TLSv1.0 (OUT), TLS alert, unknown CA (560): * SSL certificate problem: unable to get local issuer certificate * Closing connection 0 curl: (60) SSL certificate problem: unable to get local issuer certificate More details here: https://curl.se/docs/sslcerts.html curl failed to verify the legitimacy of the server and therefore could not establish a secure connection to it. To learn more about this situation and how to fix it, please visit the web page mentioned above.

Not sure if this helps.
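The curl output suggests the ESXi host only completes a handshake once TLS 1.0 is allowed, after which the remaining failure is the untrusted (self-signed) certificate. A minimal Python sketch for repeating that probe from the XO machine, roughly equivalent to `curl --tlsv1.0 -k`. It assumes Python 3.7+ built against OpenSSL; `legacy_tls_context` and `negotiated_version` are hypothetical helpers. It only shows what the host will negotiate and does not change the TLS settings used by XO's own Node.js process.

```python
import socket
import ssl


def legacy_tls_context(verify=False):
    """Client context that still permits TLS 1.0 (deprecated; for probing old hosts only)."""
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_CLIENT)
    ctx.minimum_version = ssl.TLSVersion.TLSv1
    # OpenSSL 3 refuses TLS 1.0 at its default security level; lower it to 0.
    ctx.set_ciphers("DEFAULT:@SECLEVEL=0")
    if not verify:
        # Like curl -k: hostname checking must be disabled before CERT_NONE is allowed.
        ctx.check_hostname = False
        ctx.verify_mode = ssl.CERT_NONE
    return ctx


def negotiated_version(host, port=443, timeout=5.0):
    """Connect once and return the protocol string the server agreed to, e.g. 'TLSv1'."""
    ctx = legacy_tls_context()
    with socket.create_connection((host, port), timeout=timeout) as sock:
        with ctx.wrap_socket(sock, server_hostname=host) as tls:
            return tls.version()
```

For example, `print(negotiated_version("192.168.xx.yy"))` (placeholder address) would presumably report `TLSv1` for a host behaving like the one above.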

-

RE: VMware migration tool: we need your feedback!

@florent Thanks for reaching out

Updated XO from sources to the commit from the branch.

When I attempt the import from VMware, no error is shown in the UI and the connect button just spins. However, checking the logs, I see the following error (with "skip SSL" either enabled or disabled):

write EPROTO C0F754130E7F0000:error:0A000102:SSL routines:ssl_choose_client_version:unsupported protocol:../deps/openssl/openssl/ssl/statem/statem_lib.c:1987:

-

RE: VMware migration tool: we need your feedback!

@florent thanks for the response

-

RE: VMware migration tool: we need your feedback!

This was my initial thought: I tried lowering MinProtocol to TLSv1.0 in openssl.cnf and recompiling from source, but the error persisted.

Worst case I can look at manually exporting and importing the VMs.

-

RE: VMware migration tool: we need your feedback!

When I try the import from the UI directly, I receive the following in the logs:

write EPROTO C0A77278D27F0000:error:0A000102:SSL routines:ssl_choose_client_version:unsupported protocol:../deps/openssl/openssl/ssl/statem/statem_lib.c:1987:

I am using Xen Orchestra from sources (commit 6fe79):

xo-server 5.116.3

xo-web 5.119.1