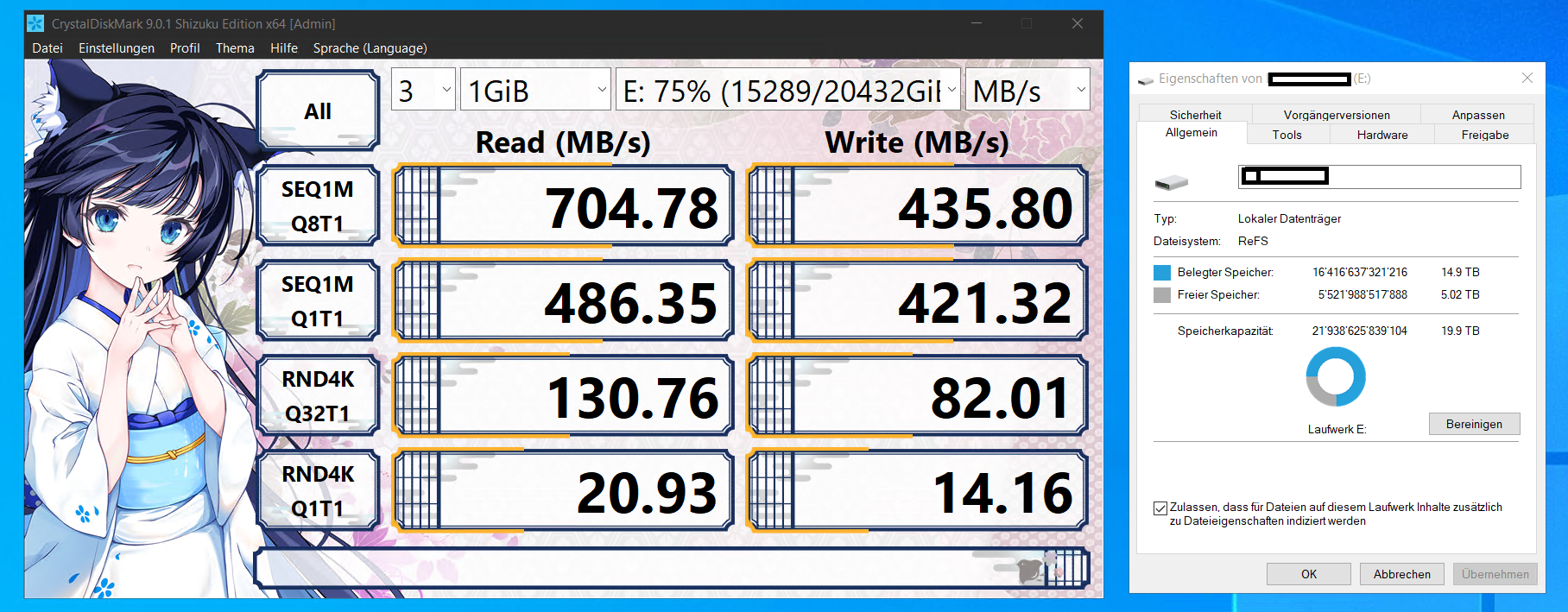

This benchmark was taken inside the Windows Server VM on that iSCSI device.

The iSCSI host is a QNAP NAS with Toshiba 18 TB drives configured in RAID-6.

I think this is a decent speed for non-flash storage. Both devices are on a 10 Gbit/s network.

If you need more specific details, I'll be happy to help.

Posts

-

RE: VDI disk limit 2TB - How to convert from vmware 7Tb?

I also have Windows Server VMs with large disks of around 18TB, which are on a QNAP NAS mounted via iSCSI. This setup works just fine, and the performance is good on a 10 Gbit/s network.

-

RE: Warm migration stuck at 0%

I'm experiencing the same issue with the latest commit in XO from the sources.

I reverted to an older version, and it worked again.

-

RE: Veeam and XCP-ng

@Tristis-Oris said in Veeam and XCP-ng:

it has nothing to do with xen or provisioning type.

Then what is the root cause of this error below? I mean I can read: 'There is insufficient space.'
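For anyone who would rather not read the raw log by eye, here is a minimal Python sketch that walks a job log shaped like the one at the end of this post and lists every failed task that carries an error code. The function name `failed_tasks` and the trimmed-down `sample` dictionary are illustrative assumptions; only the key names (`tasks`, `status`, `result`, `code`, `message`) are taken from the log itself.

```python
def failed_tasks(node, path=()):
    """Yield (task path, error code, error message) for each failed task with a result."""
    here = path + (node.get("message", "?"),)
    result = node.get("result")
    # Only report tasks that failed AND carry an error code in their result.
    if node.get("status") == "failure" and isinstance(result, dict) and "code" in result:
        yield " > ".join(here), result["code"], result.get("message", "")
    # Sub-tasks are nested under the "tasks" key at every level.
    for child in node.get("tasks", []):
        yield from failed_tasks(child, here)


# Hypothetical, heavily trimmed stand-in for the job log (same keys, most fields omitted).
error = {
    "code": "SR_BACKEND_FAILURE_44",
    "message": "SR_BACKEND_FAILURE_44(, There is insufficient space, )",
}
sample = {
    "message": "backup",
    "status": "failure",
    "tasks": [
        {
            "message": "backup VM",
            "status": "failure",
            "result": error,
            "tasks": [
                {"message": "snapshot", "status": "failure", "result": error},
                {"message": "clean-vm", "status": "success", "result": {"merge": False}},
            ],
        }
    ],
}

for where, code, message in failed_tasks(sample):
    print(f"{where}: {code} ({message})")
```

On the trimmed sample this reports the `backup VM` task and its `snapshot` sub-task, both with `SR_BACKEND_FAILURE_44`, which matches what can be read from the full log.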

As I understand it, if XCP is creating a snapshot on an SR with thick provisioning, it takes up the same amount of storage as the original VDI.
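To make that arithmetic concrete, here is a rough Python sketch of the check, taking the understanding above at face value: on a thick-provisioned SR a snapshot reserves the full virtual size of each VDI, while on a thin-provisioned SR it needs close to nothing up front. The function names and all sizes are made-up illustrations, not values from my setup, and real SR drivers may account for space differently.

```python
GIB = 1024 ** 3


def space_needed_for_snapshot(vdi_virtual_sizes, thick):
    """Bytes to reserve for one snapshot of the given VDIs.

    Assumption: thick provisioning reserves each VDI's full virtual size,
    thin provisioning reserves (approximately) nothing at snapshot time.
    """
    return sum(vdi_virtual_sizes) if thick else 0


def snapshot_fits(sr_free_bytes, vdi_virtual_sizes, thick=True):
    """True if the SR has enough free space for the snapshot under the model above."""
    return sr_free_bytes >= space_needed_for_snapshot(vdi_virtual_sizes, thick)


# Hypothetical example: one 500 GiB VDI on an SR with 120 GiB free.
vdis = [500 * GIB]
free = 120 * GIB
print("thick:", snapshot_fits(free, vdis, thick=True))
print("thin: ", snapshot_fits(free, vdis, thick=False))
```

Under this model the thick-provisioned case fails as soon as free space drops below the size of the disk being snapshotted, which is the situation I ran into.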

So, I was trapped because of my mistake. But with Veeam and its Linux Agent, I could back it up regardless of insufficient space.

That's what I wanted to convey.

    {
      "data": {
        "mode": "full",
        "reportWhen": "failure"
      },
      "id": "1709124708488",
      "jobId": "72174171-6b1d-40ff-99f9-847f7c577fd3",
      "jobName": "XXXXX",
      "message": "backup",
      "scheduleId": "95f41044-842f-41c0-85ea-b65089b50f7f",
      "start": 1709124708488,
      "status": "failure",
      "infos": [
        {
          "data": {
            "vms": [
              "e06fd66b-9e4d-8df0-71f4-a8508ab677c1"
            ]
          },
          "message": "vms"
        }
      ],
      "tasks": [
        {
          "data": {
            "type": "VM",
            "id": "e06fd66b-9e4d-8df0-71f4-a8508ab677c1",
            "name_label": "XXXXX_Debian-10.13"
          },
          "id": "1709124711140",
          "message": "backup VM",
          "start": 1709124711140,
          "status": "failure",
          "tasks": [
            {
              "id": "1709124711152",
              "message": "snapshot",
              "start": 1709124711152,
              "status": "failure",
              "end": 1709124739540,
              "result": {
                "code": "SR_BACKEND_FAILURE_44",
                "params": [
                  "",
                  "There is insufficient space",
                  ""
                ],
                "task": {
                  "uuid": "825de1b6-967f-c1dc-1415-c852ef6179c0",
                  "name_label": "Async.VM.snapshot",
                  "name_description": "",
                  "allowed_operations": [],
                  "current_operations": {},
                  "created": "20240228T12:51:51Z",
                  "finished": "20240228T12:52:19Z",
                  "status": "failure",
                  "resident_on": "OpaqueRef:8f335799-cec0-4024-83de-87a5b44839af",
                  "progress": 1,
                  "type": "<none/>",
                  "result": "",
                  "error_info": [
                    "SR_BACKEND_FAILURE_44",
                    "",
                    "There is insufficient space",
                    ""
                  ],
                  "other_config": {},
                  "subtask_of": "OpaqueRef:NULL",
                  "subtasks": [
                    "OpaqueRef:0c1f09de-c43d-4e71-9865-47d7c0dc6b46"
                  ],
                  "backtrace": "(((process xapi)(filename ocaml/xapi/xapi_vm_clone.ml)(line 80))((process xapi)(filename list.ml)(line 110))((process xapi)(filename ocaml/xapi/xapi_vm_clone.ml)(line 122))((process xapi)(filename lib/xapi-stdext-pervasives/pervasiveext.ml)(line 24))((process xapi)(filename lib/xapi-stdext-pervasives/pervasiveext.ml)(line 35))((process xapi)(filename ocaml/xapi/xapi_vm_clone.ml)(line 130))((process xapi)(filename ocaml/xapi/xapi_vm_clone.ml)(line 171))((process xapi)(filename ocaml/xapi/xapi_vm_clone.ml)(line 209))((process xapi)(filename ocaml/xapi/xapi_vm_clone.ml)(line 220))((process xapi)(filename list.ml)(line 121))((process xapi)(filename ocaml/xapi/xapi_vm_clone.ml)(line 222))((process xapi)(filename ocaml/xapi/xapi_vm_clone.ml)(line 442))((process xapi)(filename lib/xapi-stdext-pervasives/pervasiveext.ml)(line 24))((process xapi)(filename lib/xapi-stdext-pervasives/pervasiveext.ml)(line 35))((process xapi)(filename ocaml/xapi/xapi_vm_snapshot.ml)(line 33))((process xapi)(filename ocaml/xapi/message_forwarding.ml)(line 131))((process xapi)(filename lib/xapi-stdext-pervasives/pervasiveext.ml)(line 24))((process xapi)(filename ocaml/xapi/rbac.ml)(line 205))((process xapi)(filename ocaml/xapi/server_helpers.ml)(line 95)))"
                },
                "message": "SR_BACKEND_FAILURE_44(, There is insufficient space, )",
                "name": "XapiError",
                "stack": "XapiError: SR_BACKEND_FAILURE_44(, There is insufficient space, )\n at XapiError.wrap (file:///opt/xo/xo-builds/xen-orchestra-202402270007/packages/xen-api/_XapiError.mjs:16:12)\n at default (file:///opt/xo/xo-builds/xen-orchestra-202402270007/packages/xen-api/_getTaskResult.mjs:11:29)\n at Xapi._addRecordToCache (file:///opt/xo/xo-builds/xen-orchestra-202402270007/packages/xen-api/index.mjs:1006:24)\n at file:///opt/xo/xo-builds/xen-orchestra-202402270007/packages/xen-api/index.mjs:1040:14\n at Array.forEach (<anonymous>)\n at Xapi._processEvents (file:///opt/xo/xo-builds/xen-orchestra-202402270007/packages/xen-api/index.mjs:1030:12)\n at Xapi._watchEvents (file:///opt/xo/xo-builds/xen-orchestra-202402270007/packages/xen-api/index.mjs:1203:14)\n at process.processTicksAndRejections (node:internal/process/task_queues:95:5)"
              }
            },
            {
              "id": "1709124739553",
              "message": "clean-vm",
              "start": 1709124739553,
              "status": "success",
              "end": 1709124739561,
              "result": {
                "merge": false
              }
            }
          ],
          "end": 1709124739562,
          "result": {
            "code": "SR_BACKEND_FAILURE_44",
            "params": [
              "",
              "There is insufficient space",
              ""
            ],
            "task": {
              "uuid": "825de1b6-967f-c1dc-1415-c852ef6179c0",
              "name_label": "Async.VM.snapshot",
              "name_description": "",
              "allowed_operations": [],
              "current_operations": {},
              "created": "20240228T12:51:51Z",
              "finished": "20240228T12:52:19Z",
              "status": "failure",
              "resident_on": "OpaqueRef:8f335799-cec0-4024-83de-87a5b44839af",
              "progress": 1,
              "type": "<none/>",
              "result": "",
              "error_info": [
                "SR_BACKEND_FAILURE_44",
                "",
                "There is insufficient space",
                ""
              ],
              "other_config": {},
              "subtask_of": "OpaqueRef:NULL",
              "subtasks": [
                "OpaqueRef:0c1f09de-c43d-4e71-9865-47d7c0dc6b46"
              ],
              "backtrace": "(((process xapi)(filename ocaml/xapi/xapi_vm_clone.ml)(line 80))((process xapi)(filename list.ml)(line 110))((process xapi)(filename ocaml/xapi/xapi_vm_clone.ml)(line 122))((process xapi)(filename lib/xapi-stdext-pervasives/pervasiveext.ml)(line 24))((process xapi)(filename lib/xapi-stdext-pervasives/pervasiveext.ml)(line 35))((process xapi)(filename ocaml/xapi/xapi_vm_clone.ml)(line 130))((process xapi)(filename ocaml/xapi/xapi_vm_clone.ml)(line 171))((process xapi)(filename ocaml/xapi/xapi_vm_clone.ml)(line 209))((process xapi)(filename ocaml/xapi/xapi_vm_clone.ml)(line 220))((process xapi)(filename list.ml)(line 121))((process xapi)(filename ocaml/xapi/xapi_vm_clone.ml)(line 222))((process xapi)(filename ocaml/xapi/xapi_vm_clone.ml)(line 442))((process xapi)(filename lib/xapi-stdext-pervasives/pervasiveext.ml)(line 24))((process xapi)(filename lib/xapi-stdext-pervasives/pervasiveext.ml)(line 35))((process xapi)(filename ocaml/xapi/xapi_vm_snapshot.ml)(line 33))((process xapi)(filename ocaml/xapi/message_forwarding.ml)(line 131))((process xapi)(filename lib/xapi-stdext-pervasives/pervasiveext.ml)(line 24))((process xapi)(filename ocaml/xapi/rbac.ml)(line 205))((process xapi)(filename ocaml/xapi/server_helpers.ml)(line 95)))"
            },
            "message": "SR_BACKEND_FAILURE_44(, There is insufficient space, )",
            "name": "XapiError",
            "stack": "XapiError: SR_BACKEND_FAILURE_44(, There is insufficient space, )\n at XapiError.wrap (file:///opt/xo/xo-builds/xen-orchestra-202402270007/packages/xen-api/_XapiError.mjs:16:12)\n at default (file:///opt/xo/xo-builds/xen-orchestra-202402270007/packages/xen-api/_getTaskResult.mjs:11:29)\n at Xapi._addRecordToCache (file:///opt/xo/xo-builds/xen-orchestra-202402270007/packages/xen-api/index.mjs:1006:24)\n at file:///opt/xo/xo-builds/xen-orchestra-202402270007/packages/xen-api/index.mjs:1040:14\n at Array.forEach (<anonymous>)\n at Xapi._processEvents (file:///opt/xo/xo-builds/xen-orchestra-202402270007/packages/xen-api/index.mjs:1030:12)\n at Xapi._watchEvents (file:///opt/xo/xo-builds/xen-orchestra-202402270007/packages/xen-api/index.mjs:1203:14)\n at process.processTicksAndRejections (node:internal/process/task_queues:95:5)"
          }
        }
      ],
      "end": 1709124739563
    }

-

RE: Veeam and XCP-ng

I wanted to share a personal experience that might be helpful to others, especially if they're transitioning from VMware to XCP-NG like I am. With over 10 years of experience with VMware and about a year with XCP-NG, I found myself facing a challenge due to my lack of familiarity with XCP-NG.

During the transition, I overlooked a crucial detail: the storage provisioning. In VMware, I always used thick provisioning for its perceived speed advantages. However, in XCP-NG, I inadvertently configured one of my hosts with thick provisioning instead of thin provisioning.

This oversight became apparent when the thick-provisioned storage on one of my XCP-NG hosts reached its capacity limit. As a result, I couldn't perform any backups using Xen Orchestra (XO) because there wasn't enough storage available for snapshots.

While I prefer the simplicity of performing backups directly in XO, my mistake necessitated an alternative solution. Fortunately, I had a Veeam license available, and Veeam quickly came to the rescue. Despite my preference for XO, Veeam provided a reliable backup solution in this instance when XO couldn't.

-

RE: VMware import stuck at "Importing..."

OK.

Maybe I'll find a clue in the ESXi logs. If not, I'll take my chances with Clonezilla.

-

RE: VMware import stuck at "Importing..."

Okay.

I'm also thinking (as I write this) that something might be wrong with the ESXi setup, so I should check the ESXi logs.

It's interesting, though, that the other VMs migrated just fine.

On the XO/XCP-NG side, are there any other log files I can look at besides the one for the import job?

-

RE: VMware import stuck at "Importing..."

@olivierlambert

XO from the sources. I'm at commit c8bfd (22.09.2023).

But apparently there are newer commits.

I'll upgrade.

-

RE: VMware import stuck at "Importing..."

@olivierlambert

XO is on version 5.122.0.

-

VMware import stuck at "Importing..."

Hello

I'm currently in the process of migrating VMs from ESXi 6.7 to XCP-NG, using the built-in VMware import feature in xo-server. Some random and small Debian and Windows VMs (60-80GB) migrated smoothly and quickly, without any issues.

However, I've hit a roadblock with a particularly large VM (1.5TB) that refuses to complete its migration. The VM I'm attempting to migrate is a vCenter Server Appliance with 16 virtual disks. The initial stages of the import go swiftly, with the smaller virtual disks completing rapidly. However, the larger one takes significantly more time. After a few hours of monitoring the task list, the larger disk also finishes its migration.

The concern arises from the fact that the importing task remains stuck at the "started" status, with no activity apparent. Furthermore, the name of the VM continues to display as "[Importing...]-My-VCSA." Attempting to start the VM results in a message indicating that the VM is still in the process of migrating.

I am migrating the VCSA because I urgently need to reduce the number of hosts in my home lab due to rising electricity costs.

Here are some additional details:

I am connecting directly to the ESXi Host where my VCSA is located, and the VCSA is powered off.

My xo-server version is 5.122.0, and the VM has an adequate number of CPU cores and RAM.

The ESXi version is 6.7.0 Build 15160138.

Is there another log file I can consult? Below is the task log for reference: https://pastebin.com/cJinVk83//edit

I have some additional concerns. It seems that during the migration process, something on the ESXi host crashes. After all virtual disks are copied over, the ESXi host becomes highly unresponsive. The ESXi WebUI ceases to function, and attempts to shut down the host via the console also fail, often getting stuck at "Shutting down..." It's possible that certain services on the ESXi host have become stuck, preventing the migration from completing.