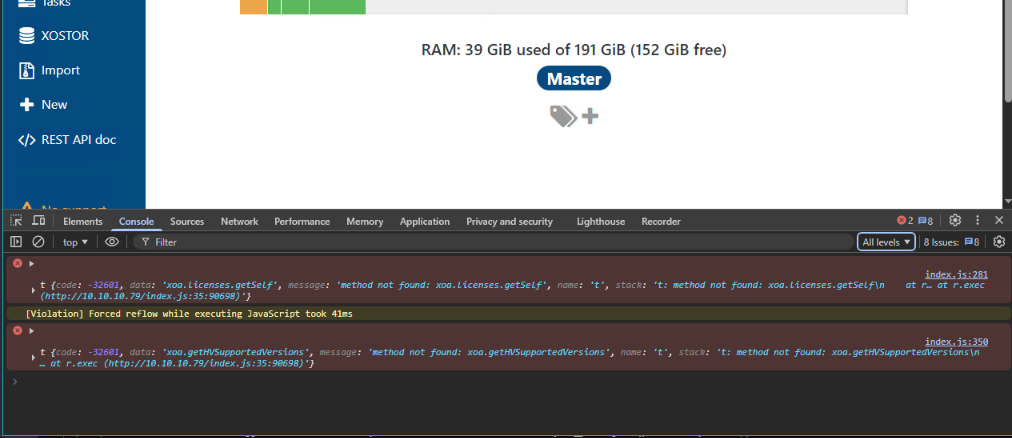

@olivierlambert Thanks! I just checked the browser console in Chrome and Firefox, and both show the same two JS errors:

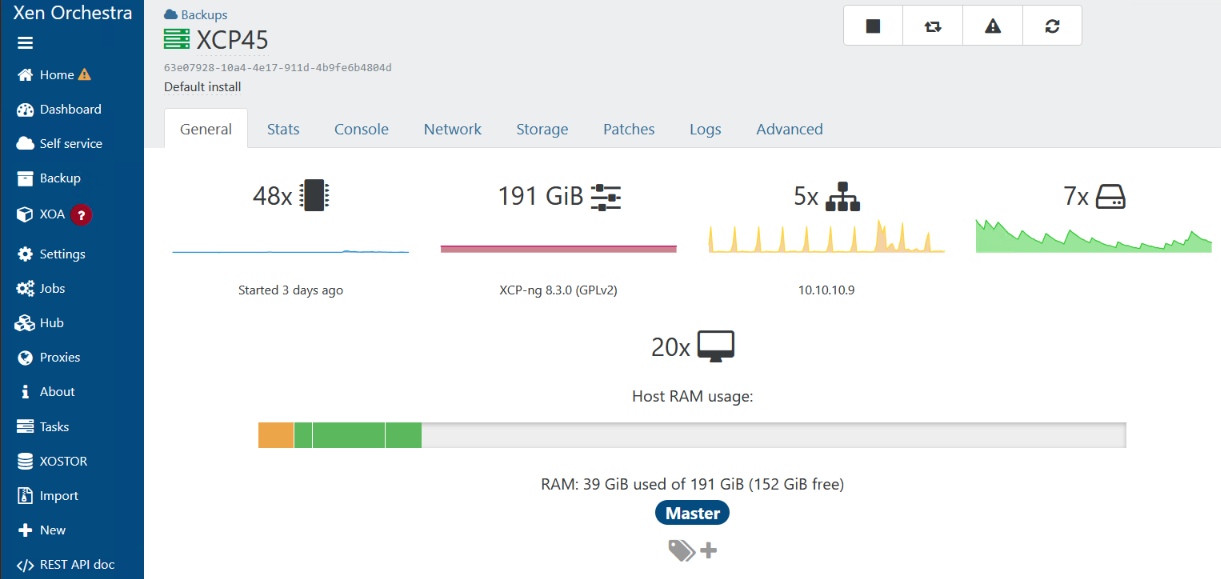

@olivierlambert Thanks for your help! The IPMI plugin does appear to work via the CLI, but the data still doesn't show up in the XO web interface:

[14:00 XCP45 ~]# xe host-call-plugin host-uuid=63e07928-10a4-4e17-911d-4b9fe6b4804d plugin=ipmitool.py fn=is_ipmi_device_available

true

[14:00 XCP45 ~]# xe host-call-plugin host-uuid=63e07928-10a4-4e17-911d-4b9fe6b4804d plugin=ipmitool.py fn=get_all_sensors

[{"name": "Temp", "value": "47 degrees C", "event": "ok"}, {"name": "Temp", "value": "49 degrees C", "event": "ok"}, {"name": "Inlet Temp", "value": "22 degrees C", "event": "ok"}, {"name": "DIMM PG", "value": "0x00", "event": "ok"}, {"name": "NDC PG", "value": "0x00", "event": "ok"}, {"name": "PS1 PG FAIL", "value": "0x00", "event": "ok"}, {"name": "PS2 PG FAIL", "value": "0x00", "event": "ok"}, {"name": "BP0 PG", "value": "0x00", "event": "ok"}, {"name": "BP1 PG", "value": "0x00", "event": "ok"}, {"name": "1.8V SW PG", "value": "0x00", "event": "ok"}, {"name": "2.5V SW PG", "value": "0x00", "event": "ok"}, {"name": "5V SW PG", "value": "0x00", "event": "ok"}, {"name": "PVNN SW PG", "value": "0x00", "event": "ok"}, {"name": "VSB11 SW PG", "value": "0x00", "event": "ok"}, {"name": "VSBM SW PG", "value": "0x00", "event": "ok"}, {"name": "3.3V B PG", "value": "0x00", "event": "ok"}, {"name": "MEM012 VDDQ PG", "value": "0x00", "event": "ok"}, {"name": "MEM012 VPP PG", "value": "0x00", "event": "ok"}, {"name": "MEM012 VTT PG", "value": "0x00", "event": "ok"}, {"name": "MEM345 VDDQ PG", "value": "0x00", "event": "ok"}, {"name": "MEM345 VPP PG", "value": "0x00", "event": "ok"}, {"name": "MEM345 VTT PG", "value": "0x00", "event": "ok"}, {"name": "VCCIO PG", "value": "0x00", "event": "ok"}, {"name": "VCORE PG", "value": "0x00", "event": "ok"}, {"name": "FIVR PG", "value": "0x00", "event": "ok"}, {"name": "MEM012 VDDQ PG", "value": "0x00", "event": "ok"}, {"name": "MEM012 VPP PG", "value": "0x00", "event": "ok"}, {"name": "MEM012 VTT PG", "value": "0x00", "event": "ok"}, {"name": "MEM345 VDDQ PG", "value": "0x00", "event": "ok"}, {"name": "MEM345 VPP PG", "value": "0x00", "event": "ok"}, {"name": "MEM345 VTT PG", "value": "0x00", "event": "ok"}, {"name": "VCCIO PG", "value": "0x00", "event": "ok"}, {"name": "VCORE PG", "value": "0x00", "event": "ok"}, {"name": "FIVR PG", "value": "0x00", "event": "ok"}, {"name": "Fan1A", "value": "4440 RPM", "event": "ok"}, {"name": 
"Fan1B", "value": "4080 RPM", "event": "ok"}, {"name": "Fan2A", "value": "4320 RPM", "event": "ok"}, {"name": "Fan2B", "value": "3840 RPM", "event": "ok"}, {"name": "Fan3A", "value": "4200 RPM", "event": "ok"}, {"name": "Fan3B", "value": "3840 RPM", "event": "ok"}, {"name": "Presence", "value": "0x00", "event": "ok"}, {"name": "Presence", "value": "0x00", "event": "ok"}, {"name": "Presence", "value": "0x00", "event": "ok"}, {"name": "Presence", "value": "0x00", "event": "ok"}, {"name": "Intrusion Cable", "value": "0x00", "event": "ok"}, {"name": "VGA Cable Pres", "value": "0x00", "event": "ok"}, {"name": "Presence", "value": "0x00", "event": "ok"}, {"name": "BP0 Presence", "value": "0x00", "event": "ok"}, {"name": "BP1 Presence", "value": "0x00", "event": "ok"}, {"name": "Power Cable", "value": "0x00", "event": "ok"}, {"name": "Signal Cable", "value": "0x00", "event": "ok"}, {"name": "Power JBP1", "value": "0x00", "event": "ok"}, {"name": "Signal Cable", "value": "0x00", "event": "ok"}, {"name": "Power JBP2", "value": "0x00", "event": "ok"}, {"name": "Presence", "value": "0x00", "event": "ok"}, {"name": "Presence", "value": "0x00", "event": "ok"}, {"name": "Current 1", "value": "1.60 Amps", "event": "ok"}, {"name": "Current 2", "value": "0 Amps", "event": "ok"}, {"name": "Voltage 1", "value": "116 Volts", "event": "ok"}, {"name": "Voltage 2", "value": "120 Volts", "event": "ok"}, {"name": "Riser Config Err", "value": "0x00", "event": "ok"}, {"name": "OS Watchdog", "value": "0x00", "event": "ok"}, {"name": "SEL", "value": "Not Readable", "event": "ns"}, {"name": "Intrusion", "value": "0x00", "event": "ok"}, {"name": "Power Optimized", "value": "0x00", "event": "ok"}, {"name": "Pwr Consumption", "value": "168 Watts", "event": "ok"}, {"name": "PS Redundancy", "value": "0x00", "event": "ok"}, {"name": "Fan Redundancy", "value": "0x00", "event": "ok"}, {"name": "Redundancy", "value": "Not Readable", "event": "ns"}, {"name": "SD1", "value": "Not Readable", "event": 
"ns"}, {"name": "SD2", "value": "Not Readable", "event": "ns"}, {"name": "SD", "value": "Not Readable", "event": "ns"}, {"name": "IO Usage", "value": "0 percent", "event": "ok"}, {"name": "MEM Usage", "value": "0 percent", "event": "ok"}, {"name": "SYS Usage", "value": "0 percent", "event": "ok"}, {"name": "CPU Usage", "value": "0 percent", "event": "ok"}, {"name": "Status", "value": "0x00", "event": "ok"}, {"name": "Status", "value": "0x00", "event": "ok"}, {"name": "Status", "value": "0x00", "event": "ok"}, {"name": "Status", "value": "0x00", "event": "ok"}, {"name": "ROMB Battery", "value": "0x00", "event": "ok"}, {"name": "PCIe Slot1", "value": "0x00", "event": "ok"}, {"name": "PCIe Slot2", "value": "Not Readable", "event": "ns"}, {"name": "PCIe Slot3", "value": "Not Readable", "event": "ns"}, {"name": "Drive 0", "value": "0x00", "event": "ok"}, {"name": "Cable PCIe A0", "value": "0x00", "event": "ok"}, {"name": "Cable PCIe B0", "value": "0x00", "event": "ok"}, {"name": "Cable PCIe A1", "value": "0x00", "event": "ok"}, {"name": "Cable PCIe B1", "value": "0x00", "event": "ok"}, {"name": "Cable PCIe A2", "value": "0x00", "event": "ok"}, {"name": "Cable PCIe B2", "value": "Not Readable", "event": "ns"}, {"name": "Cable SAS A0", "value": "0x00", "event": "ok"}, {"name": "Cable SAS B0", "value": "0x00", "event": "ok"}, {"name": "Cable SAS A1", "value": "Not Readable", "event": "ns"}, {"name": "Cable SAS B1", "value": "Not Readable", "event": "ns"}, {"name": "Cable SAS A2", "value": "0x00", "event": "ok"}, {"name": "Cable SAS B2", "value": "Not Readable", "event": "ns"}, {"name": "Cable PCIe A0", "value": "Not Readable", "event": "ns"}, {"name": "Cable PCIe B0", "value": "Not Readable", "event": "ns"}, {"name": "ECC Corr Err", "value": "0xb0", "event": "ok"}, {"name": "ECC Uncorr Err", "value": "0xb2", "event": "ok"}, {"name": "PCI Parity Err", "value": "Not Readable", "event": "ns"}, {"name": "PCI System Err", "value": "0x96", "event": "ok"}, {"name": "SBE Log 
Disabled", "value": "0xa7", "event": "ok"}, {"name": "Unknown", "value": "0x00", "event": "ok"}, {"name": "CPU Machine Chk", "value": "0x00", "event": "ok"}, {"name": "Memory Spared", "value": "0x00", "event": "ok"}, {"name": "Memory Mirrored", "value": "0x00", "event": "ok"}, {"name": "PCIE Fatal Err", "value": "0xb0", "event": "ok"}, {"name": "Chipset Err", "value": "Not Readable", "event": "ns"}, {"name": "Err Reg Pointer", "value": "0x79", "event": "ok"}, {"name": "Mem ECC Warning", "value": "0x79", "event": "ok"}, {"name": "POST Err", "value": "Not Readable", "event": "ns"}, {"name": "Hdwr version err", "value": "Not Readable", "event": "ns"}, {"name": "Non Fatal PCI Er", "value": "0x00", "event": "ok"}, {"name": "Fatal IO Error", "value": "0x00", "event": "ok"}, {"name": "MSR Info Log", "value": "0x00", "event": "ok"}, {"name": "TXT Status", "value": "0x00", "event": "ok"}, {"name": "iDPT Mem Fail", "value": "0x00", "event": "ok"}, {"name": "Additional Info", "value": "0x00", "event": "ok"}, {"name": "CPU TDP", "value": "0x00", "event": "ok"}, {"name": "QPIRC Warning", "value": "0x00", "event": "ok"}, {"name": "QPIRC Warning", "value": "0x00", "event": "ok"}, {"name": "Link Warning", "value": "0x00", "event": "ok"}, {"name": "Link Warning", "value": "0x00", "event": "ok"}, {"name": "Link Error", "value": "0x00", "event": "ok"}, {"name": "MRC Warning", "value": "0x00", "event": "ok"}, {"name": "MRC Warning", "value": "0x00", "event": "ok"}, {"name": "Chassis Mismatch", "value": "0x00", "event": "ok"}, {"name": "FatalPCIErrOnBus", "value": "0x25", "event": "ok"}, {"name": "NonFatalPCIErBus", "value": "0x24", "event": "ok"}, {"name": "Fatal PCI SSD Er", "value": "0x23", "event": "ok"}, {"name": "NonFatalSSDEr", "value": "0x24", "event": "ok"}, {"name": "CPUMachineCheck", "value": "0x22", "event": "ok"}, {"name": "FatalPCIErARI", "value": "0x24", "event": "ok"}, {"name": "NonFatalPCIErARI", "value": "0x25", "event": "ok"}, {"name": "FatalPCIExpEr", "value": 
"0x24", "event": "ok"}, {"name": "NonFatalPCIExpEr", "value": "0x22", "event": "ok"}, {"name": "Cable SAS A0", "value": "Not Readable", "event": "ns"}, {"name": "Cable SAS B0", "value": "Not Readable", "event": "ns"}, {"name": "Fan4A", "value": "4320 RPM", "event": "ok"}, {"name": "Fan4B", "value": "4080 RPM", "event": "ok"}, {"name": "Fan5A", "value": "4080 RPM", "event": "ok"}, {"name": "Fan5B", "value": "3840 RPM", "event": "ok"}, {"name": "Fan6A", "value": "4320 RPM", "event": "ok"}, {"name": "Fan6B", "value": "3840 RPM", "event": "ok"}, {"name": "Fan7A", "value": "4440 RPM", "event": "ok"}, {"name": "Fan7B", "value": "3960 RPM", "event": "ok"}, {"name": "Fan8A", "value": "4320 RPM", "event": "ok"}, {"name": "Fan8B", "value": "3960 RPM", "event": "ok"}, {"name": "Unresp sensor", "value": "0x00", "event": "ok"}, {"name": "CP Left Pres", "value": "0x00", "event": "ok"}, {"name": "CP Right Pres", "value": "0x00", "event": "ok"}, {"name": "3.3V A PG", "value": "0x00", "event": "ok"}, {"name": "VSA PG", "value": "0x00", "event": "ok"}, {"name": "VSA PG", "value": "0x00", "event": "ok"}, {"name": "TPM Presence", "value": "0x00", "event": "ok"}, {"name": "Riser 1 Presence", "value": "0x00", "event": "ok"}, {"name": "Riser 2 Presence", "value": "0x00", "event": "ok"}, {"name": "Front LED Panel", "value": "0x00", "event": "ok"}, {"name": "OS Watchdog Time", "value": "0x00", "event": "ok"}, {"name": "Fan1A Status", "value": "0x00", "event": "ok"}, {"name": "Fan1B Status", "value": "0x00", "event": "ok"}, {"name": "Fan2A Status", "value": "0x00", "event": "ok"}, {"name": "Fan2B Status", "value": "0x00", "event": "ok"}, {"name": "Fan3A Status", "value": "0x00", "event": "ok"}, {"name": "Fan3B Status", "value": "0x00", "event": "ok"}, {"name": "Fan4A Status", "value": "0x00", "event": "ok"}, {"name": "Fan4B Status", "value": "0x00", "event": "ok"}, {"name": "Fan5A Status", "value": "0x00", "event": "ok"}, {"name": "Fan5B Status", "value": "0x00", "event": "ok"}, {"name": 
"Fan6A Status", "value": "0x00", "event": "ok"}, {"name": "Fan6B Status", "value": "0x00", "event": "ok"}, {"name": "Fan7A Status", "value": "0x00", "event": "ok"}, {"name": "Fan7B Status", "value": "0x00", "event": "ok"}, {"name": "Fan8A Status", "value": "0x00", "event": "ok"}, {"name": "Fan8B Status", "value": "0x00", "event": "ok"}, {"name": "NVDIMM Warning", "value": "0x21", "event": "ok"}, {"name": "NVDIMM Error", "value": "0x21", "event": "ok"}, {"name": "NVDIMM Info", "value": "0x00", "event": "ok"}, {"name": "Dedicated NIC", "value": "0x00", "event": "ok"}, {"name": "Presence", "value": "0x00", "event": "ok"}, {"name": "NVDIMM Battery", "value": "Not Readable", "event": "ns"}, {"name": "Exhaust Temp", "value": "39 degrees C", "event": "ok"}, {"name": "LT/Flex Addr", "value": "0x00", "event": "ok"}, {"name": "QPI Link Err", "value": "0x00", "event": "ok"}, {"name": "TPM Presence", "value": "0x20", "event": "ok"}, {"name": "CPU Link Info", "value": "0x20", "event": "ok"}, {"name": "Chipset Info", "value": "0x20", "event": "ok"}, {"name": "Memory Config", "value": "0x1f", "event": "ok"}, {"name": "POST Pkg Repair", "value": "0x20", "event": "ok"}, {"name": "Pfault Fail Safe", "value": "Not Readable", "event": "ns"}, {"name": "BP2 PG", "value": "0x00", "event": "ok"}, {"name": "MMIOChipset Info", "value": "0x00", "event": "ok"}, {"name": "DIMM Media Info", "value": "0x00", "event": "ok"}, {"name": "DIMMThermal Info", "value": "0x00", "event": "ok"}, {"name": "CPU Internal Err", "value": "0x00", "event": "ok"}, {"name": "GPU1 Temp", "value": "disabled", "event": "ns"}, {"name": "GPU2 Temp", "value": "disabled", "event": "ns"}, {"name": "GPU3 Temp", "value": "disabled", "event": "ns"}, {"name": "A1", "value": "0x00", "event": "ok"}, {"name": "A2", "value": "0x00", "event": "ok"}, {"name": "A3", "value": "0x00", "event": "ok"}, {"name": "A4", "value": "0x00", "event": "ok"}, {"name": "A5", "value": "0x00", "event": "ok"}, {"name": "A6", "value": "0x00", "event": 
"ok"}, {"name": "A7", "value": "0x00", "event": "ok"}, {"name": "A8", "value": "0x00", "event": "ok"}, {"name": "A9", "value": "0x00", "event": "ok"}, {"name": "A10", "value": "0x00", "event": "ok"}, {"name": "A11", "value": "0x00", "event": "ok"}, {"name": "A12", "value": "0x00", "event": "ok"}, {"name": "B1", "value": "0x00", "event": "ok"}, {"name": "B2", "value": "0x00", "event": "ok"}, {"name": "B3", "value": "0x00", "event": "ok"}, {"name": "B4", "value": "0x00", "event": "ok"}, {"name": "B5", "value": "0x00", "event": "ok"}, {"name": "B6", "value": "0x00", "event": "ok"}, {"name": "B7", "value": "0x00", "event": "ok"}, {"name": "B8", "value": "0x00", "event": "ok"}, {"name": "B9", "value": "0x00", "event": "ok"}, {"name": "B10", "value": "0x00", "event": "ok"}, {"name": "B11", "value": "0x00", "event": "ok"}, {"name": "B12", "value": "0x00", "event": "ok"}, {"name": "Therm Config Err", "value": "0x00", "event": "ok"}, {"name": "VCORE VR", "value": "1.78 Volts", "event": "ok"}, {"name": "VCORE VR", "value": "1.76 Volts", "event": "ok"}, {"name": "MEM012 VR", "value": "1.21 Volts", "event": "ok"}, {"name": "MEM345 VR", "value": "1.21 Volts", "event": "ok"}, {"name": "MEM012 VR", "value": "1.21 Volts", "event": "ok"}, {"name": "MEM345 VR", "value": "1.21 Volts", "event": "ok"}]

[14:01 XCP45 ~]#

[14:01 XCP45 ~]# xe host-call-plugin host-uuid=63e07928-10a4-4e17-911d-4b9fe6b4804d plugin=ipmitool.py fn=get_sensor args:sensors="Fan7A,PFault Fail Safe"

[{"info": [{"name": "Sensor ID", "value": "Fan7A (0x3e)"}, {"name": "Entity ID", "value": "7.1 (System Board)"}, {"name": "Sensor Type (Threshold)", "value": "Fan (0x04)"}, {"name": "Sensor Reading", "value": "4440 (+/- 120) RPM"}, {"name": "Status", "value": "ok"}, {"name": "Nominal Reading", "value": "10080.000"}, {"name": "Normal Minimum", "value": "16680.000"}, {"name": "Normal Maximum", "value": "23640.000"}, {"name": "Lower critical", "value": "600.000"}, {"name": "Lower non-critical", "value": "840.000"}, {"name": "Positive Hysteresis", "value": "120.000"}, {"name": "Negative Hysteresis", "value": "120.000"}, {"name": "Minimum sensor range", "value": "Unspecified"}, {"name": "Maximum sensor range", "value": "Unspecified"}, {"name": "Event Message Control", "value": "Per-threshold"}, {"name": "Readable Thresholds", "value": "lcr lnc"}, {"name": "Settable Thresholds", "value": ""}, {"name": "Threshold Read Mask", "value": "lcr lnc"}, {"name": "Assertion Events", "value": ""}, {"name": "Assertions Enabled", "value": "lnc- lcr-"}, {"name": "Deassertions Enabled", "value": "lnc- lcr-"}], "name": "Fan7A"}]

[14:02 XCP45 ~]#

[14:03 XCP45 ~]# xe host-call-plugin host-uuid=63e07928-10a4-4e17-911d-4b9fe6b4804d plugin=ipmitool.py fn=get_ipmi_lan

[{"name": "IP Address Source", "value": "Static Address"}, {"name": "IP Address", "value": "10.10.10.36"}, {"name": "Subnet Mask", "value": "255.255.255.0"}, {"name": "MAC Address", "value": "f4:02:70:ef:46:e6"}, {"name": "BMC ARP Control", "value": "ARP Responses Enabled, Gratuitous ARP Disabled"}, {"name": "Default Gateway IP", "value": "10.10.10.1"}, {"name": "802.1q VLAN ID", "value": "Disabled"}, {"name": "802.1q VLAN Priority", "value": "0"}, {"name": "RMCP+ Cipher Suites", "value": "3,17"}]

[14:03 XCP45 ~]#
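Each plugin call above returns a JSON array of `name`/`value` records (sensors also carry an `event` field), so the CLI output can be sanity-checked with a few lines of Python before digging into XO. This is a minimal sketch, assuming the output is pasted in as a string; the samples are abbreviated from the output above, and `non_ok_sensors` / `pairs_to_dict` are hypothetical helpers, not part of the `ipmitool.py` plugin or XO.

```python
import json
from typing import Dict, List, Tuple

# Abbreviated sample of the `fn=get_all_sensors` output above.
SENSORS_JSON = """[
  {"name": "Inlet Temp", "value": "22 degrees C", "event": "ok"},
  {"name": "Fan7A", "value": "4440 RPM", "event": "ok"},
  {"name": "SEL", "value": "Not Readable", "event": "ns"},
  {"name": "Pwr Consumption", "value": "168 Watts", "event": "ok"}
]"""

# Abbreviated sample of the `fn=get_ipmi_lan` output above.
LAN_JSON = """[
  {"name": "IP Address Source", "value": "Static Address"},
  {"name": "IP Address", "value": "10.10.10.36"},
  {"name": "Subnet Mask", "value": "255.255.255.0"}
]"""


def non_ok_sensors(raw: str) -> List[Tuple[str, str, str]]:
    """Return (name, value, event) for every sensor whose event is not "ok"."""
    return [
        (s["name"], s["value"], s.get("event", ""))
        for s in json.loads(raw)
        if s.get("event") != "ok"
    ]


def pairs_to_dict(raw: str) -> Dict[str, str]:
    """Flatten [{"name": ..., "value": ...}, ...] into {name: value}.
    If a name repeats, the last occurrence wins."""
    return {item["name"]: item["value"] for item in json.loads(raw)}


if __name__ == "__main__":
    for name, value, event in non_ok_sensors(SENSORS_JSON):
        print(f"{name}: {value} ({event})")
    print("BMC IP:", pairs_to_dict(LAN_JSON)["IP Address"])
```

Names such as "Presence", "Temp" and "Status" repeat in the full sensor list, so only outputs with unique names (like `get_ipmi_lan`) are safe to turn into a dict this way.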

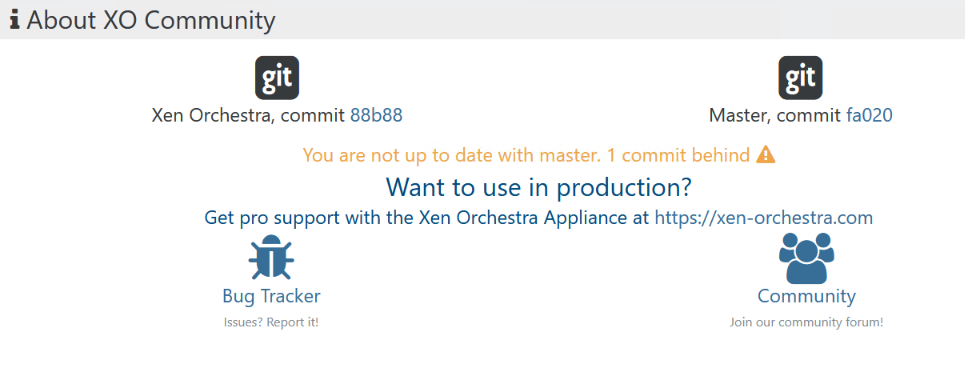

@acebmxer Thanks for the screenshot! My server is a Dell R640, and I checked iDRAC Settings > Connectivity to confirm IPMI is enabled:

I'm not sure whether I need to do anything else in XO or on the Dell server.

@olivierlambert I must be blind: I looked through the various tabs for the host in XO but can't find it:

Thanks,

SW

Hi,

I just updated to XCP-ng 8.3 and searched the XO docs for IPMI but couldn't find anything. How do I view IPMI information for our Dell PE 640 in XO?

Thank you,

SW

What was the fix for this? Backups of one of our Windows 10 VMs have started to fail, and since rebooting the VM takes less than a minute, I'm not sure increasing the timeout is the answer. Here are the backup log entries:

{

"data": {

"type": "VM",

"id": "07767a82-3cc0-b2b7-e399-90793896a574",

"name_label": "Windows10C"

},

"id": "1760322641022",

"message": "backup VM",

"start": 1760322641022,

"status": "failure",

"tasks": [

{

"id": "1760322641048",

"message": "clean-vm",

"start": 1760322641048,

"status": "success",

"tasks": [

{

"id": "1760322641516",

"message": "merge",

"start": 1760322641516,

"status": "success",

"end": 1760323012896

}

],

"warnings": [

{

"data": {

"path": "/xo-vm-backups/07767a82-3cc0-b2b7-e399-90793896a574/20251012T023042Z.json",

"actual": 5475663872,

"expected": 5477068288

},

"message": "cleanVm: incorrect backup size in metadata"

}

],

"end": 1760323013334,

"result": {

"merge": true

}

},

{

"id": "1760323014314",

"message": "snapshot",

"start": 1760323014314,

"status": "success",

"end": 1760323016106,

"result": "0b79d24f-a579-b669-50e1-412516276886"

},

{

"data": {

"id": "2a4bc998-972d-4132-83ba-f3c67b860676",

"isFull": false,

"type": "remote"

},

"id": "1760323016106:0",

"message": "export",

"start": 1760323016106,

"status": "failure",

"tasks": [

{

"id": "1760323018513",

"message": "transfer",

"start": 1760323018513,

"status": "success",

"end": 1760323462425,

"result": {

"size": 7688159232

}

},

{

"id": "1760323472984",

"message": "health check",

"start": 1760323472984,

"status": "failure",

"tasks": [

{

"id": "1760323480406",

"message": "transfer",

"start": 1760323480406,

"status": "success",

"end": 1760324685177,

"result": {

"size": 0,

"id": "490d3623-4b1f-e4a4-4947-8062aa0e757c"

}

},

{

"id": "1760324685178",

"message": "vmstart",

"start": 1760324685178,

"status": "failure",

"end": 1760325285289,

"result": {

"message": "timeout reached while waiting for OpaqueRef:5c4eef85-eebb-4480-826b-7bbe596f4349 to report the driver version through the Xen tools. Please check or update the Xen tools.",

"name": "Error",

"stack": "Error: timeout reached while waiting for OpaqueRef:5c4eef85-eebb-4480-826b-7bbe596f4349 to report the driver version through the Xen tools. Please check or update the Xen tools.\n at file:///opt/xen-orchestra/@xen-orchestra/xapi/index.mjs:275:23\n at new Promise (<anonymous>)\n at Xapi.waitObjectState (file:///opt/xen-orchestra/@xen-orchestra/xapi/index.mjs:259:12)\n at file:///opt/xen-orchestra/@xen-orchestra/backups/HealthCheckVmBackup.mjs:63:20\n at process.processTicksAndRejections (node:internal/process/task_queues:105:5)"

}

}

],

"end": 1760325289511,

"result": {

"message": "timeout reached while waiting for OpaqueRef:5c4eef85-eebb-4480-826b-7bbe596f4349 to report the driver version through the Xen tools. Please check or update the Xen tools.",

"name": "Error",

"stack": "Error: timeout reached while waiting for OpaqueRef:5c4eef85-eebb-4480-826b-7bbe596f4349 to report the driver version through the Xen tools. Please check or update the Xen tools.\n at file:///opt/xen-orchestra/@xen-orchestra/xapi/index.mjs:275:23\n at new Promise (<anonymous>)\n at Xapi.waitObjectState (file:///opt/xen-orchestra/@xen-orchestra/xapi/index.mjs:259:12)\n at file:///opt/xen-orchestra/@xen-orchestra/backups/HealthCheckVmBackup.mjs:63:20\n at process.processTicksAndRejections (node:internal/process/task_queues:105:5)"

}

}

],

"end": 1760325289511,

"result": {

"message": "timeout reached while waiting for OpaqueRef:5c4eef85-eebb-4480-826b-7bbe596f4349 to report the driver version through the Xen tools. Please check or update the Xen tools.",

"name": "Error",

"stack": "Error: timeout reached while waiting for OpaqueRef:5c4eef85-eebb-4480-826b-7bbe596f4349 to report the driver version through the Xen tools. Please check or update the Xen tools.\n at file:///opt/xen-orchestra/@xen-orchestra/xapi/index.mjs:275:23\n at new Promise (<anonymous>)\n at Xapi.waitObjectState (file:///opt/xen-orchestra/@xen-orchestra/xapi/index.mjs:259:12)\n at file:///opt/xen-orchestra/@xen-orchestra/backups/HealthCheckVmBackup.mjs:63:20\n at process.processTicksAndRejections (node:internal/process/task_queues:105:5)"

}

},

{

"data": {

"id": "349e7130-da43-6550-e7c0-aa0a84102e0f",

"isFull": false,

"name_label": "Local storage",

"type": "SR"

},

"id": "1760323016107",

"message": "export",

"start": 1760323016107,

"status": "failure",

"tasks": [

{

"id": "1760323017919",

"message": "transfer",

"start": 1760323017919,

"status": "success",

"end": 1760323463982,

"result": {

"size": 7688159232

}

},

{

"id": "1760323472984:0",

"message": "health check",

"start": 1760323472984,

"status": "failure",

"tasks": [

{

"id": "1760323473002",

"message": "copying-vm",

"start": 1760323473002,

"status": "success",

"end": 1760325038087,

"result": "OpaqueRef:0233c593-da90-4813-959b-46e4e679a662"

},

{

"id": "1760325038108",

"message": "vmstart",

"start": 1760325038108,

"status": "failure",

"end": 1760325638322,

"result": {

"message": "timeout reached while waiting for OpaqueRef:ca1c86dd-aafc-4f25-bd8c-89399771c004 to report the driver version through the Xen tools. Please check or update the Xen tools.",

"name": "Error",

"stack": "Error: timeout reached while waiting for OpaqueRef:ca1c86dd-aafc-4f25-bd8c-89399771c004 to report the driver version through the Xen tools. Please check or update the Xen tools.\n at file:///opt/xen-orchestra/@xen-orchestra/xapi/index.mjs:275:23\n at new Promise (<anonymous>)\n at Xapi.waitObjectState (file:///opt/xen-orchestra/@xen-orchestra/xapi/index.mjs:259:12)\n at file:///opt/xen-orchestra/@xen-orchestra/backups/HealthCheckVmBackup.mjs:63:20\n at process.processTicksAndRejections (node:internal/process/task_queues:105:5)"

}

}

],

"end": 1760325643426,

"result": {

"message": "timeout reached while waiting for OpaqueRef:ca1c86dd-aafc-4f25-bd8c-89399771c004 to report the driver version through the Xen tools. Please check or update the Xen tools.",

"name": "Error",

"stack": "Error: timeout reached while waiting for OpaqueRef:ca1c86dd-aafc-4f25-bd8c-89399771c004 to report the driver version through the Xen tools. Please check or update the Xen tools.\n at file:///opt/xen-orchestra/@xen-orchestra/xapi/index.mjs:275:23\n at new Promise (<anonymous>)\n at Xapi.waitObjectState (file:///opt/xen-orchestra/@xen-orchestra/xapi/index.mjs:259:12)\n at file:///opt/xen-orchestra/@xen-orchestra/backups/HealthCheckVmBackup.mjs:63:20\n at process.processTicksAndRejections (node:internal/process/task_queues:105:5)"

}

}

],

"end": 1760325643426,

"result": {

"message": "timeout reached while waiting for OpaqueRef:ca1c86dd-aafc-4f25-bd8c-89399771c004 to report the driver version through the Xen tools. Please check or update the Xen tools.",

"name": "Error",

"stack": "Error: timeout reached while waiting for OpaqueRef:ca1c86dd-aafc-4f25-bd8c-89399771c004 to report the driver version through the Xen tools. Please check or update the Xen tools.\n at file:///opt/xen-orchestra/@xen-orchestra/xapi/index.mjs:275:23\n at new Promise (<anonymous>)\n at Xapi.waitObjectState (file:///opt/xen-orchestra/@xen-orchestra/xapi/index.mjs:259:12)\n at file:///opt/xen-orchestra/@xen-orchestra/backups/HealthCheckVmBackup.mjs:63:20\n at process.processTicksAndRejections (node:internal/process/task_queues:105:5)"

}

}

],

"end": 1760325644170,

"result": {

"errors": [

{

"message": "timeout reached while waiting for OpaqueRef:5c4eef85-eebb-4480-826b-7bbe596f4349 to report the driver version through the Xen tools. Please check or update the Xen tools.",

"name": "Error",

"stack": "Error: timeout reached while waiting for OpaqueRef:5c4eef85-eebb-4480-826b-7bbe596f4349 to report the driver version through the Xen tools. Please check or update the Xen tools.\n at file:///opt/xen-orchestra/@xen-orchestra/xapi/index.mjs:275:23\n at new Promise (<anonymous>)\n at Xapi.waitObjectState (file:///opt/xen-orchestra/@xen-orchestra/xapi/index.mjs:259:12)\n at file:///opt/xen-orchestra/@xen-orchestra/backups/HealthCheckVmBackup.mjs:63:20\n at process.processTicksAndRejections (node:internal/process/task_queues:105:5)"

},

{

"message": "timeout reached while waiting for OpaqueRef:ca1c86dd-aafc-4f25-bd8c-89399771c004 to report the driver version through the Xen tools. Please check or update the Xen tools.",

"name": "Error",

"stack": "Error: timeout reached while waiting for OpaqueRef:ca1c86dd-aafc-4f25-bd8c-89399771c004 to report the driver version through the Xen tools. Please check or update the Xen tools.\n at file:///opt/xen-orchestra/@xen-orchestra/xapi/index.mjs:275:23\n at new Promise (<anonymous>)\n at Xapi.waitObjectState (file:///opt/xen-orchestra/@xen-orchestra/xapi/index.mjs:259:12)\n at file:///opt/xen-orchestra/@xen-orchestra/backups/HealthCheckVmBackup.mjs:63:20\n at process.processTicksAndRejections (node:internal/process/task_queues:105:5)"

}

],

"message": "all targets have failed, step: writer.healthCheck()",

"name": "Error",

"stack": "Error: all targets have failed, step: writer.healthCheck()\n at IncrementalXapiVmBackupRunner._callWriters (file:///opt/xen-orchestra/@xen-orchestra/backups/_runners/_vmRunners/_Abstract.mjs:64:13)\n at process.processTicksAndRejections (node:internal/process/task_queues:105:5)\n at async IncrementalXapiVmBackupRunner._healthCheck (file:///opt/xen-orchestra/@xen-orchestra/backups/_runners/_vmRunners/_Abstract.mjs:94:5)\n at async IncrementalXapiVmBackupRunner.run (file:///opt/xen-orchestra/@xen-orchestra/backups/_runners/_vmRunners/_AbstractXapi.mjs:407:5)\n at async file:///opt/xen-orchestra/@xen-orchestra/backups/_runners/VmsXapi.mjs:166:38"

}

},
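The `start`/`end` values in this log are Unix timestamps in milliseconds, so the log itself shows how long each step ran. The minimal Python sketch below walks the task tree and prints each duration; the log is trimmed to the relevant fields and `step_durations` is a hypothetical helper. For these entries both `vmstart` steps end roughly 600 s (about 10 minutes) after they begin, which suggests the failure is the health check waiting for the Xen tools to report a driver version rather than the guest taking long to boot. That reading is an inference from the timestamps, not something confirmed from the XO source.

```python
import json

# Trimmed copy of the backup log above: only the task names and the
# start/end timestamps (Unix epoch, milliseconds) of the health-check steps.
LOG = json.loads("""
{"message": "backup VM", "tasks": [
  {"message": "export", "tasks": [
    {"message": "health check", "tasks": [
      {"message": "transfer", "start": 1760323480406, "end": 1760324685177},
      {"message": "vmstart", "start": 1760324685178, "end": 1760325285289}
    ]}
  ]},
  {"message": "export", "tasks": [
    {"message": "health check", "tasks": [
      {"message": "copying-vm", "start": 1760323473002, "end": 1760325038087},
      {"message": "vmstart", "start": 1760325038108, "end": 1760325638322}
    ]}
  ]}
]}
""")


def step_durations(task, path=()):
    """Walk the task tree depth-first, yielding (path, seconds) for every
    task that has both a start and an end timestamp."""
    here = path + (task["message"],)
    if "start" in task and "end" in task:
        yield " > ".join(here), (task["end"] - task["start"]) / 1000
    for sub in task.get("tasks", []):
        yield from step_durations(sub, here)


if __name__ == "__main__":
    for name, seconds in step_durations(LOG):
        print(f"{name}: {seconds / 60:.1f} min")
```

Run against this trimmed log, both `vmstart` lines come out at about 10.0 min, while the transfer and copy steps before them take roughly 20 and 26 minutes.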

@Danp Thank you! That's simple enough. I thought XO couldn't live migrate itself without running into an issue.

Thanks again!

SW

Hi,

Is it possible to live migrate XOCE via the XO web interface?

I have XOCE running on a standalone host, which we use for nightly backups and DR of a 2-host pool. I want to run a yum update on the host where the XOCE VM is installed without losing access to XOCE during the reboot. So I'm wondering whether XOCE can be live migrated to the pool and then moved back to this host once the reboot is complete.

Thank you,

SW

@StormMaster Thank you for sharing your findings!

@stephane-m-dev Thank you for the update! I'm looking forward to testing the fix; I'm just not sure how to reproduce the issue other than the way @StormMaster found.

@andrewperry I couldn't get live migration to work on large VMs. To move them, I ended up taking a snapshot of the VM and then creating a new VM from that snapshot. It's not ideal, but I had to do it because we needed to retire some old servers.

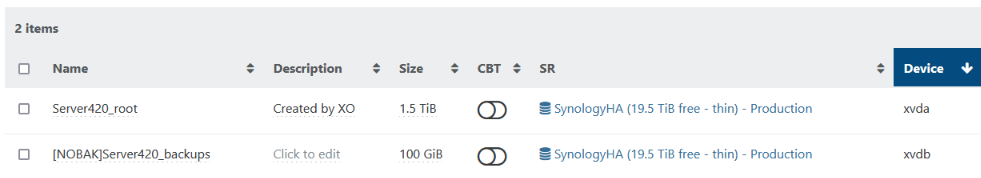

@olivierlambert Thank you! Is it possible to simply attach the shared storage from the source pool to the new pool without having to do a live/warm migration?

We are only moving this VM from one pool to a new pool; the VM disks will remain on the shared storage (NFS):

I'm moving pools because we have newer servers.

Thank you,

SW

Hi,

I'm trying to live migrate a large VM from one host to another, and XO built from source (latest commit a7d7c) reports that the migration will take over 32 hours to complete. However, after about 20+ hours, the live migration fails at about 48% complete.

XO shows the following error under Settings > Logs:

vm.migrate

{

"vm": "3797df5e-695a-00be-86bd-318a7c48860f",

"mapVifsNetworks": {

"de9a4140-0b67-314b-6fed-0bbd00d4cf74": "eefd492c-2bef-502e-79e7-f5123209d887",

"cbea6ad6-cb84-5536-e021-d2bdabda6348": "34f336c5-b05d-4258-20d0-984523113b85"

},

"migrationNetwork": "02bfbb0b-5f1a-e47e-d50b-28f0f7c50b11",

"sr": "8da3d03e-4d2c-bab2-cd94-0d15168a58f3",

"targetHost": "2d926060-41bf-4e17-ba76-bac9b1112257"

}

{

"code": "MIRROR_FAILED",

"params": [

"OpaqueRef:7069c225-0bf2-433c-a255-0fab035ea70b"

],

"task": {

"uuid": "f31eafed-faea-2eb4-b9e9-d45cc7967498",

"name_label": "Async.VM.migrate_send",

"name_description": "",

"allowed_operations": [],

"current_operations": {

"DummyRef:|e8163dcb-8c54-4907-a0de-97d36cf2127e|task.cancel": "cancel"

},

"created": "20241014T04:40:24Z",

"finished": "20241015T00:09:27Z",

"status": "failure",

"resident_on": "OpaqueRef:d3f118d9-3aef-4d16-94d5-6d6fa22f84b9",

"progress": 1,

"type": "<none/>",

"result": "",

"error_info": [

"MIRROR_FAILED",

"OpaqueRef:7069c225-0bf2-433c-a255-0fab035ea70b"

],

"other_config": {

"mirror_failed": "b607eebc-49ad-4fc0-ad4a-b605fedfc51e"

},

"subtask_of": "OpaqueRef:NULL",

"subtasks": [],

"backtrace": "(((process xapi)(filename ocaml/xapi/xapi_vm_migrate.ml)(line 1556))((process xapi)(filename lib/xapi-stdext-pervasives/pervasiveext.ml)(line 24))((process xapi)(filename lib/xapi-stdext-pervasives/pervasiveext.ml)(line 35))((process xapi)(filename ocaml/xapi/message_forwarding.ml)(line 131))((process xapi)(filename lib/xapi-stdext-pervasives/pervasiveext.ml)(line 24))((process xapi)(filename ocaml/xapi/rbac.ml)(line 205))((process xapi)(filename ocaml/xapi/server_helpers.ml)(line 95)))"

},

"message": "MIRROR_FAILED(OpaqueRef:7069c225-0bf2-433c-a255-0fab035ea70b)",

"name": "XapiError",

"stack": "XapiError: MIRROR_FAILED(OpaqueRef:7069c225-0bf2-433c-a255-0fab035ea70b)

at Function.wrap (file:///opt/xen-orchestra/packages/xen-api/_XapiError.mjs:16:12)

at default (file:///opt/xen-orchestra/packages/xen-api/_getTaskResult.mjs:13:29)

at Xapi._addRecordToCache (file:///opt/xen-orchestra/packages/xen-api/index.mjs:1041:24)

at file:///opt/xen-orchestra/packages/xen-api/index.mjs:1075:14

at Array.forEach (<anonymous>)

at Xapi._processEvents (file:///opt/xen-orchestra/packages/xen-api/index.mjs:1065:12)

at Xapi._watchEvents (file:///opt/xen-orchestra/packages/xen-api/index.mjs:1238:14)"

}
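The task record above carries `created` and `finished` timestamps, so the elapsed time before `MIRROR_FAILED` can be read straight from it. In the small Python sketch below (helper names are hypothetical), those timestamps put the failure about 19.5 hours after the task started; if roughly 48% had been copied by then, a naive linear projection gives about 40 hours for the whole migration, noticeably longer than XO's 32-hour estimate. The projection assumes a constant copy rate, which a live migration of a busy VM may not have.

```python
from datetime import datetime, timedelta, timezone

# Format of the "created"/"finished" fields in the XAPI task above
# (compact ISO 8601 date, UTC).
XAPI_TS = "%Y%m%dT%H:%M:%SZ"


def task_elapsed(created: str, finished: str) -> timedelta:
    """Wall-clock time between two XAPI task timestamps."""
    start = datetime.strptime(created, XAPI_TS).replace(tzinfo=timezone.utc)
    end = datetime.strptime(finished, XAPI_TS).replace(tzinfo=timezone.utc)
    return end - start


def projected_total_hours(elapsed_hours: float, percent_done: float) -> float:
    """Naive linear projection: assumes the copy rate stays constant."""
    return elapsed_hours / (percent_done / 100)


if __name__ == "__main__":
    elapsed = task_elapsed("20241014T04:40:24Z", "20241015T00:09:27Z")
    hours = elapsed.total_seconds() / 3600
    print(elapsed)  # 19:29:03
    print(f"{projected_total_hours(hours, 48):.1f} h")  # 40.6 h
```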

On the source host, /var/log/xensource.log shows the following when the error occurs:

And on the target host, /var/log/xensource.log shows the following:

I'd appreciate any help identifying why the live migration is failing.

Thank you,

SW

@enes-selcuk Did you find a setting that works best w/ Dell servers?

I have Dell R640s that I'll be using for LAMP/LEMP VM servers, and I was wondering what the best Dell BIOS settings are and whether I need to make any changes on the XCP-ng host.

Dual Intel(R) Xeon(R) Gold 5218 CPU @ 2.30GHz

Level 2 Cache: 16x1 MB

Level 3 Cache: 22 MB

Number of Cores: 16

Dell BIOS settings:

System Profile: Performance Per Watt (OS)

CPU Power Management: OS DBPM

Memory Frequency: Maximum Performance

Turbo Boost: Enabled

C1E: Enabled

C States: Enabled

Write Data CRC: Disabled

Memory Patrol Scrub: Standard

Memory Refresh Rate: 1x

Uncore Frequency: Dynamic

Energy Efficient Policy: Balanced Performance

Number of Turbo Boost Enabled Cores for Processor 1: All

Number of Turbo Boost Enabled Cores for Processor 2: All

Monitor/Mwait: Enabled

Workload Profile: Not Available

CPU Interconnect Bus Link Power Management: Enabled

PCI ASPM L1 Link Power Management: Enabled

Thank you!

SW

@TS79 It seems something is unstable with SR NFS creation. The strange thing is that XOCE allowed me to create the NFS remote under Settings > Remotes but refused to create the SR NFS until I updated to the latest build (I was 3 commits behind) and then rebooted the entire server.

I was hoping to test this with XO alone to rule out an XOCE-specific issue, but I couldn't get the XO deploy script to work. I'll have to tackle that another day.

I was able to install a VM on the NFS SR and will be running some disk I/O tests to see how stable the NFS connection is.

Thank you again for your help and testing this on your home lab! Greatly appreciate it!

Best Regards,

SW

@olivierlambert @TS79 I just rebooted the server, ran the XOCE update script, and tried adding the NFS SR to the new host again, and this time I was able to create it!

I'm about to test it by installing a VM to confirm it's working properly!

@TS79 Thanks for the help! This host is NOT part of a pool. It's a standalone host.

I'm so glad you're getting the same issue too! Man, I've been trying to figure out this error/bug for the past several days. Like you, it was working at some point, as my old hosts have no problem with the NFS share from FreeNAS.

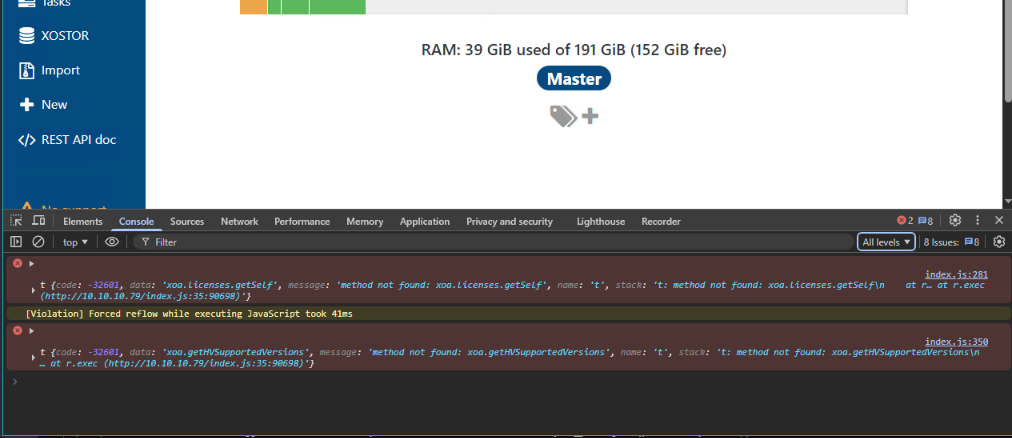

I used the Jarli01 script to build XOCE and keep it updated regularly. I was 3 commits behind, but I just updated to the latest commit and still have the same issue.

@olivierlambert Any ideas why I can't deploy XO? Not sure if the logs I provided above are helpful.

Please let me know if you wish me to provide you with any additional logs, etc.

Thank you both!

SW

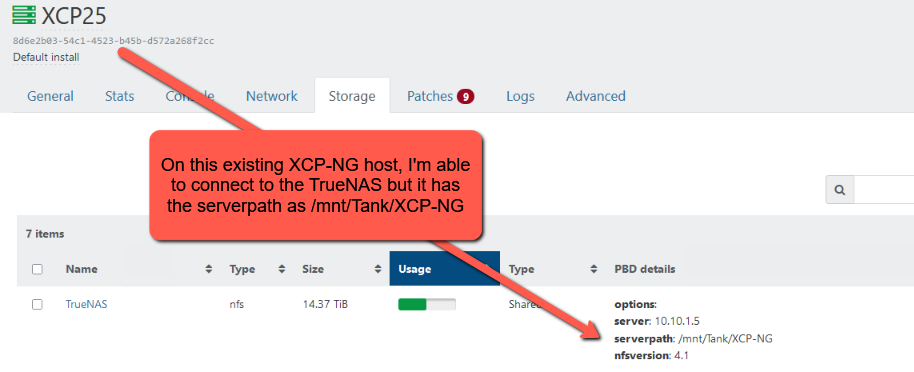

@TS79 Thanks again! The TrueNAS NFS server path I see on the existing XCP-NG hosts is /mnt/Tank/XCP-NG (see screenshots below):

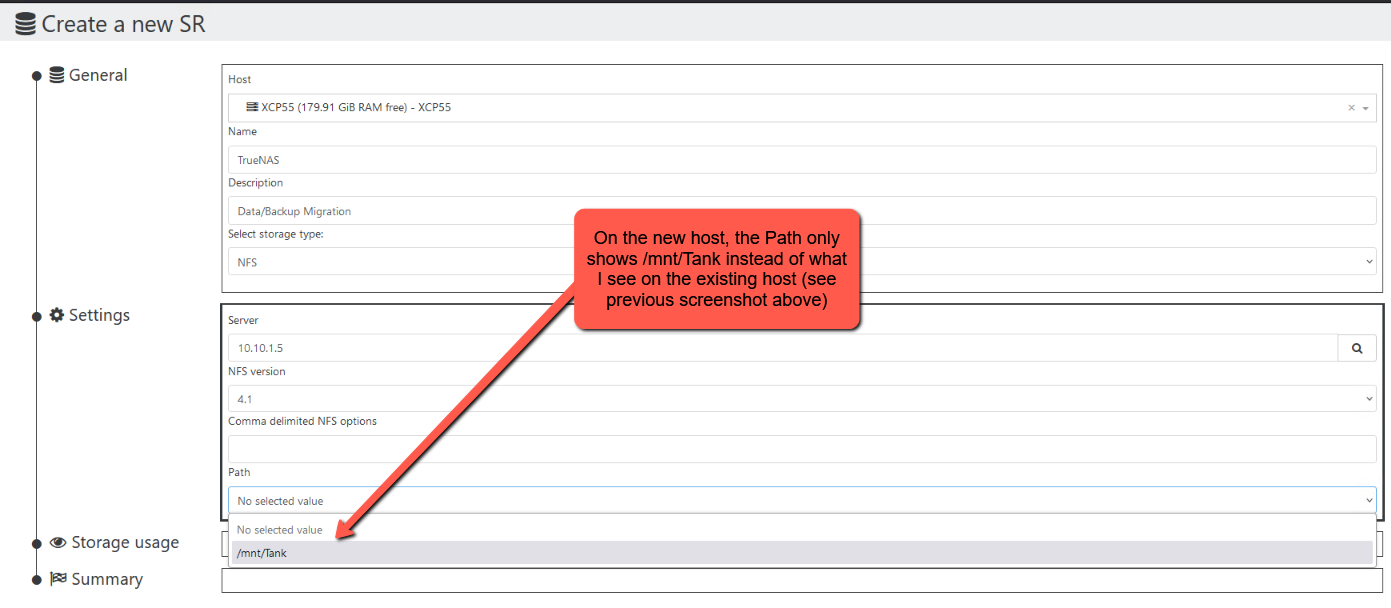

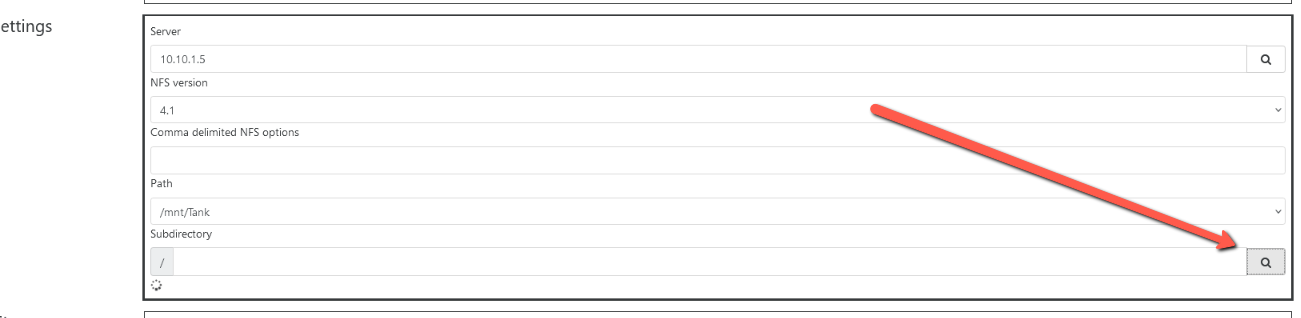

However, when I try to create this NFS SR on the new host, it only shows the server path as /mnt/Tank, like so:

Using the search icon under the Subdirectory field doesn't load anything:

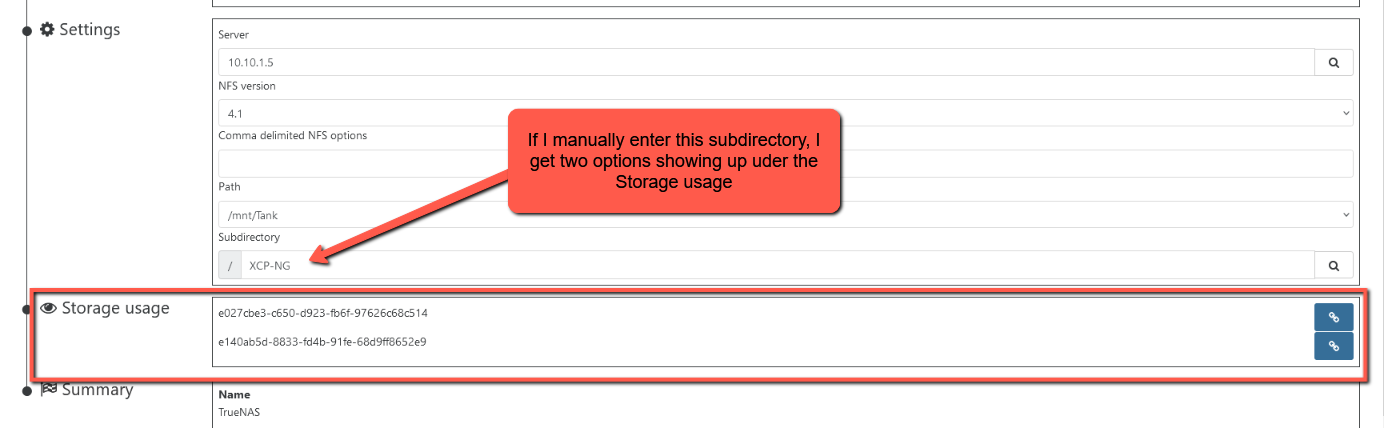

And if I manually enter XCP-NG in the Subdirectory field, I'm presented with two options under the "Storage usage":

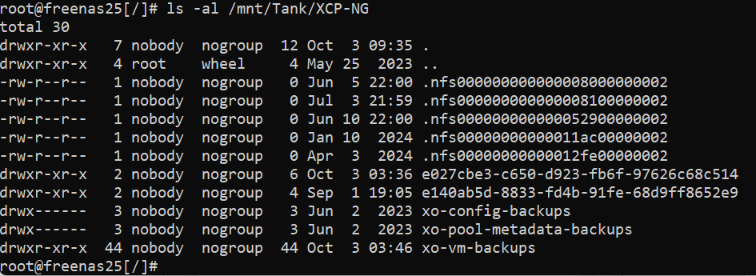

FreeNAS shows the following content in the /mnt/Tank/XCP-NG directory:

The "e027cbe3-c650-d923-fb6f-97626c68c514" and "e140ab5d-8833-fd4b-91fe-68d9ff8652e9" directories are the SRs for the existing hosts, which connect to TrueNAS via NFS with no issues.

Regarding the networking error when trying to install XO using the deploy method, I ran the following command on this host, which returned the following:

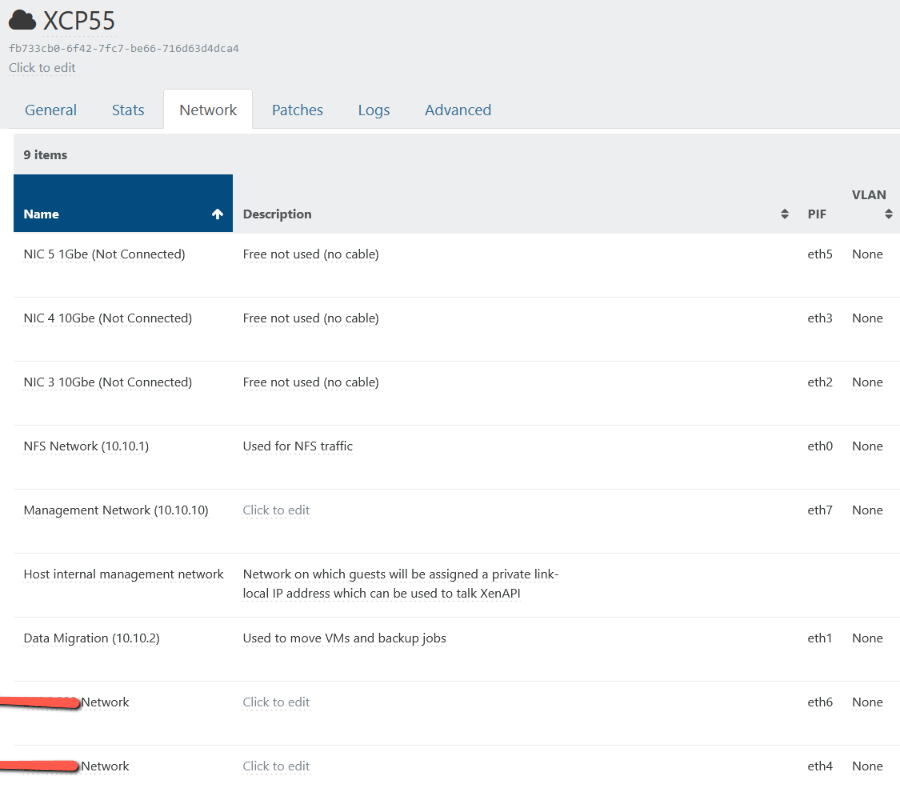

I'm not using any VLANs, and I'm not sure where to check the network configuration files, but this is what I have for this host via XOCE:

[12:24 XCP55 ~]# brctl show

bridge name bridge id STP enabled interfaces

[12:24 XCP55 ~]#

[12:24 XCP55 ~]# ip route show

default via 10.10.10.1 dev xenbr7

10.10.1.0/24 dev xenbr0 proto kernel scope link src 10.10.1.10

10.10.2.0/24 dev xenbr1 proto kernel scope link src 10.10.2.10

10.10.10.0/24 dev xenbr7 proto kernel scope link src 10.10.10.5

[12:25 XCP55 ~]#

[12:32 XCP55 ~]# ip a show

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

2: eth4: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq master ovs-system state UP group default qlen 1000

link/ether a0:36:9f:8a:18:18 brd ff:ff:ff:ff:ff:ff

3: eth5: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc mq master ovs-system state DOWN group default qlen 1000

link/ether a0:36:9f:8a:18:19 brd ff:ff:ff:ff:ff:ff

4: eth6: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq master ovs-system state UP group default qlen 1000

link/ether a0:36:9f:8a:18:1a brd ff:ff:ff:ff:ff:ff

5: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 9000 qdisc mq master ovs-system state UP group default qlen 1000

link/ether e4:43:4b:c8:51:84 brd ff:ff:ff:ff:ff:ff

6: eth7: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq master ovs-system state UP group default qlen 1000

link/ether a0:36:9f:8a:18:1b brd ff:ff:ff:ff:ff:ff

7: eth1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 9000 qdisc mq master ovs-system state UP group default qlen 1000

link/ether e4:43:4b:c8:51:85 brd ff:ff:ff:ff:ff:ff

8: eth2: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc mq master ovs-system state DOWN group default qlen 1000

link/ether e4:43:4b:c8:51:86 brd ff:ff:ff:ff:ff:ff

9: eth3: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc mq master ovs-system state DOWN group default qlen 1000

link/ether e4:43:4b:c8:51:87 brd ff:ff:ff:ff:ff:ff

10: ovs-system: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN group default qlen 1000

link/ether 1e:13:9f:39:76:f9 brd ff:ff:ff:ff:ff:ff

11: xenbr4: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UNKNOWN group default qlen 1000

link/ether a0:36:9f:8a:18:18 brd ff:ff:ff:ff:ff:ff

14: xenbr2: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UNKNOWN group default qlen 1000

link/ether e4:43:4b:c8:51:86 brd ff:ff:ff:ff:ff:ff

15: xenbr6: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UNKNOWN group default qlen 1000

link/ether a0:36:9f:8a:18:1a brd ff:ff:ff:ff:ff:ff

16: xenbr5: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UNKNOWN group default qlen 1000

link/ether a0:36:9f:8a:18:19 brd ff:ff:ff:ff:ff:ff

17: xenbr3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UNKNOWN group default qlen 1000

link/ether e4:43:4b:c8:51:87 brd ff:ff:ff:ff:ff:ff

18: xenbr7: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UNKNOWN group default qlen 1000

link/ether a0:36:9f:8a:18:1b brd ff:ff:ff:ff:ff:ff

inet 10.10.10.5/24 brd 10.10.10.255 scope global xenbr7

valid_lft forever preferred_lft forever

19: xenbr1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 9000 qdisc noqueue state UNKNOWN group default qlen 1000

link/ether e4:43:4b:c8:51:85 brd ff:ff:ff:ff:ff:ff

inet 10.10.2.10/24 brd 10.10.2.255 scope global xenbr1

valid_lft forever preferred_lft forever

20: xenbr0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 9000 qdisc noqueue state UNKNOWN group default qlen 1000

link/ether e4:43:4b:c8:51:84 brd ff:ff:ff:ff:ff:ff

inet 10.10.1.10/24 brd 10.10.1.255 scope global xenbr0

valid_lft forever preferred_lft forever
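The `ip a show` dump above is easier to compare across hosts when reduced to one entry per interface. Below is a minimal Python sketch, assuming the plain-text layout shown above (an `N: name: <flags> mtu ...` header per interface, followed by `inet` lines, with indentation possibly lost). `parse_ip_addr`, `addressed`, and `Iface` are hypothetical helpers for illustration, not part of XCP-ng or iproute2.

```python
import re
from dataclasses import dataclass, field
from typing import Dict, List, Optional

# Header line, e.g. "18: xenbr7: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UNKNOWN ..."
HEADER_RE = re.compile(r"^\d+:\s+(?P<name>[^:@\s]+)(?:@\S+)?:\s+<[^>]*>\s+mtu\s+(?P<mtu>\d+)(?P<rest>.*)$")
# IPv4 address line, e.g. "inet 10.10.10.5/24 brd 10.10.10.255 scope global xenbr7" (inet6 is ignored)
INET_RE = re.compile(r"^inet\s+(?P<cidr>\d{1,3}(?:\.\d{1,3}){3}/\d{1,2})\b")
MASTER_RE = re.compile(r"\bmaster\s+(\S+)")
STATE_RE = re.compile(r"\bstate\s+(\S+)")


@dataclass
class Iface:
    mtu: int
    master: Optional[str] = None  # e.g. "ovs-system" for NICs attached to Open vSwitch
    state: Optional[str] = None   # "UP", "DOWN", "UNKNOWN", ...
    ipv4: List[str] = field(default_factory=list)


def parse_ip_addr(text: str) -> Dict[str, Iface]:
    """Summarize plain-text `ip a show` output as {interface name: Iface}."""
    result: Dict[str, Iface] = {}
    current: Optional[Iface] = None
    for raw in text.splitlines():
        line = raw.strip()  # tolerate lost indentation, as in the forum paste
        header = HEADER_RE.match(line)
        if header:
            rest = header.group("rest")
            master = MASTER_RE.search(rest)
            state = STATE_RE.search(rest)
            current = Iface(
                mtu=int(header.group("mtu")),
                master=master.group(1) if master else None,
                state=state.group(1) if state else None,
            )
            result[header.group("name")] = current
            continue
        inet = INET_RE.match(line)
        if inet and current is not None:
            # Attach the address to the most recent interface header
            current.ipv4.append(inet.group("cidr"))
    return result


def addressed(ifaces: Dict[str, Iface]) -> Dict[str, List[str]]:
    """Keep only interfaces that carry at least one IPv4 address."""
    return {name: info.ipv4 for name, info in ifaces.items() if info.ipv4}
```

Saving the output to a file and calling `parse_ip_addr(open(path).read())` on the paste above should show IPv4 addresses only on lo, xenbr0 (10.10.1.10/24, MTU 9000), xenbr1 (10.10.2.10/24, MTU 9000) and xenbr7 (10.10.10.5/24, MTU 1500), with every ethN reporting `master ovs-system`. That suggests the xenbr* bridges are Open vSwitch bridges rather than Linux bridges, which would also explain why `brctl show` lists nothing.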

Thank you again!

SW

@olivierlambert In case it's helpful, I tailed /var/log/xensource.log while clicking the "Deploy" button on https://vates.tech/deploy:

Oct 3 11:34:23 XCP55 xapi: [debug||8800 HTTPS 10.10.10.57->:::80|VM.import R:269b6e3fdd34|audit] VM.import: url = '(url filtered)' sr='OpaqueRef:6aaef256-69c8-4928-8199-957dc4d01202' force='false'

Oct 3 11:34:23 XCP55 xapi: [debug||8800 HTTPS 10.10.10.57->:::80|VM.import R:269b6e3fdd34|import] Failed to directly open the archive; trying gzip

Oct 3 11:34:23 XCP55 xapi: [debug||8801 ||import] Writing initial buffer

Oct 3 11:34:23 XCP55 xapi: [debug||8800 HTTPS 10.10.10.57->:::80|VM.import R:269b6e3fdd34|import] Got XML

Oct 3 11:34:23 XCP55 xapi: [debug||8800 HTTPS 10.10.10.57->:::80|VM.import R:269b6e3fdd34|import] importing new style VM

Oct 3 11:34:23 XCP55 xapi: [debug||8800 HTTPS 10.10.10.57->:::80|VM.import R:269b6e3fdd34|import] Importing 0 host(s)

Oct 3 11:34:23 XCP55 xapi: [debug||8800 HTTPS 10.10.10.57->:::80|VM.import R:269b6e3fdd34|import] Importing 1 SR(s)

Oct 3 11:34:23 XCP55 xapi: [debug||8800 HTTPS 10.10.10.57->:::80|VM.import R:269b6e3fdd34|import] Importing 1 VDI(s)

Oct 3 11:34:23 XCP55 xapi: [debug||8802 /var/lib/xcp/xapi||dummytaskhelper] task dispatch:VDI.create D:2ee7d07f3c8e created by task R:269b6e3fdd34

Oct 3 11:34:23 XCP55 xapi: [ info||8802 /var/lib/xcp/xapi||taskhelper] task VDI.create R:8e2c32b2c2a9 (uuid:6c7b2409-d437-931e-bd9c-43244e13b0fc) created (trackid=e5b9c7399e413bfe02ae51306d634912) by task R:269b6e3fdd34

Oct 3 11:34:23 XCP55 xapi: [debug||8802 /var/lib/xcp/xapi|VDI.create R:8e2c32b2c2a9|audit] VDI.create: SR = '70fc7ee7-8eb2-0cca-0807-83dba8917d5c (Local storage)'; name label = 'xoa root'

Oct 3 11:34:23 XCP55 xapi: [debug||8802 /var/lib/xcp/xapi|VDI.create R:8e2c32b2c2a9|message_forwarding] Marking SR for VDI.create (task=OpaqueRef:8e2c32b2-c2a9-4537-ba4e-bfba0a8da660)

Oct 3 11:34:23 XCP55 xapi: [ info||8802 /var/lib/xcp/xapi|VDI.create R:8e2c32b2c2a9|storage_impl] VDI.create dbg:OpaqueRef:8e2c32b2-c2a9-4537-ba4e-bfba0a8da660 sr:70fc7ee7-8eb2-0cca-0807-83dba8917d5c vdi_info:{"sm_config":{"import_task":"OpaqueRef:269b6e3f-dd34-4ff0-a13b-0ceaea1d15e0"},"sharable":false,"persistent":true,"physical_utilisation":0,"virtual_size":21474836480,"cbt_enabled":false,"read_only":false,"snapshot_of":"","snapshot_time":"19700101T00:00:00Z","is_a_snapshot":false,"metadata_of_pool":"","ty":"user","name_description":"","name_label":"xoa root","content_id":"","vdi":""}

Oct 3 11:34:23 XCP55 xapi: [debug||8803 ||dummytaskhelper] task VDI.create D:530849acd382 created by task R:8e2c32b2c2a9

Oct 3 11:34:23 XCP55 xapi: [debug||8803 |VDI.create D:530849acd382|sm] SM ext vdi_create sr=OpaqueRef:6aaef256-69c8-4928-8199-957dc4d01202 sm_config=[import_task=OpaqueRef:269b6e3f-dd34-4ff0-a13b-0ceaea1d15e0] type=[user] size=21474836480

Oct 3 11:34:23 XCP55 xapi: [ info||8803 |sm_exec D:cef862198a26|xapi_session] Session.create trackid=c434cfd79956a0dc270f86eb6e3c6560 pool=false uname= originator=xapi is_local_superuser=true auth_user_sid= parent=trackid=9834f5af41c964e225f24279aefe4e49

Oct 3 11:34:23 XCP55 xapi: [debug||8804 /var/lib/xcp/xapi||dummytaskhelper] task dispatch:pool.get_all D:deba20300eeb created by task D:cef862198a26

Oct 3 11:34:24 XCP55 xapi: [debug||8805 /var/lib/xcp/xapi||dummytaskhelper] task dispatch:host.get_other_config D:977f9d72bdb3 created by task D:530849acd382

Oct 3 11:34:24 XCP55 xapi: [debug||8806 /var/lib/xcp/xapi||dummytaskhelper] task dispatch:SR.get_other_config D:6af2095e7c63 created by task D:530849acd382

Oct 3 11:34:24 XCP55 xapi: [debug||8807 /var/lib/xcp/xapi||dummytaskhelper] task dispatch:VDI.db_introduce D:6ccc8d44b069 created by task D:530849acd382

Oct 3 11:34:24 XCP55 xapi: [ info||8807 /var/lib/xcp/xapi||taskhelper] task VDI.db_introduce R:8a81dc0481ad (uuid:7bff162e-8152-64bf-bf2d-48f27858f426) created (trackid=c434cfd79956a0dc270f86eb6e3c6560) by task D:530849acd382

Oct 3 11:34:24 XCP55 xapi: [debug||8807 /var/lib/xcp/xapi|VDI.db_introduce R:8a81dc0481ad|xapi_vdi] {pool,db}_introduce uuid=5a57f11e-be24-481a-8e89-b37069585c08 name_label=xoa root

Oct 3 11:34:24 XCP55 xapi: [debug||8807 /var/lib/xcp/xapi|VDI.db_introduce R:8a81dc0481ad|xapi_vdi] VDI.introduce read_only = false

Oct 3 11:34:24 XCP55 xapi: [debug||8808 /var/lib/xcp/xapi||dummytaskhelper] task dispatch:SR.get_virtual_allocation D:dad5f8c21fac created by task D:530849acd382

Oct 3 11:34:24 XCP55 xapi: [debug||8809 /var/lib/xcp/xapi||dummytaskhelper] task dispatch:SR.get_by_uuid D:1aa3954c501b created by task D:530849acd382

Oct 3 11:34:24 XCP55 xapi: [debug||8810 /var/lib/xcp/xapi||dummytaskhelper] task dispatch:SR.set_virtual_allocation D:a8530df366e1 created by task D:530849acd382

Oct 3 11:34:24 XCP55 xapi: [debug||8811 /var/lib/xcp/xapi||dummytaskhelper] task dispatch:SR.set_physical_size D:8510cfe86103 created by task D:530849acd382

Oct 3 11:34:24 XCP55 xapi: [debug||8812 /var/lib/xcp/xapi||dummytaskhelper] task dispatch:SR.set_physical_utilisation D:2d097d044c2d created by task D:530849acd382

Oct 3 11:34:24 XCP55 xapi: [ info||8803 |sm_exec D:cef862198a26|xapi_session] Session.destroy trackid=c434cfd79956a0dc270f86eb6e3c6560

Oct 3 11:34:24 XCP55 xapi: [debug||8802 /var/lib/xcp/xapi|VDI.create R:8e2c32b2c2a9|xapi_sr] OpaqueRef:0b1d4430-214b-466a-b5df-e6246544f682 snapshot_of <- OpaqueRef:NULL

Oct 3 11:34:24 XCP55 xapi: [debug||8802 /var/lib/xcp/xapi|VDI.create R:8e2c32b2c2a9|message_forwarding] Unmarking SR after VDI.create (task=OpaqueRef:8e2c32b2-c2a9-4537-ba4e-bfba0a8da660)

Oct 3 11:34:24 XCP55 xapi: [debug||8800 HTTPS 10.10.10.57->:::80|VM.import R:269b6e3fdd34|import] Importing 1 VM_guest_metrics(s)

Oct 3 11:34:24 XCP55 xapi: [debug||8800 HTTPS 10.10.10.57->:::80|VM.import R:269b6e3fdd34|import] Importing 1 VM(s)

Oct 3 11:34:24 XCP55 xapi: [debug||8813 /var/lib/xcp/xapi||dummytaskhelper] task dispatch:VM.create D:60ff9638321b created by task R:269b6e3fdd34

Oct 3 11:34:24 XCP55 xapi: [ info||8813 /var/lib/xcp/xapi||taskhelper] task VM.create R:7c496952935c (uuid:7d96d787-dcc6-a45e-8aa7-b646df253dc7) created (trackid=e5b9c7399e413bfe02ae51306d634912) by task R:269b6e3fdd34

Oct 3 11:34:24 XCP55 xapi: [debug||8813 /var/lib/xcp/xapi|VM.create R:7c496952935c|audit] VM.create: name_label = 'XOA' name_description = 'Xen Orchestra virtual Appliance'

Oct 3 11:34:24 XCP55 xapi: [debug||8800 HTTPS 10.10.10.57->:::80|VM.import R:269b6e3fdd34|import] Created VM: OpaqueRef:fda15bd0-5dcd-44ad-8a37-5e110ce39724 (was Ref:208)

Oct 3 11:34:24 XCP55 xapi: [debug||8800 HTTPS 10.10.10.57->:::80|VM.import R:269b6e3fdd34|import] Importing 1 network(s)

Oct 3 11:34:24 XCP55 xapi: [debug||8814 /var/lib/xcp/xapi||dummytaskhelper] task dispatch:network.get_by_name_label D:54e3bcbf2532 created by task R:269b6e3fdd34

Oct 3 11:34:24 XCP55 xapi: [debug||8815 /var/lib/xcp/xapi||dummytaskhelper] task dispatch:network.get_all_records_where D:64c2c4875251 created by task R:269b6e3fdd34

Oct 3 11:34:24 XCP55 xapi: [debug||8816 /var/lib/xcp/xapi||dummytaskhelper] task dispatch:network.create D:36398824e4bc created by task R:269b6e3fdd34

Oct 3 11:34:24 XCP55 xapi: [ info||8816 /var/lib/xcp/xapi||taskhelper] task network.create R:1662dcd1a2fb (uuid:225442be-036f-5a7b-7782-97f773e6af09) created (trackid=e5b9c7399e413bfe02ae51306d634912) by task R:269b6e3fdd34

Oct 3 11:34:24 XCP55 xapi: [debug||8816 /var/lib/xcp/xapi|network.create R:1662dcd1a2fb|audit] Network.create: name_label = 'Pool-wide network associated with eth0 on VLAN11'; bridge = 'xapi1'; managed = 'true'

Oct 3 11:34:24 XCP55 xapi: [error||8816 /var/lib/xcp/xapi||backtrace] network.create R:1662dcd1a2fb failed with exception Server_error(INVALID_VALUE, [ bridge; xapi1 ])

Oct 3 11:34:24 XCP55 xapi: [error||8816 /var/lib/xcp/xapi||backtrace] Raised Server_error(INVALID_VALUE, [ bridge; xapi1 ])

Oct 3 11:34:24 XCP55 xapi: [error||8816 /var/lib/xcp/xapi||backtrace] 1/8 xapi Raised at file ocaml/xapi/xapi_network.ml, line 266

Oct 3 11:34:24 XCP55 xapi: [error||8816 /var/lib/xcp/xapi||backtrace] 2/8 xapi Called from file lib/xapi-stdext-pervasives/pervasiveext.ml, line 24

Oct 3 11:34:24 XCP55 xapi: [error||8816 /var/lib/xcp/xapi||backtrace] 3/8 xapi Called from file ocaml/xapi/rbac.ml, line 205

Oct 3 11:34:24 XCP55 xapi: [error||8816 /var/lib/xcp/xapi||backtrace] 4/8 xapi Called from file ocaml/xapi/server_helpers.ml, line 95

Oct 3 11:34:24 XCP55 xapi: [error||8816 /var/lib/xcp/xapi||backtrace] 5/8 xapi Called from file ocaml/xapi/server_helpers.ml, line 113

Oct 3 11:34:24 XCP55 xapi: [error||8816 /var/lib/xcp/xapi||backtrace] 6/8 xapi Called from file lib/xapi-stdext-pervasives/pervasiveext.ml, line 24

Oct 3 11:34:24 XCP55 xapi: [error||8816 /var/lib/xcp/xapi||backtrace] 7/8 xapi Called from file lib/xapi-stdext-pervasives/pervasiveext.ml, line 35

Oct 3 11:34:24 XCP55 xapi: [error||8816 /var/lib/xcp/xapi||backtrace] 8/8 xapi Called from file lib/backtrace.ml, line 177

Oct 3 11:34:24 XCP55 xapi: [error||8816 /var/lib/xcp/xapi||backtrace]

Oct 3 11:34:24 XCP55 xapi: [error||8800 HTTPS 10.10.10.57->:::80|VM.import R:269b6e3fdd34|import] Import failed: failed to create Network with name_label Pool-wide network associated with eth0 on VLAN11

Oct 3 11:34:24 XCP55 xapi: [error||8800 HTTPS 10.10.10.57->:::80|VM.import R:269b6e3fdd34|import] Caught exception in import: INVALID_VALUE: [ bridge; xapi1 ]

Oct 3 11:34:24 XCP55 xapi: [debug||8817 /var/lib/xcp/xapi||dummytaskhelper] task dispatch:session.slave_login D:9cb4342e73e2 created by task R:d59db384ea2e

Oct 3 11:34:24 XCP55 xapi: [ info||8817 /var/lib/xcp/xapi|session.slave_login D:8784583e6172|xapi_session] Session.create trackid=2f17fc053e7e09f323c4b973c22fc02c pool=true uname= originator=xapi is_local_superuser=true auth_user_sid= parent=trackid=9834f5af41c964e225f24279aefe4e49

Oct 3 11:34:24 XCP55 xapi: [debug||8818 /var/lib/xcp/xapi||dummytaskhelper] task dispatch:pool.get_all D:eb92071215a7 created by task D:8784583e6172

Oct 3 11:34:24 XCP55 xapi: [debug||8819 /var/lib/xcp/xapi||dummytaskhelper] task dispatch:VM.destroy D:c98e01df046f created by task R:d59db384ea2e

Oct 3 11:34:24 XCP55 xapi: [ info||8819 /var/lib/xcp/xapi||taskhelper] task VM.destroy R:ca9ded8caef3 (uuid:c93118e0-1b98-1c5d-086a-76aa5a189c2d) created (trackid=2f17fc053e7e09f323c4b973c22fc02c) by task R:d59db384ea2e

Oct 3 11:34:24 XCP55 xapi: [debug||8819 /var/lib/xcp/xapi|VM.destroy R:ca9ded8caef3|audit] VM.destroy: VM = 'e6a49727-110e-2e25-287d-b706951e9161 (XOA)'

Oct 3 11:34:24 XCP55 xapi: [debug||8819 /var/lib/xcp/xapi|VM.destroy R:ca9ded8caef3|xapi_vm_helpers] VM.destroy: deleting DB records

Oct 3 11:34:24 XCP55 xapi: [debug||8820 /var/lib/xcp/xapi||dummytaskhelper] task dispatch:VDI.destroy D:5ef6f61c2f76 created by task R:d59db384ea2e

Oct 3 11:34:24 XCP55 xapi: [ info||8820 /var/lib/xcp/xapi||taskhelper] task VDI.destroy R:ed9f5fc50a0b (uuid:b4f54201-1cf9-f46c-9f4c-19694d00d14c) created (trackid=2f17fc053e7e09f323c4b973c22fc02c) by task R:d59db384ea2e

Oct 3 11:34:24 XCP55 xapi: [debug||8820 /var/lib/xcp/xapi|VDI.destroy R:ed9f5fc50a0b|audit] VDI.destroy: VDI = '5a57f11e-be24-481a-8e89-b37069585c08'

Oct 3 11:34:24 XCP55 xapi: [debug||8820 /var/lib/xcp/xapi|VDI.destroy R:ed9f5fc50a0b|message_forwarding] Marking SR for VDI.destroy (task=OpaqueRef:ed9f5fc5-0a0b-458f-8858-247e333dc1f9)

Oct 3 11:34:24 XCP55 xapi: [ info||8820 /var/lib/xcp/xapi|VDI.destroy R:ed9f5fc50a0b|storage_impl] VDI.destroy dbg:OpaqueRef:ed9f5fc5-0a0b-458f-8858-247e333dc1f9 sr:70fc7ee7-8eb2-0cca-0807-83dba8917d5c vdi:5a57f11e-be24-481a-8e89-b37069585c08

Oct 3 11:34:24 XCP55 xapi: [debug||8821 ||dummytaskhelper] task VDI.destroy D:a94b9e39269f created by task R:ed9f5fc50a0b

Oct 3 11:34:24 XCP55 xapi: [debug||8821 |VDI.destroy D:a94b9e39269f|sm] SM ext vdi_delete sr=OpaqueRef:6aaef256-69c8-4928-8199-957dc4d01202 vdi=OpaqueRef:0b1d4430-214b-466a-b5df-e6246544f682

Oct 3 11:34:24 XCP55 xapi: [ info||8821 |sm_exec D:73be5872978b|xapi_session] Session.create trackid=94d13e7939f10383fc10c4f3503f9c6f pool=false uname= originator=xapi is_local_superuser=true auth_user_sid= parent=trackid=9834f5af41c964e225f24279aefe4e49

Oct 3 11:34:24 XCP55 xapi: [debug||8822 /var/lib/xcp/xapi||dummytaskhelper] task dispatch:pool.get_all D:613297a97154 created by task D:73be5872978b

Oct 3 11:34:24 XCP55 xapi: [debug||8823 /var/lib/xcp/xapi||dummytaskhelper] task dispatch:host.get_other_config D:4e078575ee73 created by task D:a94b9e39269f

Oct 3 11:34:24 XCP55 xapi: [debug||8824 /var/lib/xcp/xapi||dummytaskhelper] task dispatch:SR.get_other_config D:8c72ca70ce0b created by task D:a94b9e39269f

Oct 3 11:34:24 XCP55 xapi: [ info||8825 /var/lib/xcp/xapi|session.login_with_password D:133bcd0ae16f|xapi_session] Session.create trackid=ae9ea8a24a7e9e3eaea57666905e955f pool=false uname=root originator=SM is_local_superuser=true auth_user_sid= parent=trackid=9834f5af41c964e225f24279aefe4e49

Oct 3 11:34:24 XCP55 xapi: [debug||8826 /var/lib/xcp/xapi||dummytaskhelper] task dispatch:pool.get_all D:a3ee39a6ee41 created by task D:133bcd0ae16f

Oct 3 11:34:24 XCP55 xapi: [ info||8834 /var/lib/xcp/xapi|session.logout D:0ea100b8985d|xapi_session] Session.destroy trackid=ae9ea8a24a7e9e3eaea57666905e955f

Oct 3 11:34:24 XCP55 xapi: [debug||8835 /var/lib/xcp/xapi||dummytaskhelper] task dispatch:VDI.get_by_uuid D:c427804d38ef created by task D:a94b9e39269f

Oct 3 11:34:24 XCP55 xapi: [debug||8836 /var/lib/xcp/xapi||dummytaskhelper] task dispatch:VDI.db_forget D:b3211d50868f created by task D:a94b9e39269f

Oct 3 11:34:24 XCP55 xapi: [ info||8836 /var/lib/xcp/xapi||taskhelper] task VDI.db_forget R:0444b480c4f2 (uuid:c1e60f49-fbf6-a978-02fc-89b7eb2eba83) created (trackid=94d13e7939f10383fc10c4f3503f9c6f) by task D:a94b9e39269f

Oct 3 11:34:24 XCP55 xapi: [debug||8836 /var/lib/xcp/xapi|VDI.db_forget R:0444b480c4f2|xapi_vdi] db_forget uuid=5a57f11e-be24-481a-8e89-b37069585c08

Oct 3 11:34:24 XCP55 xapi: [debug||8837 /var/lib/xcp/xapi||dummytaskhelper] task dispatch:SR.get_virtual_allocation D:c00facb0a49c created by task D:a94b9e39269f

Oct 3 11:34:24 XCP55 xapi: [debug||8838 /var/lib/xcp/xapi||dummytaskhelper] task dispatch:SR.get_by_uuid D:7b3016885891 created by task D:a94b9e39269f

Oct 3 11:34:24 XCP55 xapi: [debug||8839 /var/lib/xcp/xapi||dummytaskhelper] task dispatch:SR.set_virtual_allocation D:efa488742d4c created by task D:a94b9e39269f

Oct 3 11:34:24 XCP55 xapi: [debug||8840 /var/lib/xcp/xapi||dummytaskhelper] task dispatch:SR.set_physical_size D:3d15163a9337 created by task D:a94b9e39269f

Oct 3 11:34:24 XCP55 xapi: [debug||8841 /var/lib/xcp/xapi||dummytaskhelper] task dispatch:SR.set_physical_utilisation D:13d788b1bcc6 created by task D:a94b9e39269f

Oct 3 11:34:24 XCP55 xapi: [ info||8842 /var/lib/xcp/xapi|session.login_with_password D:32d41049cdd7|xapi_session] Session.create trackid=c1a91205d46beaa8fd0f72c871cc7890 pool=false uname=root originator=SM is_local_superuser=true auth_user_sid= parent=trackid=9834f5af41c964e225f24279aefe4e49

Oct 3 11:34:24 XCP55 xapi: [ info||8843 /var/lib/xcp/xapi|session.login_with_password D:91f9a8162270|xapi_session] Session.create trackid=4255185b7b399215999d881ccfd9bd69 pool=false uname=root originator=SM is_local_superuser=true auth_user_sid= parent=trackid=9834f5af41c964e225f24279aefe4e49

Oct 3 11:34:24 XCP55 xapi: [debug||8844 /var/lib/xcp/xapi||dummytaskhelper] task dispatch:pool.get_all D:bbc704b86901 created by task D:32d41049cdd7

Oct 3 11:34:24 XCP55 xapi: [debug||8845 /var/lib/xcp/xapi||dummytaskhelper] task dispatch:pool.get_all D:2e27b0f38226 created by task D:91f9a8162270

Oct 3 11:34:24 XCP55 xapi: [ info||8859 /var/lib/xcp/xapi|session.logout D:77ab4a50a888|xapi_session] Session.destroy trackid=c1a91205d46beaa8fd0f72c871cc7890

Oct 3 11:34:24 XCP55 xapi: [ info||8860 /var/lib/xcp/xapi|session.logout D:3a423443cf9e|xapi_session] Session.destroy trackid=4255185b7b399215999d881ccfd9bd69

Oct 3 11:34:24 XCP55 xapi: [ info||8861 /var/lib/xcp/xapi|session.login_with_password D:ad7636f29052|xapi_session] Session.create trackid=22e9b2dee5433c30a4a7a7cfe803e354 pool=false uname=root originator=SM is_local_superuser=true auth_user_sid= parent=trackid=9834f5af41c964e225f24279aefe4e49

Oct 3 11:34:24 XCP55 xapi: [debug||8862 /var/lib/xcp/xapi||dummytaskhelper] task dispatch:pool.get_all D:14b2b200097d created by task D:ad7636f29052

Oct 3 11:34:24 XCP55 xapi: [ info||8821 |sm_exec D:73be5872978b|xapi_session] Session.destroy trackid=94d13e7939f10383fc10c4f3503f9c6f

Oct 3 11:34:24 XCP55 xapi: [debug||8820 /var/lib/xcp/xapi|VDI.destroy R:ed9f5fc50a0b|message_forwarding] Unmarking SR after VDI.destroy (task=OpaqueRef:ed9f5fc5-0a0b-458f-8858-247e333dc1f9)

Oct 3 11:34:24 XCP55 xapi: [debug||8872 /var/lib/xcp/xapi||dummytaskhelper] task dispatch:session.logout D:1554b9af87b0 created by task R:d59db384ea2e

Oct 3 11:34:24 XCP55 xapi: [ info||8872 /var/lib/xcp/xapi|session.logout D:3189c746e134|xapi_session] Session.destroy trackid=2f17fc053e7e09f323c4b973c22fc02c

Oct 3 11:34:24 XCP55 xapi: [ warn||8801 ||pervasiveext] finally: Error while running cleanup after failure of main function: (Failure "Decompression via zcat failed: exit code 1")

Oct 3 11:34:24 XCP55 xapi: [error||8800 :::80||backtrace] VM.import R:269b6e3fdd34 failed with exception Server_error(INVALID_VALUE, [ bridge; xapi1 ])

Oct 3 11:34:24 XCP55 xapi: [error||8800 :::80||backtrace] Raised Server_error(INVALID_VALUE, [ bridge; xapi1 ])

Oct 3 11:34:24 XCP55 xapi: [error||8800 :::80||backtrace] 1/22 xapi Raised at file ocaml/xapi-client/client.ml, line 7

Oct 3 11:34:24 XCP55 xapi: [error||8800 :::80||backtrace] 2/22 xapi Called from file ocaml/xapi-client/client.ml, line 19

Oct 3 11:34:24 XCP55 xapi: [error||8800 :::80||backtrace] 3/22 xapi Called from file ocaml/xapi-client/client.ml, line 9676

Oct 3 11:34:24 XCP55 xapi: [error||8800 :::80||backtrace] 4/22 xapi Called from file ocaml/xapi/import.ml, line 93

Oct 3 11:34:24 XCP55 xapi: [error||8800 :::80||backtrace] 5/22 xapi Called from file ocaml/xapi/import.ml, line 97

Oct 3 11:34:24 XCP55 xapi: [error||8800 :::80||backtrace] 6/22 xapi Called from file ocaml/xapi/import.ml, line 1136

Oct 3 11:34:24 XCP55 xapi: [error||8800 :::80||backtrace] 7/22 xapi Called from file list.ml, line 110

Oct 3 11:34:24 XCP55 xapi: [error||8800 :::80||backtrace] 8/22 xapi Called from file list.ml, line 110

Oct 3 11:34:24 XCP55 xapi: [error||8800 :::80||backtrace] 9/22 xapi Called from file ocaml/xapi/import.ml, line 1861

Oct 3 11:34:24 XCP55 xapi: [error||8800 :::80||backtrace] 10/22 xapi Called from file ocaml/xapi/import.ml, line 1881

Oct 3 11:34:24 XCP55 xapi: [error||8800 :::80||backtrace] 11/22 xapi Called from file ocaml/xapi/import.ml, line 2189

Oct 3 11:34:24 XCP55 xapi: [error||8800 :::80||backtrace] 12/22 xapi Called from file lib/xapi-stdext-pervasives/pervasiveext.ml, line 24

Oct 3 11:34:24 XCP55 xapi: [error||8800 :::80||backtrace] 13/22 xapi Called from file lib/xapi-stdext-pervasives/pervasiveext.ml, line 35

Oct 3 11:34:24 XCP55 xapi: [error||8800 :::80||backtrace] 14/22 xapi Called from file lib/open_uri.ml, line 20

Oct 3 11:34:24 XCP55 xapi: [error||8800 :::80||backtrace] 15/22 xapi Called from file lib/open_uri.ml, line 20

Oct 3 11:34:24 XCP55 xapi: [error||8800 :::80||backtrace] 16/22 xapi Called from file lib/xapi-stdext-pervasives/pervasiveext.ml, line 24

Oct 3 11:34:24 XCP55 xapi: [error||8800 :::80||backtrace] 17/22 xapi Called from file ocaml/xapi/rbac.ml, line 205

Oct 3 11:34:24 XCP55 xapi: [error||8800 :::80||backtrace] 18/22 xapi Called from file ocaml/xapi/server_helpers.ml, line 95

Oct 3 11:34:24 XCP55 xapi: [error||8800 :::80||backtrace] 19/22 xapi Called from file ocaml/xapi/server_helpers.ml, line 113

Oct 3 11:34:24 XCP55 xapi: [error||8800 :::80||backtrace] 20/22 xapi Called from file lib/xapi-stdext-pervasives/pervasiveext.ml, line 24

Oct 3 11:34:24 XCP55 xapi: [error||8800 :::80||backtrace] 21/22 xapi Called from file lib/xapi-stdext-pervasives/pervasiveext.ml, line 35

Oct 3 11:34:24 XCP55 xapi: [error||8800 :::80||backtrace] 22/22 xapi Called from file lib/backtrace.ml, line 177

Oct 3 11:34:24 XCP55 xapi: [error||8800 :::80||backtrace]

Oct 3 11:34:24 XCP55 xapi: [ info||8873 /var/lib/xcp/xapi|session.logout D:18cedef1668a|xapi_session] Session.destroy trackid=22e9b2dee5433c30a4a7a7cfe803e354
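Most of the trace above is routine task bookkeeping; the actual failure sits in a handful of `error` lines. Below is a minimal Python sketch for pulling those out of a saved copy of /var/log/xensource.log, assuming the line format shown above. `server_errors` and `import_failures` are hypothetical helpers for illustration, not xapi tools.

```python
import re
from typing import Iterable, List, Tuple

# xapi logs API failures as e.g. "Server_error(INVALID_VALUE, [ bridge; xapi1 ])"
SERVER_ERROR_RE = re.compile(r"Server_error\((?P<code>[A-Z0-9_]+),\s*\[(?P<params>[^\]]*)\]\)")
# VM.import logs its top-level reason after this marker
IMPORT_FAILED = "Import failed:"


def server_errors(lines: Iterable[str]) -> List[Tuple[str, Tuple[str, ...]]]:
    """Distinct (error code, parameters) pairs, in the order they first appear."""
    seen: List[Tuple[str, Tuple[str, ...]]] = []
    for line in lines:
        for m in SERVER_ERROR_RE.finditer(line):
            # Parameters are ';'-separated inside the brackets
            params = tuple(p.strip() for p in m.group("params").split(";") if p.strip())
            key = (m.group("code"), params)
            if key not in seen:
                seen.append(key)
    return seen


def import_failures(lines: Iterable[str]) -> List[str]:
    """Reasons given on VM.import 'Import failed:' lines."""
    return [line.split(IMPORT_FAILED, 1)[1].strip() for line in lines if IMPORT_FAILED in line]
```

Read the log into a list first (for example `lines = open("/var/log/xensource.log", errors="replace").readlines()`) so both helpers can scan it. On the excerpt above, `server_errors` should yield the single pair `("INVALID_VALUE", ("bridge", "xapi1"))` and `import_failures` the reason "failed to create Network with name_label Pool-wide network associated with eth0 on VLAN11". In other words, the XOA import aborted when `network.create` rejected the bridge name `xapi1` for a VLAN11 network carried in the appliance image, after which the partially imported VM and its VDI were destroyed.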