SR Garbage Collection running permanently

-

@Danp

The VDIs were migrated from 8.2 to 8.3 without snapshots, because of CBT.

Other pools were migrated the same way and don't have this issue. I can't think of anything special that would explain why this happens.

-

@Tristis-Oris There's a fix for the timeout during leaf coalesce on XCP-ng 8.3 that should be released soon. You can install it now with the following command:

`yum install https://updates.xcp-ng.org/8/8.3/testing/x86_64/Packages/sm-3.2.3-1.15.xcpng8.3.x86_64.rpm https://updates.xcp-ng.org/8/8.3/testing/x86_64/Packages/sm-fairlock-3.2.3-1.15.xcpng8.3.x86_64.rpm`

-

@Danp Is a reboot required?

-

I have run into this numerous times. It's one of the reasons I haven't switched all my jobs to "Purge Snapshot data when using CBT" yet.

I hope the fixes in testing solve the issue. In the meantime, what has fixed it for me is modifying the following:

Edit `/opt/xensource/sm/cleanup.py`: modify `LIVE_LEAF_COALESCE_MAX_SIZE` and `LIVE_LEAF_COALESCE_TIMEOUT` to the following values:

- `LIVE_LEAF_COALESCE_MAX_SIZE = 1024 * 1024 * 1024  # bytes`
- `LIVE_LEAF_COALESCE_TIMEOUT = 300  # seconds`

-

@Tristis-Oris Not AFAIK, but it wouldn't hurt to make sure the latest fixes are running.

-

- Installed the patch and rebooted the pool.

- A GC job started during the restart and got stuck at 0%, so I restarted the toolstack again.

- Now nothing is running, and the bad snapshots haven't disappeared.

Should I wait longer, or is there something else to do?

-

@Tristis-Oris Did you install the patch on all of the hosts in the pool? Have you tried rescanning an SR to kick off the GC process?
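
A minimal sketch for the first question, assuming you have already collected the output of `rpm -q sm` from every host in the pool (for example over SSH; collection isn't shown). The expected string is the build from the `yum install` command earlier in this thread; the helper name `hosts_missing_patch` and the sample host names are hypothetical. The same check can be repeated for `sm-fairlock`.

```python
from typing import Dict, List

# Build installed by the `yum install` command earlier in this thread.
EXPECTED_SM = "sm-3.2.3-1.15.xcpng8.3"


def hosts_missing_patch(rpm_output_by_host: Dict[str, str],
                        expected: str = EXPECTED_SM) -> List[str]:
    """Return the hosts whose `rpm -q sm` output is not the expected build."""
    missing = []
    for host, output in rpm_output_by_host.items():
        # rpm -q prints name-version-release.arch, e.g. "...xcpng8.3.x86_64";
        # anything else (older build, "package sm is not installed") is flagged.
        if not output.strip().startswith(expected + "."):
            missing.append(host)
    return sorted(missing)


if __name__ == "__main__":
    collected = {  # hypothetical hosts and outputs
        "host1": "sm-3.2.3-1.15.xcpng8.3.x86_64\n",
        "host2": "sm-3.2.3-1.14.xcpng8.3.x86_64\n",
    }
    print(hosts_missing_patch(collected))  # ['host2']
```

It checks for an exact build, so a later sm release would also be flagged; that is deliberate for a "same patch everywhere" check.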

-

@Danp After some time, a GC task started automatically and has already been running for an hour. It's still at about 50%.
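
To follow a long-running task like this from the CLI, one option is to parse the output of `xe task-list`. This is a sketch assuming xe's usual human-readable layout (`name ( RO): value` lines, one blank-line-separated record per task); the regex and the function names are assumptions, not a documented interface. XAPI's task `progress` field is a fraction from 0.0 to 1.0, so a value of 0.500 corresponds to the ~50% seen here.

```python
import re
from typing import Dict, List, Tuple

# xe prints records as "name ( RO): value" lines, blank-line separated.
# RW and map fields (MRO/MRW) use the same layout; this regex is an assumption
# based on typical `xe task-list` output, not a documented format.
FIELD_RE = re.compile(r"^\s*([\w-]+) \(\s?M?R[OW]\)\s*:\s?(.*)$")


def parse_xe_records(output: str) -> List[Dict[str, str]]:
    """Split xe's human-readable list output into one dict per record."""
    records: List[Dict[str, str]] = []
    current: Dict[str, str] = {}
    for line in output.splitlines():
        if not line.strip():
            # A blank line ends the current record
            if current:
                records.append(current)
                current = {}
            continue
        match = FIELD_RE.match(line)
        if match:
            current[match.group(1)] = match.group(2).strip()
    if current:
        records.append(current)
    return records


def task_progress(output: str) -> List[Tuple[str, float]]:
    """Return (name-label, percent complete) for each task reporting progress."""
    result = []
    for rec in parse_xe_records(output):
        if "progress" in rec:
            # XAPI reports progress as a fraction between 0.0 and 1.0
            result.append((rec.get("name-label", "?"), float(rec["progress"]) * 100))
    return result
```

On a host, it could be fed with `subprocess.run(["xe", "task-list"], stdout=subprocess.PIPE, universal_newlines=True).stdout`.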

-

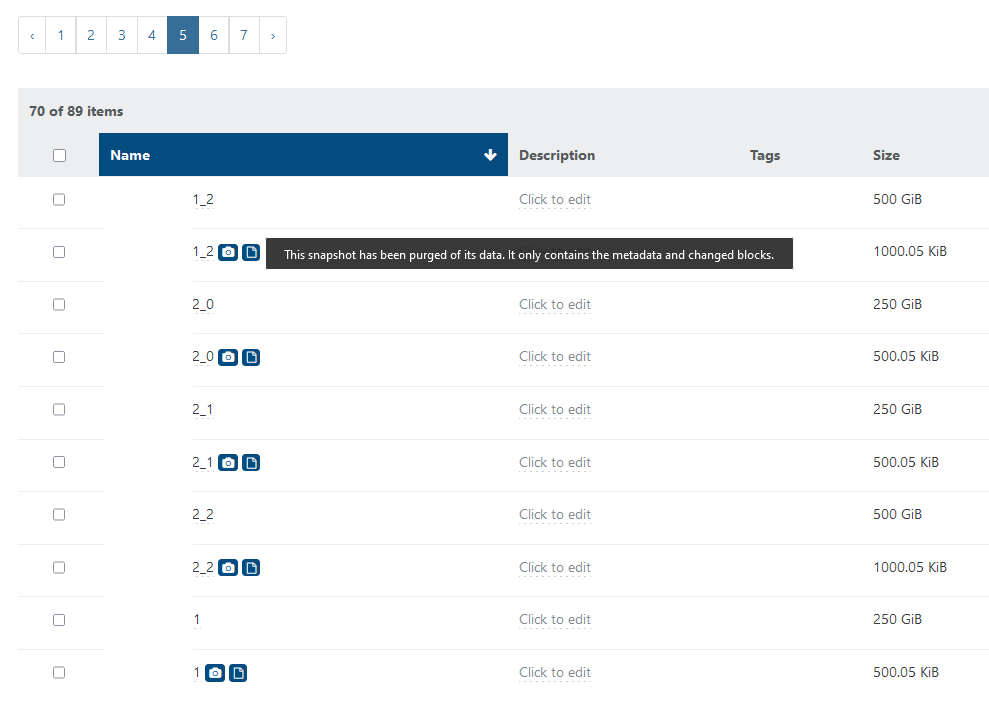

@Tristis-Oris GC done, ~5 items removed, ~20 left.

-

Good, so it's working.

-

@olivierlambert Is there a limit on how many items are removed per run?

-

The GC processes one chain after another. We told the XenServer team back in 2016 that it could probably merge multiple chains at once, but they told us it was too risky, so we didn't focus on that. Patience is key there. Clearly, we'll do better in the future.

-

@olivierlambert Got it. I'll see what happens over the next few days.

-

It will speed up. The first merges are the slowest, but after that it goes faster and faster.

-

After 2 days and a few backup cycles, the number of snapshots still isn't decreasing.

-

@Tristis-Oris Check `SMlog` for further exceptions.

-

@Tristis-Oris Note that if the SR storage device is around 90% full or more, a coalesce may not work. You have to either delete or move enough data so that there is adequate free space.
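
A quick way to put a number on "90% or more full": a sketch assuming you read the SR's `physical-size` and `physical-utilisation` fields (both in bytes), e.g. with `xe sr-param-get uuid=<sr-uuid> param-name=physical-utilisation`. The 90% cut-off is the rule of thumb from this post, not a documented GC limit, and the function names are hypothetical.

```python
# Sketch: flag an SR that is too full for a coalesce to be likely to succeed.
# Inputs are the SR's physical-size and physical-utilisation fields (bytes).
# The 90% default is this thread's rule of thumb (assumption), not a GC constant.


def sr_usage_percent(physical_size: int, physical_utilisation: int) -> float:
    """Return used space as a percentage of the SR's physical size."""
    if physical_size <= 0:
        raise ValueError("physical_size must be positive")
    return 100.0 * physical_utilisation / physical_size


def coalesce_space_ok(physical_size: int, physical_utilisation: int,
                      threshold_percent: float = 90.0) -> bool:
    """True if usage is below the threshold (90% by default)."""
    return sr_usage_percent(physical_size, physical_utilisation) < threshold_percent


if __name__ == "__main__":
    size = 1000 * 1024**3  # hypothetical 1000 GiB SR
    used = 950 * 1024**3   # 950 GiB used
    print(round(sr_usage_percent(size, used), 1))  # 95.0
    print(coalesce_space_ok(size, used))           # False
```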

How full is the SR? That said, a coalesce can take up to 24 hours to complete. I wonder whether it shows up, and with what progress, when you run `xe task-list`?

-

@Danp No, I can't find any exceptions.

This is the typical log right now; it repeats many times:

    Jan 20 00:02:00 host SM: [2736362] Kicking GC
    Jan 20 00:02:00 host SM: [2736362] Kicking SMGC@93d53646-e895-52cf-7c8e-df1d5e84f5e4...
    Jan 20 00:02:00 host SM: [2736362] utilisation 40394752 <> 34451456
    *
    Jan 20 00:02:00 host SM: [2736362] VDIs changed on disk: ['34fa9f2d-95fa-468e-986c-ade22b92b1f3', '56b94e20-01ae-4da1-99f8-03aa901da64f', 'a75c6e7b-7f8d-4a4b-99$
    Jan 20 00:02:00 host SM: [2736362] Updating VDI with location=34fa9f2d-95fa-468e-986c-ade22b92b1f3 uuid=34fa9f2d-95fa-468e-986c-ade22b92b1f3
    *
    Jan 20 00:02:00 host SM: [2736362] lock: released /var/lock/sm/93d53646-e895-52cf-7c8e-df1d5e84f5e4/sr
    Jan 20 00:02:00 host SMGC: [2736466] === SR 93d53646-e895-52cf-7c8e-df1d5e84f5e4: gc ===
    Jan 20 00:02:00 host SM: [2736466] lock: opening lock file /var/lock/sm/93d53646-e895-52cf-7c8e-df1d5e84f5e4/running
    *
    Jan 20 00:02:00 host SMGC: [2736466] Found 0 cache files
    Jan 20 00:02:00 host SM: [2736466] lock: tried lock /var/lock/sm/93d53646-e895-52cf-7c8e-df1d5e84f5e4/sr, acquired: True (exists: True)
    Jan 20 00:02:00 host SM: [2736466] ['/usr/bin/vhd-util', 'scan', '-f', '-m', '/var/run/sr-mount/93d53646-e895-52cf-7c8e-df1d5e84f5e4/*.vhd']
    Jan 20 00:02:00 host SM: [2736614] lock: opening lock file /var/lock/sm/93d53646-e895-52cf-7c8e-df1d5e84f5e4/sr
    Jan 20 00:02:00 host SM: [2736614] sr_update {'host_ref': 'OpaqueRef:3570b538-189d-6a16-fe61-f6d73cc545dc', 'command': 'sr_update', 'args': [], 'device_config':$
    Jan 20 00:02:00 host SM: [2736614] lock: closed /var/lock/sm/93d53646-e895-52cf-7c8e-df1d5e84f5e4/sr
    Jan 20 00:02:01 host SM: [2736466] pread SUCCESS
    *
    Jan 20 00:02:01 host SMGC: [2736466] SR 93d5 ('VM Sol flash') (73 VDIs in 39 VHD trees):
    Jan 20 00:02:01 host SMGC: [2736466] *70119dcb(50.000G/45.497G?)
    Jan 20 00:02:01 host SMGC: [2736466] e6a3e53a(50.000G/107.500K?)
    *
    Jan 20 00:02:01 host SM: [2736466] lock: released /var/lock/sm/93d53646-e895-52cf-7c8e-df1d5e84f5e4/sr
    Jan 20 00:02:01 host SMGC: [2736466] Got sm-config for *70119dcb(50.000G/45.497G?): {'vhd-blocks': 'eJzFlrFuwjAQhk/Kg5R36Ngq5EGQyJSsHTtUPj8WA4M3GDrwBvEEDB2yEaQQ$
    *
    Jan 20 00:02:01 host SMGC: [2736466] No work, exiting
    Jan 20 00:02:01 host SMGC: [2736466] GC process exiting, no work left
    Jan 20 00:02:01 host SM: [2736466] lock: released /var/lock/sm/93d53646-e895-52cf-7c8e-df1d5e84f5e4/gc_active
    Jan 20 00:02:01 host SMGC: [2736466] In cleanup
    Jan 20 00:02:01 host SMGC: [2736466] SR 93d5 ('VM Sol flash') (73 VDIs in 39 VHD trees): no changes
    Jan 20 00:02:01 host SM: [2736466] lock: closed /var/lock/sm/93d53646-e895-52cf-7c8e-df1d5e84f5e4/running

@tjkreidl Free space is sufficient. I never see a GC job running for long, so I've never seen one on the other pools or after the fix. There's no coalesce in the queue.
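
The SMlog excerpt above shows one complete GC pass (SMGC PID 2736466): it scans 73 VDIs in 39 VHD trees and exits with "No work, exiting". To see how often that happens, and whether any pass logs an exception, a sketch like the following can summarize SMlog per SMGC PID. It assumes the message wording matches this excerpt and treats SMGC lines containing "Exception" or "Traceback" as errors; both are assumptions, and `summarize_gc_passes` is a hypothetical name.

```python
import re
from typing import Dict, Iterable

# Message formats copied from the SMlog excerpt in this thread; other sm
# versions may word them differently (assumption).
PID_RE = re.compile(r"SMGC: \[(\d+)\]")
TREES_RE = re.compile(r"\((\d+) VDIs in (\d+) VHD trees\)")
ERROR_MARKERS = ("Exception", "Traceback")  # assumed error indicators


def summarize_gc_passes(lines: Iterable[str]) -> Dict[str, dict]:
    """Group SMGC lines by PID; record VDI/tree counts, 'No work' exits, errors."""
    passes: Dict[str, dict] = {}
    for line in lines:
        pid = PID_RE.search(line)
        if not pid:
            continue  # only SMGC-tagged lines are considered
        info = passes.setdefault(
            pid.group(1), {"vdis": None, "trees": None, "no_work": False, "errors": 0})
        trees = TREES_RE.search(line)
        if trees:
            info["vdis"], info["trees"] = int(trees.group(1)), int(trees.group(2))
        if "No work, exiting" in line:
            info["no_work"] = True
        if any(marker in line for marker in ERROR_MARKERS):
            info["errors"] += 1
    return passes


if __name__ == "__main__":
    sample = [
        "Jan 20 00:02:01 host SMGC: [2736466] SR 93d5 ('VM Sol flash') (73 VDIs in 39 VHD trees):",
        "Jan 20 00:02:01 host SMGC: [2736466] No work, exiting",
        "Jan 20 00:02:01 host SM: [2736466] lock: released /var/lock/sm/93d53646-e895-52cf-7c8e-df1d5e84f5e4/gc_active",
    ]
    print(summarize_gc_passes(sample))
    # {'2736466': {'vdis': 73, 'trees': 39, 'no_work': True, 'errors': 0}}
```

On a host, `with open("/var/log/SMlog") as f: print(summarize_gc_passes(f))` gives one entry per GC pass; many passes with `no_work: True` and unchanged counts match what is described here.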

`xe task-list` shows nothing. Maybe I need to clean up the bad VDIs manually the first time?

-

@Tristis-Oris It wouldn't hurt to do a manual cleanup. I'm not sure whether a reboot would help, but it's strange that no task shows as active. Do you have other SRs on which you can try a scan/coalesce?
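
If you do try scans on several SRs, a small sketch like this runs `xe sr-scan uuid=<uuid>` for each UUID you pass (`xe sr-list --minimal` prints the UUIDs comma-separated). Whether a scan actually kicks off the GC is exactly what's in doubt in this thread, so check SMlog afterwards for a `Kicking GC` line. The function names are hypothetical, and `run` is injectable so the commands can be checked without a pool.

```python
import subprocess
from typing import Callable, List, Sequence


def sr_scan_commands(sr_uuids: Sequence[str]) -> List[List[str]]:
    """Build one `xe sr-scan uuid=<uuid>` argument list per SR."""
    return [["xe", "sr-scan", "uuid=" + uuid] for uuid in sr_uuids]


def scan_srs(sr_uuids: Sequence[str], run: Callable = subprocess.run) -> None:
    """Run `xe sr-scan` for each SR in turn; raises if a scan command fails."""
    for cmd in sr_scan_commands(sr_uuids):
        # check=True makes subprocess.run raise CalledProcessError on failure
        run(cmd, check=True)
```

For example, `scan_srs(["<sr-uuid-1>", "<sr-uuid-2>"])` on a pool host scans the SRs one after another, stopping at the first failure.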

Are there any VMs in a weird power state?

-

@tjkreidl Nothing unusual.

I found the same issue on another 8.3 pool, on a different SR, but I've never seen any related GC tasks. No exceptions in the log.

But there are no problems on a third 8.3 pool. An SR scan doesn't trigger the GC; can I run it manually?