Our future backup code: test it!

-

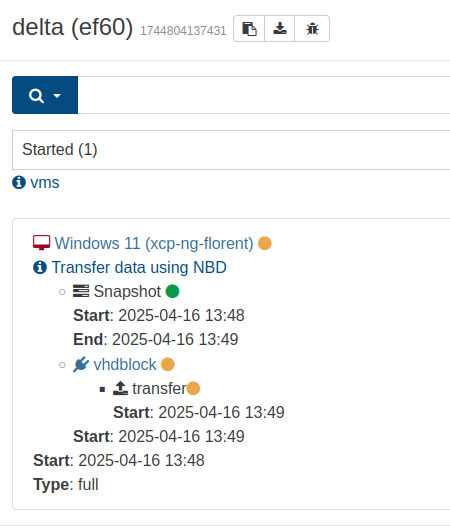

1 VM, 1 storage, NBD connections: 1. Delta backup, first full run.

Duration: 3 minutes

Size: 26.54 GiB

Speed: 160.71 MiB/s

Duration: 4 minutes

Size: 26.53 GiB

Speed: 113.74 MiB/s

-

@Tristis-Oris Do you have the same performance without NBD?

Does your storage use blocks?

-

@florent Blocks! I forgot about that checkbox :)

Better, but not the same.

Duration: 3 minutes

Size: 26.53 GiB

Speed: 143.7 MiB/s

Speed: 146.09 MiB/s

-

@Tristis-Oris I made a small change. Can you update (like last time) and retest?

-

@florent

Same speed after the fix.

142.56 MiB/s - 145.63 MiB/s. Maybe I'm missing something else? Same database as prod, only another LUN connected for backups.

-

@Tristis-Oris No, it's on our end.

Could you retry with NBD and target a block-based directory?

On my test setup, with the latest changes I get better speed than master (190 MB/s per disk vs 130-170 MB/s on master, depending on the run and settings). I got quite a large variation between identical runs (40 MB/s).

-

@florent Yep, now it's equal. Maybe it's a hardware bottleneck on my side? I can also check with SSD storage to see the max speed.

Duration: 3 minutes

Size: 26.53 GiB

Speed: 157.78 MiB/s

Speed: 149.39 MiB/s

Speed: 163.76 MiB/s

No more errors

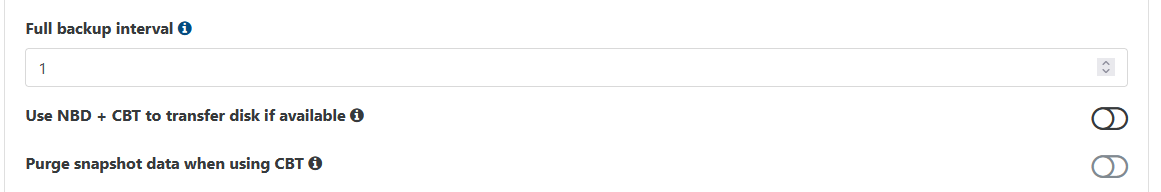

"incorrect backup size in metadata". But still no NBD :(

-

@Tristis-Oris That is already good news.

I pushed an additional fix: the NBD info was not shown in the UI.

-


Well, it was a CPU bottleneck on my side. XO lives in our most stable DC, but also the oldest one.

- Intel(R) Xeon(R) CPU E5-2690 v4 @ 2.60GHz

flash:

Speed: 151.36 MiB/s

summary: { duration: '3m', cpuUsage: '131%', memoryUsage: '162.19 MiB' }

hdd:

Speed: 152 MiB/s

summary: { duration: '3m', cpuUsage: '201%', memoryUsage: '314.1 MiB' }

- Intel(R) Xeon(R) Gold 5215 CPU @ 2.50GHz

flash:

Speed: 196.78 MiB/s

summary: { duration: '3m', cpuUsage: '129%', memoryUsage: '170.8 MiB' }

hdd:

Speed: 184.72 MiB/s

summary: { duration: '3m', cpuUsage: '198%', memoryUsage: '321.06 MiB' }

- Intel(R) Xeon(R) Platinum 8260 CPU @ 2.40GHz

flash:

Speed: 222.32 MiB/s

Speed: 220 MiB/s

summary: { duration: '2m', cpuUsage: '155%', memoryUsage: '183.77 MiB' }

hdd:

Speed: 185.63 MiB/s

Speed: 185.21 MiB/s

summary: { duration: '3m', cpuUsage: '196%', memoryUsage: '315.87 MiB' }

Note the high memory usage with hdd (about 315-320 MiB vs 160-185 MiB with flash).

Sometimes I still get errors:

"id": "1744875242122:0", "message": "export", "start": 1744875242122, "status": "success", "tasks": [ { "id": "1744875245258", "message": "transfer", "start": 1744875245258, "status": "success", "end": 1744875430762, "result": { "size": 28489809920 } }, { "id": "1744875432586", "message": "clean-vm", "start": 1744875432586, "status": "success", "warnings": [ { "data": { "path": "/xo-vm-backups/d4950e88-f6aa-dbc1-e6fe-e3c73ebe9904/20250417T073405Z.json", "actual": 28489809920, "expected": 28496828928 }, "message": "cleanVm: incorrect backup size in metadata" }

"id": "1744876967012:0", "message": "export", "start": 1744876967012, "status": "success", "tasks": [ { "id": "1744876970075", "message": "transfer", "start": 1744876970075, "status": "success", "end": 1744877108146, "result": { "size": 28489809920 } }, { "id": "1744877119430", "message": "clean-vm", "start": 1744877119430, "status": "success", "warnings": [ { "data": { "path": "/xo-vm-backups/d4950e88-f6aa-dbc1-e6fe-e3c73ebe9904/20250417T080250Z.json", "actual": 28489809920, "expected": 28496828928 }, "message": "cleanVm: incorrect backup size in metadata" }

-
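Both `clean-vm` warnings in these task logs compare an `actual` and an `expected` size. As a quick way to read such excerpts, here is a minimal Python sketch that, assuming the task log has been parsed into a dict shaped like the excerpt, computes the average throughput of the `transfer` subtask from its `start`/`end` millisecond timestamps, and the byte gap reported by the size warning. The helper names are hypothetical (not part of Xen Orchestra), and this throughput is not necessarily computed the same way as the speed XO displays.

```python
# Hypothetical helpers for reading an XO backup task log excerpt.
# The dict layout mirrors the excerpt; these are NOT Xen Orchestra functions.
MIB = 1024 ** 2


def transfer_speed_mib_s(transfer_task: dict) -> float:
    """Average speed of a 'transfer' subtask: bytes / elapsed seconds, in MiB/s."""
    elapsed_s = (transfer_task["end"] - transfer_task["start"]) / 1000  # timestamps are in ms
    return transfer_task["result"]["size"] / elapsed_s / MIB


def metadata_size_gap(clean_vm_task: dict) -> list[int]:
    """expected - actual, in bytes, for each 'incorrect backup size' warning."""
    return [
        w["data"]["expected"] - w["data"]["actual"]
        for w in clean_vm_task.get("warnings", [])
        if w["message"] == "cleanVm: incorrect backup size in metadata"
    ]


# Values copied from the first log excerpt (ids and path omitted for brevity).
export_task = {
    "tasks": [
        {"message": "transfer", "start": 1744875245258, "end": 1744875430762,
         "result": {"size": 28489809920}},
        {"message": "clean-vm", "warnings": [
            {"data": {"actual": 28489809920, "expected": 28496828928},
             "message": "cleanVm: incorrect backup size in metadata"}]},
    ]
}

transfer, clean_vm = export_task["tasks"]
print(f"transfer speed: {transfer_speed_mib_s(transfer):.2f} MiB/s")  # transfer speed: 146.47 MiB/s
for gap in metadata_size_gap(clean_vm):
    print(f"expected exceeds actual by {gap} bytes ({gap / MIB:.2f} MiB)")  # 7019008 bytes (6.69 MiB)
```

With the second excerpt's timestamps (`start` 1744876970075, `end` 1744877108146) the same function gives about 196.78 MiB/s. In both excerpts, `expected` exceeds `actual` by the same 7019008 bytes.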

I tried to move the tests to another VM, but again I can't build it with the same commands :(

yarn start yarn run v1.22.22 $ node dist/cli.mjs node:internal/modules/esm/resolve:275 throw new ERR_MODULE_NOT_FOUND( ^ Error [ERR_MODULE_NOT_FOUND]: Cannot find module '/opt/xen-orchestra/@xen-orchestra/xapi/disks/XapiProgress.mjs' imported from /opt/xen-orchestra/@xen-orchestra/xapi/disks/Xapi.mjs at finalizeResolution (node:internal/modules/esm/resolve:275:11) at moduleResolve (node:internal/modules/esm/resolve:860:10) at defaultResolve (node:internal/modules/esm/resolve:984:11) at ModuleLoader.defaultResolve (node:internal/modules/esm/loader:685:12) at #cachedDefaultResolve (node:internal/modules/esm/loader:634:25) at ModuleLoader.resolve (node:internal/modules/esm/loader:617:38) at ModuleLoader.getModuleJobForImport (node:internal/modules/esm/loader:273:38) at ModuleJob._link (node:internal/modules/esm/module_job:135:49) { code: 'ERR_MODULE_NOT_FOUND', url: 'file:///opt/xen-orchestra/@xen-orchestra/xapi/disks/XapiProgress.mjs' } Node.js v22.14.0 error Command failed with exit code 1. info Visit https://yarnpkg.com/en/docs/cli/run for documentation about this command.

-

@Tristis-Oris Thanks, I missed a file.

I pushed it just now.

-

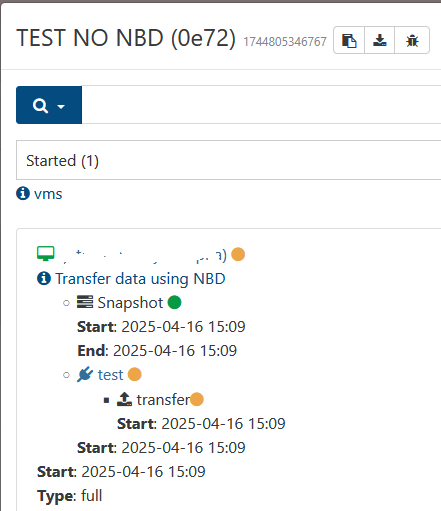

@florent I finally got the new code running and tested a Delta Backup (full first run) with NBD x3 enabled, and it leaves "NBD transfer (on xcp1) 99%" connected after a run. The backup does complete, but the task is stuck.

-

@Andrew Nice catch, Andrew. I will look into it.

Is it keeping a disk attached to dom0? (in Dashboard -> Health)

-

@florent No. The dashboard health is clean.

No VDIs attached to control domain

-

So that is probably only an off-by-one error in the task code.
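Purely to illustrate how an off-by-one can leave a task at 99% even though every block was written, here is a hypothetical Python sketch. It is not the actual XO task code; `percent`, `run_transfer`, and the "close the task at 100%" rule are assumptions made for the example.

```python
# Hypothetical illustration only: NOT the Xen Orchestra task code.
# Assumption: a task is closed only once its reported progress reaches 100%.


def percent(done: int, total: int) -> int:
    """Integer progress percentage (floored)."""
    return 100 * done // total


def run_transfer(total_blocks: int, report_before_count: bool) -> tuple[int, bool]:
    done = 0
    last_reported = 0
    for _ in range(total_blocks):
        if report_before_count:
            # Off by one: progress is reported before the current block is
            # counted, so the final update never includes the last block.
            last_reported = percent(done, total_blocks)
        done += 1  # block written
        if not report_before_count:
            last_reported = percent(done, total_blocks)
    task_closed = last_reported == 100
    return last_reported, task_closed


print(run_transfer(1000, report_before_count=True))   # (99, False): data written, task stuck at 99%
print(run_transfer(1000, report_before_count=False))  # (100, True)
```

In the buggy variant all 1000 blocks are written, yet the last progress update is computed from 999 blocks, which matches the symptom of a completed backup with a task hanging at 99%.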

Thanks, Andrew.

-

@florent code is now merged into master

-


@florent I updated my homelab XO instance this morning to try this out, since it's been merged.

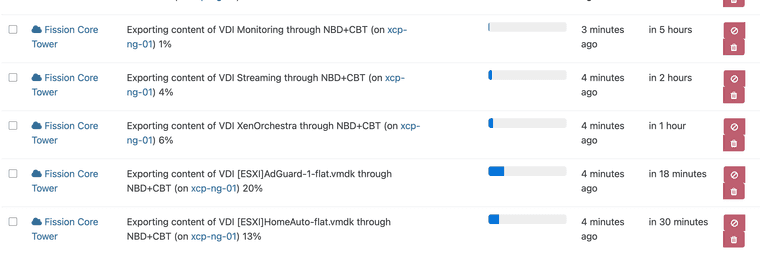

It seems like concurrency has changed when the field is left empty? It used to default to 2, but now I see it trying to back up every VM in the job at once? I don't think this is the case anymore; it's just that the completed tasks do not update their progress and clear properly. Under the "Backups" tab in XO the backup says successful; however, none of the concurrent backup tasks have completed under Tasks.

Looking at the backup "Remote" storage, I see it still appears to be writing data, so perhaps the job is not actually complete as XO states. Update: this was the merge operation happening in the background. As it turns out, the job was actually complete, but the tasks were not clearing.

-

@florent The backup did seem to run; however, the tasks never cleared. I restarted the toolstack on both hosts, which cleared the tasks.

I set the job concurrency to 2 manually and ran it again. Once again, the task list filled with all the VMs from the job at once. The job seems to process and succeed, but the tasks never clear, and you can't really tell what's happening since the task window becomes cluttered with VMs from the job, each at a random progress state that never seems to clear on its own. I think I'll have to roll back to a previous release for now. Hopefully the plan isn't to push this to the XOA appliance just yet!

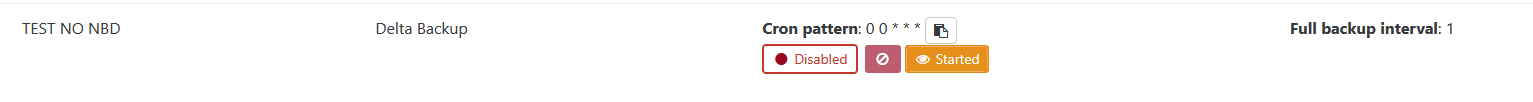

Stuck tasks:

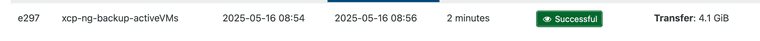

Yet the job completed successfully: