10Gb backup only managing about 80MB/s

-

@utopianfish Or look for deals in places like amazon.com or bestbuy.com or even Ebay.com.

-

@nikade Did the same. VLANs are great! We did use separate NICs for iSCSI storage, but the PMI and VM traffic was handled easily by the dual 10 Gb NICs, even with several hundred XenDesktop VMs hosted among three servers (typically around 8- VMs per server).

-

@tjkreidl I have the same results as you: ~80 MB/s for backups over a 2x10Gb network bond.

The only way I managed to get over this 'limit' is by using XO proxies on another VLAN on this SAME bond.

Don't ask me why, but bandwidth tests (when you click TEST on a remote) tripled compared to the remote on the same VLAN as the XOA. Isolating backup networks is already a best practice, but it also seems to bring more bandwidth? Strange.

My backups can now reach 100-110 MB/s (yeah, only the test tripled...)

-

@Pilow I think what you are seeing is the result of tapdisk's single-threaded nature (among other things related to the master's Dom0). I would suggest raising concurrency to 4 or 8 and checking whether the speed AT THE PORT is higher during backups. You may still see <80 MiB/s PER VM being backed up, maybe less, but you may end up with 4 or 8 VMs backing up at 40 to 60 MiB/s each, which adds up to a much higher total bandwidth.
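To make the concurrency argument concrete, here is a rough Python model: total throughput at the port is roughly the number of concurrent VM backups times the per-VM speed, capped by what the link can carry. The 40 to 60 MiB/s per-VM figures come from the post; the linear model and the 10 Gb/s cap are assumptions, and the function names are purely illustrative.

```python
# Rough model (assumption): aggregate backup throughput grows linearly with
# concurrency until it hits the link capacity. Real backups also contend on
# Dom0 CPU, tapdisk, the SR and the remote, which this sketch ignores.

BITS_PER_MIB = 8 * 1024 * 1024  # one MiB expressed in bits


def link_capacity_mib_s(link_gbit_s: float) -> float:
    """Convert a link speed in Gb/s (decimal) to MiB/s."""
    return link_gbit_s * 1e9 / BITS_PER_MIB


def aggregate_mib_s(concurrency: int, per_vm_mib_s: float, link_gbit_s: float = 10.0) -> float:
    """Estimated total MiB/s at the port for `concurrency` parallel VM backups."""
    return min(concurrency * per_vm_mib_s, link_capacity_mib_s(link_gbit_s))


if __name__ == "__main__":
    for concurrency in (1, 4, 8):
        low = aggregate_mib_s(concurrency, 40)
        high = aggregate_mib_s(concurrency, 60)
        print(f"concurrency={concurrency}: {low:.0f} to {high:.0f} MiB/s")
```

With 8 VMs at 40 to 60 MiB/s each, this gives 320 to 480 MiB/s, still well under the roughly 1192 MiB/s a single 10 Gb/s link can carry.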

Also note: your 61.63 MiB/s (note the capital B, for mebibytes per second) is ≈ 517 Mb/s of network speed (megabits per second).
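The unit conversion above can be checked with a small Python helper: MiB uses a binary prefix (1 MiB = 1,048,576 bytes) while network speeds use decimal megabits (1 Mb = 1,000,000 bits). The function name is only illustrative.

```python
BYTES_PER_MIB = 1024 * 1024  # binary prefix: mebibyte
BITS_PER_MBIT = 1_000_000    # decimal prefix: megabit (network speeds)


def mib_s_to_mbit_s(mib_per_s: float) -> float:
    """Convert a throughput in MiB/s (as shown in XO) to Mb/s (network)."""
    return mib_per_s * BYTES_PER_MIB * 8 / BITS_PER_MBIT


if __name__ == "__main__":
    print(f"{mib_s_to_mbit_s(61.63):.2f} Mb/s")  # prints "516.99 Mb/s"
```

In other words, 1 MiB/s is 8.388608 Mb/s, so 61.63 MiB/s comes out at about 517 Mb/s.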

-

Okay, I'll raise the concurrency. The backup runs in 35 minutes; I'll report back.

-

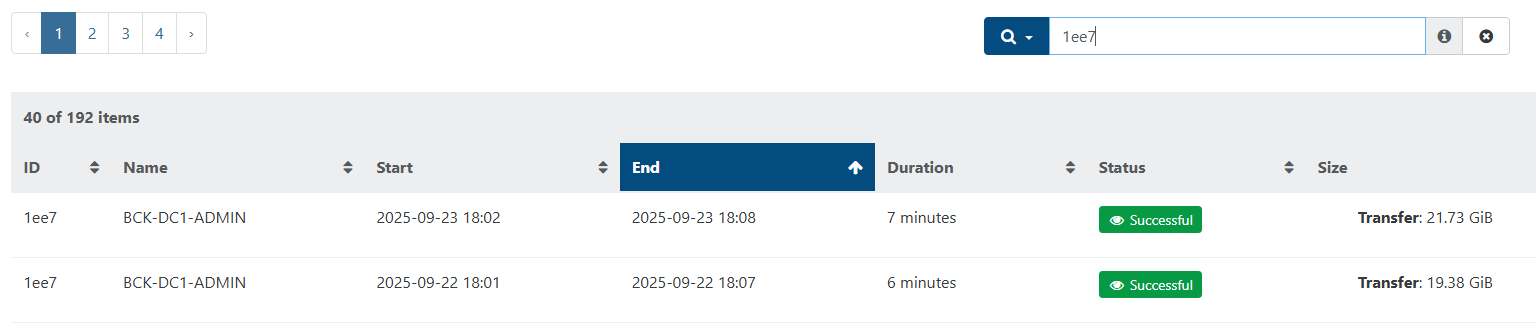

Results after raising concurrency from 3 (yesterday) to 6 (today).

Backup of 9 VMs, processed by XOA (a delta, not a full).

XOA has 4 vCPUs and 8 GB RAM (tuned in the systemd service to give 7 GB to xo-server and 1 GB to Debian).

The remote is an S3 remote on the same LAN (10 Gb/s), backed by 25 Gb/s iSCSI storage.

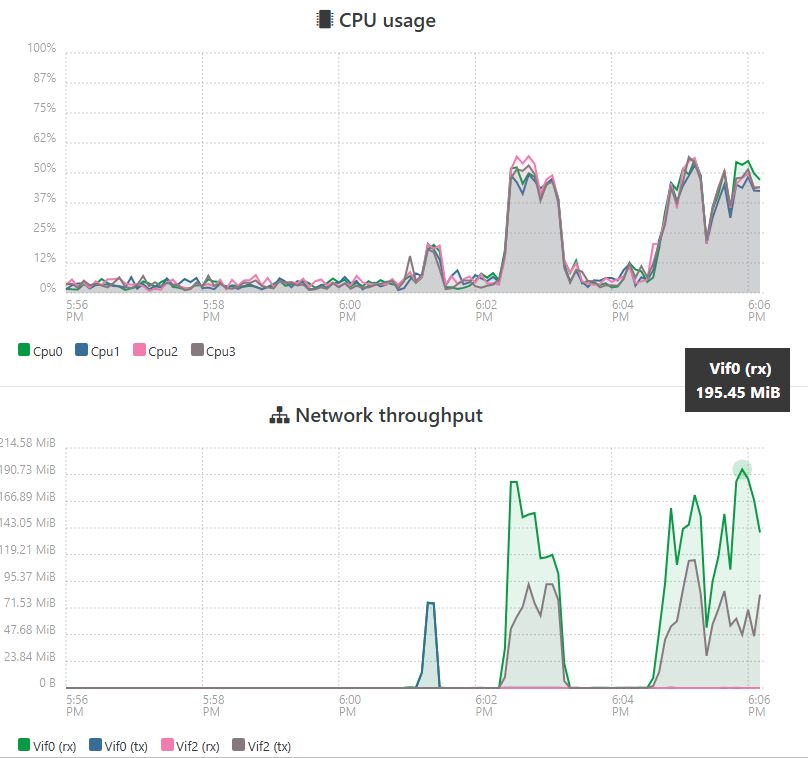

On XOA, I can see an RX peak of 195.45 MiB/s on VIF0, while it transmits to the S3 remote on VIF2 at a lower bandwidth.

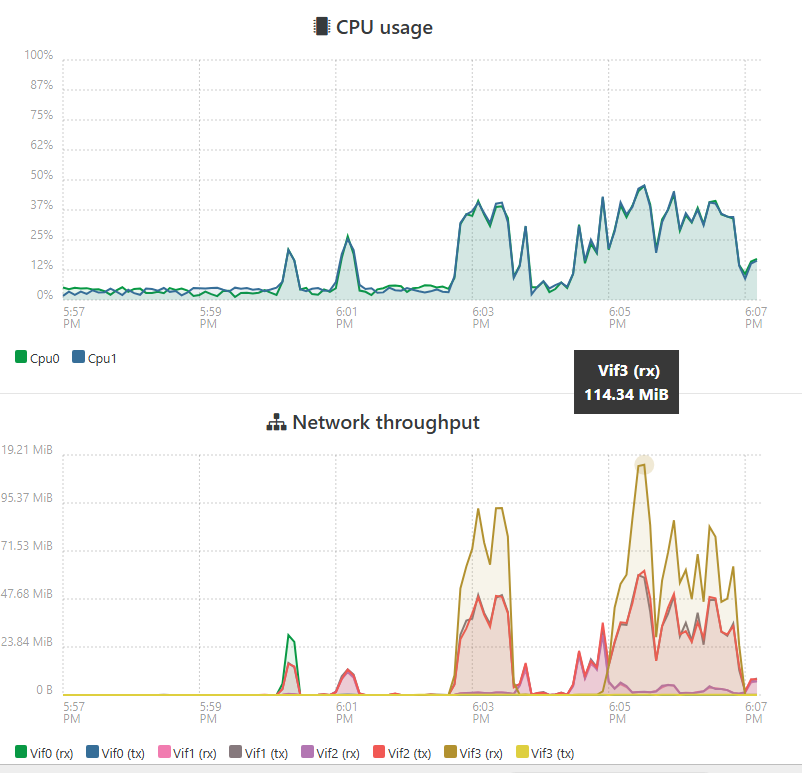

Simultaneously, on the S3 remote, I can see incoming traffic peaking at 114.34 MiB/s on VIF3 (LAN), with the same speed going out over iSCSI (2 active paths: the 2 TX lines each carry half of the traffic, so you need to add them together).

Per-VM speed in the job report ranges from 37.34 MiB/s to 143.09 MiB/s.
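As a sketch of the multipath point above: with 2 active iSCSI paths, each TX graph shows only part of the traffic, so the per-path samples have to be added before comparing them with the LAN RX. The per-path values below are hypothetical, assuming an even half/half split of the 114.34 MiB/s peak from the post; the helper names are illustrative only.

```python
def total_multipath_tx(per_path_mib_s: list[float]) -> float:
    """Sum per-path TX rates: with multipathing, each path carries a share."""
    return sum(per_path_mib_s)


def link_utilization(mib_per_s: float, link_gbit_s: float) -> float:
    """Fraction of a link (in decimal Gb/s) used by a throughput in MiB/s."""
    return mib_per_s * 1024 * 1024 * 8 / (link_gbit_s * 1e9)


if __name__ == "__main__":
    lan_rx = 114.34  # MiB/s, peak on VIF3 from the post
    paths = [57.17, 57.17]  # hypothetical even split across 2 iSCSI paths
    iscsi_tx = total_multipath_tx(paths)
    print(f"iSCSI TX total: {iscsi_tx:.2f} MiB/s (LAN RX: {lan_rx} MiB/s)")
    print(f"LAN utilization on 10 Gb/s: {link_utilization(lan_rx, 10):.1%}")
```

So the ~114 MiB/s peak corresponds to roughly 9.6% of a 10 Gb/s link.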

-

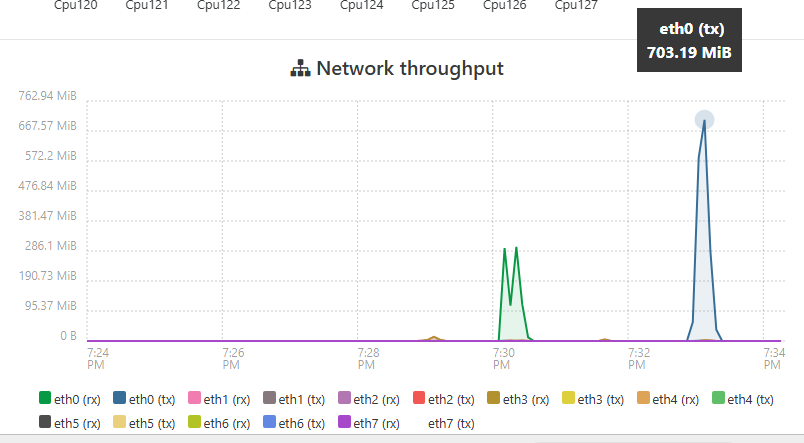

The 10Gb card can really be used to its full potential in XCP.

This graph shows a live migration of 4 VMs from one host to another, using the same VLAN on the same BOND as the backup transfers.

Why can't we get these speeds for backups?

-

Because you are comparing apples and carrots. Live migrating a VM moves RAM between hosts; it does not move any data blocks stored on a storage repository (SR) or backup repository (BR). There are MANY more layers involved with blocks. Try live migrating a VM together with its storage and you'll see you land in the same ballpark as VM backup speed.

-

Indeed.

What should we expect with SMAPIv3/QCOW2?

I won't take it for granted, but could we get out of the current ballpark?

-

Impossible to tell yet; more in a few months.