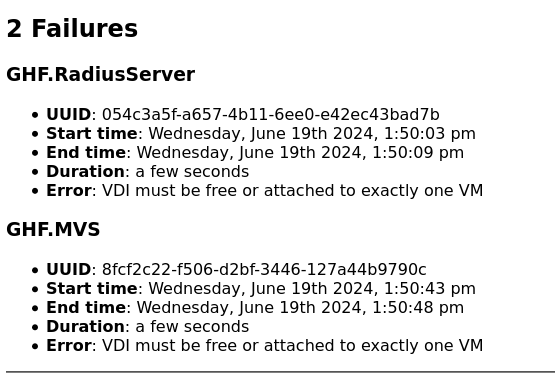

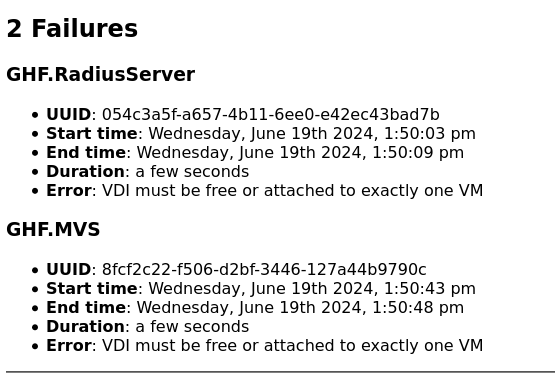

Backups started failing: `Error: VDI must be free or attached to exactly one VM`
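One plausible reading of this error is a precondition on each disk the backup touches: a VDI either has no VBDs at all, or every VBD that references it belongs to the same VM. In XAPI the control domain (dom0) is itself a VM record, so a disk left plugged into dom0 while still belonging to a guest would count as two VMs. The sketch below models that rule with a hypothetical `Vdi` class and `check_vdi_usable` function; it is an illustration under that assumption, not Xen Orchestra's actual check.

```python
from dataclasses import dataclass, field


@dataclass
class Vdi:
    """Hypothetical, simplified VDI: its uuid plus the VM of each VBD using it."""

    uuid: str
    # One entry per VBD, so the same VM may appear more than once.
    vbd_vm_uuids: list[str] = field(default_factory=list)


def check_vdi_usable(vdi: Vdi) -> None:
    """Raise ValueError unless the VDI is free or attached to exactly one VM."""
    vms = set(vdi.vbd_vm_uuids)
    # No VMs means the VDI is free; one VM is fine; more is the error case.
    if len(vms) > 1:
        raise ValueError(
            f"VDI {vdi.uuid} must be free or attached to exactly one VM "
            f"(attached to {len(vms)}: {sorted(vms)})"
        )
```

For example, `check_vdi_usable(Vdi("disk-1", ["vm-guest", "vm-dom0"]))` raises, while a VDI with no VBDs or with two VBDs on the same VM passes.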

-

@manilx This could be related to a recent change in the source code. Checking in with the dev team now and will let you know what I find.

-

@Danp Next delta failed again.

Trying to restore previous XO backup....

-

@manilx Restored Xen Orchestra, commit 31461

Master, commit c134b. Backups fine.

-

@manilx Hi, manilx

After a working backup, do you have any disks attached to dom0? (Dashboard => Health)

-

@florent Hi,

I reverted to the previous VM and deleted the new one..... so I can't check.

BUT I checked Dashboard => Health before, and there was nothing wrong.

-

Hi guys, just chiming in that I am seeing the same problem.

-

Oh... just a side note here: I went into the backup history and "restarted" the backups on the failed VMs, and both VMs backed up successfully. Huh...

-

@Anonabhar That's because this is now an immediate error for something that was previously under the radar and was (mostly) recovering by itself.

But when it fails, it's not great (no VDI coalesce for months to work with).

We'll try to find a good balance between ensuring backups run correctly and detecting problems early, by the end of the month.

-

@Anonabhar I did restart the failed backups and they always errored out again....

-

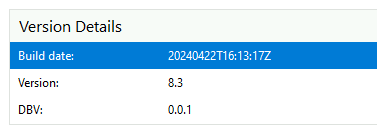

@florent Just installed latest build. Same errors.

Dashboard/Health: no errors. Reverting.....

-

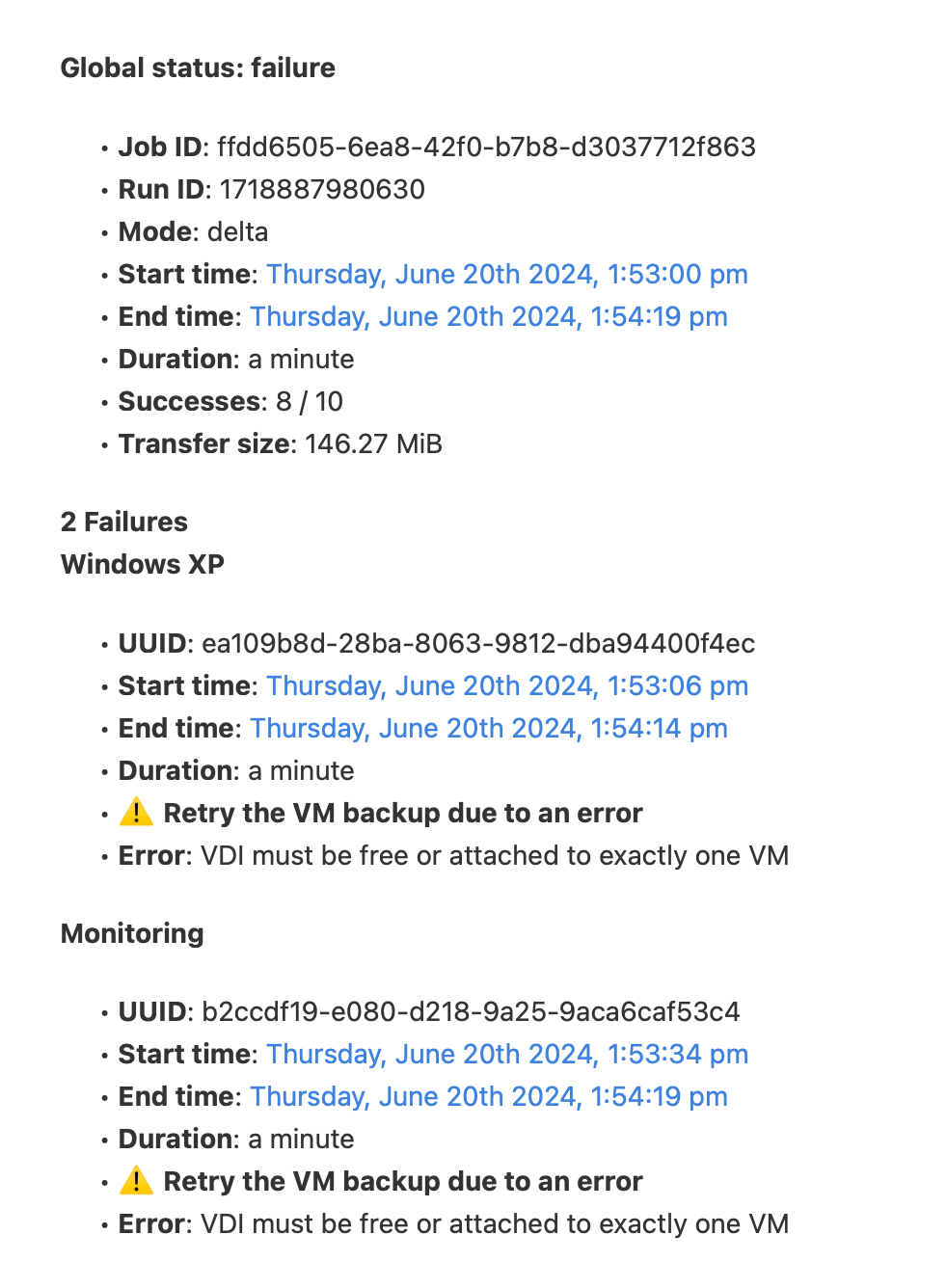

@florent I just upgraded to the latest build again as a test. Since there's no news here, I was hoping an update would fix this.

Anyway, I tested a backup and got the error again:

2 out of 10 VMs failed.

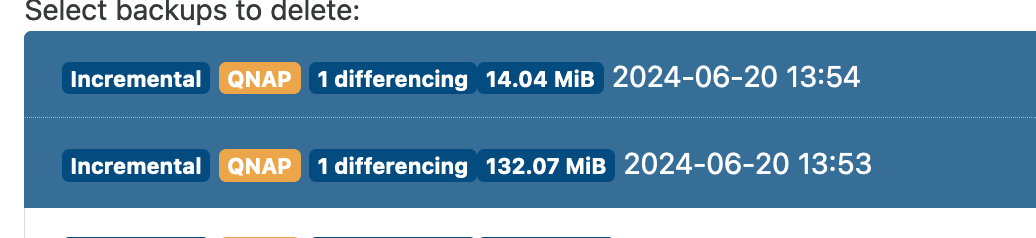

But I found another clue: when trying to delete the backups, I see TWO backups listed for those failed VMs instead of the single one I started!!!

Again: Xen Orchestra commit 31461, Master commit 16ae1 is the last working build.
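The duplicate-entry symptom can be checked mechanically once backup entries are grouped per run. This sketch assumes a hypothetical list of dicts with `vm` and `run` keys (an illustrative shape, not XO's real backup metadata format) and reports the VMs that appear more than once in a single run, where only one backup was started.

```python
from collections import Counter


def vms_with_duplicate_backups(entries: list[dict], run_id: str) -> dict[str, int]:
    """Return {vm: count} for VMs listed more than once in the given run."""
    # Count entries per VM, restricted to the run being inspected.
    counts = Counter(e["vm"] for e in entries if e["run"] == run_id)
    # Keep only VMs that got more than the single expected backup.
    return {vm: n for vm, n in counts.items() if n > 1}
```

Entries from other runs are ignored, so an older backup of the same VM is not reported as a duplicate.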

-


-

@florent @manilx @olivierlambert I have these failures too. I find the VDI attached to the control domain as a leftover from Continuous Replication. If I remove the dead VDI, backups work again. The problem is ongoing, as I do CR every hour and sometimes it leaves one or more (random) dead VDIs attached to the control domain.

I'm waiting for florent to push an XO CR fix before I update to the latest XO. But I don't expect the problem to be resolved in the latest XO update.
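To look for the kind of leftover described above, a sketch like the following scans VBD records for disks currently attached to a control-domain VM. It assumes the records have already been fetched into plain dicts keyed by opaque reference; the field names (`VM`, `VDI`, `currently_attached`, `is_control_domain`) mirror XAPI's VBD and VM fields, but the function and sample data are hypothetical. It only reports; it does not detach anything.

```python
# XAPI uses this reference for "no object", e.g. an empty CD drive's VBD.
NULL_REF = "OpaqueRef:NULL"


def vdis_attached_to_dom0(vms: dict, vbds: dict) -> list[str]:
    """Return sorted refs of VDIs currently plugged into a control-domain VM."""
    leftovers = []
    for vbd in vbds.values():
        vm = vms.get(vbd["VM"], {})
        if (
            vm.get("is_control_domain")
            and vbd["currently_attached"]
            and vbd["VDI"] != NULL_REF
        ):
            leftovers.append(vbd["VDI"])
    return sorted(leftovers)
```

In XO, Dashboard => Health is where such attachments are surfaced; the sketch just shows what that check looks for.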

-

@Andrew I'm only doing Delta backups here, and no dead VDI is left attached...

Something seriously broke.....

-

@manilx They get stuck for me on Delta Backups too (not just CR).

Sometimes I have to do a Restart Toolstack to free it.

Check for running tasks that are stuck. Or try a Restart Toolstack on the master (it normally does not hurt anything to do that just to try it).
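Checking for stuck tasks amounts to looking for tasks that stay pending far longer than expected. This sketch assumes task records as dicts with `name_label`, `status` and `created` fields (the names mirror XAPI's task fields, with `created` already converted to a Python `datetime`); the function name and the threshold are illustrative choices, not values from XO or XAPI.

```python
from datetime import datetime, timedelta


def stuck_tasks(tasks: dict, now: datetime, max_age: timedelta) -> list[str]:
    """Return sorted name_labels of tasks still pending after max_age."""
    # `now` and each task's `created` must both be naive or both tz-aware,
    # otherwise the subtraction raises TypeError.
    return sorted(
        task["name_label"]
        for task in tasks.values()
        if task["status"] == "pending" and now - task["created"] > max_age
    )
```

For example, `stuck_tasks(tasks, datetime.now(), timedelta(minutes=30))` lists tasks pending for more than half an hour; what counts as "stuck" depends on how long your exports normally take.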

-


-

Hi,

We added 2 fixes in the latest commit on master. Please restart your XAPI (to clear all tasks) and try again with your updated XO.

-

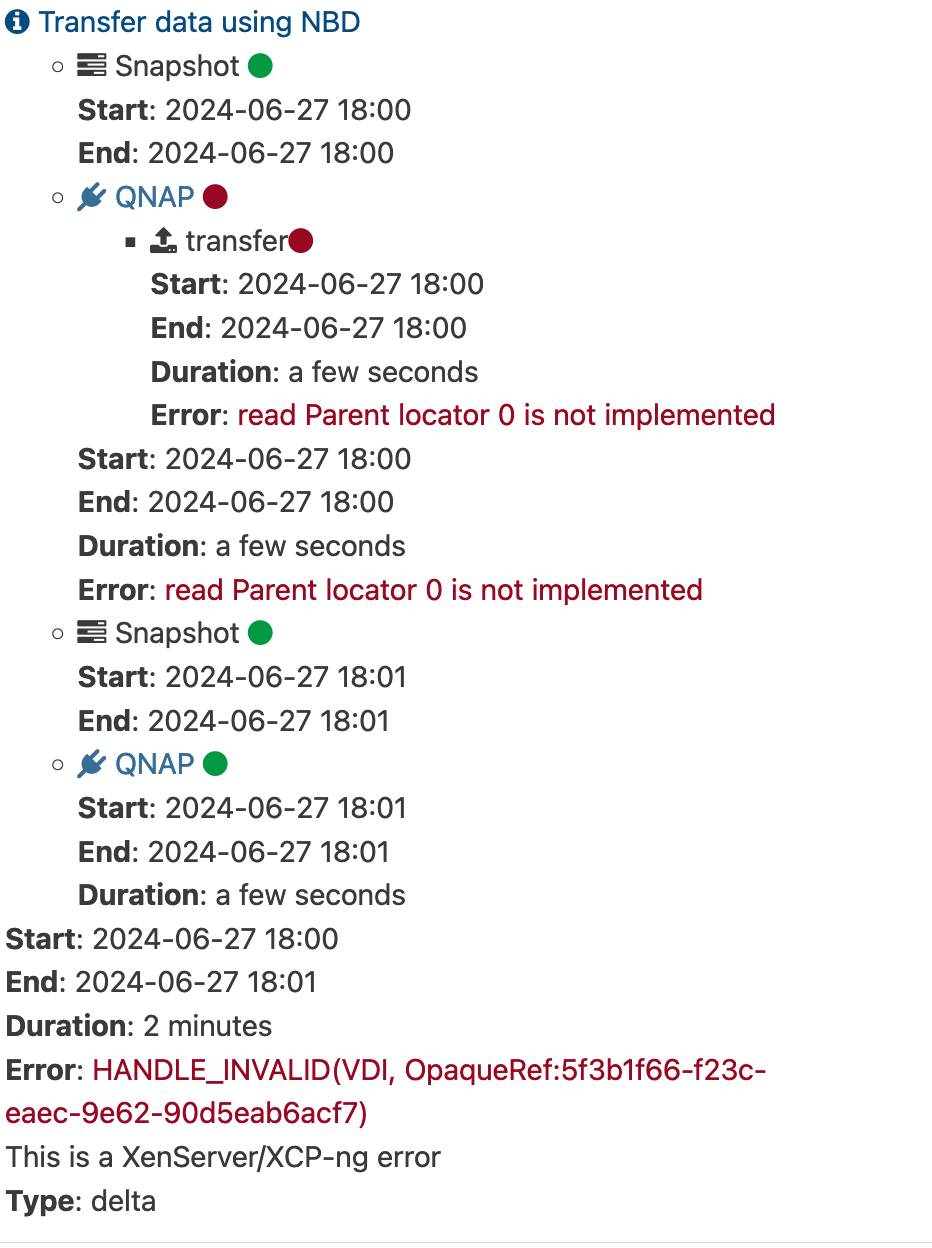

@olivierlambert Updated, and backups are still broken:

Rolling back

-

Can you try without any previous snapshot?

-

@olivierlambert I reverted to previous version and backups OK.

Then I updated again, deleted all snapshots (had to wait a while for garbage collection) and ran fresh backups:

1st full: backup progress bars not updating, staying at 0% (I can only check progress by seeing data written to the NAS). Backup finished OK using NBD.

Next delta: also ran OK, without progress, and all VMs showed `cleanVm: incorrect backup size in metadata`.

So, some progress

progress bars are really missing now


Speed is still saturating the 2.5G management interface, so that's good.

-

@olivierlambert P.S. After the delta backup, all VMs have "Unhealthy VDIs" waiting to coalesce, but no Garbage Collection job is running.....

Running `cat /var/log/SMlog | grep -i coalesce` on the host gives:

Jun 27 18:48:03 vp6670 SM: [1043898] ['/usr/sbin/cbt-util', 'coalesce', '-p', '/var/run/sr-mount/ea3c92b7-0e82-5726-502b-482b40a8b097/c892688d-10b6-4ddd-938c-fb4f2bc3eb77.cbtlog', '-c', '/var/run/sr-mount/ea3c92b7-0e82-5726-502b-482b40a8b097/6f6389a1-9387-4fdd-8d4c-97cb1cf459bc.cbtlog']
Jun 27 18:48:08 vp6670 SM: [1044409] ['/usr/sbin/cbt-util', 'coalesce', '-p', '/var/run/sr-mount/ea3c92b7-0e82-5726-502b-482b40a8b097/af7a9b25-b1a4-40c0-9d48-21e1f0a9e4b4.cbtlog', '-c', '/var/run/sr-mount/ea3c92b7-0e82-5726-502b-482b40a8b097/35b88709-1e17-4bde-8799-0c8b4c879868.cbtlog']
Jun 27 18:48:22 vp6670 SM: [1045338] ['/usr/sbin/cbt-util', 'coalesce', '-p', '/var/run/sr-mount/ea3c92b7-0e82-5726-502b-482b40a8b097/0fea5975-e83a-4c30-89ea-c38b8c8ccb23.cbtlog', '-c', '/var/run/sr-mount/ea3c92b7-0e82-5726-502b-482b40a8b097/8893a898-2c21-4325-a7ee-882b3e5b9223.cbtlog']
Jun 27 18:48:25 vp6670 SM: [1045614] ['/usr/sbin/cbt-util', 'coalesce', '-p', '/var/run/sr-mount/ea3c92b7-0e82-5726-502b-482b40a8b097/99ff967e-fbd3-4b86-a76a-37879a08ef56.cbtlog', '-c', '/var/run/sr-mount/ea3c92b7-0e82-5726-502b-482b40a8b097/fcbee773-7147-4f80-9eca-48383c73fd81.cbtlog']
Jun 27 18:48:29 vp6670 SM: [1046130] ['/usr/sbin/cbt-util', 'coalesce', '-p', '/var/run/sr-mount/ea3c92b7-0e82-5726-502b-482b40a8b097/3b268ed5-7856-4948-bd27-bcc71b33ce66.cbtlog', '-c', '/var/run/sr-mount/ea3c92b7-0e82-5726-502b-482b40a8b097/19d60fac-c805-4c6c-8f02-819dd68a4407.cbtlog']
Jun 27 18:48:34 vp6670 SM: [1046641] ['/usr/sbin/cbt-util', 'coalesce', '-p', '/var/run/sr-mount/ea3c92b7-0e82-5726-502b-482b40a8b097/b83b7e8f-aede-4a37-92f1-4c867af6e39b.cbtlog', '-c', '/var/run/sr-mount/ea3c92b7-0e82-5726-502b-482b40a8b097/fdfa17b2-c47d-4b0b-93c3-df78653fbb8f.cbtlog']
Jun 27 18:48:39 vp6670 SM: [1047270] ['/usr/sbin/cbt-util', 'coalesce', '-p', '/var/run/sr-mount/ea3c92b7-0e82-5726-502b-482b40a8b097/b49458fc-3047-417e-b9db-8d188e74105e.cbtlog', '-c', '/var/run/sr-mount/ea3c92b7-0e82-5726-502b-482b40a8b097/77a7f093-11a1-4e6c-a502-e8aa07f1fa88.cbtlog']
Jun 27 18:48:41 vp6670 SM: [1047416] ['/usr/sbin/cbt-util', 'coalesce', '-p', '/var/run/sr-mount/ea3c92b7-0e82-5726-502b-482b40a8b097/49911f5d-5da5-48d7-a099-2ad83d2004d5.cbtlog', '-c', '/var/run/sr-mount/ea3c92b7-0e82-5726-502b-482b40a8b097/95395cf6-f234-43a6-a9ad-87a09fb2fa3e.cbtlog']

-

@olivierlambert After 15 min, still nothing. No coalesce, even after rescanning the storage.

So still something broken.

Will revert as I need backups working reliably.......
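The SMlog entries quoted above are all `cbt-util coalesce` calls on `.cbtlog` files. To compare them against the VDIs shown as unhealthy, a small sketch like the following can pull out the UUIDs passed as `-p` and `-c`, assuming the entries keep exactly the format quoted above (it also works when several entries end up on one line, as in the original paste). The function name is hypothetical, and reading `-p`/`-c` as parent/child is an assumption.

```python
import re

# Matches the cbt-util invocation format as it appears in the SMlog excerpt:
# ['/usr/sbin/cbt-util', 'coalesce', '-p', '<path>/<uuid>.cbtlog', '-c', '<path>/<uuid>.cbtlog']
CBT_COALESCE = re.compile(
    r"\['/usr/sbin/cbt-util', 'coalesce', "
    r"'-p', '[^']*/([0-9a-f-]+)\.cbtlog', "
    r"'-c', '[^']*/([0-9a-f-]+)\.cbtlog'\]"
)


def cbt_coalesce_pairs(log_text: str) -> list[tuple[str, str]]:
    """Return (p_uuid, c_uuid) for every cbt-util coalesce call in the text."""
    # findall with two groups yields one tuple per match, in log order.
    return CBT_COALESCE.findall(log_text)
```

On the host this could be fed the whole log, e.g. `cbt_coalesce_pairs(open("/var/log/SMlog").read())`, and the resulting UUIDs checked against the VDIs listed under Unhealthy VDIs.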