CBT: the thread to centralize your feedback

-


Updated to the `fix_cbt` branch.

CR NBD backup works.

Delta NBD backup works (just once, so we can't be sure yet). No broken tasks are generated.

Still confused why the CBT toggle is enabled on some VMs.

Two similar VMs on the same pool, same storage, same Ubuntu version: on one it is enabled automatically, on the other it is not.

-

@florent I did some testing with the `data_destroy` branch in my lab, and it seems to work as intended: the snapshot is hidden when it is CBT-only.

What I am not sure is correct: after the data destroy action, I would expect a snapshot to show up for coalesce, but it does not. Is it so small that it is removed too quickly to be visible in XOA? On larger VMs in our production environment I can see these snapshots showing up for coalesce. Or, when you call `vdi.data_destroy`, does it coalesce directly, without garbage collection afterwards?
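For anyone who wants to try the same step by hand, here is a minimal sketch of a guarded `VDI.data_destroy` call through the XenAPI Python bindings. It assumes an already logged-in `session`; the helper name `destroy_snapshot_data` is hypothetical, and the two precondition checks reflect our understanding of XAPI (the VDI must be a snapshot with CBT enabled), so verify them against the XAPI reference. On success XAPI changes the VDI's type to `cbt_metadata`, which would be consistent with XO hiding CBT-only snapshots. The sketch says nothing about when, or whether, the SR garbage collector coalesces afterwards.

```python
from typing import Any


def destroy_snapshot_data(session: Any, snapshot_ref: str) -> str:
    """Hypothetical helper: drop a snapshot's data but keep its CBT metadata.

    `session` is assumed to be a logged-in XenAPI.Session. The checks below
    are assumed preconditions for VDI.data_destroy (snapshot + CBT enabled).
    Returns the VDI type read back after the call.
    """
    vdi = session.xenapi.VDI
    if not vdi.get_is_a_snapshot(snapshot_ref):
        raise ValueError("data_destroy is meant for snapshot VDIs only")
    if not vdi.get_cbt_enabled(snapshot_ref):
        raise ValueError("CBT must be enabled on the snapshot's VDI")
    vdi.data_destroy(snapshot_ref)
    # XAPI is expected to report 'cbt_metadata' once the data is gone
    return vdi.get_type(snapshot_ref)


# Usage against a real pool (URL, credentials and ref are placeholders):
# import XenAPI
# session = XenAPI.Session("https://pool-master.example")
# session.xenapi.login_with_password("root", "password", "1.0", "cbt-test")
# print(destroy_snapshot_data(session, snapshot_vdi_ref))
```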

-

@florent What happens when we upgrade to this version at the end of July? Right now we use NBD without CBT on most backups. Will they all need to run a full backup, or does the method get 'converted' to CBT? I assume that, since the data destroy checkbox will be disabled by default, not much will change on day one as long as you don't switch on the data destroy option?

-

The transition to CBT should be done smoothly and without any manual intervention. @florent will provide more details on how

-

All tests with 2 VMs have been successful so far; no issues found in our lab. Good job, guys!

-

@olivierlambert How long until this is available for us with precompiled XOA?

-

Tomorrow

-

@olivierlambert sounds good!

-

Things are looking good on my end as well.

-

@olivierlambert Looks like it's back to single-threaded bottlenecks...

I often see a single core at 100% utilization on the XO VM.

-

@Andrew Hi Andrew, I can't reproduce this on my end: all cores are utilized at the same time, at around 30 to 40%, with 2 simultaneous backups.

-

@rtjdamen It happens when Continuous Replication is running. The source, the destination, and the network can all do 10 Gb/s.

I'll have to work on a better set of conditions and tests to replicate the issue.

I know it's slower because the hourly replication used to take 5-6 minutes and now takes 9-10 minutes. It's more of an issue when the transfer is larger than 300 GB.

Just feedback...
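For scale, a line-rate lower bound helps tell whether a job is limited by the 10 Gb/s link or by something else, such as the single-core bottleneck mentioned earlier in the thread. A minimal sketch, assuming decimal units (1 GB = 8 Gb) and ignoring protocol overhead, compression, and storage speed; the function names are illustrative only.

```python
def min_transfer_seconds(size_gb: float, link_gbps: float) -> float:
    """Fastest possible transfer time at full line rate (decimal units)."""
    return size_gb * 8 / link_gbps


def effective_gbps(size_gb: float, seconds: float) -> float:
    """Average throughput actually achieved for a transfer of size_gb."""
    return size_gb * 8 / seconds


# 300 GB over a 10 Gb/s link cannot finish in under 240 s (4 minutes)
print(min_transfer_seconds(300, 10))  # 240.0
# Hypothetical figures: the same 300 GB taking 10 minutes averages 4 Gb/s
print(effective_gbps(300, 10 * 60))  # 4.0
```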

-

@Andrew understood! We do not use that at this time.

-

@olivierlambert Hi Olivier, do you have an ETA?

-

Today

-

@olivierlambert Running current XO master (commit 1ace3) and using Backup to run a full backup to an NFS remote, it now ignores the [NOBAK] tag on a disk.

Also, if you cancel the export task, the backup is still marked as Successful. I never tried that before, so it may have always done that.

-

@Andrew Thanks for your feedback. We are currently investigating this issue, though we are having difficulty reproducing it.

> Also, if you cancel the export task the backup is still marked as Successful. I never tried that before so it may have always done that.

Yes, this is not supported, the task is only here for informational purposes.

-

@Andrew I tried the same on our end: I changed one of the disk names to start with [NOBAK], but the disk is still processed. Not a big deal for us, since we don't use this currently, but I wanted to confirm we see the same behavior.

I also tested with a new VM: created 2 disks, added [NOBAK] in front of the disk name, ran the backup job, and both disks were exported.
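The behavior we expected can be stated as a simple filter. A minimal sketch, assuming (as in our tests) that the marker is matched as a prefix of the disk name; this is not Xen Orchestra's actual code, XO may match `[NOBAK]` elsewhere in the name, and `disks_to_back_up` is a hypothetical name.

```python
from typing import Iterable, List

# Marker that should exclude a disk from backup jobs
NOBAK_TAG = "[NOBAK]"


def disks_to_back_up(disk_names: Iterable[str]) -> List[str]:
    """Return the disk names a backup job is expected to export.

    Assumption: a disk is skipped when its name starts with NOBAK_TAG.
    """
    return [name for name in disk_names if not name.startswith(NOBAK_TAG)]


# Illustrative disk names: only the untagged disk should be exported
print(disks_to_back_up(["[NOBAK] data", "system"]))  # ['system']
```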

@olivierlambert Are you still able to release the update to latest today?

-

@julien-f Updated to Xen Orchestra, commit 1ace3

My delta backups all ran without issues, and coalesces also finished fine.

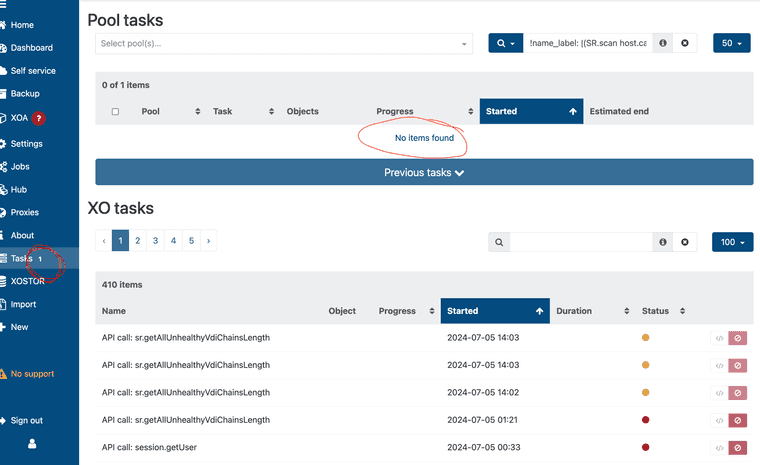

The only thing I notice is that there is always one (or +1) task shown when none is actually running: