@sir_alex_leo

I don't know, but are they of the same type?

    Total Width: 128 bits
    Data Width: 64 bits

    Total Width: 64 bits
    Data Width: 64 bits

@gduperrey

Ran updates on my old hosts (i7 gen4 and Ryzen 5).

Nothing has exploded yet after ~10h of "testing".

@stormi

Did some minor testing and it seems to work fine

@MathieuRA

Yes, I can confirm that if I disable the Secure Boot option, the VM starts.

After a quick flash of the PXE boot screen, the Debian installer appears and I can continue the installation.

The new Debian 13 template is working in XO-Lite.

@ThierryEscande

Ran some tests for 20 min

Seems to work fine

    [18:39 x1 ~]# ethtool -i eth0
    driver: e1000e
    version: 5.10.179
    firmware-version: 0.13-3
    expansion-rom-version:
    bus-info: 0000:00:19.0
    supports-statistics: yes
    supports-test: yes
    supports-eeprom-access: yes
    supports-register-dump: yes
    supports-priv-flags: yes

@stormi

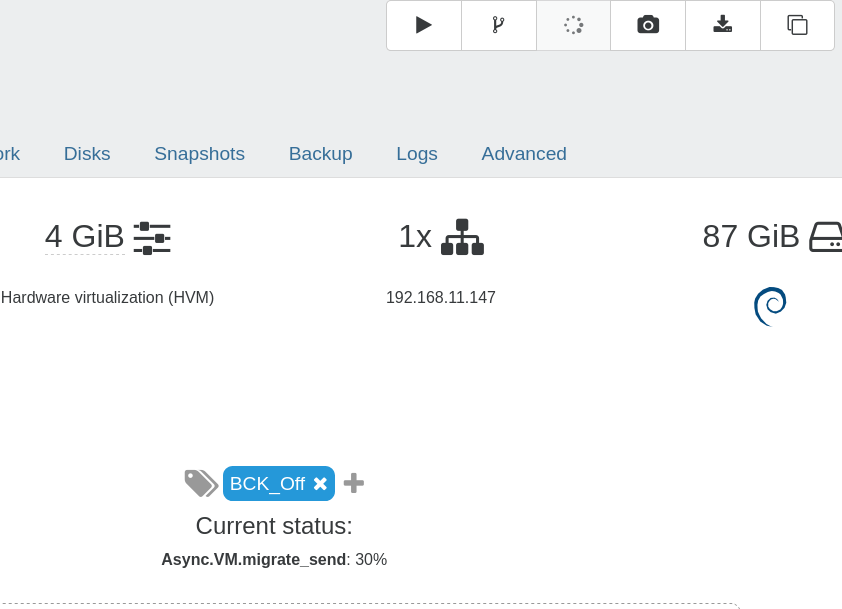

I ran the update from xcp-ng-testing and now the migration seems to work.

@andriy.sultanov

Sorry, if only I could read...

Anyhow, my updated host running on Intel seems to work just fine.

Seems to work fine on my old test rig (i7-4710MQ) with NFS.

I updated my test host and everything seems to work fine.

But I have one question:

Do I need to disable the testing repo, or is it removed at reboot?

    yum clean metadata --enablerepo=xcp-ng-testing
    yum update --enablerepo=xcp-ng-testing
    reboot

    [10:33 x1 ~]# yum repolist
    Loaded plugins: fastestmirror
    Loading mirror speeds from cached hostfile
    Excluding mirror: updates.xcp-ng.org
     * xcp-ng-base: mirrors.xcp-ng.org
    Excluding mirror: updates.xcp-ng.org
     * xcp-ng-updates: mirrors.xcp-ng.org
    repo id          repo name                    status
    xcp-ng-base      XCP-ng Base Repository        4,376
    xcp-ng-updates   XCP-ng Updates Repository       125
    repolist: 4,501

@acebmxer said in VM Pool To Pool Migration over VPN:

Maybe VPN overhead.

Have you checked the VPN capacity specs of your firewalls?

No idea if anyone has "fixed" anything.

No, XO at commit 5fcb6 hung for ~3 min at reboot today.

Edit: I disabled the scheduled reboot yesterday.

@DwightHat

No

I scheduled a reboot of XO every morning, and it seems to have worked, because I "forgot" about it.

No idea if anyone has "fixed" anything.

I'm not running HTTPS, and I'm still on Node v22.

@Gheppy

My links from 6 to 5 work fine.

I haven't tested all of them, of course, but quite a few, and none of them have failed.

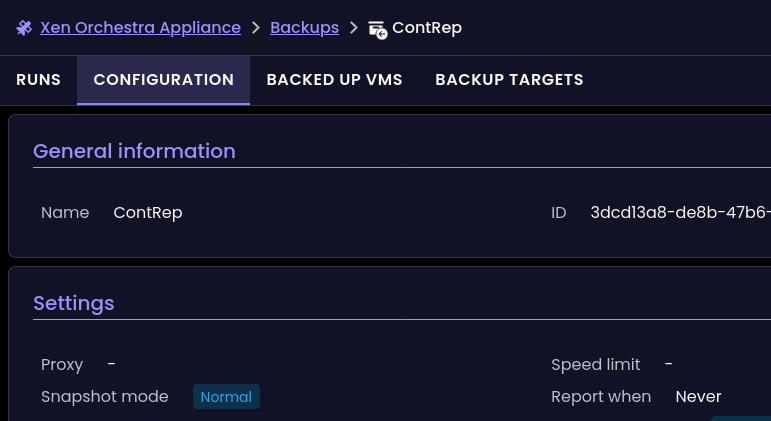

I run these kinds of backup jobs

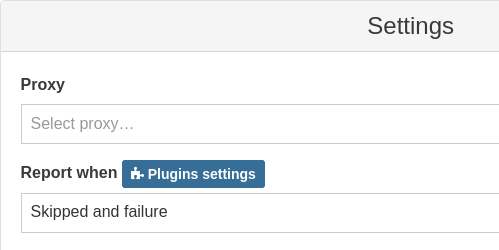

In the ContRep job, the Conf/meta job and one of the Full Backup jobs, I get "Report when: Never".

But they are enabled in XO5 with a mail address and "Report when: Skipped and failure".

I fired up my second XO, which is installed with the ronivay script.

Ran the update to the latest commit, 9f387.

When I logged in, it started on the XO5 page.

My first XO (installed "from the docs") starts in XO6.

Maybe the ronivay script is not up to date with the new "release" of XO6.

If you are running this type of script, maybe that is the culprit.

@probain Well, I've been running commit 035ee for a few days now and haven't had any problems, but I haven't done a lot of work with it.

When I update, I always run a full, somewhat complicated update, since I've had problems before, and with this type of update I've never had any issues.

    sudo su

    nvm install 22 && npm install --global yarn && cd ~/xen-orchestra/ && git checkout . && git pull --ff-only && yarn && yarn build --force && yarn run turbo run build --filter @xen-orchestra/web && systemctl restart xo-server.service && cd && systemctl daemon-reload

Or maybe just a simple Ctrl+F5 in your browser will work.

Commit 035ee in dashboard

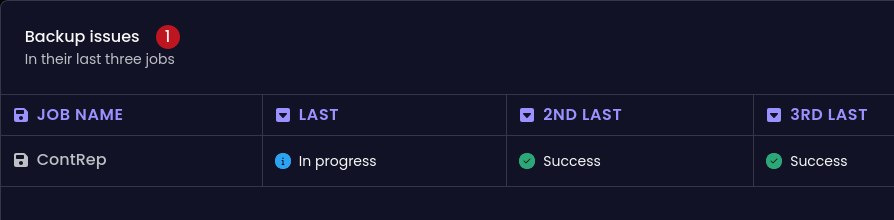

I'm not sure it's a good idea to show a backup issue every time a Cont.Rep. job runs.

For someone who runs Cont.Rep. frequently, it would be more or less a permanent fixture, and as a consequence they won't react when a real alert is displayed.

Are you in UTC+1? This one might simply be because the VM's name shows the UTC time while, in the tables, we convert dates to your actual timezone.

Yes, I'm in CET (UTC+1) and use 24h time.

Yes, the Cont.Rep. VMs show UTC. During summer time, it was 2h off.

It's just cosmetic, not "urgent", but the times are still shown in AM/PM.

These are also still in AM/PM
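As a generic illustration of the UTC-versus-local-time explanation above (not how XO itself formats dates), the sketch below renders two instants with GNU `date`: once in UTC, as in the Cont.Rep. VM names, and once in Central European time in both 24h and AM/PM form. The POSIX `TZ` string `CET-1CEST,M3.5.0,M10.5.0/3` is an assumption chosen so the example works without tzdata files; it encodes UTC+1 in winter and UTC+2 from the last Sunday of March to the last Sunday of October, which is why the gap grows to 2h in summer.

```shell
#!/bin/sh
# Format the same instants in UTC and in Central European time.
# Assumes GNU date (for -d @EPOCH). The TZ rule string below is a
# hand-written stand-in for Europe/* zones: CET (UTC+1), switching to
# CEST (UTC+2) from the last Sunday of March to the last Sunday of October.
export LC_ALL=C   # force English AM/PM markers regardless of system locale
cet='CET-1CEST,M3.5.0,M10.5.0/3'

for ts in 1704067200 1719792000; do   # 2024-01-01 and 2024-07-01, 00:00 UTC
  utc=$(TZ=UTC0 date -d "@$ts" '+%Y-%m-%d %H:%M')
  local24=$(TZ="$cet" date -d "@$ts" '+%Y-%m-%d %H:%M %Z')
  local12=$(TZ="$cet" date -d "@$ts" '+%Y-%m-%d %I:%M %p %Z')
  echo "$utc UTC | $local24 | $local12"
done
```

With GNU coreutils this prints:

    2024-01-01 00:00 UTC | 2024-01-01 01:00 CET | 2024-01-01 01:00 AM CET
    2024-07-01 00:00 UTC | 2024-07-01 02:00 CEST | 2024-07-01 02:00 AM CEST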