Hi,

I’m afraid I’ve encountered the same problem:

- Xen Orchestra, commit 0b52a
- v6

I was thinking more of updating the whole package, agent and drivers together, similar to how XenServer VM Tools for Windows implement it: a scheduled job regularly checks whether an update is available and, if so, the tools update both the agent and the drivers themselves.

Can we look forward to automatic updates arriving in the future as well?
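At its core, the scheduled check described above is a version comparison. Here is a minimal Python sketch. It assumes versions shaped like the agent's `9.0.9065-44`, read here as major.minor.build-revision. `parse_version` and `update_available` are hypothetical names and are not part of XenServer VM Tools or the XCP-ng tools. How the latest available version would be fetched is left out.

```python
from __future__ import annotations

import re

# Assumed format: "major.minor.build-revision", e.g. "9.0.9065-44".
_VERSION_RE = re.compile(r"^(\d+)\.(\d+)\.(\d+)-(\d+)$")


def parse_version(text: str) -> tuple[int, int, int, int]:
    """Hypothetical helper: turn "9.0.9065-44" into (9, 0, 9065, 44)."""
    match = _VERSION_RE.match(text.strip())
    if match is None:
        raise ValueError(f"unrecognised version string: {text!r}")
    return tuple(int(part) for part in match.groups())


def update_available(installed: str, latest: str) -> bool:
    """Hypothetical helper: True if `latest` is strictly newer than `installed`."""
    # Tuples compare element by element, so each component is compared numerically.
    return parse_version(installed) < parse_version(latest)
```

Comparing integer tuples rather than raw strings matters here. As plain strings, `"9.0.9065-9"` would sort after `"9.0.9065-44"`.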

@dinhngtu I would prefer some standardized OS information for Windows systems, similar to this:

`Get-ComputerInfo | Select-Object OsName, OsVersion, OsBuildNumber, OsArchitecture`

| OsName | OsVersion | OsBuildNumber | OsArchitecture |
| ------ | --------- | ------------- | -------------- |
| Microsoft Windows Server 2025 Standard Evaluation | 10.0.26100 | 26100 | 64-bit |
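To make the request more concrete, here is a sketch of the kind of standardized record the agent could report. It is written in Python for illustration only. The `OsInfo` class and its field names are hypothetical. The sketch also assumes that OsVersion has the form major.minor.build, as in the output above, so OsBuildNumber can be derived from it rather than reported separately.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class OsInfo:
    """Hypothetical record mirroring the Get-ComputerInfo columns shown above."""

    name: str          # OsName
    version: str       # OsVersion, e.g. "10.0.26100"
    architecture: str  # OsArchitecture, e.g. "64-bit"

    @property
    def build_number(self) -> int:
        # Assumption: OsVersion is "major.minor.build", so the build is the third component.
        return int(self.version.split(".")[2])

    def summary(self) -> str:
        return f"{self.name} {self.version} (build {self.build_number}, {self.architecture})"
```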

@dinhngtu said in XCP-ng Windows PV tools announcements:

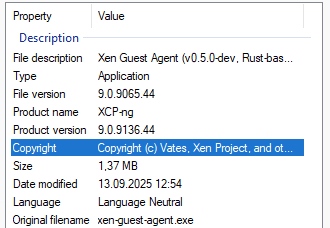

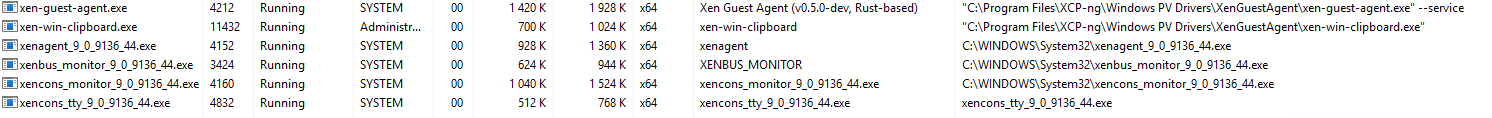

@abudef Got it, the agent was not changed since the last version so the build number was not bumped. I'll keep that in mind for the next release.

Hi, the agent still identifies as version 9.0.9065-44. It would be great if it reported the same version as the drivers, so it would be clear at a glance which version is running.

@kagbasi-ngc Hi, thanks for your thoughts.

I get on well with Linux myself - I’m using XO from source following the documentation, and, as you mentioned, Ronivay’s script makes it all even handier. Still, I can’t help thinking that your average home user, a total amateur, is just going to land on the XCP-ng host homepage and click "Deploy XOA". And once the trial period is over, they have no backup. But sure, if XOA is aimed squarely at business and enterprise users with paid licences, fair enough, that makes perfect sense.

I just feel like backup isn’t really a purely business or enterprise feature, unlike, say, proxy instances or hyper-converged storage. It's something even home users would genuinely benefit from.

But as mentioned above, that’s just how it’s set up - and as you said yourself, everyone gets the chance to learn something new. And sure, in the age of AI chatbots, there’s really no excuse not to manage it.

Hi,

Congratulations on another milestone, XOA 5.108! A massive thanks to all the developers, and I’m really rooting for you - may both Vates and our whole community continue to grow strong.

I’d love to ask someone from Vates how they see the position of XOA compared to the self-compiled version of XO. As most folks know, XOA is incredibly easy and elegant to install on an XCP-ng server - even a complete beginner with no experience can manage it without much bother.

But there is one key limitation in XOA that affects all casual users once the trial period ends: the integrated Backup tool is no longer available. Sure, if you compile XO from source, the Backup functionality is there. But even though we have a detailed guide, getting XO from source up and running can be a tough nut to crack for users with no Linux experience. Even the pre-made scripts (such as XenOrchestraInstallerUpdater), which seem to be the preferred method among the tech-savvy YouTubers promoting XCP-ng/XO(A), still call for at least a bit of Linux know-how.

Now, things like XOSTOR or Proxies - a home user probably won’t need them. But Backup? That’s something that could really be made available in XOA for the everyday user.

What’s your take on that, Vates?

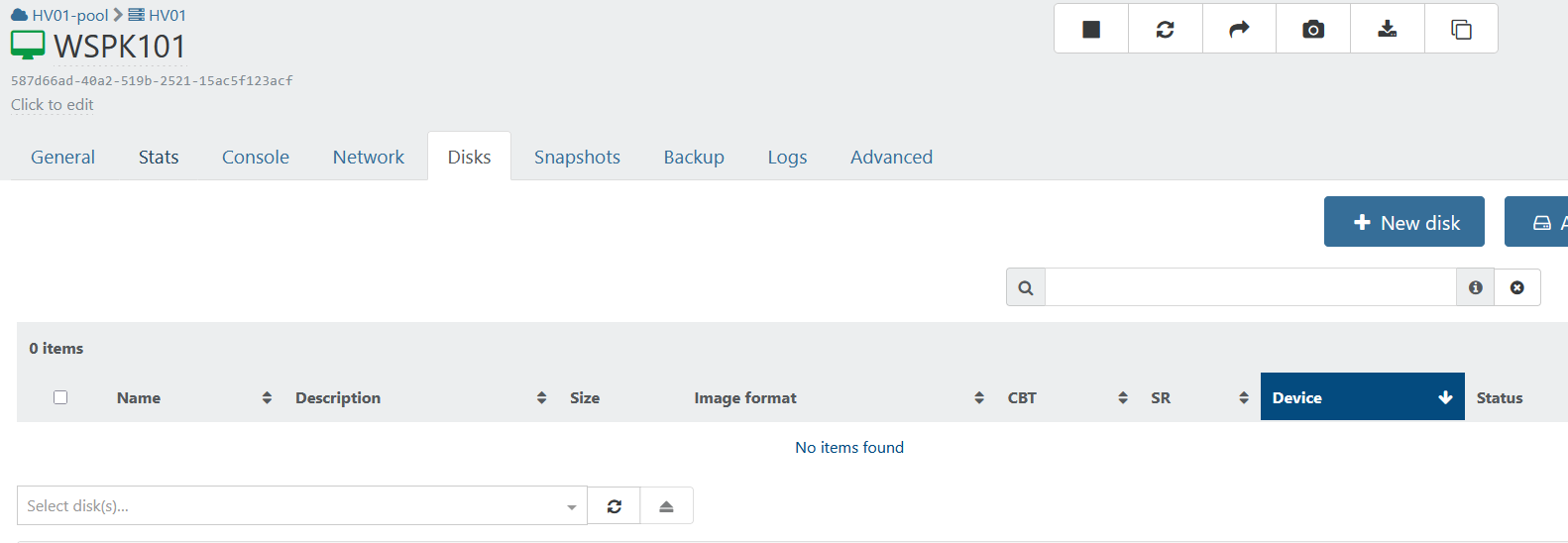

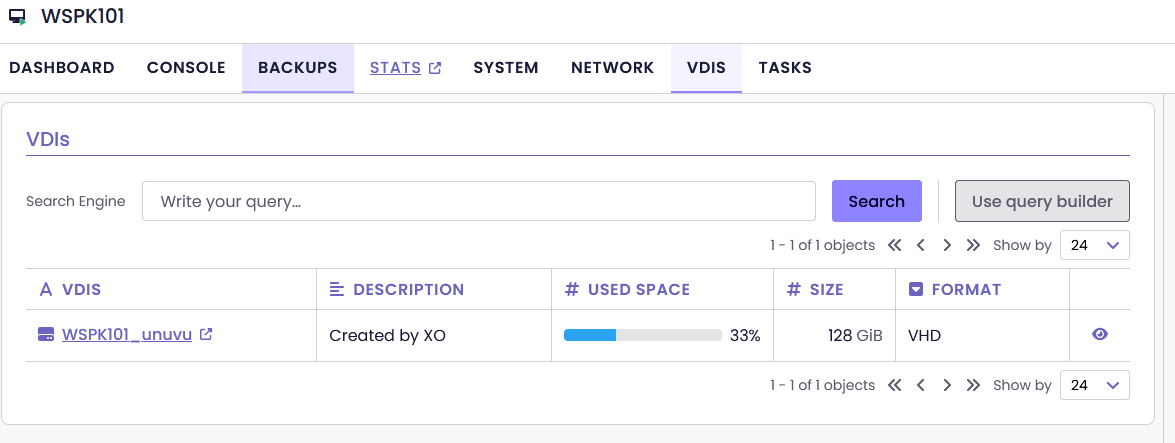

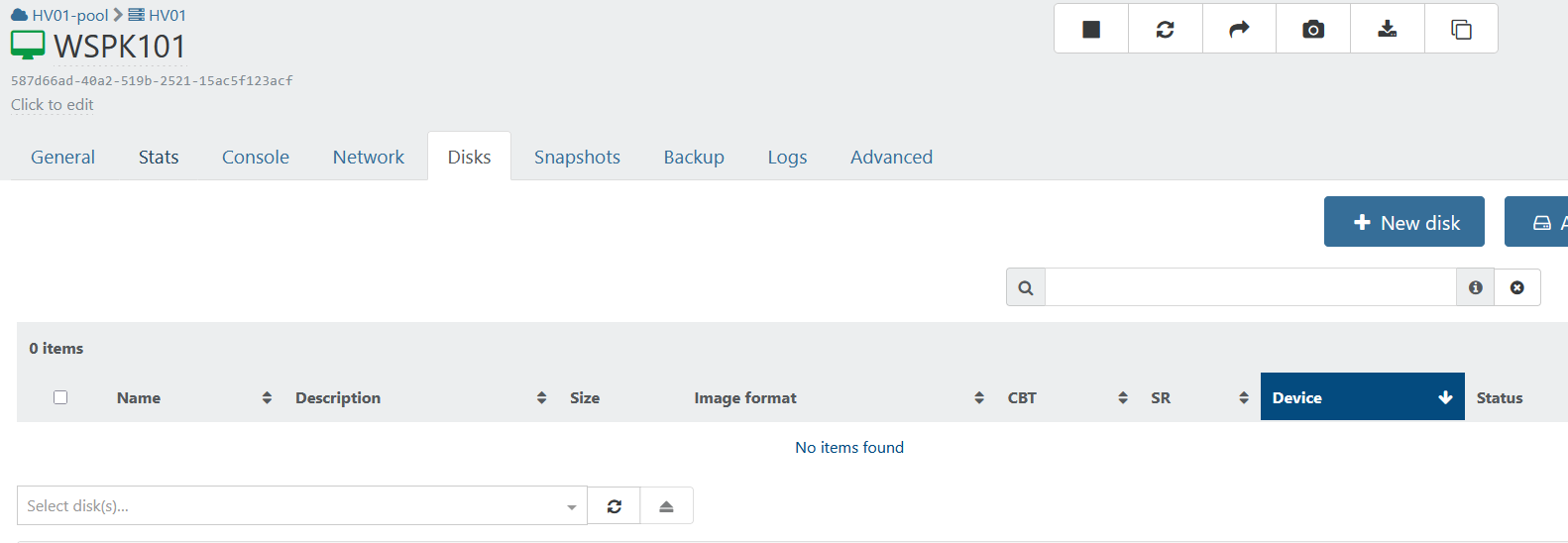

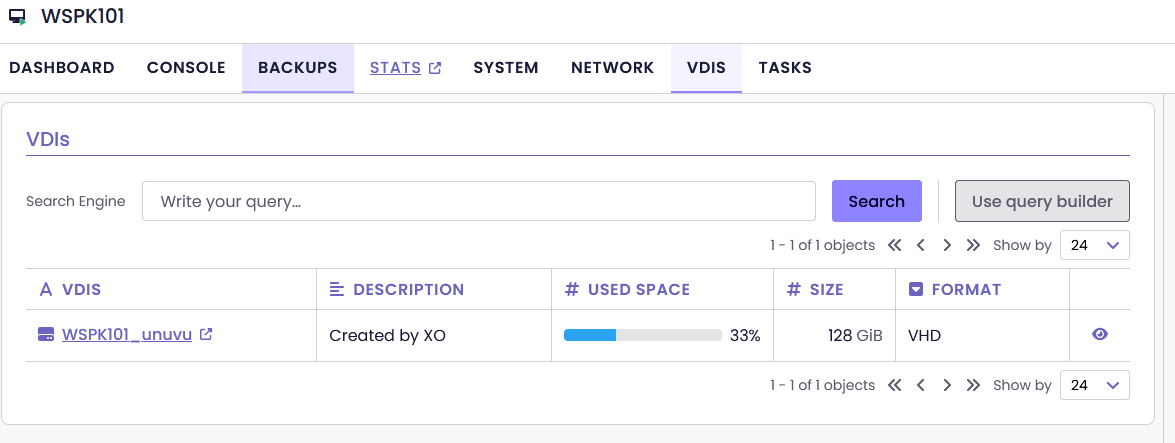

I also see it in my lab.

The array was created manually after installation, using unused disks, and was then attached in the usual way as a local SR via XO.

It would be nice if it also handled the state of other mdadm RAID arrays. After all, the message "All mdadm RAID are healthy" rather implies that it does.

Yeah, that's it, thanks.

device = '/dev/md0'
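The hard-coded `device = '/dev/md0'` is presumably why only one array is considered. As a rough idea of how every array could be covered, here is a Python sketch that reads the contents of `/proc/mdstat` and summarises each `mdX` entry. `list_md_arrays` and `all_healthy` are hypothetical names, and this is not the plugin's actual code. The sketch only looks at the `(F)` failed-member flag and the `[n/m] [U_]` status map of redundant arrays. It does not cover everything mdadm can report.

```python
from __future__ import annotations

import re

# Redundant arrays have a status line ending in e.g. "[2/1] [U_]"; "_" marks a missing member.
_STATUS = re.compile(r"\[(\d+)/(\d+)\]\s+\[([U_]+)\]")


def list_md_arrays(mdstat: str) -> dict[str, dict]:
    """Hypothetical helper: summarise every md array in /proc/mdstat, not just /dev/md0."""
    arrays: dict[str, dict] = {}
    current = None
    for line in mdstat.splitlines():
        name, sep, rest = line.partition(" : ")
        if sep and name.startswith("md"):
            # Header line, e.g. "md0 : active raid0 sdb[1] sda[0] sdc[2]".
            tokens = rest.split()
            members = [t for t in tokens[1:] if "[" in t]  # e.g. "sdb[1]", "sdc[2](F)"
            levels = [t for t in tokens[1:] if "[" not in t and not t.startswith("(")]
            current = {
                "state": tokens[0],  # "active" or "inactive"
                "level": levels[0] if levels else None,  # absent for inactive arrays
                "members": members,
                "failed": [m for m in members if m.endswith("(F)")],
                "degraded": False,
            }
            arrays["/dev/" + name.strip()] = current
        elif current is not None:
            # Continuation lines of the most recent array; look for a status map with gaps.
            status = _STATUS.search(line)
            if status and "_" in status.group(3):
                current["degraded"] = True
    return arrays


def all_healthy(arrays: dict[str, dict]) -> bool:
    return all(
        a["state"] == "active" and not a["failed"] and not a["degraded"]
        for a in arrays.values()
    )
```

On a host, something like `all_healthy(list_md_arrays(open("/proc/mdstat").read()))` would then cover every array. Note that an empty dict, meaning no arrays at all, also counts as healthy.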

@andriy.sultanov said in XCP-ng 8.3 updates announcements and testing:

does this reproduce if you reboot again

Yes, it does. I’ve sent the logs via PM.

[18:38 vms04 ~]# xe host-call-plugin host-uuid=5f94209f-8801-4179-91d5-5bdf3eb1d3f1 plugin=raid.py fn=check_raid_pool

{}

[18:38 vms04 ~]#

[18:38 vms04 ~]# cat /proc/mdstat

Personalities : [raid0]

md0 : active raid0 sdb[1] sda[0] sdc[2]

11720658432 blocks super 1.2 512k chunks

unused devices: <none>

[18:56 vms04 ~]# mdadm --detail /dev/md0

/dev/md0:

Version : 1.2

Creation Time : Fri May 2 14:43:06 2025

Raid Level : raid0

Array Size : 11720658432 (11177.69 GiB 12001.95 GB)

Raid Devices : 3

Total Devices : 3

Persistence : Superblock is persistent

Update Time : Fri May 2 14:43:06 2025

State : clean

Active Devices : 3

Working Devices : 3

Failed Devices : 0

Spare Devices : 0

Chunk Size : 512K

Consistency Policy : none

Name : vms04:0 (local to host vms04)

UUID : bbdc7253:792a8ada:ee21a207:4b8d52d2

Events : 0

Number Major Minor RaidDevice State

0 8 0 0 active sync /dev/sda

1 8 16 1 active sync /dev/sdb

2 8 32 2 active sync /dev/sdc

[18:56 vms04 ~]#
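For this array, `mdadm --detail` reports `State : clean`, `Failed Devices : 0` and all three devices active, so the array itself looks healthy even though `check_raid_pool` returns `{}`. Here is a Python sketch of a per-array check based on that output. `parse_mdadm_detail` and `detail_is_healthy` are hypothetical names, not the plugin's code. The health test is deliberately conservative: any extra State flag, such as `degraded` or `resyncing`, counts as needing attention.

```python
from __future__ import annotations


def parse_mdadm_detail(output: str) -> dict[str, str]:
    """Hypothetical helper: collect the "Key : Value" lines of `mdadm --detail` into a dict."""
    fields: dict[str, str] = {}
    for line in output.splitlines():
        # Split on the first " : " only, so values like "vms04:0" or times keep their colons.
        key, sep, value = line.partition(" : ")
        if sep:
            fields[key.strip()] = value.strip()
    return fields


def detail_is_healthy(fields: dict[str, str]) -> bool:
    # State may be e.g. "clean", "active", "clean, degraded"; only plain clean/active pass.
    state_flags = {s.strip() for s in fields.get("State", "").split(",")}
    return (
        state_flags <= {"clean", "active"}
        and fields.get("Failed Devices", "0") == "0"
        and fields.get("Active Devices") == fields.get("Raid Devices")
    )
```

The input could come from `subprocess.run(["mdadm", "--detail", "/dev/md0"], capture_output=True, text=True).stdout`, repeated for each array device.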

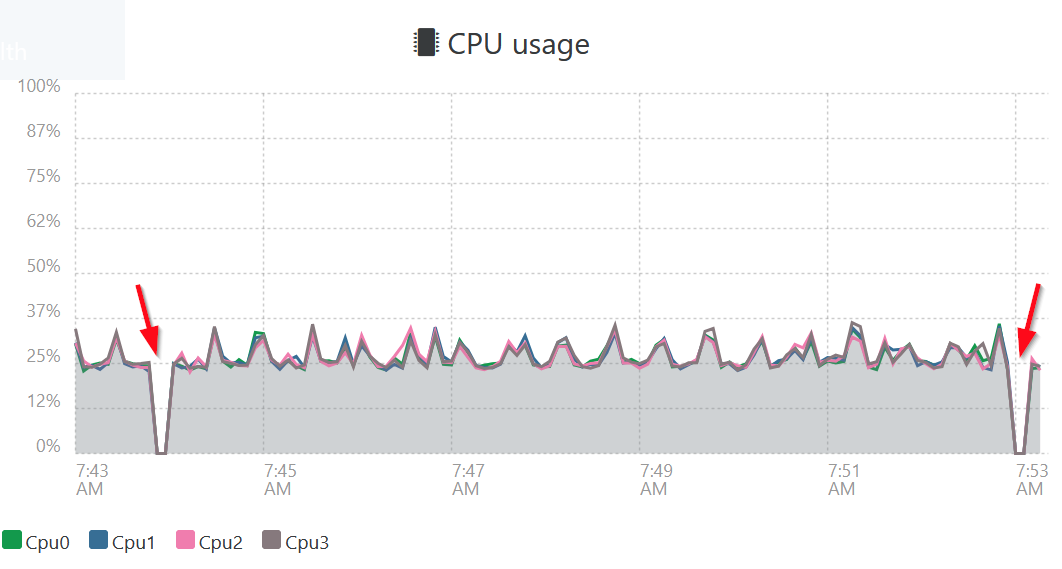

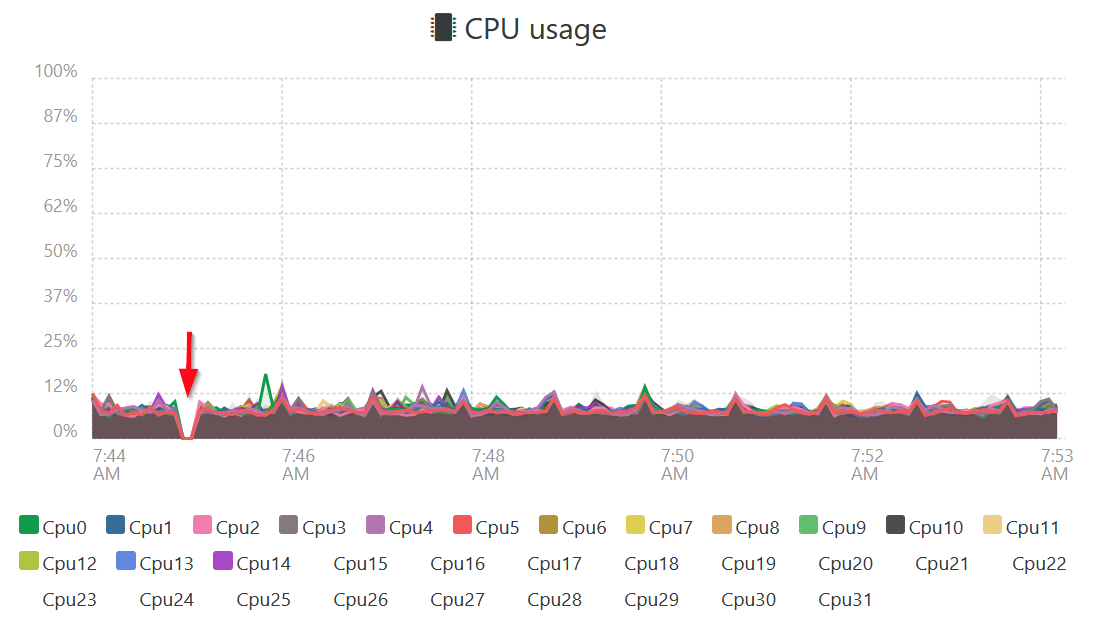

@stormi No stats until the toolstack is restarted, as with previous candidates.

So it looks like it was a browser cache problem, solved by clearing the cache.

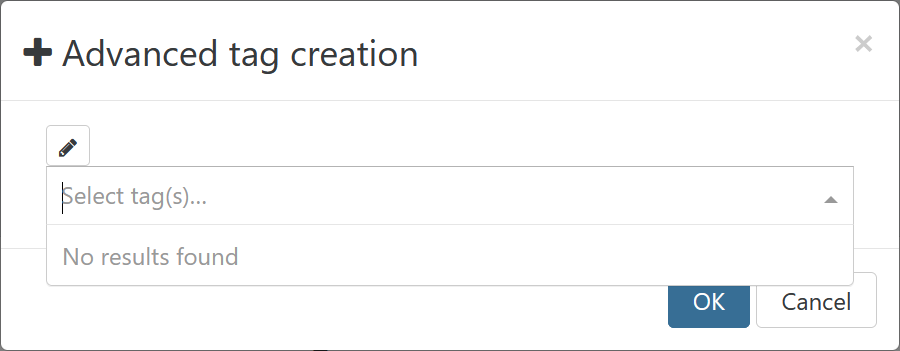

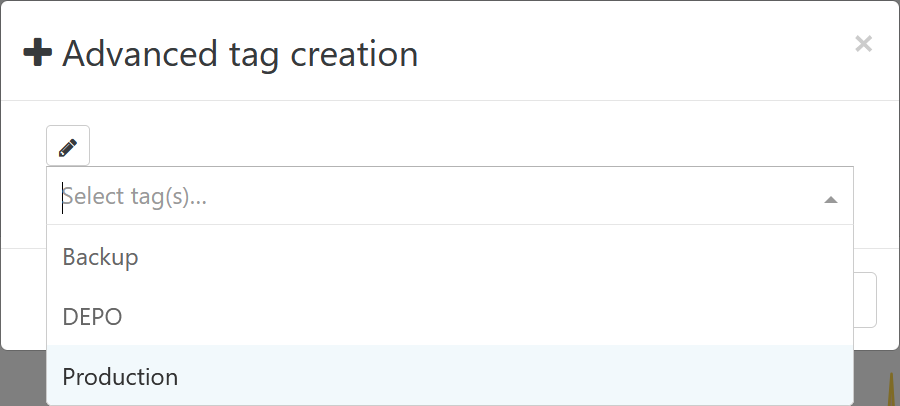

Hi,

In XO from source commit ab569, the tags I'm using don't appear in the pick list.

In XOA, they are available in the pick list.

Any idea?

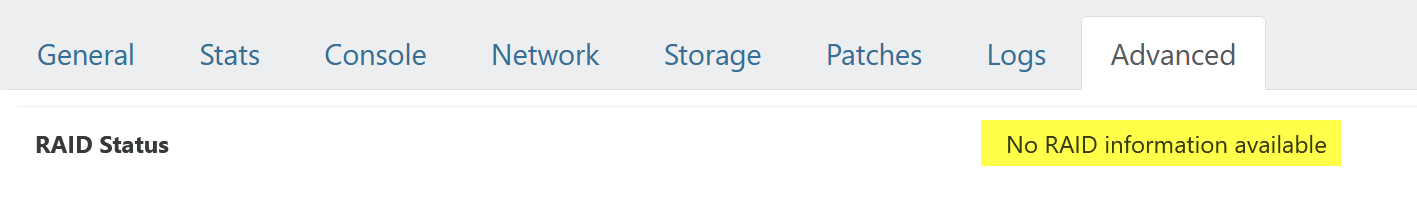

Under what circumstances is the RAID array status displayed here? I have an mdadm RAID array on my host (dom0), but here it says "No RAID information available":

@XCP-ng-JustGreat Until this is fixed, you need to restart the toolstack after each host reboot. Hosts aren’t rebooted often, so it’s not really a problem.

@Greg_E Please try restarting the toolstack once more.