I have the same problem with 2 servers.

The solution for me was to:

- migrate the VM between servers with a different SR, or to a third server;

- export/import virtual machines that are less important and can tolerate downtime.


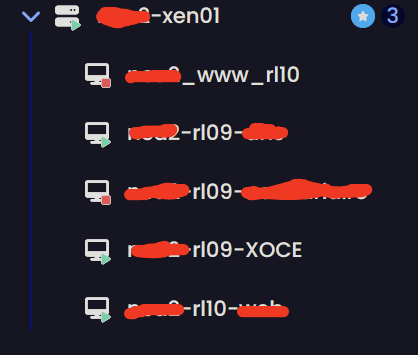

For me, XO6 is a bit confusing.

For example, why are the icons (like New VM) so small and tucked into the far corners? It's as if they're meant to be hidden.

Wouldn't it be more intuitive to place them at the top/bottom of this menu, or in line with the title (***-xen01)?

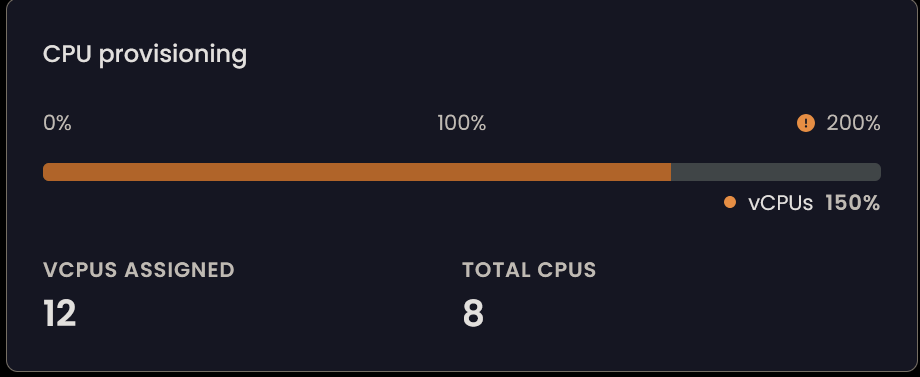

Another thing is the number of allocated processors, which in my opinion is misleading. It shows the total number of vCPUs allocated, including those of VMs that are not running.

Wouldn't it be better to show something like:

8 vCPUs for running VMs / 4 vCPUs for stopped VMs - 12 vCPUs allocated in total / 8 CPUs in total.

And color-code them, e.g. 8 green / 4 red / 12 orange / 8 grey.

Running VM
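The suggested breakdown is plain arithmetic over per-VM allocations. Below is a minimal sketch using a hypothetical list of VMs chosen to reproduce the 8 / 4 / 12 / 8 example. `VM` and `vcpu_summary` are illustrative names and are not part of XO.

```python
from dataclasses import dataclass


@dataclass
class VM:
    name: str
    vcpus: int
    running: bool


def vcpu_summary(vms: list[VM], host_cpus: int) -> dict[str, int]:
    """Split allocated vCPUs between running and stopped VMs."""
    running = sum(vm.vcpus for vm in vms if vm.running)
    stopped = sum(vm.vcpus for vm in vms if not vm.running)
    return {
        "running": running,              # green: vCPUs of running VMs
        "stopped": stopped,              # red: vCPUs allocated to stopped VMs
        "allocated": running + stopped,  # orange: the single figure shown today
        "host": host_cpus,               # grey: physical CPUs on the host
    }


# Hypothetical VMs: two running with 4 vCPUs each, one stopped with 4 vCPUs
vms = [VM("vm-a", 4, True), VM("vm-b", 4, True), VM("vm-c", 4, False)]
s = vcpu_summary(vms, host_cpus=8)
print(
    f"{s['running']} vCPUs running VMs / {s['stopped']} vCPUs stopped VMs - "
    f"total allocated vCPUs {s['allocated']} / total CPUs {s['host']}"
)
```

Only the vCPUs of running VMs actually compete for the host's physical CPUs. That is why showing them separately is less misleading than the combined figure alone.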

@MathieuRA

I can also confirm that it works. Thank you.

I managed to get it working, although the solution is not exactly to my liking.

I enabled both ports 80 and 443:

```toml
[http]

[[http.listen]]
port = 80

[[http.listen]]
port = 443
cert = '/opt/ssl-local/fullchain.pem'
key = '/opt/ssl-local/privkey.pem'

# CRITICAL WEBSOCKET CONFIGURATION
[http.upgrade]
'/v5/api' = true
'/v5/api/updater' = true

# Make sure these routes are allowed for WebSocket upgrade
[http.routes]
'/v5/api' = { upgrade = true }
'/v5/api/updater' = { upgrade = true }

# List of files/directories which will be served.
[http.mounts]
'/v6' = '../../@xen-orchestra/web/dist/'
'/' = '../xo-web/dist/'

[redis]

[remoteOptions]

[plugins]
```

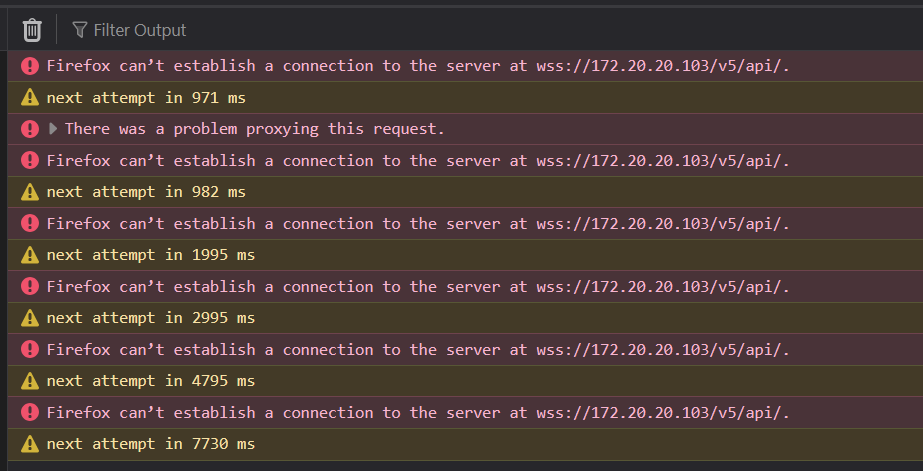

@ph7

Over HTTP it works, but only without the lines below (those with /v5) in the config. Over HTTPS the problem appears, and it shouldn't.

```toml
...

# List of files/directories which will be served.
[http.mounts]
'/v6' = '../../@xen-orchestra/web/dist/'
'/' = '../xo-web/dist/'

# List of proxied URLs (HTTP & WebSockets).
[http.proxies]
#'/v5/api' = 'ws://localhost:9000/api'
#'/v5/api/updater' = 'ws://localhost:9001'
#'/v5/rest' = 'http://localhost:9000/rest'

...
```

I don't run any scripts; I install according to the documentation, using Node.js 22:

```sh
npm install -g npm
git clone -b master https://github.com/vatesfr/xen-orchestra
cp xo-server.toml /opt/xen-orchestra/packages/xo-server/.xo-server.toml
cd /opt/xen-orchestra
yarn
yarn build
# XO6
yarn run turbo run build --filter @xen-orchestra/web
yarn cache clean
systemctl restart xo-server
```

The last build (b667bc8) works perfectly. This version is fine too, except that many links in v6 still point to v5.

In my opinion, v6 is not yet ready to be the default; there are too many links to v5.

Same problem for me too.

My configuration file now looks like the one below. If I click on v6 from v5 it works, but not the other way around.

Also, the links always go to /v5, but if v5 is the default, what's the point?

I think that's the problem: instead of taking the base path from the config, the /v5 prefix is hard-coded in the links.

The config:

```toml
[http]

[http.cookies]

[[http.listen]]
port = 443
cert = '/opt/ssl-local/fullchain.pem'
key = '/opt/ssl-local/privkey.pem'

[http.mounts]
'/v6' = '../../@xen-orchestra/web/dist/'
'/' = '../xo-web/dist/'

# List of proxied URLs (HTTP & WebSockets).
[http.proxies]
'/v5/api' = 'ws://localhost:9000/api'
'/v5/api/updater' = 'ws://localhost:9001'
'/v5/rest' = 'http://localhost:9000/rest'

[redis]

[remoteOptions]

[plugins]
```

The problem with /v5

How do I make these settings from /etc/xensource/usb-policy.conf permanent, so that they survive server updates?

```
ALLOW: class=0a # CDC-Data
ALLOW: class=0b # Smartcard
```

Thank you.
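Whether an update overwrites `/etc/xensource/usb-policy.conf` depends on how the package ships it, so one pragmatic option is to re-apply the change after each update. Below is a minimal sketch that assumes the file contains `DENY: class=0a` / `DENY: class=0b` rules that need to become `ALLOW`. The helper name and the sample text are hypothetical, so check the real file on your host first.

```python
import re

# Matches a rule such as "DENY: class=0a # CDC-Data" and captures the class code
DENY_CLASS = re.compile(r"DENY:\s*class=([0-9a-fA-F]{2})\b")


def allow_usb_classes(policy_text: str, classes: list[str]) -> str:
    """Rewrite 'DENY: class=XX' rules to 'ALLOW: class=XX' for the given classes.

    Other lines are kept verbatim, and running it twice changes nothing.
    """
    wanted = {c.lower() for c in classes}
    out = []
    for line in policy_text.splitlines(keepends=True):
        m = DENY_CLASS.match(line)
        if m and m.group(1).lower() in wanted:
            line = "ALLOW:" + line[len("DENY:"):]
        out.append(line)
    return "".join(out)


# Hypothetical excerpt, not necessarily the stock file contents
sample = (
    "DENY: class=09 # Hub\n"
    "DENY: class=0a # CDC-Data\n"
    "DENY: class=0b # Smartcard\n"
)
print(allow_usb_classes(sample, ["0a", "0b"]), end="")
```

On the host, you would read the policy file, pass its text through `allow_usb_classes(text, ["0a", "0b"])` and write the result back, e.g. from a script run after each update. This does not by itself stop an update from replacing the file.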

hmm,

I found the solution, after I read here on forum.

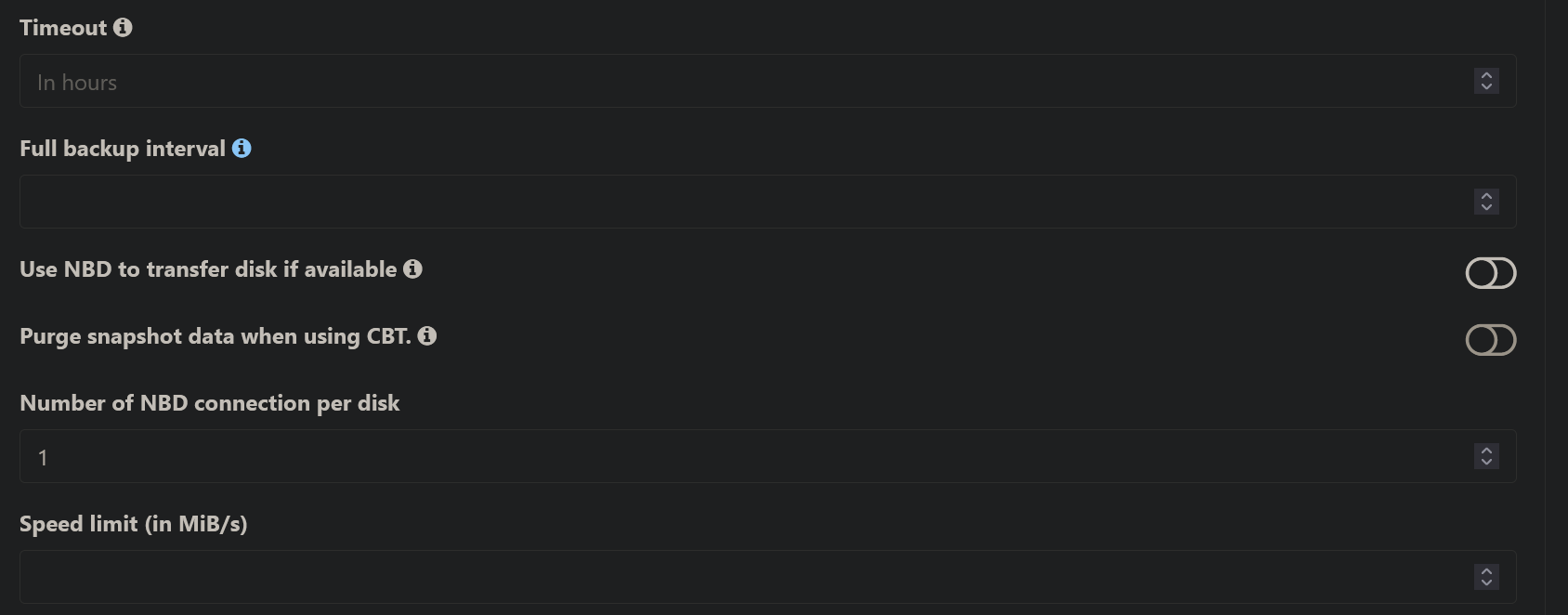

I enable this options and save and then disable and save and now is ok. Task is running again. It is running with last commit to

So the problem is with these settings which may not exist in the task created before the update.
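The workaround above (enable the options, save, disable them, save again) fits the idea that jobs created before the update simply lacked keys the new code expects, and that re-saving wrote those keys out. Below is a minimal sketch of the usual defensive pattern: filling in missing settings with defaults when an older job is loaded. The setting names and default values are hypothetical, not XO's actual job schema.

```python
from typing import Any

# Hypothetical settings added in a newer version, with their default values
DEFAULTS: dict[str, Any] = {"newFeatureEnabled": False, "newFeatureLimit": 0}


def load_settings(saved: dict[str, Any]) -> dict[str, Any]:
    """Merge saved job settings over the defaults; saved values win."""
    return {**DEFAULTS, **saved}


old_job = {"reportWhen": "always"}  # saved before the hypothetical new settings existed
print(load_settings(old_job))
```

With this pattern, an old job behaves like one re-saved with the new options turned off, so no manual toggling would be needed.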

@florent

The same result

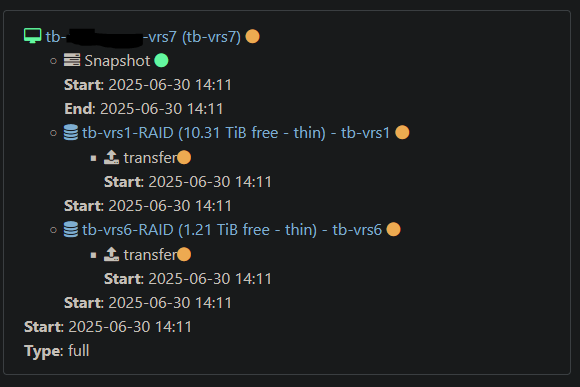

Logs, but task is blocked on importing step

```json
{
  "data": {
    "mode": "delta",
    "reportWhen": "always"
  },
  "id": "1751375761951",
  "jobId": "109e74e9-b59f-483b-860f-8f36f5223789",
  "jobName": "tb-xxxx-xxxx-vrs7",
  "message": "backup",
  "scheduleId": "40f57bd8-2557-4cf5-8322-705ec1d811d2",
  "start": 1751375761951,
  "status": "pending",
  "infos": [
    {
      "data": {
        "vms": [
          "629bdfeb-7700-561c-74ac-e151068721c2"
        ]
      },
      "message": "vms"
    }
  ],
  "tasks": [
    {
      "data": {
        "type": "VM",
        "id": "629bdfeb-7700-561c-74ac-e151068721c2",
        "name_label": "tb-xxxx-xxxx-vrs7"
      },
      "id": "1751375768046",
      "message": "backup VM",
      "start": 1751375768046,
      "status": "pending",
      "tasks": [
        {
          "id": "1751375768586",
          "message": "snapshot",
          "start": 1751375768586,
          "status": "success",
          "end": 1751375772595,
          "result": "904c2b00-087f-45ae-9799-b6dad1680aff"
        },
        {
          "data": {
            "id": "1afcdfda-6ede-3cb5-ecbf-29dc09ea605c",
            "isFull": true,
            "name_label": "tb-vrs1-RAID",
            "type": "SR"
          },
          "id": "1751375772596",
          "message": "export",
          "start": 1751375772596,
          "status": "pending",
          "tasks": [
            {
              "id": "1751375773638",
              "message": "transfer",
              "start": 1751375773638,
              "status": "pending"
            }
          ]
        },
        {
          "data": {
            "id": "a5d2b22e-e4be-c384-9187-879aa41dd70f",
            "isFull": true,
            "name_label": "tb-vrs6-RAID",
            "type": "SR"
          },
          "id": "1751375772611",
          "message": "export",
          "start": 1751375772611,
          "status": "pending",
          "tasks": [
            {
              "id": "1751375773654",
              "message": "transfer",
              "start": 1751375773654,
              "status": "pending"
            }
          ]
        }
      ]
    }
  ]
}
```
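In this log, both export/transfer subtasks are still `pending` with no `end`, so the job never completes. Below is a minimal sketch for listing such stuck leaf tasks from a log saved as JSON. It assumes the nested structure shown above: `message`, `status`, an optional `data.name_label`, and children under `tasks`. `walk` and `stuck_tasks` are hypothetical helpers, not part of XO.

```python
import json
from typing import Any, Iterator

TaskPath = tuple[str, ...]


def walk(task: dict[str, Any], path: TaskPath = ()) -> Iterator[tuple[TaskPath, dict[str, Any]]]:
    """Yield (path, task) for a task and every nested subtask, depth first."""
    label = task.get("message", "?")
    name = task.get("data", {}).get("name_label")
    if name:
        label += f" [{name}]"
    path = path + (label,)
    yield path, task
    for sub in task.get("tasks", []):
        yield from walk(sub, path)


def stuck_tasks(log: dict[str, Any]) -> list[str]:
    """List pending tasks that have no subtasks, i.e. where the job is waiting."""
    return [
        " > ".join(path)
        for path, task in walk(log)
        if task.get("status") == "pending" and not task.get("tasks")
    ]


# Trimmed copy of the log above (ids and timestamps omitted)
LOG = json.loads("""
{"message": "backup", "status": "pending", "tasks": [
  {"message": "backup VM", "status": "pending",
   "data": {"type": "VM", "name_label": "tb-xxxx-xxxx-vrs7"},
   "tasks": [
     {"message": "snapshot", "status": "success"},
     {"message": "export", "status": "pending",
      "data": {"type": "SR", "name_label": "tb-vrs1-RAID"},
      "tasks": [{"message": "transfer", "status": "pending"}]},
     {"message": "export", "status": "pending",
      "data": {"type": "SR", "name_label": "tb-vrs6-RAID"},
      "tasks": [{"message": "transfer", "status": "pending"}]}
   ]}
]}
""")

for line in stuck_tasks(LOG):
    print(line)
```

For a full log saved to a file, the same call would be `stuck_tasks(json.load(f))` on the opened file.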

@florent

I will update to the latest version and look at the logs.

I'm currently on commit 7994fc52c31821c2ad482471319551ee00dc1472.

@olivierlambert

I updated to the latest commit; there are two more changes on Git now.

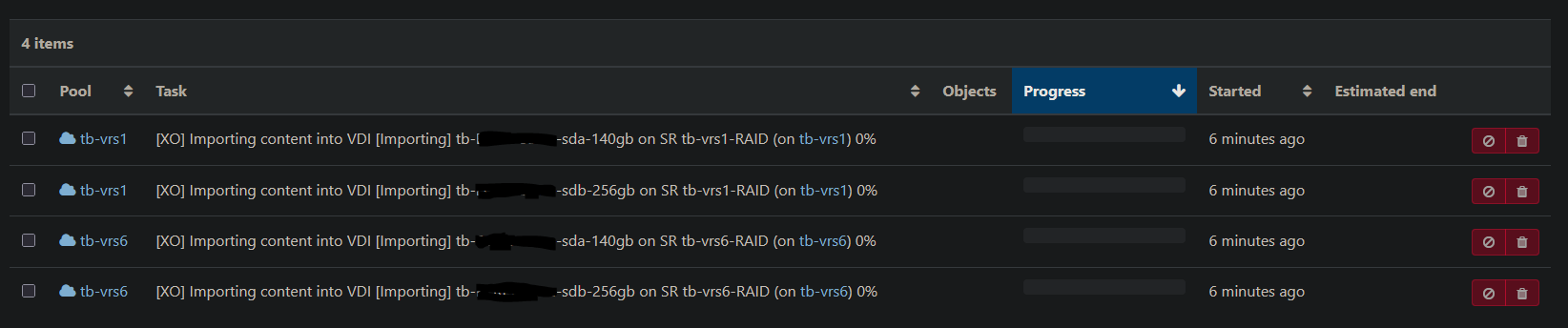

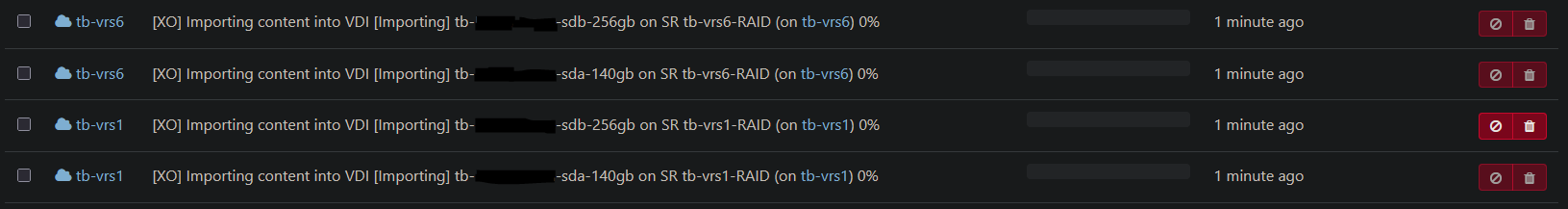

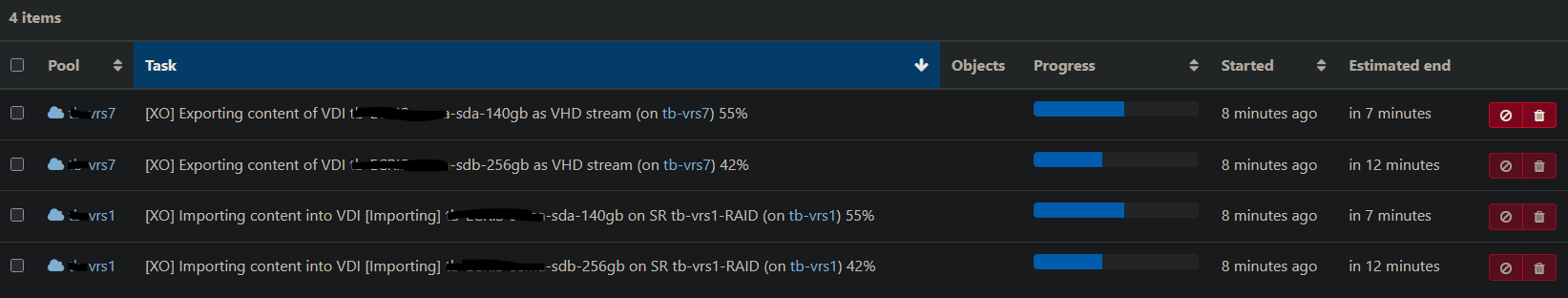

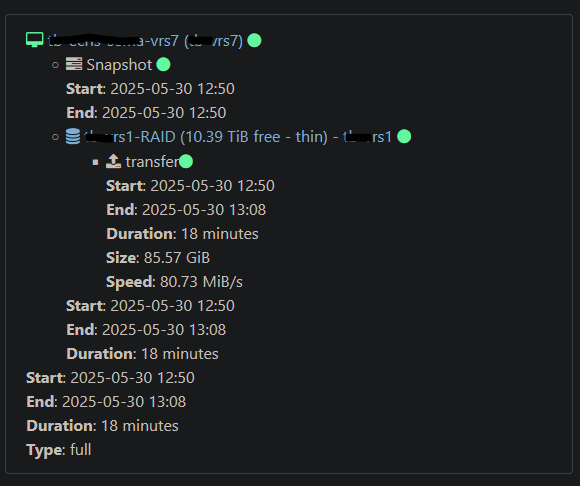

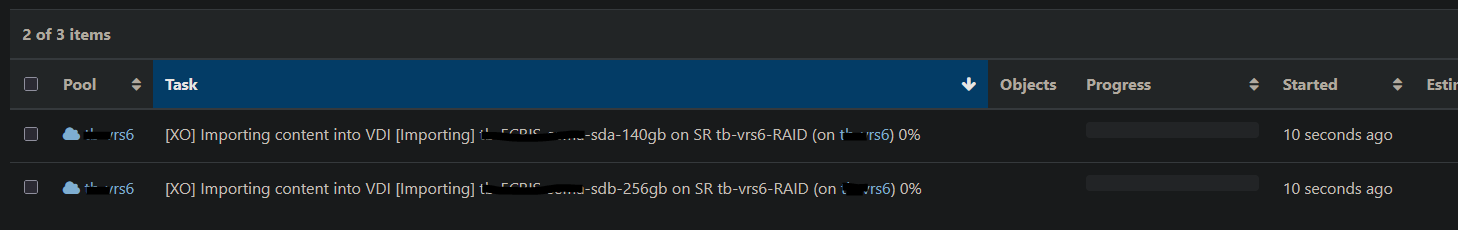

The problem still exists. From what I've noticed, the export tasks are created and then disappear shortly afterwards. A few moments later the import tasks appear, and it stays stuck like this.

Everything is OK only up to and including commit 7994fc52c31821c2ad482471319551ee00dc1472.

EDIT: I read on the forum about similar problems. I don't have NBD enabled.

@olivierlambert

The problem starts with commit cbc07b319fea940cab77bc163dfd4c4d7f886776.

Commit 7994fc52c31821c2ad482471319551ee00dc1472 is OK.

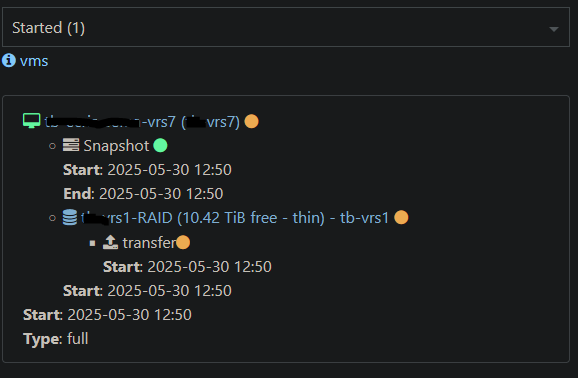

Hello, the problem has reappeared, this time with a different behavior.

It hangs at this stage, and the export tasks do not even start or show up.

Info:

- XCP-ng 8.3, up to date

- XOCE commit fcefaaea85651c3c0bb40bfba8199dd4e963211c

I tested with commit e64c434 and it is OK.

Thank you.

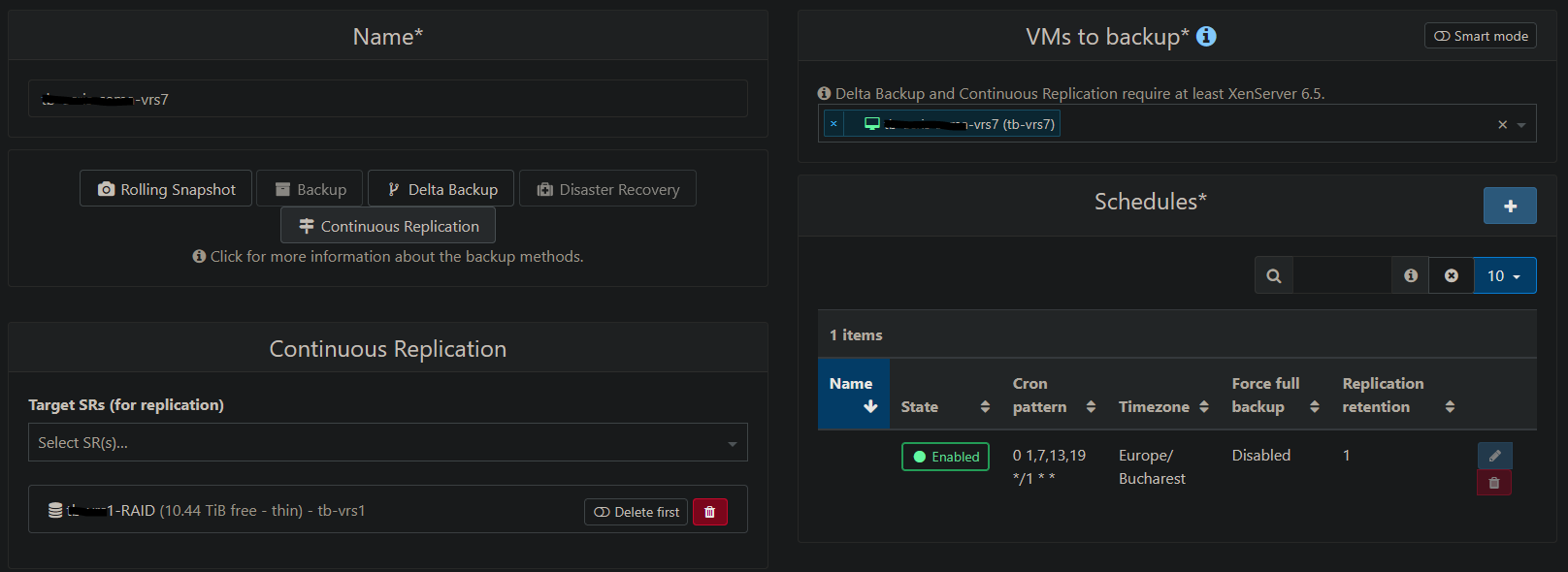

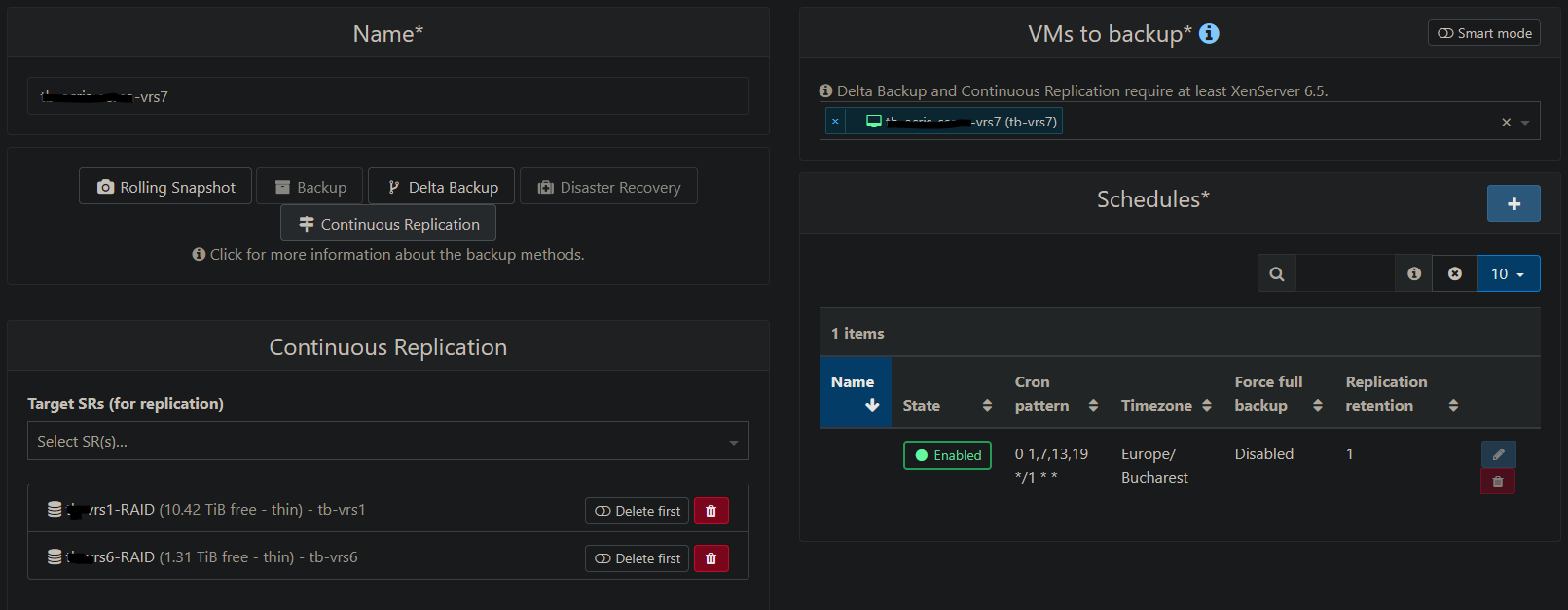

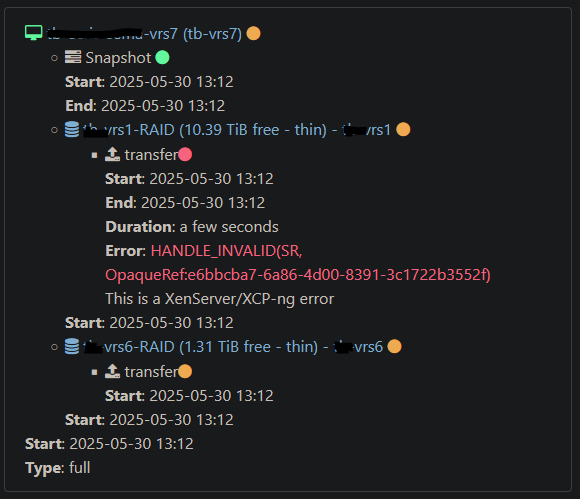

Hello,

I have problems with continuous replication. If I replicate to a single SR, everything is OK, but if I add two or more SRs, it fails with the error message below.

The problem is that it then does nothing and stays stuck, and the failing SR is always the first one in the list.

Info:

Here it is with only one SR, where everything is OK:

configuration:

transfer:

message:

Here it is with two SRs:

configuration:

transfer:

message:

Log:

```json
{
  "data": {
    "mode": "delta",
    "reportWhen": "always"
  },
  "id": "1748599936065",
  "jobId": "109e74e9-b59f-483b-860f-8f36f5223789",
  "jobName": "********-vrs7",
  "message": "backup",
  "scheduleId": "40f57bd8-2557-4cf5-8322-705ec1d811d2",
  "start": 1748599936065,
  "status": "pending",
  "infos": [
    {
      "data": {
        "vms": [
          "629bdfeb-7700-561c-74ac-e151068721c2"
        ]
      },
      "message": "vms"
    }
  ],
  "tasks": [
    {
      "data": {
        "type": "VM",
        "id": "629bdfeb-7700-561c-74ac-e151068721c2",
        "name_label": "********-vrs7"
      },
      "id": "1748599941103",
      "message": "backup VM",
      "start": 1748599941103,
      "status": "pending",
      "tasks": [
        {
          "id": "1748599941671",
          "message": "snapshot",
          "start": 1748599941671,
          "status": "success",
          "end": 1748599945608,
          "result": "5c030f40-0b34-d1b4-10aa-f849548aa0b7"
        },
        {
          "data": {
            "id": "1afcdfda-6ede-3cb5-ecbf-29dc09ea605c",
            "isFull": true,
            "name_label": "********-RAID",
            "type": "SR"
          },
          "id": "1748599945609",
          "message": "export",
          "start": 1748599945609,
          "status": "pending",
          "tasks": [
            {
              "id": "1748599948875",
              "message": "transfer",
              "start": 1748599948875,
              "status": "failure",
              "end": 1748599949159,
              "result": {
                "code": "HANDLE_INVALID",
                "params": [
                  "SR",
                  "OpaqueRef:e6bbcba7-6a86-4d00-8391-3c1722b3552f"
                ],
                "call": {
                  "duration": 6,
                  "method": "VDI.create",
                  "params": [
                    "* session id *",
                    {
                      "name_description": "********-sdb-256gb",
                      "name_label": "********-sdb-256gb",
                      "other_config": {
                        "xo:backup:vm": "629bdfeb-7700-561c-74ac-e151068721c2",
                        "xo:copy_of": "59ee458d-99c8-4a45-9c91-263c9729208b"
                      },
                      "read_only": false,
                      "sharable": false,
                      "SR": "OpaqueRef:e6bbcba7-6a86-4d00-8391-3c1722b3552f",
                      "tags": [],
                      "type": "user",
                      "virtual_size": 274877906944,
                      "xenstore_data": {}
                    }
                  ]
                },
                "message": "HANDLE_INVALID(SR, OpaqueRef:e6bbcba7-6a86-4d00-8391-3c1722b3552f)",
                "name": "XapiError",
                "stack": "XapiError: HANDLE_INVALID(SR, OpaqueRef:e6bbcba7-6a86-4d00-8391-3c1722b3552f)\n at XapiError.wrap (file:///opt/xen-orchestra/packages/xen-api/_XapiError.mjs:16:12)\n at file:///opt/xen-orchestra/packages/xen-api/transports/json-rpc.mjs:38:21\n at process.processTicksAndRejections (node:internal/process/task_queues:105:5)"
              }
            }
          ]
        },
        {
          "data": {
            "id": "a5d2b22e-e4be-c384-9187-879aa41dd70f",
            "isFull": true,
            "name_label": "********-vrs6-RAID",
            "type": "SR"
          },
          "id": "1748599945617",
          "message": "export",
          "start": 1748599945617,
          "status": "pending",
          "tasks": [
            {
              "id": "1748599948887",
              "message": "transfer",
              "start": 1748599948887,
              "status": "pending"
            }
          ]
        }
      ]
    }
  ]
}
```

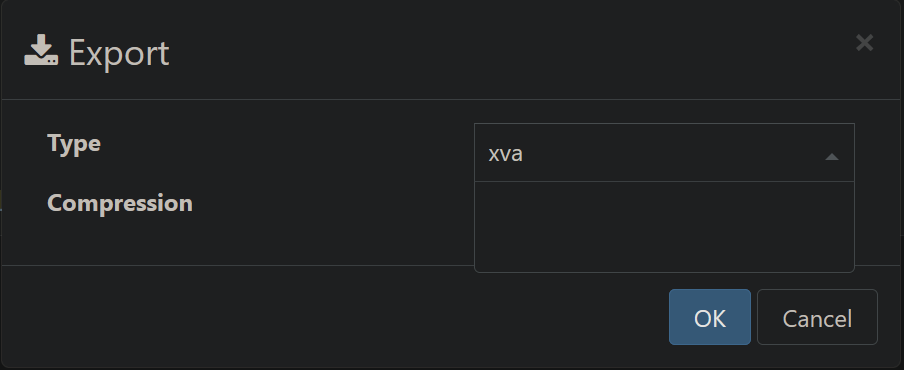

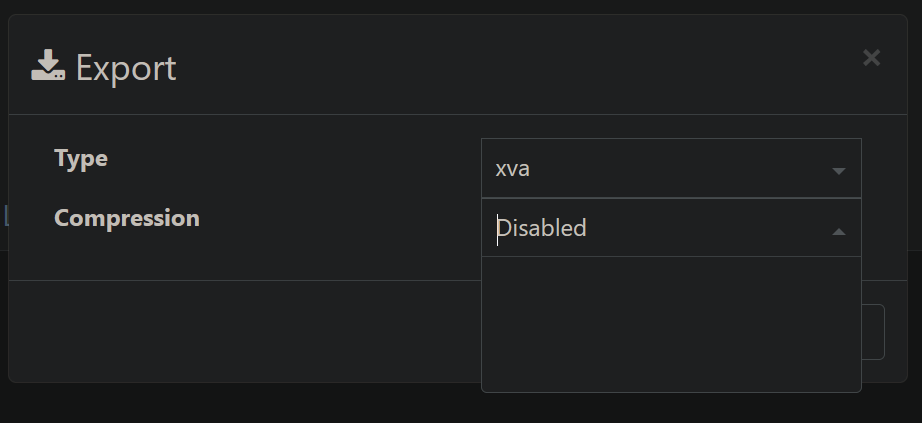

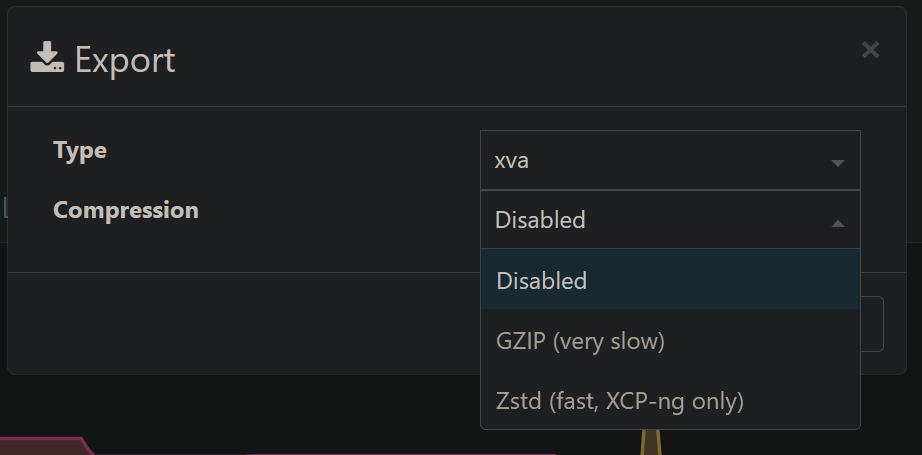

I have a problem after commit 6e508b0: I can no longer select the export method.

With 6e508b0, everything is OK.

Commit a386680

Commit 6e508b0

At some point (I don't know exactly when, since this is a home lab) I could attach a USB HDD the same way as a virtual disk and apply exclusions to it.

In more detail:

At the moment I have this:

A VM with TrueNAS that has:

I want to back up the TrueNAS system, which means only sda.

I excluded sdb via [NOBAK] [NOSNAP].

But I can no longer exclude sdc via [NOBAK] [NOSNAP].
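In Xen Orchestra, disk exclusion is driven by the disk (VDI) name. A disk whose name contains `[NOBAK]` is skipped by backups, and `[NOSNAP]` keeps it out of snapshots. Below is a minimal sketch of that filtering, assuming a plain substring check on the name. The disk names are hypothetical stand-ins for the TrueNAS VM described above, and `Disk` and the helpers are illustrative, not XO's code.

```python
from dataclasses import dataclass


@dataclass
class Disk:
    device: str
    name_label: str


def disks_to_back_up(disks: list[Disk]) -> list[str]:
    """Keep only disks whose name does not contain the [NOBAK] tag."""
    return [d.device for d in disks if "[NOBAK]" not in d.name_label]


def disks_to_snapshot(disks: list[Disk]) -> list[str]:
    """Keep only disks whose name does not contain the [NOSNAP] tag."""
    return [d.device for d in disks if "[NOSNAP]" not in d.name_label]


# Hypothetical labels for the TrueNAS VM
disks = [
    Disk("sda", "truenas-system"),
    Disk("sdb", "[NOBAK] [NOSNAP] truenas-data"),
    Disk("sdc", "truenas-usb"),  # no tags in its name, so it is still included
]
print(disks_to_back_up(disks))
print(disks_to_snapshot(disks))
```

The goal in this setup is for both lists to contain only `sda`. Any disk whose name cannot carry the tags (as appears to be the case for sdc now) stays in both lists.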