@dinhngtu I'll try this on the next VM that exhibits the behavior, so as not to interrupt the end users on the VMs that are already done.


-


RE: Windows Xen Guest Agent (Rust-based) - Not reporting the IP address to Xen Orchestra

@dinhngtu Below is what I found on the latest VM where this occurred. The Windows Error Reporting entry says more information may be available in the report folder it lists, but there is nothing there.

Faulting application name: xen-guest-agent.exe, version: 9.0.9137.2, time stamp: 0x68e7c2e8
Faulting module name: xen-guest-agent.exe, version: 9.0.9137.2, time stamp: 0x68e7c2e8
Exception code: 0xc0000409
Fault offset: 0x00000000000a2c39
Faulting process id: 0xa20
Faulting application start time: 0x01dc439b0e299e74
Faulting application path: C:\Program Files\XCP-ng\Windows PV Drivers\XenGuestAgent\xen-guest-agent.exe
Faulting module path: C:\Program Files\XCP-ng\Windows PV Drivers\XenGuestAgent\xen-guest-agent.exe
Report Id: 145e6fdf-9c31-408c-a704-f09b923f957d
Faulting package full name:
Faulting package-relative application ID:

Fault bucket , type 0
Event Name: BEX64
Response: Not available
Cab Id: 0

Problem signature:
P1: xen-guest-agent.exe
P2: 9.0.9137.2
P3: 68e7c2e8
P4: xen-guest-agent.exe
P5: 9.0.9137.2
P6: 68e7c2e8
P7: 00000000000a2c39
P8: c0000409
P9: 0000000000000007
P10:

Attached files:

These files may be available here:
C:\ProgramData\Microsoft\Windows\WER\ReportQueue\AppCrash_xen-guest-agent._3e70918689a16355b26c3269beb18dcb31a29bf_48acc870_17b92d64

Analysis symbol:
Rechecking for solution: 0
Report Id: 145e6fdf-9c31-408c-a704-f09b923f957d
Report Status: 4
Hashed bucket:

-

RE: Windows Xen Guest Agent (Rust-based) - Not reporting the IP address to Xen Orchestra

@dinhngtu In the Windows Event Viewer, I didn't see anything out of the ordinary. Unfortunately, Ansible writes a lot of entries there, so anything relevant may be getting drowned out. If I get a chance to test with another migrated VM, I will check again.

-

Windows Xen Guest Agent (Rust-based) - Not reporting the IP address to Xen Orchestra

On some of the VMs we migrated over from ESXi, we have found that the new Xen Guest Agent (the one included in the new xcpng-winpv ISO) sometimes does not report the guest's IP address after a reboot.

So far this has happened on several of our Windows Server 2016 and 2019 VMs. After a reboot, the IP address displays briefly once the VM is back up, but as soon as the management agent is detected, XO goes back to reporting that there is no IPv4 address.

If I log into the Guest OS and restart the Xen Guest Agent (Rust-based) Windows Service, the IP address will show up immediately in XO. If I set the Xen Guest Agent Windows Service to Startup Type Automatic (Delayed Start) and reboot, the IP address will eventually be reported once the Service starts.
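Both workarounds (restarting the service, or switching it to Automatic (Delayed Start)) can be scripted on the guest. A minimal sketch, assuming Python is available in the guest and the script runs from an elevated prompt: `sc.exe config <name> start= delayed-auto` sets the delayed start type, and PowerShell's `Restart-Service` performs the manual restart. The service name `xen-guest-agent` is a hypothetical placeholder; `sc.exe` needs the service (key) name, which can differ from the display name and is shown in the service's Properties in services.msc.

```python
import subprocess
import sys

# HYPOTHETICAL service (key) name: look up the real one in services.msc
# (Properties > "Service name") or with `sc.exe query` before using this.
SERVICE_NAME = "xen-guest-agent"


def delayed_start_command(service_name: str) -> list[str]:
    """sc.exe command that sets a service to Automatic (Delayed Start)."""
    # sc.exe expects "start=" and its value as separate tokens.
    return ["sc.exe", "config", service_name, "start=", "delayed-auto"]


def restart_command(service_name: str) -> list[str]:
    """PowerShell command that restarts a service (the manual fix above)."""
    return [
        "powershell.exe", "-NoProfile", "-Command",
        f"Restart-Service -Name '{service_name}'",
    ]


def run(cmd: list[str]) -> None:
    # Both commands only exist on Windows and need an elevated prompt.
    if sys.platform != "win32":
        raise RuntimeError("this sketch only runs on a Windows guest")
    subprocess.run(cmd, check=True)


if __name__ == "__main__" and sys.platform == "win32":
    run(delayed_start_command(SERVICE_NAME))
```

Building the command lists separately from running them keeps the sketch easy to check before pointing it at a production VM.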

This isn't a major issue, since we can work around it by setting the Xen Guest Agent Service to Delayed Start in Windows, but we thought we would provide some feedback in case others are experiencing it too.

Note: We updated XO last week just before starting to install the new drivers/agents and our Pool is up to date according to the Pool Master.

-

RE: XCP-ng Guest Agent - Reported Windows Version for Servers

@dinhngtu Awesome and thank you so much!

When the new version is built, will we need to download and install it from GitHub, or is there another update mechanism?

-

XCP-ng Guest Agent - Reported Windows Version for Servers

Good Afternoon Everyone!

I am really excited for the release of the signed drivers and the guest agent for XCP-ng, so I installed it on one of our servers to test it. Everything went smoothly, but I noticed one thing after the install.

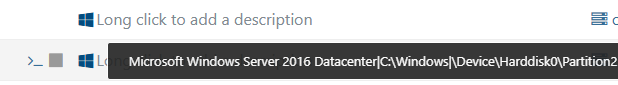

On the test VM, the agent reports the underlying Windows version (Windows 10) instead of the Server edition, such as Windows Server 2016 Datacenter. Examples are below. While this isn't a huge deal, since we can tag the VM manually, it is nice that with the XenServer agent we don't have to tag VMs to make them searchable/filterable on "Windows Server nnnn" in XO. Since we also have Windows 10 and 11 VMs in our environment, the Server 2016 test VM also shows up as Windows 10 in the list.

XCP-ng Agent Windows Server 2016

XenServer Agent Windows Server 2016

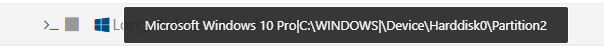

This is what the XenServer agent reports for a Windows 10 install.

As stated above, there is an easy albeit tedious workaround, so this is more feedback than an issue. I also appreciate how the XCP-ng agent does not include all the C:\ drive info that the XenServer agent does. That makes it look cleaner in my opinion.

-

RE: ISO Importing Results in .img Files

Another thing I noticed: if I rename the ISO in the XO UI, the new name sticks in the UI, but I can't seem to mount it to any VMs from the Console or Disks tab. A rescan of the SR didn't seem to make a difference either.

-

RE: ISO Importing Results in .img Files

This is still happening for us on our Community Edition instance, which I updated today, but the odd thing is that it doesn't happen immediately. When I upload an ISO file, it keeps its name for a while, but when I come back the next day, it has been renamed to <uuid>.img.

Maybe there's some maintenance job that runs that does the renaming?

-

RE: Rocky Linux 8 (RHEL 8) Potential for Performance Issue

@DustinB The vendor's documentation states official support for RHEL/Rocky/Alma/Oracle Linux 9.0+ systems.

@plaidypus said in Rocky Linux 8 (RHEL 8) Potential for Performance Issue:

> Is this still an issue, and if so, would the recommendation be to use EXT4 for both the OS and the data drive?

My apologies, I may have added too much context and information for this. I agree that this is not an issue for XO, but that was also not the original question. If I use Rocky Linux 8 and follow the XCP-ng recommendation in the documentation to use ext4, does this apply to both the root filesystem and also our secondary disk where more of the intensive IO will be?

-

RE: Rocky Linux 8 (RHEL 8) Potential for Performance Issue

@DustinB We currently pay for support on this product, and it supposedly supports Rocky Linux 9, but in practice it will not deploy unless we trick it into thinking it is RHEL 9 by swapping out the /etc/redhat-release file. While this workaround did work, our team was not confident in keeping the workaround in place for the automation processes.

Our team wants to get it working with Rocky 9, but upgrading the management software is out of our allowed scope for now (airgapping, InfoSec approvals) and working with their support is slow and our timeline to move these systems is short. Since we know the processes work with Rocky 8, we were instructed to go with that.

With that in mind, I think we are going to set up a new template with ext4 as the filesystem.

-

Rocky Linux 8 (RHEL 8) Potential for Performance Issue

In the XCP-ng Documentation, it states there are some performance issues with XFS on kernels prior to 4.20 after a live migration is performed. We decided in our migrations to use Rocky Linux 9 to work around this, but some of our older software only officially supports Rocky Linux 8.10 (kernel 4.18).

The servers will be running databases that live on a secondary drive. Is this still an issue, and if so, would the recommendation be to use EXT4 for both the OS and the data drive?

https://docs.xcp-ng.org/vms/#performance-drop-after-live-migration-for-rhel-8-like-vms

"On some RHEL 8-like systems, running kernels prior to v4.20, and using XFS as default root file system, performance issues have been observed after a live migration under heavy disk activity. XFS seems to have better performances with recent kernels but for older ones we recommend to use another journaled file system like EXT4."

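The documented condition (kernel older than 4.20 and an XFS file system) can be checked directly on a guest. A minimal sketch, assuming a Linux guest that exposes `/proc/mounts`; the docs only mention the root file system, so whether the caveat also covers a data disk is still the open question in this thread, and `/data` is a hypothetical mount point used for illustration.

```python
import platform
import re
from typing import Optional


def kernel_major_minor(release: str) -> tuple[int, int]:
    """Parse a release string such as '4.18.0-553.el8_10.x86_64' into (4, 18)."""
    m = re.match(r"(\d+)\.(\d+)", release)
    if m is None:
        raise ValueError(f"unrecognized kernel release: {release!r}")
    return int(m.group(1)), int(m.group(2))


def fs_type(mounts_text: str, mount_point: str = "/") -> Optional[str]:
    """Return the file system type mounted at mount_point, per /proc/mounts."""
    found = None
    for line in mounts_text.splitlines():
        fields = line.split()
        # /proc/mounts columns: device, mount point, fs type, options, dump, pass
        if len(fields) >= 3 and fields[1] == mount_point:
            found = fields[2]  # later entries are mounted on top of earlier ones
    return found


def caveat_applies(release: str, fstype: Optional[str]) -> bool:
    """True if the guest matches the documented case: kernel < 4.20 and XFS."""
    return kernel_major_minor(release) < (4, 20) and fstype == "xfs"


if __name__ == "__main__" and platform.system() == "Linux":
    with open("/proc/mounts") as f:
        mounts = f.read()
    # "/" is what the docs mention; "/data" is a HYPOTHETICAL data-disk mount.
    for mp in ("/", "/data"):
        fstype = fs_type(mounts, mp)
        print(mp, fstype, caveat_applies(platform.release(), fstype))
```

On a Rocky Linux 8.10 guest (kernel 4.18) with an XFS root, the check reports `True`; with an ext4 root, or on a Rocky Linux 9 kernel, it reports `False`.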
-

RE: Wide VMs on XCP-ng

@planedrop Thanks for the information! Back to the more general idea of wide VMs: I think it was originally more of an efficiency issue. Our support team noticed high CPU usage, but pCPU and overall host usage were very low.

It turned out we had stacked multiple heavily utilized wide VMs on the same hosts. Looking at the stats, there was so much co-stop that the VMs were wasting a lot of time trying to co-schedule their vCPUs. After spreading out the wide VMs, overall host CPU consumption actually went up and the performance issues went away.
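Spreading wide VMs across hosts can be approached as a simple balancing pass. A minimal sketch of one heuristic (place the largest VM first, always onto the host with the fewest vCPUs assigned so far); it counts only vCPUs, ignores RAM, NUMA layout and real utilization, and is not meant to describe how XO or Xen place VMs. The VM and host names are hypothetical.

```python
import heapq


def spread(vms: dict[str, int], hosts: list[str]) -> dict[str, list[str]]:
    """Greedily place VMs (name -> vCPU count) on the least-loaded host.

    Largest VMs go first (longest-processing-time heuristic), so as long as
    there are at least as many hosts as wide VMs, no two wide VMs share a host.
    """
    placement: dict[str, list[str]] = {h: [] for h in hosts}
    # Min-heap of (vCPUs assigned so far, host index, host name);
    # the index breaks ties deterministically in host order.
    heap = [(0, i, h) for i, h in enumerate(hosts)]
    heapq.heapify(heap)
    for name, vcpus in sorted(vms.items(), key=lambda kv: kv[1], reverse=True):
        load, i, host = heapq.heappop(heap)
        placement[host].append(name)
        heapq.heappush(heap, (load + vcpus, i, host))
    return placement


# Two hypothetical 26-vCPU VMs plus two small ones on two hosts.
print(spread({"wide-1": 26, "wide-2": 26, "small-1": 8, "small-2": 8}, ["host-a", "host-b"]))
# {'host-a': ['wide-1', 'small-1'], 'host-b': ['wide-2', 'small-2']}
```

The two wide VMs land on different hosts before the smaller ones fill in, which is the "spread them out" outcome described above.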

Since we are getting a fresh start on a new hypervisor, encouraging right-sized VMs and scaling out rather than up will probably be the way to go.

Thanks again for all your help!

-

RE: Guest Tools Recognized as Wrong Version

@olivierlambert Thanks for the information! Will newer versions of the Xen Guest Tools continue to register as 8.3.60-1 going forward?

Is the Xen Guest Agent ready for GA or should we hold off on installing it for now?

-

Guest Tools Recognized as Wrong Version

While I don't think this is a big deal, I thought I would bring it up.

We just installed the latest Xen Guest Tools for Linux from the GitHub page below. The version on GitHub, and the one shown when installing it via dnf on our guest, is 8.4.0-1. When I look at the VM in the XOA web UI, it says, "Management agent 8.3.60-1 detected." I tried restarting the xe-linux-distribution service and rebooting the VM, but it still shows the same version.

https://github.com/xenserver/xe-guest-utilities/releases

Is there just a discrepancy in the detection or is this working as intended? I don't think there is likely to be any impact but wanted to check before installing it on more VMs.

Any advice, recommendations or information is appreciated. Thanks in advance!

-

RE: Wide VMs on XCP-ng

@planedrop One workload is a .NET application that regularly uses 80-100% of 26 vCPUs, but unfortunately, I don't know much about the application as I am not on the development team. The other is for Elasticsearch. The latter is not much of an issue anymore (I convinced them to reduce the number of vCPUs, as we showed it rarely uses that much).

-

RE: Wide VMs on XCP-ng

@Andrew Per your example, if the NUMA size is 16 cores with 32 threads, would a 32 vCPU VM fit inside the NUMA node or would that spread them out across both nodes?

-

RE: Wide VMs on XCP-ng

@planedrop This is for wide VMs spanning across socket NUMA nodes. These are all dual-socket servers.

I was hoping there were some best practices for topology or the like. I don't have any desire or intention to pin the vCPUs to a specific NUMA node. Coming from vSphere, I was told, if at all possible, not to give a VM more vCPUs than there are pCPUs on a NUMA node, so that the scheduler can handle placement optimally.
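The vSphere rule of thumb is a simple comparison, but its answer depends on whether "pCPU" means a physical core or a hardware thread, which is exactly what the 16-core/32-thread question in this thread hinges on. A minimal sketch that reports both readings; the numbers come from that question, and it says nothing about how the Xen scheduler will actually place the vCPUs.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NumaNode:
    cores: int    # physical cores in the node
    threads: int  # logical CPUs in the node (cores x SMT threads)


def fits_in_node(vcpus: int, node: NumaNode) -> dict[str, bool]:
    """Apply the rule of thumb (vCPUs <= pCPUs per NUMA node) under both
    readings of "pCPU"."""
    return {
        "counting_cores": vcpus <= node.cores,
        "counting_threads": vcpus <= node.threads,
    }


# A 32-vCPU VM against a 16-core / 32-thread node.
print(fits_in_node(32, NumaNode(cores=16, threads=32)))
# {'counting_cores': False, 'counting_threads': True}
```

When the two readings disagree, as they do here, the VM sits in the grey zone the question is asking about.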

-

Wide VMs on XCP-ng

Good Morning Everyone,

We are currently in the midst of testing XCP-ng as a replacement for our vSphere infrastructure. I have a general idea of how wide VMs work in VMware, and what settings should be in place for wide VMs that are larger than a NUMA node on our servers.

Are there any recommendations for VMs on XCP-ng that span NUMA nodes? Any advice or information would be greatly appreciated.

Thanks!