@florent I have a little problem with backups to S3/Wasabi.

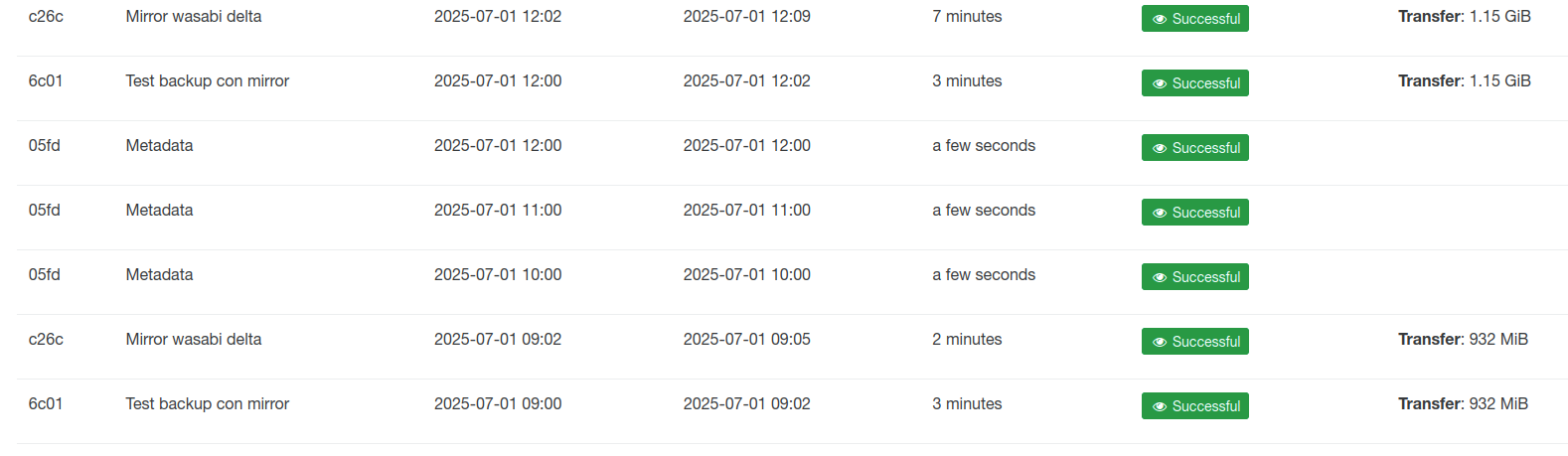

For the delta job, everything seems OK:

{

"data": {

"mode": "delta",

"reportWhen": "failure"

},

"id": "1751914964818",

"jobId": "e4adc26c-8723-4388-a5df-c2a1663ed0f7",

"jobName": "Mirror wasabi delta",

"message": "backup",

"scheduleId": "62a5edce-88b8-4db9-982e-ad2f525c4eb9",

"start": 1751914964818,

"status": "success",

"infos": [

{

"data": {

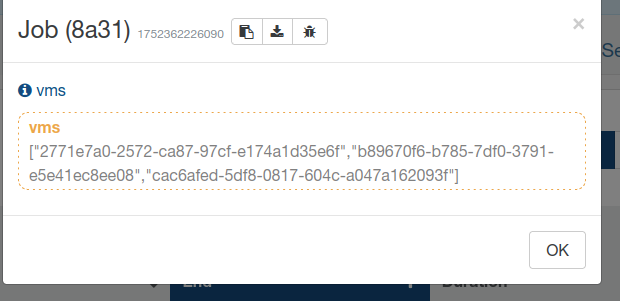

"vms": [

"2771e7a0-2572-ca87-97cf-e174a1d35e6f",

"b89670f6-b785-7df0-3791-e5e41ec8ee08",

"cac6afed-5df8-0817-604c-a047a162093f"

]

},

"message": "vms"

}

],

"tasks": [

{

"data": {

"type": "VM",

"id": "b89670f6-b785-7df0-3791-e5e41ec8ee08"

},

"id": "1751914968373",

"message": "backup VM",

"start": 1751914968373,

"status": "success",

"tasks": [

{

"id": "1751914968742",

"message": "clean-vm",

"start": 1751914968742,

"status": "success",

"end": 1751914979708,

"result": {

"merge": false

}

},

{

"data": {

"id": "ea222c7a-b242-4605-83f0-fdcc9865eb88",

"type": "remote"

},

"id": "1751914984503",

"message": "export",

"start": 1751914984503,

"status": "success",

"tasks": [

{

"id": "1751914984667",

"message": "transfer",

"start": 1751914984667,

"status": "success",

"end": 1751914992365,

"result": {

"size": 125829120

}

},

{

"id": "1751914995521",

"message": "clean-vm",

"start": 1751914995521,

"status": "success",

"tasks": [

{

"id": "1751915004208",

"message": "merge",

"start": 1751915004208,

"status": "success",

"end": 1751915018911

}

],

"end": 1751915020075,

"result": {

"merge": true

}

}

],

"end": 1751915020077

}

],

"end": 1751915020077

},

{

"data": {

"type": "VM",

"id": "2771e7a0-2572-ca87-97cf-e174a1d35e6f"

},

"id": "1751914968380",

"message": "backup VM",

"start": 1751914968380,

"status": "success",

"tasks": [

{

"id": "1751914968903",

"message": "clean-vm",

"start": 1751914968903,

"status": "success",

"end": 1751914979840,

"result": {

"merge": false

}

},

{

"data": {

"id": "ea222c7a-b242-4605-83f0-fdcc9865eb88",

"type": "remote"

},

"id": "1751914986808",

"message": "export",

"start": 1751914986808,

"status": "success",

"tasks": [

{

"id": "1751914987416",

"message": "transfer",

"start": 1751914987416,

"status": "success",

"end": 1751914993152,

"result": {

"size": 119537664

}

},

{

"id": "1751914996024",

"message": "clean-vm",

"start": 1751914996024,

"status": "success",

"tasks": [

{

"id": "1751915005023",

"message": "merge",

"start": 1751915005023,

"status": "success",

"end": 1751915035567

}

],

"end": 1751915039414,

"result": {

"merge": true

}

}

],

"end": 1751915039414

}

],

"end": 1751915039415

},

{

"data": {

"type": "VM",

"id": "cac6afed-5df8-0817-604c-a047a162093f"

},

"id": "1751915020089",

"message": "backup VM",

"start": 1751915020089,

"status": "success",

"tasks": [

{

"id": "1751915020443",

"message": "clean-vm",

"start": 1751915020443,

"status": "success",

"end": 1751915030194,

"result": {

"merge": false

}

},

{

"data": {

"id": "ea222c7a-b242-4605-83f0-fdcc9865eb88",

"type": "remote"

},

"id": "1751915034962",

"message": "export",

"start": 1751915034962,

"status": "success",

"tasks": [

{

"id": "1751915035142",

"message": "transfer",

"start": 1751915035142,

"status": "success",

"end": 1751915052723,

"result": {

"size": 719323136

}

},

{

"id": "1751915056146",

"message": "clean-vm",

"start": 1751915056146,

"status": "success",

"tasks": [

{

"id": "1751915064681",

"message": "merge",

"start": 1751915064681,

"status": "success",

"end": 1751915116508

}

],

"end": 1751915117838,

"result": {

"merge": true

}

}

],

"end": 1751915117839

}

],

"end": 1751915117839

}

],

"end": 1751915117839

}
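To sanity-check a log like the delta one, a small script can walk the nested `tasks` tree and add up the `transfer` sizes and the job's wall-clock time. This is a minimal sketch, assuming the log has been saved as plain JSON; the function names and the script invocation are hypothetical, and only the field names (`tasks`, `message`, `result.size`, `start`, `end`, `status`) come from the logs in this post.

```python
import json
import sys
from typing import Any, Iterator


def walk(task: dict[str, Any]) -> Iterator[dict[str, Any]]:
    """Yield a task and all of its nested sub-tasks, depth first."""
    yield task
    for child in task.get("tasks", []):
        yield from walk(child)


def summarize(log: dict[str, Any]) -> dict[str, Any]:
    """Summarize a backup job log: VMs backed up, bytes transferred, duration.

    Field names are the ones seen in the pasted XO logs; other log
    versions may differ (assumption).
    """
    tasks = list(walk(log))
    vm_tasks = [t for t in tasks if t.get("message") == "backup VM"]
    # Only "transfer" tasks carry a result.size with the uploaded byte count.
    transferred = sum(
        t.get("result", {}).get("size", 0)
        for t in tasks
        if t.get("message") == "transfer"
    )
    return {
        "status": log.get("status"),
        "vms_backed_up": len(vm_tasks),
        "bytes_transferred": transferred,
        # start/end are epoch milliseconds.
        "duration_s": (log["end"] - log["start"]) / 1000,
    }


if __name__ == "__main__" and len(sys.argv) > 1:
    # Hypothetical usage: python summarize_log.py delta-log.json
    with open(sys.argv[1]) as f:
        print(summarize(json.load(f)))
```

Applied to the delta log, it should report 3 VMs and 964,689,920 bytes (920 MiB) transferred over about 153 s, which is consistent with a real incremental run.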

For the full job I'm not sure: the log lists the three VMs but contains no `tasks` at all.

```json
{
  "data": {
    "mode": "full",
    "reportWhen": "always"
  },
  "id": "1751757492933",
  "jobId": "35c78a31-67c5-47ba-9988-9c4cb404ed8e",
  "jobName": "Mirror wasabi full",
  "message": "backup",
  "scheduleId": "476b863d-a651-42e5-9bb3-db830dbdac7c",
  "start": 1751757492933,
  "status": "success",
  "infos": [
    {
      "data": {
        "vms": [
          "2771e7a0-2572-ca87-97cf-e174a1d35e6f",
          "b89670f6-b785-7df0-3791-e5e41ec8ee08",
          "cac6afed-5df8-0817-604c-a047a162093f"
        ]
      },
      "message": "vms"
    }
  ],
  "end": 1751757496499
}
```
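The full log differs structurally from the delta one: it announces three VMs under `infos` but has no `tasks` array, so no per-VM backup appears to have been recorded even though `status` is `success`. A minimal sketch for flagging this automatically, assuming the log is saved as JSON; the function names are hypothetical, and the field names are taken from the two logs in this post.

```python
import json
import sys
from typing import Any


def listed_vms(log: dict[str, Any]) -> list[str]:
    """Return the VM UUIDs announced in the `infos` entry whose message is "vms"."""
    for info in log.get("infos", []):
        if info.get("message") == "vms":
            return list(info.get("data", {}).get("vms", []))
    return []


def backed_up_vms(log: dict[str, Any]) -> list[str]:
    """Return the VM UUIDs that have a top-level "backup VM" task."""
    return [
        task["data"]["id"]
        for task in log.get("tasks", [])
        if task.get("message") == "backup VM"
    ]


def missing_vms(log: dict[str, Any]) -> list[str]:
    """Return VMs the job announced but for which no "backup VM" task exists."""
    done = set(backed_up_vms(log))
    return [vm for vm in listed_vms(log) if vm not in done]


if __name__ == "__main__" and len(sys.argv) > 1:
    # Hypothetical usage: python check_log.py full-log.json
    with open(sys.argv[1]) as f:
        log = json.load(f)
    missing = missing_vms(log)
    if missing:
        print(f"status={log.get('status')} but no backup task for: {', '.join(missing)}")
    else:
        print("every announced VM has a backup task")
```

Run against the full log, it should list all three VMs as missing; against the delta log, the list is empty.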

XOA sent me an email with this report:

- Job ID: 35c78a31-67c5-47ba-9988-9c4cb404ed8e
- Run ID: 1751757492933
- Mode: full
- Start time: Sunday, July 6th 2025, 1:18:12 am
- End time: Sunday, July 6th 2025, 1:18:16 am
- Duration: a few seconds

Four seconds for 203 GB?
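Taking the full run's own `start` and `end` values (1751757492933 and 1751757496499 ms, a 3.566 s gap that matches the 1:18:12 to 1:18:16 in the email) and the 203 GB at face value, the implied speed is roughly 57 GB/s, or about 455 Gbit/s, far beyond any realistic upload rate to an S3 remote. A quick check, assuming decimal units (1 GB = 10^9 bytes); the helper name is hypothetical.

```python
def implied_throughput(size_gb: float, start_ms: int, end_ms: int) -> tuple[float, float]:
    """Return the (GB/s, Gbit/s) needed to move size_gb between two epoch-ms timestamps.

    Assumes decimal units: 1 GB = 10**9 bytes, 1 Gbit = 10**9 bits.
    """
    seconds = (end_ms - start_ms) / 1000
    gb_per_s = size_gb / seconds
    return gb_per_s, gb_per_s * 8


# Timestamps from the full job's log; 203 GB is the size quoted in the post.
gb_s, gbit_s = implied_throughput(203, 1751757492933, 1751757496499)
print(f"{gb_s:.1f} GB/s, i.e. about {gbit_s:.0f} Gbit/s")
```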