Backups not working

-

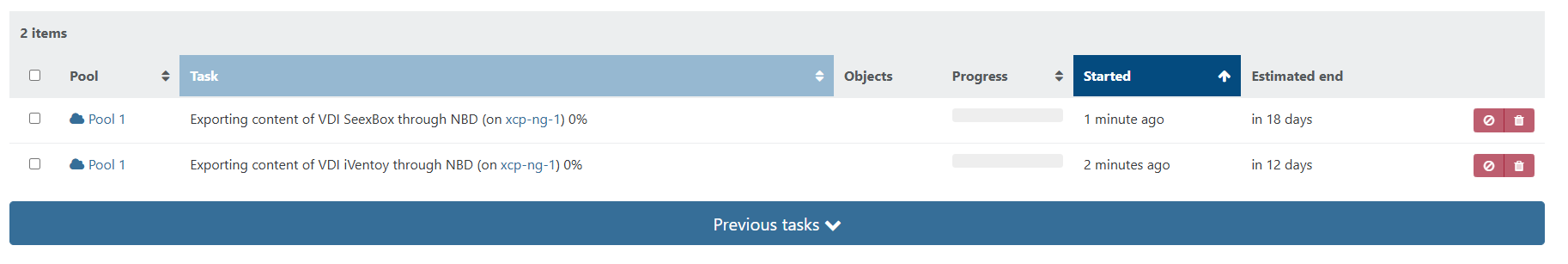

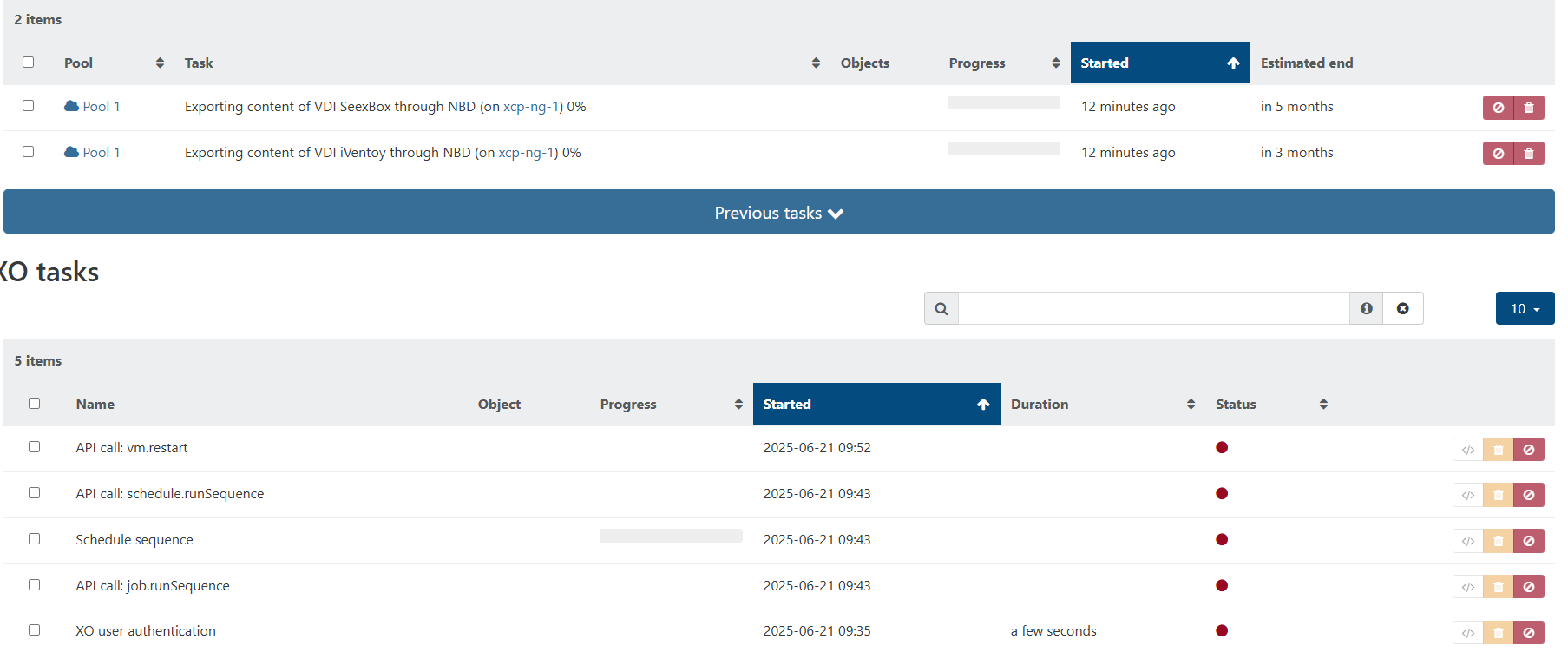

Last night, 2 of my VMs failed to complete a delta backup. As the tasks could not be cancelled in any way, I rebooted XO (built from sources). The tasks still showed "running", so I restarted the toolstack on host 1 and the tasks cleared. I attempted to restart the failed backups and again the backup just hangs. It creates the snapshot but never transfers data. The remote is in the same location as the NFS storage the VMs are running from, so I know the storage is good.

After a few more reboots of XO and restarts of the toolstack, I rebooted both hosts, and each time the backups get stuck. If I try to start a new backup (same job), all VMs hang. I tried to run a full delta backup and got the same result. I tried to update XO, but I am already on the current master build as of today (6b263). I tried a forced update and the backup still never completes.

I built a new VM for XO and installed from sources, and backups still fail.

Here is one of the logs from the backups...

{ "data": { "mode": "delta", "reportWhen": "always", "hideSuccessfulItems": true }, "id": "1750503695411", "jobId": "95ac8089-69f3-404e-b902-21d0e878eec2", "jobName": "Backup Job 1", "message": "backup", "scheduleId": "76989b41-8bcf-4438-833a-84ae80125367", "start": 1750503695411, "status": "failure", "infos": [ { "data": { "vms": [ "b25a5709-f1f8-e942-f0cc-f443eb9b9cf3", "3446772a-4110-7a2c-db35-286c73af4ab4", "bce2b7f4-d602-5cdf-b275-da9554be61d3", "e0a3093a-52fd-f8dc-1c39-075eeb9d0314", "afbef202-af84-7e64-100a-e8a4c40d5130" ] }, "message": "vms" } ], "tasks": [ { "data": { "type": "VM", "id": "b25a5709-f1f8-e942-f0cc-f443eb9b9cf3", "name_label": "SeedBox" }, "id": "1750503696510", "message": "backup VM", "start": 1750503696510, "status": "interrupted", "tasks": [ { "id": "1750503696519", "message": "clean-vm", "start": 1750503696519, "status": "success", "end": 1750503696822, "result": { "merge": false } }, { "id": "1750503697911", "message": "snapshot", "start": 1750503697911, "status": "success", "end": 1750503699564, "result": "6e2edbe9-d4bd-fd23-28b9-db4b03219e96" }, { "data": { "id": "1575a1d8-3f87-4160-94fc-b9695c3684ac", "isFull": false, "type": "remote" }, "id": "1750503699564:0", "message": "export", "start": 1750503699564, "status": "success", "tasks": [ { "id": "1750503701979", "message": "clean-vm", "start": 1750503701979, "status": "success", "end": 1750503702141, "result": { "merge": false } } ], "end": 1750503702142 } ], "warnings": [ { "data": { "attempt": 1, "error": "invalid HTTP header in response body" }, "message": "Retry the VM backup due to an error" } ] }, { "data": { "type": "VM", "id": "3446772a-4110-7a2c-db35-286c73af4ab4", "name_label": "XO" }, "id": "1750503696512", "message": "backup VM", "start": 1750503696512, "status": "interrupted", "tasks": [ { "id": "1750503696518", "message": "clean-vm", "start": 1750503696518, "status": "success", "end": 1750503696693, "result": { "merge": false } }, { "id": "1750503712472", "message": 
"snapshot", "start": 1750503712472, "status": "success", "end": 1750503713915, "result": "a1bdef52-142c-5996-6a49-169ef390aa2e" }, { "data": { "id": "1575a1d8-3f87-4160-94fc-b9695c3684ac", "isFull": false, "type": "remote" }, "id": "1750503713915:0", "message": "export", "start": 1750503713915, "status": "success", "tasks": [ { "id": "1750503716280", "message": "clean-vm", "start": 1750503716280, "status": "success", "end": 1750503716383, "result": { "merge": false } } ], "end": 1750503716385 } ], "warnings": [ { "data": { "attempt": 1, "error": "invalid HTTP header in response body" }, "message": "Retry the VM backup due to an error" } ] }, { "data": { "type": "VM", "id": "bce2b7f4-d602-5cdf-b275-da9554be61d3", "name_label": "iVentoy" }, "id": "1750503702145", "message": "backup VM", "start": 1750503702145, "status": "interrupted", "tasks": [ { "id": "1750503702148", "message": "clean-vm", "start": 1750503702148, "status": "success", "end": 1750503702233, "result": { "merge": false } }, { "id": "1750503702532", "message": "snapshot", "start": 1750503702532, "status": "success", "end": 1750503704850, "result": "05c5365e-3bc5-4640-9b29-0684ffe6d601" }, { "data": { "id": "1575a1d8-3f87-4160-94fc-b9695c3684ac", "isFull": false, "type": "remote" }, "id": "1750503704850:0", "message": "export", "start": 1750503704850, "status": "interrupted", "tasks": [ { "id": "1750503706813", "message": "transfer", "start": 1750503706813, "status": "interrupted" } ] } ], "infos": [ { "message": "Transfer data using NBD" } ] }, { "data": { "type": "VM", "id": "e0a3093a-52fd-f8dc-1c39-075eeb9d0314", "name_label": "Docker of Things" }, "id": "1750503716389", "message": "backup VM", "start": 1750503716389, "status": "interrupted", "tasks": [ { "id": "1750503716395", "message": "clean-vm", "start": 1750503716395, "status": "success", "warnings": [ { "data": { "path": "/xo-vm-backups/e0a3093a-52fd-f8dc-1c39-075eeb9d0314/20250604T160135Z.json", "actual": 6064872448, "expected": 6064872960 }, 
"message": "cleanVm: incorrect backup size in metadata" } ], "end": 1750503716886, "result": { "merge": false } }, { "id": "1750503717182", "message": "snapshot", "start": 1750503717182, "status": "success", "end": 1750503719640, "result": "9effb56d-68e6-8015-6bd5-64fa65acbada" }, { "data": { "id": "1575a1d8-3f87-4160-94fc-b9695c3684ac", "isFull": false, "type": "remote" }, "id": "1750503719640:0", "message": "export", "start": 1750503719640, "status": "interrupted", "tasks": [ { "id": "1750503721601", "message": "transfer", "start": 1750503721601, "status": "interrupted" } ] } ], "infos": [ { "message": "Transfer data using NBD" } ] } ], "end": 1750504870213, "result": { "message": "worker exited with code null and signal SIGTERM", "name": "Error", "stack": "Error: worker exited with code null and signal SIGTERM\n at ChildProcess.<anonymous> (file:///opt/xo/xo-builds/xen-orchestra-202506202218/@xen-orchestra/backups/runBackupWorker.mjs:24:48)\n at ChildProcess.emit (node:events:518:28)\n at ChildProcess.patchedEmit [as emit] (/opt/xo/xo-builds/xen-orchestra-202506202218/@xen-orchestra/log/configure.js:52:17)\n at Process.ChildProcess._handle.onexit (node:internal/child_process:293:12)\n at Process.callbackTrampoline (node:internal/async_hooks:130:17)" } }

-

Hi,

Can you try with XOA stable, then latest, and see if it works? That could help pinpoint the culprit.

-

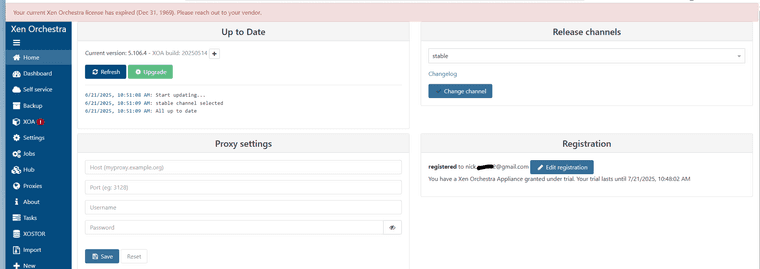

Update - I got XOA working, trying backups now.

I deployed XOA. My initial trial had expired, so I created a new account. I registered XOA and got it updated, but when I clicked on Backups it was still locked down. Now I am stuck here...

-

Backups are running and completing with XOA.

-

Just saw that post. I just restored a backup of XO and am going to try.

-

Reverted back to commit c8f0a and backups are working.

-

@acebmxer Yeap, latest build introduced a bug in the backups.....

-

Same issue. I am going to disable backups for now until either the bug is fixed or I figure out how to go back to an older version.

Update - went to Xen Orchestra commit fb9bd and backups are working again.

-

Has anyone tried turning off delta and turning off compression?

Not ideal, but it's how I'm dealing with some different issues (Windows with big empty disks).

-

@Greg_E

I didn't try any changes to the backup settings to test whether any form of backup would work on the latest commit. I just went back to an earlier version to perform backups using the original settings.

-

It might be interesting to pinpoint the exact problematic commit so we can get a fix on Monday.

-

@olivierlambert Happened between commit 19412 and commit 6b263.....
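With a known-good and a known-bad commit, the exact culprit can be found by bisection, which is the idea that git bisect automates. Here is a minimal Python sketch of that search. It assumes the commits are listed oldest to newest, the first one is good, the last one is bad, and every commit after the first bad one is also bad. The function name and the "placeholder-*" entries are hypothetical; only 19412 and 6b263 come from this thread.

```python
from typing import Callable, Sequence


def first_bad_commit(commits: Sequence[str], is_bad: Callable[[str], bool]) -> str:
    """Return the earliest bad commit using binary search.

    Assumes commits[0] is known good and commits[-1] is known bad.
    """
    lo, hi = 0, len(commits) - 1  # lo: known good, hi: known bad
    while hi - lo > 1:
        mid = (lo + hi) // 2
        if is_bad(commits[mid]):
            hi = mid  # the culprit is mid or earlier
        else:
            lo = mid  # the culprit is after mid
    return commits[hi]


# Hypothetical history: only the two endpoints are real commit IDs from the thread.
history = ["19412", "placeholder-1", "placeholder-2", "placeholder-3", "6b263"]


def backup_fails(commit: str) -> bool:
    """Stand-in for "build this commit and run the backup job".

    Here we simply pretend that placeholder-3 introduced the bug.
    """
    return history.index(commit) >= history.index("placeholder-3")


print(first_bad_commit(history, backup_fails))
```

In practice the check is a manual step (build that commit, run the job, note whether it hangs), so the point of bisecting is that it needs only about log2(N) builds instead of N.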

-

@olivierlambert Pretty sure the problem originates from this PR.

-

It may be related to the other topic, but I see a few things:

- invalid HTTP header in response body: there is an issue while trying to download the disk. The XO logs (journalctl) should contain lines with the xo:xapi:vdi key regarding this. It may be worth testing without purge snapshot to see if this error reoccurs.
- SIGTERM is probably more of an out-of-memory issue on the backup worker. How much memory does your XO have?
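For anyone wanting to pull the relevant lines out of the journal, here is a minimal Python sketch. It assumes XO runs as a systemd unit named xo-server (that name is a guess for a from-sources install, adjust it to yours) and that the key mentioned above shows up in log lines as text starting with xo:xapi:vdi. The helper names and the three sample lines are made up for illustration and are not real XO output.

```python
import subprocess
from typing import Iterable


def matching_lines(lines: Iterable[str], needles: Iterable[str]) -> list[str]:
    """Return the lines that contain any of the needles (case-insensitive)."""
    lowered = [needle.lower() for needle in needles]
    return [
        line.rstrip("\n")
        for line in lines
        if any(needle in line.lower() for needle in lowered)
    ]


def read_journal(unit: str = "xo-server", since: str = "today") -> list[str]:
    """Read journal lines for one systemd unit.

    ASSUMPTION: the unit name "xo-server" is a guess; change it to match
    the service name used on your XO VM.
    """
    completed = subprocess.run(
        ["journalctl", "-u", unit, "--since", since, "--no-pager"],
        capture_output=True,
        text=True,
        check=True,
    )
    return completed.stdout.splitlines()


NEEDLES = ["xo:xapi:vdi", "invalid HTTP header"]

# Synthetic lines, only to show the filter; these are not real log entries.
SAMPLE_LINES = [
    "line A mentions xo:xapi:vdi",
    "line B is unrelated",
    "line C: invalid HTTP header in response body",
]

for line in matching_lines(SAMPLE_LINES, NEEDLES):
    print(line)
```

On the XO VM itself, matching_lines(read_journal(), NEEDLES) would run the same filter over today's journal entries for that unit.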

-

@florent said in Backups not working:

It may be related to the other topic, but I see a few things:

- invalid HTTP header in response body: there is an issue while trying to download the disk. The XO logs (journalctl) should contain lines with the xo:xapi:vdi key regarding this. It may be worth testing without purge snapshot to see if this error reoccurs.
- SIGTERM is probably more of an out-of-memory issue on the backup worker. How much memory does your XO have?

I am pretty sure this post and https://xcp-ng.org/forum/topic/10970/xo-community-edition-backups-dont-work-as-of-build-6b263/12 describe the same problem.

To answer your question, my XO has 6 GB of RAM allocated.

Edit - Also note that my backups were failing to local NFS backup storage.

-

Backup done to NFS share

Xen Orchestra, commit fb9bd - backup working

Xen Orchestra, commit 6b263 - backup broken

Xen Orchestra, commit 1a7b5 - backup working

Looks like the issue might have been resolved with the latest build.

-

@marcoi said in Backups not working:

Backup done to NFS share

Xen Orchestra, commit fb9bd - backup working

Xen Orchestra, commit 6b263 - backup broken

Xen Orchestra, commit 1a7b5 - backup working

Looks like the issue might have been resolved with the latest build.

Thank you for the update. I will update as well and report back.

-

@marcoi Confirmed: commit 1a7b5 backing up again without issues.

-

Confirmed: backups completed successfully on commit 1a7b5.