-

Tested in my lab, so far so good.

More testers means faster release

-

@gduperrey This update breaks the XOSTOR setup in my test lab. Would you like any information from me for troubleshooting? If not, what's the easiest way to revert the test updates?

-

Hmm, no reason for that. Did you have other updates coming with it (i.e. replacing some other unrelated packages)?

-

Adding @ronan-a in the loop.

When you said "break", can you be more specific @JeffBerntsen ?

-

@olivierlambert said in Updates announcements and testing:

Adding @ronan-a in the loop.

When you said "break", can you be more specific @JeffBerntsen ?

@olivierlambert said in Updates announcements and testing:

Hmm, no reason for that. Did you have other updates coming with it (i.e. replacing some other unrelated packages)?

I have 3 servers in my lab's test pool, vh97, vh98, and vh99. Server vh97 was the pool master. I placed it into maintenance mode and shifted the pool master to vh98 in the process, installed the updates, and rebooted.

When vh97 came back up, it could no longer attach to the XOSTOR SR. Attempting to replug the PBD gives me errors indicating that vh97 can no longer see the SR. It appears that there are some related errors in the SMlog file (I've captured a copy for future examination).

The updates installed were only the ones from this most recent set. All of the other test updates from this thread from the last two weeks were already installed and running without problems on all 3 servers in the pool.

-

If you test XOSTOR, don't test other updates, because this might break the rest.

Also, never switch the master after updates, the master HAS to reboot first, whatever happens.
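As a rough sketch of that ordering rule (not an official procedure): the helper below only prints the hosts in the order they would be rebooted, master first. The function name `reboot_order` is made up for illustration, and the host names are the ones from the lab described above.

```shell
#!/bin/sh
# Print hosts in reboot order after an update: the pool master first,
# then every other host in the order given.
# $1: the pool master; remaining arguments: all hosts in the pool.
# Assumption: on a real pool the master UUID could be read with
#   xe pool-list params=master --minimal
# and the host UUIDs with
#   xe host-list --minimal
reboot_order() {
    master="$1"
    shift
    printf '%s\n' "$master"
    for host in "$@"; do
        # Skip the master, it has already been printed first.
        [ "$host" = "$master" ] || printf '%s\n' "$host"
    done
}
```

For example, `reboot_order vh97 vh98 vh97 vh99` lists vh97 before vh98 and vh99, whatever position it had in the host list.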

-

@olivierlambert said in Updates announcements and testing:

If you test XOSTOR, don't test other updates, because this might break the rest.

Also, never switch the master after updates, the master HAS to reboot first, whatever happens.

I know that XOSTOR might break during an update test. I thought whether it did or didn't might be something you would be interested in as part of testing and that's part of why I test with it in place and running.

-

@JeffBerntsen What's your sm version? I suppose you updated your hosts and no longer have the right one.

Please send me the output of:

rpm -qa | grep sm-

-

@ronan-a said in Updates announcements and testing:

@JeffBerntsen What's your sm version? I suppose you updated your hosts and no longer have the right one.

Please send me the output of:

rpm -qa | grep sm-

Definitely possible although it appears they were updated as part of installing the previous updates in this thread from the past couple of weeks.

What I have is:

sm-cli-0.23.0-6.xcpng8.2.x86_64
sm-rawhba-2.30.7-1.3.xcpng8.2.x86_64
sm-2.30.7-1.3.xcpng8.2.x86_64

-

@JeffBerntsen I think I will release a new linstor RPM to override the sm testing package. The current is: sm-2.30.7-1.2.0.linstor.1.xcpng8.2.x86_64.rpm. For the moment, you can downgrade if you want.

-

@ronan-a said in Updates announcements and testing:

@JeffBerntsen I think I will release a new linstor RPM to override the sm testing package. The current is: sm-2.30.7-1.2.0.linstor.1.xcpng8.2.x86_64.rpm. For the moment, you can downgrade if you want.

That seems to have taken care of it. Left all upgraded versions from this thread in place except for sm and sm-rawhba which have been downgraded to the linstor versions from the regular repo. All seems to be working well now.
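For anyone wanting to check which build they ended up with, here is a minimal sketch. It assumes the only distinguishing mark is the `.linstor.` string visible in the package name quoted above; the function names are made up for illustration.

```shell
#!/bin/sh
# Succeed (exit status 0) if a package string carries the ".linstor."
# marker seen in sm-2.30.7-1.2.0.linstor.1.xcpng8.2.x86_64.
# Assumption: that marker is enough to tell the builds apart.
is_linstor_build() {
    case "$1" in
        *.linstor.*) return 0 ;;
        *)           return 1 ;;
    esac
}

# Read package strings on stdin (one per line) and label each one.
classify_sm_packages() {
    while IFS= read -r pkg; do
        [ -n "$pkg" ] || continue
        if is_linstor_build "$pkg"; then
            printf '%s linstor\n' "$pkg"
        else
            printf '%s other\n' "$pkg"
        fi
    done
}
```

On a host this could be fed with `rpm -qa | grep sm- | classify_sm_packages`. Packages such as sm-cli would show up as "other", which is expected, since only sm and sm-rawhba were downgraded here.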

-

@gduperrey Updating my playlab and all is good with some easy VM operations and VM/storage migration test. Now let's see how the next few days of normal tasks turn out.

-

The update is published. Thanks for your tests!

Blog post: https://xcp-ng.org/blog/2022/07/15/retbleed-security-patch/

-

@AlexD2006 said in Updates announcements and testing:

@stormi

is there some information about that regression?

maybe i can help to test.

have a lab pool here with 3 hosts on a iscsi SR.

It was a missing Python module import in the previous update candidate (so it never went into production), entirely our fault and easily fixed (but not so easily tested: you'd need to call the on-slave plugin with the is_open function on a VDI of the SR).

-

New maintenance update candidate (openvswitch, qemu, xen, microcode, xapi, Guest tools...)

Several package updates that we had queued for a future update are ready for you to test them. Some of them were already submitted to you earlier in this thread, and others are new.

The complete list is detailed again in this message.

-

xs-openssl:

- was rebuilt without compression support. Although compression was not offered by default and the clients that connect to port 443 of XCP-ng hosts don't enable compression by default, it's better security-wise not to support it at all (due to CRIME), and this will make security scanners happier.

- received a patch from RHEL 8's openssl which fixes a potential denial of service: "CVE-2022-0778 openssl: Infinite loop in BN_mod_sqrt() reachable when parsing certificates"

-

xcp-ng-xapi-plugins received a few fixes:

- Avoid accidentally installing updates from repositories that users may have enabled on XCP-ng (even if they should never do this), when using the updater plugin (Xen Orchestra uses it to install updates).

- In the updater plugin again, error handling was broken: whenever an error occurred (such as a network issue preventing the updates from being installed), another error would be raised from the error handler, and thus mask the actual reason for the initial error. That's what happens when you write "command" with 3 m's.

-

blktap:

- received a fix backported from one of Citrix Hypervisor's hotfixes, which addresses a possible segmentation fault if you create a lot of snapshots at the same time.

-

sm ("Storage Manager", responsible for the SMAPIv1 storage management layer) received a few fixes:

- We fixed an issue with local ISO SRs and mountpoints: creating a local ISO SR on a directory that is a mountpoint for another filesystem would unmount it. The patch was not accepted upstream because it touches legacy code that Citrix won't support, according to the developer who answered, but we considered it safe and useful enough to apply it to XCP-ng anyway.

- The (experimental) MooseFS driver will now default to creating a subdirectory in the mounted directory, to avoid collision between several SRs using the same share.

- The update also includes the following fix from one of Citrix Hypervisor's hotfixes: CA-352880: when deleting an HBA SR remove the kernel devices

- Two other fixes which are hard to explain in user terms but typically don't affect the majority of users.

-

xen, microcode_ctl:

- Update the Intel microcode for IPU 2022.2

- AMD IOMMU fix

- Fix other issues, such as slow boot when VGA is enabled

-

Openvswitch (fixed issues):

- Open vSwitch ignores the bond_updelay setting for LACP bonds.

- Some packets might be dropped by a link after LACP renegotiation completes, but before bond updelay completes.

- The openvswitch logrotate script outputs spurious error messages into dead.letter.

-

qemu (fixed issue):

- If you add SR-IOV to a VM with GPU-Passthrough enabled, the VM doesn't boot.

-

XAPI:

- Add the other-config:ethtool-advertise option to the network commands. This option sets the speed and duplex of a NIC as advertised by the auto-negotiation process.

- Resolve other issues

-

XCP-ng guest tools:

- Integrate the latest changes from upstream

- Update network interface handling to support recent releases that use enX interface names

- Support RHEL 9, AlmaLinux 9, Rocky Linux 9, CentOS Stream 9...

- In the RPMs, switch the service to systemd by default and provide legacy RPMs for older systems that only have chkconfig. Not done yet for DEB packages.

Test on XCP-ng 8.2

From an up to date host:

yum clean metadata --enablerepo=xcp-ng-testing
yum update blktap forkexecd gpumon sm sm-cli sm-rawhba xcp-ng-xapi-plugins xs-openssl-libs xen-dom0-libs xen-dom0-tools xen-hypervisor xen-libs xen-tools microcode_ctl openvswitch qemu rrd2csv rrdd-plugins squeezed vhd-tool xenopsd xapi-core xapi-tests xapi-xe varstored-guard xcp-networkd xcp-ng-pv-tools xapi-nbd xapi-storage-script xcp-rrdd xenopsd-cli xenopsd-xc --enablerepo=xcp-ng-testing
reboot

Versions:

- blktap-3.37.4-1.0.1.xcpng8.2

- forkexecd-1.18.0-3.2.xcpng8.2

- gpumon-0.18.0-4.2.xcpng8.2

- sm-2.30.7-1.3.xcpng8.2

- sm-cli-0.23.0-7.xcpng8.2

- sm-rawhba-2.30.7-1.3.xcpng8.2

- xcp-ng-xapi-plugins-1.7.2-1.xcpng8.2

- xs-openssl-libs-1.1.1k-5.1.xcpng8.2

- xen-dom0-libs-4.13.4-9.25.1.xcpng8.2

- xen-dom0-tools-4.13.4-9.25.1.xcpng8.2

- xen-hypervisor-4.13.4-9.25.1.xcpng8.2

- xen-libs-4.13.4-9.25.1.xcpng8.2

- xen-tools-4.13.4-9.25.1.xcpng8.2

- microcode_ctl-2.1-26.xs22.xcpng8.2

- openvswitch-2.5.3-2.3.12.1.xcpng8.2

- qemu-4.2.1-4.6.2.1.xcpng8.2

- rrd2csv-1.2.5-7.1.xcpng8.2

- rrdd-plugins-1.10.8-5.1.xcpng8.2

- squeezed-0.27.0-5.xcpng8.2

- vhd-tool-0.43.0-4.1.xcpng8.2

- xenopsd-0.150.12-1.1.xcpng8.2

- xapi-core-1.249.25-2.1.xcpng8.2

- xapi-tests-1.249.25-2.1.xcpng8.2

- xapi-xe-1.249.25-2.1.xcpng8.2

- varstored-guard-0.6.2-1.xcpng8.2

- xcp-networkd-0.56.2-1.xcpng8.2

- xcp-ng-pv-tools-8.2.0-11.xcpng8.2

- xapi-nbd-1.11.0-3.2.xcpng8.2

- xapi-storage-script-0.34.1-2.1.xcpng8.2

- xcp-rrdd-1.33.0-5.1.xcpng8.2

- xenopsd-cli-0.150.12-1.1.xcpng8.2

- xenopsd-xc-0.150.12-1.1.xcpng8.2
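One possible way to confirm that a host really ended up on the versions listed above is to compare the list against what rpm reports. This is only a sketch: the function name and the two file arguments are made up for illustration, and it assumes the list has been saved one entry per line without the leading "- ".

```shell
#!/bin/sh
# Print every expected "name-version-release" line that is absent from
# the installed list.
# $1: file with the expected versions, one per line
# $2: file with the installed packages in the same format, for example
#     produced on the host with:
#       rpm -qa --qf '%{NAME}-%{VERSION}-%{RELEASE}\n' > installed.txt
# Exit status follows grep: 0 if at least one version is missing,
# 1 if everything expected is installed.
missing_versions() {
    grep -Fxv -f "$2" "$1"
}
```

Calling `missing_versions expected.txt installed.txt` and getting no output would suggest the update was fully applied; any printed line is a package still on another version.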

What to test

Normal use and anything else you want to test. The closer to your actual use of XCP-ng, the better.

We also ask you to pay special attention to the updated guest tools for Linux. We tested them on a large variety of Linux systems, but we can't cover every special case in our tests, so your help is more than welcome.

The installation instructions for the tools did not change: see https://xcp-ng.org/docs/guests.html#install-from-the-guest-tools-iso.

/!\ The only tools that were updated are those provided by XCP-ng through the guest tools ISOs. Tools provided by packages in the repositories of various Linux distributions are not maintained directly by us.

Test window before official release of the updates

No precise ETA, but the sooner the feedback the better.

-

-

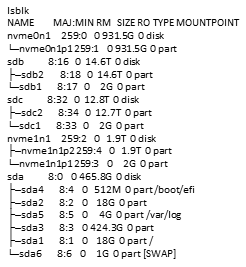

I did a fresh install using "xcp-ng-8.2.1.iso". However, after doing that it seems that I have no local storage. I also get a couple of error messages.

More info:

- I installed on a 500 GB SATA SSD previously used for other purposes

- I have other SATA and NVMe drives in the system, ZFS-formatted; those drives are in use when I boot the system as a TrueNAS store (SO NOT USED BY XCP-NG)

- during boot I get the following alarms:

- EFI_MEMMAP is not enabled

- fcore_driver CRITICAL TRace back most recent call File "/opt/xensource/libexec/fcoe_driver", line 34 CalledProcessError: Command '['fcoeadm', '--i] returned non-zero exit status 2\n"]

- It seems that I have no local storage ..

lsblk
NAME        MAJ:MIN RM   SIZE RO TYPE MOUNTPOINT
nvme0n1     259:0    0 931.5G  0 disk
└─nvme0n1p1 259:1    0 931.5G  0 part
sdb           8:16   0  14.6T  0 disk
├─sdb2        8:18   0  14.6T  0 part
└─sdb1        8:17   0     2G  0 part
sdc           8:32   0  12.8T  0 disk
├─sdc2        8:34   0  12.7T  0 part
└─sdc1        8:33   0     2G  0 part
nvme1n1     259:2    0   1.9T  0 disk
├─nvme1n1p2 259:4    0   1.9T  0 part
└─nvme1n1p1 259:3    0     2G  0 part
sda           8:0    0 465.8G  0 disk
├─sda4        8:4    0   512M  0 part /boot/efi
├─sda2        8:2    0    18G  0 part
├─sda5        8:5    0     4G  0 part /var/log
├─sda3        8:3    0 424.3G  0 part
├─sda1        8:1    0    18G  0 part /
└─sda6        8:6    0     1G  0 part [SWAP]

- I tried to add local storage ==> LVM creation failed

[12:16 Tiger ~]# xe sr-create name-label="LocalStore" type=ext device-config-device=/dev/sda3 share=false content-type=user
Error code: SR_BACKEND_FAILURE_77
Error parameters: , Logical Volume group creation failed,

- Trying to fix the problems, I decided to update the system (as described in this thread), which did not solve the problems!

So, three questions:

- Is it possible to make an installer available for the current test version, so that I can do a real installation using that installer? That would make testing easier and more realistic.

- How can the described problems be solved?

- I would appreciate formal ZFS support; as far as possible I use ZFS.

-

@gduperrey Updated my two-host playlab without a problem. Installing and/or updating the guest tools (now reporting 7.30.0-11) on some mainstream Linux distros worked, as did the usual VM operations in the pool. Looks good.

-

@gduperrey I jumped in all the way by mistake... I updated a wrong host, so I just did them all. Older AMD, Intel E3/E5, NUC11, etc. So far, so good. Add/migrate/backup/etc VMs are working as usual. Good for guest tools too, but mine are mostly Debian 7-11. Stuff is as usual so far.

-

The updates are unrelated to your issue.

You are welcome to start a new thread about it.

That way, rather than commenting in this thread, you will receive greater assistance from the community.

Did you look at the wiki's troubleshooting page to analyze your issue?

https://xcp-ng.org/docs/troubleshooting.html#the-3-step-guide

We don't see anything in your lsblk. Perhaps you could include the 'xe sr-list' result in your new topic.

Have you tried to create your SR using XOA or just the server's command line?
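If you retry from the command line, note that the documented form of the command writes the device as `device-config:device=` (with a colon) and the sharing flag as `shared=`. As a sketch only, the helper below prints such a command without running it; the function name is made up, and the host UUID, device and label are placeholders you would replace with your own values.

```shell
#!/bin/sh
# Print (do not run) an `xe sr-create` command for a local EXT SR.
# $1: host UUID, $2: block device, $3: SR name label
# Assumption: the values passed in are placeholders for illustration;
# nothing is executed against the host.
print_sr_create() {
    printf 'xe sr-create host-uuid=%s type=ext content-type=user shared=false name-label="%s" device-config:device=%s\n' "$1" "$3" "$2"
}
```

Printing the command first makes it easy to review the device path before anything touches the disk.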

We don't offer an updated installer right now, although we're working on a method to regularly and automatically build an updated ISO. It will take some time before we can use it for the community.

As far as we know, ZFS is supported, and some users use it. What exactly do you mean by ZFS support?

-

I posted here because I am almost sure that I am facing serious bugs in the current version. Of course I can be wrong, but I do think that the described problems are not related to things I am doing wrong. So it is more that I intended to make the team aware of the problems than that I think I need help.

Because I assumed bugs, I upgraded immediately after the install; however, that did not solve the noted issues.

I also installed Ubuntu in a VM on my Windows 10 system and compiled XOA, and tried to create storage from there, which, not so strangely, led to the same error messages seen before (I tried to define storage types EXT and ZFS).

Regarding ZFS, I wrote that because I read somewhere that it was not yet formally supported.

Note that my server does have disks installed (not intended for XCP-ng). The disks are ZFS storage pools, active when I boot the server as a TrueNAS server. That should not be a problem, IMHO.

Note that I cannot detach them, since this also involves NVMe drives on the motherboard.

Also note that the SSD used for XCP-ng has been used for other purposes before, probably including ZFS partitions. I mention that here because I noted people reporting issues related to disks previously used for ZFS.
