VMware migration tool: we need your feedback!

-

@KPS the most stable approach is always to stop the VMs first.

Trying to warm migrate a running VM between different hypervisors is always tricky, if not brittle. We are working to harden this process, define its limits more clearly, and extend what it supports (for example vSAN).

What are the rough sizes of the VMs? How many VMs are there? What is the timeframe for the full migration?

Do you think you could give the XVA import a try on a stopped VM? In some of my tests, this migration completely saturates my network link and my disks, as shown in the PR. The fastest way is to have XO do the migration directly from the ESXi host. As an additional bonus, the disk produced is thin.

-

When migrating VMs from ESXi, the CPU count and memory allocation are carried over to XCP-ng.

That's all fine for me.

However, after import, the static min memory allocation cannot be reduced.

Is there a workaround?

[02:56 xcp01 ~]# xe vm-param-get uuid=... param-name=memory-static-min

8589934592

[02:57 xcp01 ~]# xe vm-memory-static-range-set uuid=... min=4294967296 max=8589934592

The dynamic memory range violates constraint static_min <= dynamic_min <= dynamic_max <= static_max.

-

If you want to change the min, just change the min, not the whole range.
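
For reference, the ordering quoted in the error message can be checked with a few lines of Python. This is only a sketch: the function name and the dynamic values are placeholders made up for illustration (the VM's actual dynamic range is not shown in this thread), and it is not how XAPI itself implements the check.

```python
GIB = 1024 ** 3  # bytes in one GiB


def limits_are_valid(static_min, dynamic_min, dynamic_max, static_max):
    """Return True when static_min <= dynamic_min <= dynamic_max <= static_max."""
    return static_min <= dynamic_min <= dynamic_max <= static_max


# Byte values taken from the xe commands above, converted to GiB.
current_static_min = 8589934592
requested_static_min = 4294967296
print(current_static_min / GIB, requested_static_min / GIB)

# Hypothetical dynamic ranges (assumptions, not values from the thread):
# a dynamic range that sits inside the static range satisfies the ordering...
example_ok = limits_are_valid(4 * GIB, 4 * GIB, 8 * GIB, 8 * GIB)
# ...while a dynamic min below the static min violates it.
example_bad = limits_are_valid(4 * GIB, 2 * GIB, 8 * GIB, 8 * GIB)
print(example_ok, example_bad)
```

All four values have to satisfy the ordering together, so it helps to read the current dynamic min and max before touching either static bound.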

-

Hello Olivier

The min value is disabled and cannot be changed via the GUI.

However, I found out that the static max value can be set below the current static min value (which looks a bit strange to me).

Example:

before change

=> min 8GB max 8GB

change max value via GUI to 4GB

after change

=> min 4GB max 4GB

Then change the max value to 8GB again.

=> min 4GB max 8GB

Best regards

Thomas

-

Yes, because there's no reason to modify the static min. I was asking why you are trying to change the whole range via the CLI.

But first, what do you want to achieve functionally speaking?

-

To be able to start with dynamic memory at 4GB (min max) for the Windows Guest.

And then, if needed, to be able to expand to 8GB (min max) on the fly.

However, it seems that the Windows guest (Server 2022) always sees the static max, correct?

-

Do you really need dynamic memory? I would prefer to avoid it if you can. Each migration will deflate the VM to the dynamic min, which is the cause of a lot of crashes and migration issues (if not taken into account, e.g. a dynamic min set too low compared to current usage). Keep it static as far as possible.

-

Ok. Thank you for the advice.

Will follow as recommended.

Best regards

-

Any plans to check how many vNICs there are and allow us to map each one correctly?

All of our VMware VMs have multiple vNICs and are in different port groups.

It's not a major hassle to change them post-migration, just a nice-to-have.

-

You mean to map each VIF to each Network of your choice?

-

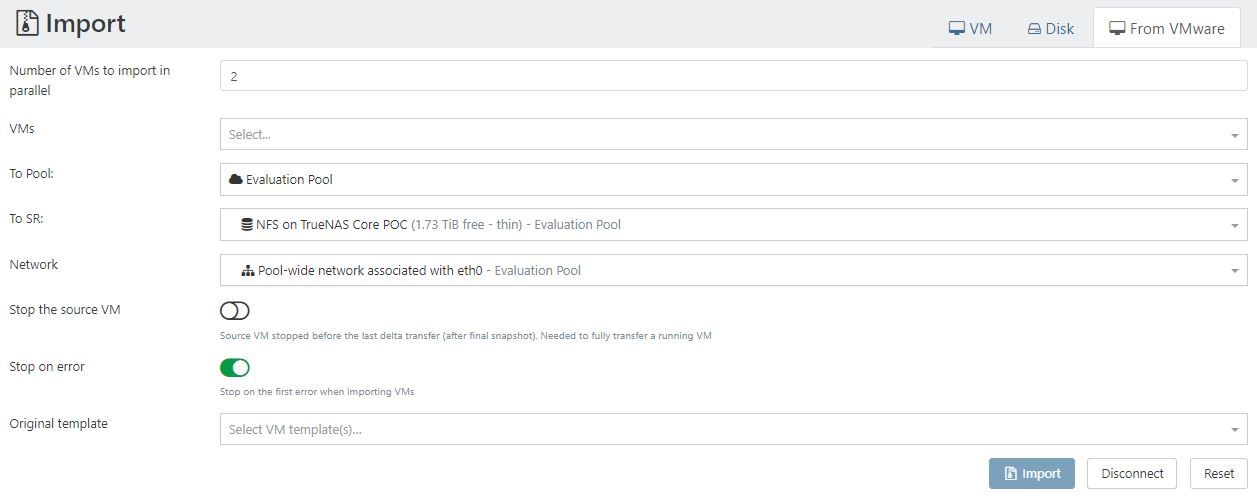

@olivierlambert - Yes, so when we do a V2V there is only 1 VIF listed to put the migrated VIFs in to, even if the VM has 5 VIFs

Is it possible to query each vmx and allow us to select a 'network' for each VIF that it has?
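
To illustrate what querying a vmx for its vNICs could look like, here is a rough Python sketch. The sample file content, the function name and the regular expression are all made up for illustration; it only assumes the common `ethernetN.present` / `ethernetN.networkName` keys, and other setups may record the network under different keys. It is not how XO reads the VM metadata.

```python
import re
from collections import defaultdict

# Hypothetical .vmx excerpt (not taken from a real VM in this thread).
SAMPLE_VMX = '''\
displayName = "example-vm"
ethernet0.present = "TRUE"
ethernet0.virtualDev = "vmxnet3"
ethernet0.networkName = "VM Network"
ethernet1.present = "TRUE"
ethernet1.virtualDev = "vmxnet3"
ethernet1.networkName = "DMZ"
ethernet2.present = "FALSE"
'''

# Matches lines such as: ethernet0.networkName = "VM Network"
LINE_RE = re.compile(r'^ethernet(\d+)\.([\w.]+)\s*=\s*"(.*)"\s*$')


def list_vnics(vmx_text):
    """Return (index, network name) for every vNIC marked as present."""
    nics = defaultdict(dict)
    for line in vmx_text.splitlines():
        match = LINE_RE.match(line.strip())
        if match:
            index, key, value = match.groups()
            nics[int(index)][key] = value
    return [
        (index, props.get("networkName"))
        for index, props in sorted(nics.items())
        if props.get("present", "").upper() == "TRUE"
    ]


vnics = list_vnics(SAMPLE_VMX)
for index, network in vnics:
    print(f"ethernet{index} -> {network}")
```

With a list like that, the import screen could offer one network selector per vNIC instead of a single one for the whole VM.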

-

Question for @florent

-

@Riven said in VMware migration tool: we need your feedback!:

@olivierlambert - Yes, so when we do a V2V there is only 1 VIF listed to put the migrated VIFs in to, even if the VM has 5 VIFs

Is it possible to query each vmx and allow us to select a 'network' for each VIF that it has?

Not for now.

-

@olivierlambert I wanted to comment on this topic. We have migrated around 450 VMs in 3 months using this feature. It really was a game changer, and without it we would never have been able to make the move to XCP-ng. During the migration process we saw the feature being improved, and overall speed and reliability were great. Compliments on this feature; I think it will help a lot of future customers migrating to XCP-ng.

-

Migration fails. Here is the raw log:

{ "id": "0lxdi3kqv", "properties": { "name": "importing vms 14", "userId": "9045a9e7-f9b2-44fd-81e9-02c1ea88b37b", "total": 1, "done": 0, "progress": 0 }, "start": 1718297723335, "status": "interrupted", "updatedAt": 1718298405138, "tasks": [ { "id": "c3bsox2ydxp", "properties": { "name": "importing vm 14" }, "start": 1718297723339, "status": "pending", "tasks": [ { "id": "lvtzb0k3sc9", "properties": { "name": "connecting to 10.x.x.12" }, "start": 1718297723341, "status": "success", "end": 1718297723756, "result": { "_events": {}, "_eventsCount": 0 } }, { "id": "n6tsha4ebu", "properties": { "name": "get metadata of 14" }, "start": 1718297723756, "status": "pending" } ] } ] }

-

@tcp_len We recently released a VMware migration checklist that you can view here -- https://help.vates.tech/kb/en-us/37/133

Frankly, that log doesn't give us much to go on, so you will need to provide more details if you want further assistance, e.g.:

- ESXi version

- Type of datastore

- Version of XOA or current commit if using XO from sources

- XCP-ng host version; fully patched?

- Warm or cold migration?

- Does the VM have any snapshots?

- etc
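
The log does at least show which step never completed. A minimal Python sketch for walking a task log like the one posted above: the helper names are made up for illustration, and the only assumption is the nested `tasks` / `properties.name` / `status` layout visible in that log.

```python
import json

# The raw log posted above, reflowed over several lines.
RAW_LOG = """
{ "id": "0lxdi3kqv",
  "properties": { "name": "importing vms 14",
                  "userId": "9045a9e7-f9b2-44fd-81e9-02c1ea88b37b",
                  "total": 1, "done": 0, "progress": 0 },
  "start": 1718297723335, "status": "interrupted", "updatedAt": 1718298405138,
  "tasks": [
    { "id": "c3bsox2ydxp", "properties": { "name": "importing vm 14" },
      "start": 1718297723339, "status": "pending",
      "tasks": [
        { "id": "lvtzb0k3sc9", "properties": { "name": "connecting to 10.x.x.12" },
          "start": 1718297723341, "status": "success", "end": 1718297723756,
          "result": { "_events": {}, "_eventsCount": 0 } },
        { "id": "n6tsha4ebu", "properties": { "name": "get metadata of 14" },
          "start": 1718297723756, "status": "pending" }
      ] }
  ] }
"""


def walk(task, depth=0):
    """Yield (depth, name, status) for a task and all of its subtasks."""
    yield depth, task["properties"]["name"], task["status"]
    for child in task.get("tasks", []):
        yield from walk(child, depth + 1)


def unfinished_leaves(task):
    """Yield (name, status) for leaf tasks that did not end in success."""
    children = task.get("tasks", [])
    if not children:
        if task["status"] != "success":
            yield task["properties"]["name"], task["status"]
        return
    for child in children:
        yield from unfinished_leaves(child)


log = json.loads(RAW_LOG)
for depth, name, status in walk(log):
    print("  " * depth + f"{name}: {status}")

stalled = list(unfinished_leaves(log))
elapsed_s = (log["updatedAt"] - log["start"]) / 1000  # timestamps are in ms
print(stalled, elapsed_s)
```

For this log, the connection step succeeded and the only unfinished leaf is "get metadata of 14", which was still pending when the task was marked interrupted about 11 minutes after it started. That narrows down where it stopped, but not why, hence the questions above.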

-

- ESXi version 6.5

- Data Store - VMFS5

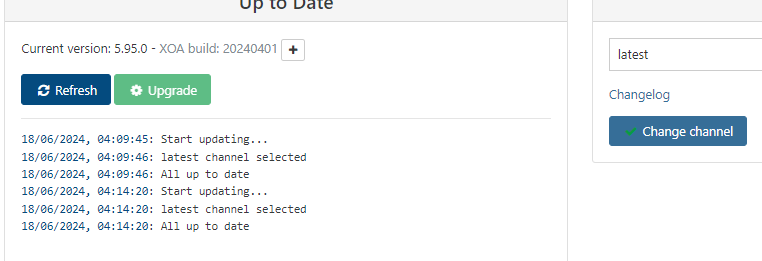

- XOA

- XCP-ng 8.2.1

- Cold migration

- No Snapshots

Based on the checklist posted, I should uninstall VMware Tools before attempting the migration, as well as run yum update on the XCP-ng host.

-

Could anyone explain what we should be setting for 'Original Template' in the Import VM screen?

Selecting a template appears to create a new empty VM with no apparent attempt to perform the migration.

Using Xen Orchestra from Sources (commit 636c8) against an ESXi 5.1 host, via an nginx reverse proxy (to work around legacy ciphers).

-

@andyh This is intended for you to select the template that best represents the source VM. If you are migrating a Debian VM, then pick the Debian option that best matches the source VM.

I'm unsure about compatibility with an ESXi version that old. Do you get any error messages, or have you checked the XO logs?

-

@Danp No error messages, and nothing in either Settings - Logs or via the CLI (journalctl).

It could be due to the age of the ESXi host or the fact I am using a reverse SSL proxy to cope with the legacy ciphers.

I'll look to export the VMs as OVAs and import them that way.