CBT: the thread to centralize your feedback

-

@rtjdamen We are investigating it as well

For the XO backend team, the next few months are focused on stabilization, bug fixes and maintenance

-

@julien-f @florent @olivierlambert I'm using XO from source, master (commit c5f6b), running Continuous Replication with NBD+CBT and Purge Snapshot. With a single NBD connection on the backup job, things work correctly. With two NBD connections, I see some orphan VDIs left behind almost every time the backup job runs. They are different ones each time.

-

I noticed when migrating some VDIs with CBT enabled from one NFS SR to another that the CBT-only snapshots are left behind. Perhaps this is why a full backup seems to be required after a storage migration?

-

@flakpyro I know from the other backup software we use that migrating CBT-enabled VDIs is not supported on Xen. When you migrate between SRs, CBT is disabled on that VDI, which causes a new full backup. This is by design.

-

@rtjdamen Makes sense. It would be nice if XOA cleaned up these small CBT snapshots after a migration from one SR to another, though.

-

@flakpyro Indeed, sounds like a bug. It should delete the snapshot, disable CBT, and then migrate to the other SR.

-

I've been away for a while.

Is there any known problem with CBT file restore?

-

@Tristis-Oris Working fine here, with cbt backups.

-

@Tristis-Oris Is your backed up VM using LVM for its root filesystem?

-

@Andrew most of them, yes.

-

@Tristis-Oris This is a known issue from before and not related to CBT backups.

Message Posting File restore error on LVMs

File level restoration not working on LVM partition #7029 (https://github.com/vatesfr/xen-orchestra/issues/7029)

-

@Andrew Got it. I missed that ticket.

-

@Andrew ext4 or windows

-

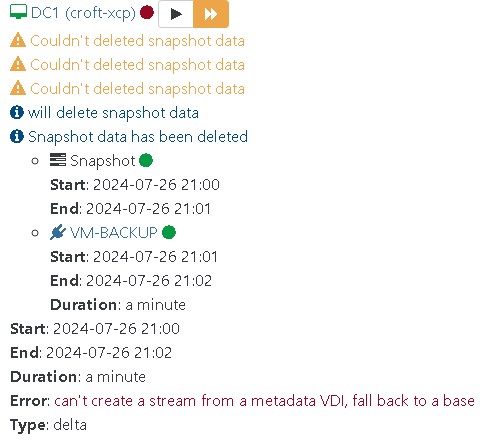

Hi, I am running from the sources and I'm almost up to date (991b4). Can anyone please tell me what this error means: "Error: can't create a stream from a metadata VDI, fall back to a base"? Restarting the backup results in a full backup rather than an incremental.

Thanks.

-

@frank-s This PR is where the update occurred -- https://github.com/vatesfr/xen-orchestra/pull/7836

@florent should be able to explain the meaning.

-

Coalesce is getting stuck on one SR (an NFS share) after running a Continuous Replication job. When I use an internal SSD as the target, this does not happen.

Jul 26 22:32:19 npb7 SMGC: [2253452] Another GC instance already active, exiting Jul 26 22:32:19 npb7 SMGC: [2253452] In cleanup Jul 26 22:32:19 npb7 SMGC: [2253452] SR 0d9e ('TBS-h574TX') (0 VDIs in 0 VHD trees): no changes Jul 26 22:32:49 npb7 SMGC: [2253692] === SR 0d9ee24c-ea59-e0e6-8c04-a9a65c22f110: gc === Jul 26 22:32:49 npb7 SMGC: [2253722] Will finish as PID [2253723] Jul 26 22:32:49 npb7 SMGC: [2253692] New PID [2253722] Jul 26 22:32:49 npb7 SMGC: [2253723] Found 0 cache files Jul 26 22:32:49 npb7 SMGC: [2253723] Another GC instance already active, exiting Jul 26 22:32:49 npb7 SMGC: [2253723] In cleanup Jul 26 22:32:49 npb7 SMGC: [2253723] SR 0d9e ('TBS-h574TX') (0 VDIs in 0 VHD trees): no changes Jul 26 22:33:19 npb7 SMGC: [2253917] === SR 0d9ee24c-ea59-e0e6-8c04-a9a65c22f110: gc === Jul 26 22:33:19 npb7 SMGC: [2253947] Will finish as PID [2253948] Jul 26 22:33:19 npb7 SMGC: [2253917] New PID [2253947] Jul 26 22:33:19 npb7 SMGC: [2253948] Found 0 cache files Jul 26 22:33:19 npb7 SMGC: [2253948] Another GC instance already active, exiting Jul 26 22:33:19 npb7 SMGC: [2253948] In cleanup Jul 26 22:33:19 npb7 SMGC: [2253948] SR 0d9e ('TBS-h574TX') (0 VDIs in 0 VHD trees): no changes Jul 26 22:33:49 npb7 SMGC: [2254139] === SR 0d9ee24c-ea59-e0e6-8c04-a9a65c22f110: gc === Jul 26 22:33:49 npb7 SMGC: [2254172] Will finish as PID [2254173] Jul 26 22:33:49 npb7 SMGC: [2254139] New PID [2254172] Jul 26 22:33:49 npb7 SMGC: [2254173] Found 0 cache files Jul 26 22:33:49 npb7 SMGC: [2254173] Another GC instance already active, exiting Jul 26 22:33:49 npb7 SMGC: [2254173] In cleanup Jul 26 22:33:49 npb7 SMGC: [2254173] SR 0d9e ('TBS-h574TX') (0 VDIs in 0 VHD trees): no changes Jul 26 22:33:49 npb7 SMGC: [2251795] Child process completed successfully Jul 26 22:33:49 npb7 SMGC: [2251795] GC active, quiet period ended Jul 26 22:33:50 npb7 SMGC: [2251795] SR 0d9e
('TBS-h574TX') (6 VDIs in 5 VHD trees): no changes Jul 26 22:33:50 npb7 SMGC: [2251795] Got on-boot for 82664934(60.000G/2.914G?): 'persist' Jul 26 22:33:50 npb7 SMGC: [2251795] Got allow_caching for 82664934(60.000G/2.914G?): False Jul 26 22:33:50 npb7 SMGC: [2251795] Got other-config for 82664934(60.000G/2.914G?): {'xo:backup:job': '4c084697-6efd-4e35-a4ff-74ae50824c8b', 'xo:backup:datetime': '20240726T20:00:30Z', 'xo:backup:schedule': 'b1cef1e3-e313-409b-ad40-017076f115ce', 'xo:backup:vm': 'd6a5d420-72e6-5c87-a3af-b5eb5c4a44dd', 'xo:backup:sr': '0d9ee24c-ea59-e0e6-8c04-a9a65c22f110', 'content_id': '4be773b3-10dc-9a12-1f82-f575f5f6555b', 'xo:copy_of': 'bb4d2fa2-6241-4856-b196-39f16894f5ef'} Jul 26 22:33:50 npb7 SMGC: [2251795] Removed vhd-blocks from 82664934(60.000G/2.914G?) Jul 26 22:33:50 npb7 SMGC: [2251795] Set vhd-blocks = eJztV72O00AQHjsRWMJkU15xOlknRJ0yBdIZiQegpMwbQIUol7sGURAegIJH4AmQQRQUFHmEK+m4coWQlh2vZz1ezzo5paDhK+zZmdlvxrM/k9zk9RwY8gKfSreDGRgALzYPoPzm3tbiOGMzSkAvqEAPiAL8fNlG1hjFYFS2ARLOWTbjw/clnGL6NZqW3PL7BJTneRrHzxPJdVBCXOP1huu+M/8V8S9ycz3z39/yKGM4pUB9KzyE7TtXr1GKS8nZoSHhflEacw5faH17l3IwYeNfOVQNiHjcfXbxmSn7uhQR37kv9ifIdvh+k8jzUPw4ixSb2MOIImEHL+E10PePq5a9COKdyET7rmXNNLO4xX7mV8T4h7FkdmqlvQ2fpp9WD8hT5yUGJZGdUDJK+w02Xwwcw+4fQgs6CZj3dquloyA5935NmhCNIz4TjOlpHA1OeYWWLZmwdu0N4i6rOXcEOrMdy/qM1f9Y9OfaCT+DWntdiBMtAmZVTSZRMZn2p1wfaX0FL630be4fu8eF3T2DtJSu+qyS2/nSv5Zf9+Yx/GYlpFUJspL2LNN86CowZutRCoXNojFSXriTR661wBMOICsZCW8n4k9j8kiqj1N+Jkt/94JalYjDttpEWVXHknQIjsHDWOMSqlu5EH1XQTLR+nQs47Rv4Em+p/2PYDjPSnbBprfCG3gBj2CNqjW3F9QUdSJGSo8NbiOpxW2g9GmSh8Vh1Wz8C9vZiNGklj3qjGFiy7s0hhqeDVd0uBC84m6YG62FavPw+7BORJF1glVCyk0f/cvs4Ph0k+2wYLQZu/sN2zlK1BXt4PcSL+hePO9c73VUFJ/WtJqefh0rlHCxckwa26j4X2Laq8fs6o+Qw/FIxscmab1of7nCd8C8L+wYSZ7/mIT+d3Ht1i3nX50i2oE= for 82664934(60.000G/2.914G?) Jul 26 22:33:50 npb7 SMGC: [2251795] Set vhd-blocks = eJz73/7//H+ag8O4pRhobzteMND2N4zaP2o/0eD3ANtPdfBngPPfjxFu/4eBLv9GwcgGDwbW+oFO/w2j9o/aPwpGMGhgGEgwUPbT2/+4Qh8Aq3GH/w== for *d3a809af(60.000G/54.305G?) 
Jul 26 22:33:50 npb7 SMGC: [2251795] Num combined blocks = 27750 Jul 26 22:33:50 npb7 SMGC: [2251795] Coalesced size = 54.305G Jul 26 22:33:50 npb7 SMGC: [2251795] Leaf-coalesce candidate: 82664934(60.000G/2.914G?) Jul 26 22:33:50 npb7 SMGC: [2251795] SR 0d9e ('TBS-h574TX') (6 VDIs in 5 VHD trees): no changes Jul 26 22:33:50 npb7 SMGC: [2251795] Got sm-config for *d3a809af(60.000G/54.305G?): {'vhd-blocks': 'eJz73/7//H+ag8O4pRhobzteMND2N4zaP2o/0eD3ANtPdfBngPPfjxFu/4eBLv9GwcgGDwbW+oFO/w2j9o/aPwpGMGhgGEgwUPbT2/+4Qh8Aq3GH/w=='} Jul 26 22:33:50 npb7 SMGC: [2251795] Got on-boot for 82664934(60.000G/2.914G?): 'persist' Jul 26 22:33:50 npb7 SMGC: [2251795] Got allow_caching for 82664934(60.000G/2.914G?): False Jul 26 22:33:50 npb7 SMGC: [2251795] Got other-config for 82664934(60.000G/2.914G?): {'xo:backup:job': '4c084697-6efd-4e35-a4ff-74ae50824c8b', 'xo:backup:datetime': '20240726T20:00:30Z', 'xo:backup:schedule': 'b1cef1e3-e313-409b-ad40-017076f115ce', 'xo:backup:vm': 'd6a5d420-72e6-5c87-a3af-b5eb5c4a44dd', 'xo:backup:sr': '0d9ee24c-ea59-e0e6-8c04-a9a65c22f110', 'content_id': '4be773b3-10dc-9a12-1f82-f575f5f6555b', 'xo:copy_of': 'bb4d2fa2-6241-4856-b196-39f16894f5ef'} Jul 26 22:33:50 npb7 SMGC: [2251795] Removed vhd-blocks from 82664934(60.000G/2.914G?) 
Jul 26 22:33:50 npb7 SMGC: [2251795] Set vhd-blocks = eJztV72O00AQHjsRWMJkU15xOlknRJ0yBdIZiQegpMwbQIUol7sGURAegIJH4AmQQRQUFHmEK+m4coWQlh2vZz1ezzo5paDhK+zZmdlvxrM/k9zk9RwY8gKfSreDGRgALzYPoPzm3tbiOGMzSkAvqEAPiAL8fNlG1hjFYFS2ARLOWTbjw/clnGL6NZqW3PL7BJTneRrHzxPJdVBCXOP1huu+M/8V8S9ycz3z39/yKGM4pUB9KzyE7TtXr1GKS8nZoSHhflEacw5faH17l3IwYeNfOVQNiHjcfXbxmSn7uhQR37kv9ifIdvh+k8jzUPw4ixSb2MOIImEHL+E10PePq5a9COKdyET7rmXNNLO4xX7mV8T4h7FkdmqlvQ2fpp9WD8hT5yUGJZGdUDJK+w02Xwwcw+4fQgs6CZj3dquloyA5935NmhCNIz4TjOlpHA1OeYWWLZmwdu0N4i6rOXcEOrMdy/qM1f9Y9OfaCT+DWntdiBMtAmZVTSZRMZn2p1wfaX0FL630be4fu8eF3T2DtJSu+qyS2/nSv5Zf9+Yx/GYlpFUJspL2LNN86CowZutRCoXNojFSXriTR661wBMOICsZCW8n4k9j8kiqj1N+Jkt/94JalYjDttpEWVXHknQIjsHDWOMSqlu5EH1XQTLR+nQs47Rv4Em+p/2PYDjPSnbBprfCG3gBj2CNqjW3F9QUdSJGSo8NbiOpxW2g9GmSh8Vh1Wz8C9vZiNGklj3qjGFiy7s0hhqeDVd0uBC84m6YG62FavPw+7BORJF1glVCyk0f/cvs4Ph0k+2wYLQZu/sN2zlK1BXt4PcSL+hePO9c73VUFJ/WtJqefh0rlHCxckwa26j4X2Laq8fs6o+Qw/FIxscmab1of7nCd8C8L+wYSZ7/mIT+d3Ht1i3nX50i2oE= for 82664934(60.000G/2.914G?) Jul 26 22:33:50 npb7 SMGC: [2251795] Set vhd-blocks = eJz73/7//H+ag8O4pRhobzteMND2N4zaP2o/0eD3ANtPdfBngPPfjxFu/4eBLv9GwcgGDwbW+oFO/w2j9o/aPwpGMGhgGEgwUPbT2/+4Qh8Aq3GH/w== for *d3a809af(60.000G/54.305G?) Jul 26 22:33:50 npb7 SMGC: [2251795] Num combined blocks = 27750 Jul 26 22:33:50 npb7 SMGC: [2251795] Coalesced size = 54.305G Jul 26 22:33:50 npb7 SMGC: [2251795] Leaf-coalesce candidate: 82664934(60.000G/2.914G?) Jul 26 22:33:50 npb7 SMGC: [2251795] Leaf-coalescing 82664934(60.000G/2.914G?) -> *d3a809af(60.000G/54.305G?) 
Jul 26 22:33:50 npb7 SMGC: [2251795] SR 0d9e ('TBS-h574TX') (6 VDIs in 5 VHD trees): no changes Jul 26 22:33:50 npb7 SMGC: [2251795] Got other-config for 82664934(60.000G/2.914G?): {'xo:backup:job': '4c084697-6efd-4e35-a4ff-74ae50824c8b', 'xo:backup:datetime': '20240726T20:00:30Z', 'xo:backup:schedule': 'b1cef1e3-e313-409b-ad40-017076f115ce', 'xo:backup:vm': 'd6a5d420-72e6-5c87-a3af-b5eb5c4a44dd', 'xo:backup:sr': '0d9ee24c-ea59-e0e6-8c04-a9a65c22f110', 'content_id': '4be773b3-10dc-9a12-1f82-f575f5f6555b', 'xo:copy_of': 'bb4d2fa2-6241-4856-b196-39f16894f5ef'} Jul 26 22:33:50 npb7 SMGC: [2251795] Running VHD coalesce on 82664934(60.000G/2.914G?) Jul 26 22:34:02 npb7 SMGC: [2251795] *~*~*~*~*~*~*~*~*~*~*~*~*~*~*~*~*~*~*~*~* Jul 26 22:34:02 npb7 SMGC: [2251795] *********************** Jul 26 22:34:02 npb7 SMGC: [2251795] * E X C E P T I O N * Jul 26 22:34:02 npb7 SMGC: [2251795] *********************** Jul 26 22:34:02 npb7 SMGC: [2251795] _doCoalesceLeaf: EXCEPTION <class 'util.SMException'>, Timed out Jul 26 22:34:02 npb7 SMGC: [2251795] File "/opt/xensource/sm/cleanup.py", line 2450, in _liveLeafCoalesce Jul 26 22:34:02 npb7 SMGC: [2251795] self._doCoalesceLeaf(vdi) Jul 26 22:34:02 npb7 SMGC: [2251795] File "/opt/xensource/sm/cleanup.py", line 2484, in _doCoalesceLeaf Jul 26 22:34:02 npb7 SMGC: [2251795] vdi._coalesceVHD(timeout) Jul 26 22:34:02 npb7 SMGC: [2251795] File "/opt/xensource/sm/cleanup.py", line 934, in _coalesceVHD Jul 26 22:34:02 npb7 SMGC: [2251795] self.sr.uuid, abortTest, VDI.POLL_INTERVAL, timeOut) Jul 26 22:34:02 npb7 SMGC: [2251795] File "/opt/xensource/sm/cleanup.py", line 189, in runAbortable Jul 26 22:34:02 npb7 SMGC: [2251795] raise util.SMException("Timed out") Jul 26 22:34:02 npb7 SMGC: [2251795] Jul 26 22:34:02 npb7 SMGC: [2251795] *~*~*~*~*~*~*~*~*~*~*~*~*~*~*~*~*~*~*~*~* Jul 26 22:34:02 npb7 SMGC: [2251795] *** UNDO LEAF-COALESCE Jul 26 22:34:02 npb7 SMGC: [2251795] *** leaf-coalesce undo successful Jul 26 22:34:02 npb7 SMGC: [2251795] 
Got sm-config for 82664934(60.000G/2.914G?): {'paused': 'true', 'vhd-blocks': 'eJztV72O00AQHjsRWMJkU15xOlknRJ0yBdIZiQegpMwbQIUol7sGURAegIJH4AmQQRQUFHmEK+m4coWQlh2vZz1ezzo5paDhK+zZmdlvxrM/k9zk9RwY8gKfSreDGRgALzYPoPzm3tbiOGMzSkAvqEAPiAL8fNlG1hjFYFS2ARLOWTbjw/clnGL6NZqW3PL7BJTneRrHzxPJdVBCXOP1huu+M/8V8S9ycz3z39/yKGM4pUB9KzyE7TtXr1GKS8nZoSHhflEacw5faH17l3IwYeNfOVQNiHjcfXbxmSn7uhQR37kv9ifIdvh+k8jzUPw4ixSb2MOIImEHL+E10PePq5a9COKdyET7rmXNNLO4xX7mV8T4h7FkdmqlvQ2fpp9WD8hT5yUGJZGdUDJK+w02Xwwcw+4fQgs6CZj3dquloyA5935NmhCNIz4TjOlpHA1OeYWWLZmwdu0N4i6rOXcEOrMdy/qM1f9Y9OfaCT+DWntdiBMtAmZVTSZRMZn2p1wfaX0FL630be4fu8eF3T2DtJSu+qyS2/nSv5Zf9+Yx/GYlpFUJspL2LNN86CowZutRCoXNojFSXriTR661wBMOICsZCW8n4k9j8kiqj1N+Jkt/94JalYjDttpEWVXHknQIjsHDWOMSqlu5EH1XQTLR+nQs47Rv4Em+p/2PYDjPSnbBprfCG3gBj2CNqjW3F9QUdSJGSo8NbiOpxW2g9GmSh8Vh1Wz8C9vZiNGklj3qjGFiy7s0hhqeDVd0uBC84m6YG62FavPw+7BORJF1glVCyk0f/cvs4Ph0k+2wYLQZu/sN2zlK1BXt4PcSL+hePO9c73VUFJ/WtJqefh0rlHCxckwa26j4X2Laq8fs6o+Qw/FIxscmab1of7nCd8C8L+wYSZ7/mIT+d3Ht1i3nX50i2oE=', 'vhd-parent': 'd3a809af-ea4d-438b-a7e3-bc6a125bd35e'} Jul 26 22:34:02 npb7 SMGC: [2251795] Unpausing VDI 82664934(60.000G/2.914G?) 
Jul 26 22:34:02 npb7 SMGC: [2251795] Got sm-config for 82664934(60.000G/2.914G?): {'vhd-blocks': 'eJztV72O00AQHjsRWMJkU15xOlknRJ0yBdIZiQegpMwbQIUol7sGURAegIJH4AmQQRQUFHmEK+m4coWQlh2vZz1ezzo5paDhK+zZmdlvxrM/k9zk9RwY8gKfSreDGRgALzYPoPzm3tbiOGMzSkAvqEAPiAL8fNlG1hjFYFS2ARLOWTbjw/clnGL6NZqW3PL7BJTneRrHzxPJdVBCXOP1huu+M/8V8S9ycz3z39/yKGM4pUB9KzyE7TtXr1GKS8nZoSHhflEacw5faH17l3IwYeNfOVQNiHjcfXbxmSn7uhQR37kv9ifIdvh+k8jzUPw4ixSb2MOIImEHL+E10PePq5a9COKdyET7rmXNNLO4xX7mV8T4h7FkdmqlvQ2fpp9WD8hT5yUGJZGdUDJK+w02Xwwcw+4fQgs6CZj3dquloyA5935NmhCNIz4TjOlpHA1OeYWWLZmwdu0N4i6rOXcEOrMdy/qM1f9Y9OfaCT+DWntdiBMtAmZVTSZRMZn2p1wfaX0FL630be4fu8eF3T2DtJSu+qyS2/nSv5Zf9+Yx/GYlpFUJspL2LNN86CowZutRCoXNojFSXriTR661wBMOICsZCW8n4k9j8kiqj1N+Jkt/94JalYjDttpEWVXHknQIjsHDWOMSqlu5EH1XQTLR+nQs47Rv4Em+p/2PYDjPSnbBprfCG3gBj2CNqjW3F9QUdSJGSo8NbiOpxW2g9GmSh8Vh1Wz8C9vZiNGklj3qjGFiy7s0hhqeDVd0uBC84m6YG62FavPw+7BORJF1glVCyk0f/cvs4Ph0k+2wYLQZu/sN2zlK1BXt4PcSL+hePO9c73VUFJ/WtJqefh0rlHCxckwa26j4X2Laq8fs6o+Qw/FIxscmab1of7nCd8C8L+wYSZ7/mIT+d3Ht1i3nX50i2oE=', 'vhd-parent': 'd3a809af-ea4d-438b-a7e3-bc6a125bd35e'} Jul 26 22:34:02 npb7 SMGC: [2251795] In cleanup Jul 26 22:34:02 npb7 SMGC: [2251795] SR 0d9e ('TBS-h574TX') (6 VDIs in 5 VHD trees): no changes Jul 26 22:34:02 npb7 SMGC: [2251795] Removed leaf-coalesce from 82664934(60.000G/2.914G?) 
Jul 26 22:34:02 npb7 SMGC: [2251795] *~*~*~*~*~*~*~*~*~*~*~*~*~*~*~*~*~*~*~*~* Jul 26 22:34:02 npb7 SMGC: [2251795] *********************** Jul 26 22:34:02 npb7 SMGC: [2251795] * E X C E P T I O N * Jul 26 22:34:02 npb7 SMGC: [2251795] *********************** Jul 26 22:34:02 npb7 SMGC: [2251795] leaf-coalesce: EXCEPTION <class 'util.SMException'>, Timed out Jul 26 22:34:02 npb7 SMGC: [2251795] File "/opt/xensource/sm/cleanup.py", line 2046, in coalesceLeaf Jul 26 22:34:02 npb7 SMGC: [2251795] self._coalesceLeaf(vdi) Jul 26 22:34:02 npb7 SMGC: [2251795] File "/opt/xensource/sm/cleanup.py", line 2328, in _coalesceLeaf Jul 26 22:34:02 npb7 SMGC: [2251795] return self._liveLeafCoalesce(vdi) Jul 26 22:34:02 npb7 SMGC: [2251795] File "/opt/xensource/sm/cleanup.py", line 2450, in _liveLeafCoalesce Jul 26 22:34:02 npb7 SMGC: [2251795] self._doCoalesceLeaf(vdi) Jul 26 22:34:02 npb7 SMGC: [2251795] File "/opt/xensource/sm/cleanup.py", line 2484, in _doCoalesceLeaf Jul 26 22:34:02 npb7 SMGC: [2251795] vdi._coalesceVHD(timeout) Jul 26 22:34:02 npb7 SMGC: [2251795] File "/opt/xensource/sm/cleanup.py", line 934, in _coalesceVHD Jul 26 22:34:02 npb7 SMGC: [2251795] self.sr.uuid, abortTest, VDI.POLL_INTERVAL, timeOut) Jul 26 22:34:02 npb7 SMGC: [2251795] File "/opt/xensource/sm/cleanup.py", line 189, in runAbortable Jul 26 22:34:02 npb7 SMGC: [2251795] raise util.SMException("Timed out") Jul 26 22:34:02 npb7 SMGC: [2251795] Jul 26 22:34:02 npb7 SMGC: [2251795] *~*~*~*~*~*~*~*~*~*~*~*~*~*~*~*~*~*~*~*~* Jul 26 22:34:02 npb7 SMGC: [2251795] Leaf-coalesce failed on 82664934(60.000G/2.914G?), skipping Jul 26 22:34:02 npb7 SMGC: [2251795] In cleanup Jul 26 22:34:02 npb7 SMGC: [2251795] Starting asynch srUpdate for SR 0d9ee24c-ea59-e0e6-8c04-a9a65c22f110 Jul 26 22:34:03 npb7 SMGC: [2251795] SR.update_asynch status changed to [success] Jul 26 22:34:03 npb7 SMGC: [2251795] SR 0d9e ('TBS-h574TX') (6 VDIs in 5 VHD trees): no changes Jul 26 22:34:03 npb7 SMGC: [2251795] Got sm-config for 
*d3a809af(60.000G/54.305G?): {'vhd-blocks': 'eJz73/7//H+ag8O4pRhobzteMND2N4zaP2o/0eD3ANtPdfBngPPfjxFu/4eBLv9GwcgGDwbW+oFO/w2j9o/aPwpGMGhgGEgwUPbT2/+4Qh8Aq3GH/w=='} Jul 26 22:34:03 npb7 SMGC: [2251795] No work, exiting Jul 26 22:34:03 npb7 SMGC: [2251795] GC process exiting, no work left Jul 26 22:34:03 npb7 SMGC: [2251795] In cleanup Jul 26 22:34:03 npb7 SMGC: [2251795] SR 0d9e ('TBS-h574TX') (6 VDIs in 5 VHD trees): no changes Jul 26 22:34:19 npb7 SMGC: [2254446] === SR 0d9ee24c-ea59-e0e6-8c04-a9a65c22f110: gc === Jul 26 22:34:19 npb7 SMGC: [2254476] Will finish as PID [2254477] Jul 26 22:34:19 npb7 SMGC: [2254446] New PID [2254476] Jul 26 22:34:19 npb7 SMGC: [2254477] Found 0 cache files Jul 26 22:34:19 npb7 SMGC: [2254477] SR 0d9e ('TBS-h574TX') (6 VDIs in 5 VHD trees): Jul 26 22:34:19 npb7 SMGC: [2254477] 3a763dec(48.002G/29.521G?) Jul 26 22:34:19 npb7 SMGC: [2254477] 561867f5(20.000G/19.309G?) Jul 26 22:34:19 npb7 SMGC: [2254477] ab0314a9(16.000G/7.636G?) Jul 26 22:34:19 npb7 SMGC: [2254477] d54d9ec4(24.002G/15.706G?) Jul 26 22:34:19 npb7 SMGC: [2254477] *d3a809af(60.000G/54.305G?) Jul 26 22:34:19 npb7 SMGC: [2254477] 82664934(60.000G/2.914G?) Jul 26 22:34:19 npb7 SMGC: [2254477] Jul 26 22:34:19 npb7 SMGC: [2254477] Got on-boot for 82664934(60.000G/2.914G?): 'persist' Jul 26 22:34:19 npb7 SMGC: [2254477] Got allow_caching for 82664934(60.000G/2.914G?): False Jul 26 22:34:19 npb7 SMGC: [2254477] Got other-config for 82664934(60.000G/2.914G?): {'xo:backup:job': '4c084697-6efd-4e35-a4ff-74ae50824c8b', 'xo:backup:datetime': '20240726T20:00:30Z', 'xo:backup:schedule': 'b1cef1e3-e313-409b-ad40-017076f115ce', 'xo:backup:vm': 'd6a5d420-72e6-5c87-a3af-b5eb5c4a44dd', 'xo:backup:sr': '0d9ee24c-ea59-e0e6-8c04-a9a65c22f110', 'content_id': '4be773b3-10dc-9a12-1f82-f575f5f6555b', 'xo:copy_of': 'bb4d2fa2-6241-4856-b196-39f16894f5ef'} Jul 26 22:34:19 npb7 SMGC: [2254477] Removed vhd-blocks from 82664934(60.000G/2.914G?) 
Jul 26 22:34:19 npb7 SMGC: [2254477] Set vhd-blocks = eJztV72O00AQHjsRWMJkU15xOlknRJ0yBdIZiQegpMwbQIUol7sGURAegIJH4AmQQRQUFHmEK+m4coWQlh2vZz1ezzo5paDhK+zZmdlvxrM/k9zk9RwY8gKfSreDGRgALzYPoPzm3tbiOGMzSkAvqEAPiAL8fNlG1hjFYFS2ARLOWTbjw/clnGL6NZqW3PL7BJTneRrHzxPJdVBCXOP1huu+M/8V8S9ycz3z39/yKGM4pUB9KzyE7TtXr1GKS8nZoSHhflEacw5faH17l3IwYeNfOVQNiHjcfXbxmSn7uhQR37kv9ifIdvh+k8jzUPw4ixSb2MOIImEHL+E10PePq5a9COKdyET7rmXNNLO4xX7mV8T4h7FkdmqlvQ2fpp9WD8hT5yUGJZGdUDJK+w02Xwwcw+4fQgs6CZj3dquloyA5935NmhCNIz4TjOlpHA1OeYWWLZmwdu0N4i6rOXcEOrMdy/qM1f9Y9OfaCT+DWntdiBMtAmZVTSZRMZn2p1wfaX0FL630be4fu8eF3T2DtJSu+qyS2/nSv5Zf9+Yx/GYlpFUJspL2LNN86CowZutRCoXNojFSXriTR661wBMOICsZCW8n4k9j8kiqj1N+Jkt/94JalYjDttpEWVXHknQIjsHDWOMSqlu5EH1XQTLR+nQs47Rv4Em+p/2PYDjPSnbBprfCG3gBj2CNqjW3F9QUdSJGSo8NbiOpxW2g9GmSh8Vh1Wz8C9vZiNGklj3qjGFiy7s0hhqeDVd0uBC84m6YG62FavPw+7BORJF1glVCyk0f/cvs4Ph0k+2wYLQZu/sN2zlK1BXt4PcSL+hePO9c73VUFJ/WtJqefh0rlHCxckwa26j4X2Laq8fs6o+Qw/FIxscmab1of7nCd8C8L+wYSZ7/mIT+d3Ht1i3nX50i2oE= for 82664934(60.000G/2.914G?) Jul 26 22:34:19 npb7 SMGC: [2254477] Set vhd-blocks = eJz73/7//H+ag8O4pRhobzteMND2N4zaP2o/0eD3ANtPdfBngPPfjxFu/4eBLv9GwcgGDwbW+oFO/w2j9o/aPwpGMGhgGEgwUPbT2/+4Qh8Aq3GH/w== for *d3a809af(60.000G/54.305G?) Jul 26 22:34:19 npb7 SMGC: [2254477] Num combined blocks = 27750 Jul 26 22:34:19 npb7 SMGC: [2254477] Coalesced size = 54.305G Jul 26 22:34:19 npb7 SMGC: [2254477] Leaf-coalesce candidate: 82664934(60.000G/2.914G?) Jul 26 22:34:19 npb7 SMGC: [2254477] GC active, about to go quiet Jul 26 22:34:49 npb7 SMGC: [2254681] === SR 0d9ee24c-ea59-e0e6-8c04-a9a65c22f110: gc === Jul 26 22:34:49 npb7 SMGC: [2254715] Will finish as PID [2254716] Jul 26 22:34:49 npb7 SMGC: [2254681] New PID [2254715] Jul 26 22:34:49 npb7 SMGC: [2254716] Found 0 cache files Jul 26 22:34:49 npb7 SMGC: [2254716] Another GC instance already active, exiting Jul 26 22:34:49 npb7 SMGC: [2254716] In cleanup Jul 26 22:34:49 npb7 SMGC: [2254716] SR 0d9e ('TBS-h574TX') (0 VDIs in 0 VHD trees): no changes -

This happens because you are writing faster in the VM than the garbage collector can merge/coalesce.

You could try changing the leaf coalesce timeout value to see if that helps.
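
A rough way to reason about the timeout is to ask what merge speed the storage would have to sustain. The sketch below is only a back-of-the-envelope model, not the actual cleanup.py logic: it assumes the coalesce has to merge the leaf's full allocated size (2.914G for VDI 82664934 in the log above, with "G" read as GiB) before the timeout expires. The 12 s figure is the gap in that log between "Running VHD coalesce" (22:33:50) and the "Timed out" exception (22:34:02); 300 s is just a hypothetical longer timeout for comparison. The function name is made up for illustration.

```python
# Rough model (an assumption, not the real cleanup.py behaviour): a live
# leaf-coalesce only succeeds if the whole allocated leaf can be merged
# into its parent before the timeout expires.

GIB = 1024 ** 3
MIB = 1024 ** 2


def required_throughput_mib_s(leaf_bytes: float, timeout_s: float) -> float:
    """Minimum sustained merge speed (MiB/s) needed to finish within timeout_s."""
    if timeout_s <= 0:
        raise ValueError("timeout_s must be positive")
    return leaf_bytes / timeout_s / MIB


# Allocated size of leaf 82664934 as reported in the log ("2.914G", read as GiB).
leaf_bytes = 2.914 * GIB

# 12 s: observed window before "Timed out" in the log.
# 300 s: hypothetical longer timeout, for comparison only.
for timeout_s in (12, 300):
    speed = required_throughput_mib_s(leaf_bytes, timeout_s)
    print(f"timeout {timeout_s:>3} s -> needs at least {speed:.1f} MiB/s")
```

If the SR cannot sustain the higher figure while the VM keeps writing, a timeout within the short window is the expected outcome under this model, and a longer timeout lowers the bar accordingly.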

-

@manilx we use the values below in /opt/xensource/sm/cleanup.py:

LIVE_LEAF_COALESCE_MAX_SIZE = 1024 * 1024 * 1024 # bytes

LIVE_LEAF_COALESCE_TIMEOUT = 300 # seconds

Since then we have not seen issues like these. Leaf coalesce is different from a normal coalesce when a snapshot is left behind. I think your problem is:

Problem 2: Coalesce due to Timed-out:

Example:

Nov 16 23:25:14 vm6 SMGC: [15312] raise util.SMException("Timed out")
Nov 16 23:25:14 vm6 SMGC: [15312]
Nov 16 23:25:14 vm6 SMGC: [15312] *
Nov 16 23:25:14 vm6 SMGC: [15312] *** UNDO LEAF-COALESCE

This is when the VDI is currently under significant IO stress. If possible, take the VM offline and do an offline coalesce or do the coalesce when the VM has less load. Upcoming versions of XenServer will address this issue more efficiently.

-

I have been seeing this error recently: "VDI must be free or attached to exactly one VM", with quite a few snapshots attached to the control domain when I look at the health dashboard. I am not sure if this is related to the CBT backups but wanted to ask. It seems to be happening only on my delta backups that have CBT enabled.

-

@olivierlambert Actually, I don't think this is the case. The VMs are completely idle, doing nothing.
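
One way to check how long each coalesce attempt actually ran before giving up is to pull the timings out of the SMGC log. The sketch below is a minimal, unofficial helper: it splits a flattened paste like the one earlier in the thread back into entries and pairs each "Running VHD coalesce on ..." line with the next "Timed out" exception from the same GC process. The function names are made up for illustration, and the year is an assumption (the log lines carry none; 2024 is taken from the xo:backup:datetime field in the paste).

```python
import re
from datetime import datetime

# An SMGC entry starts with a syslog-style timestamp such as "Jul 26 22:33:50".
ENTRY_START = re.compile(r"(?=[A-Z][a-z]{2} +\d{1,2} \d{2}:\d{2}:\d{2} )")
ENTRY = re.compile(
    r"^(?P<ts>[A-Z][a-z]{2} +\d{1,2} \d{2}:\d{2}:\d{2}) \S+ SMGC: "
    r"\[(?P<pid>\d+)\] ?(?P<msg>.*)$",
    re.S,
)
RUNNING = re.compile(r"Running VHD coalesce on (\S+)")


def split_entries(text, year=2024):
    """Split a flattened log paste into (timestamp, pid, message) tuples.

    The year is an assumption: syslog timestamps do not include one.
    """
    entries = []
    for chunk in ENTRY_START.split(text):
        m = ENTRY.match(chunk.strip())
        if m:
            ts = datetime.strptime(f"{year} {m['ts']}", "%Y %b %d %H:%M:%S")
            entries.append((ts, m["pid"], m["msg"].strip()))
    return entries


def timed_out_coalesces(entries):
    """Return (vdi, seconds) for each coalesce that ended in 'Timed out'."""
    started = {}  # GC pid -> (vdi label, start time)
    results = []
    for ts, pid, msg in entries:
        m = RUNNING.search(msg)
        if m:
            started[pid] = (m.group(1), ts)
        elif "EXCEPTION" in msg and "Timed out" in msg and pid in started:
            vdi, t0 = started.pop(pid)
            results.append((vdi, (ts - t0).total_seconds()))
    return results


# Three entries copied from the log posted earlier in the thread.
sample = (
    "Jul 26 22:33:50 npb7 SMGC: [2251795] Running VHD coalesce on 82664934(60.000G/2.914G?) "
    "Jul 26 22:34:02 npb7 SMGC: [2251795] _doCoalesceLeaf: EXCEPTION <class 'util.SMException'>, Timed out "
    "Jul 26 22:34:02 npb7 SMGC: [2251795] *** UNDO LEAF-COALESCE"
)

for vdi, seconds in timed_out_coalesces(split_entries(sample)):
    print(f"{vdi}: gave up after {seconds:.0f} s")
```

If the elapsed time is always about the same short window even on an idle VM, that would point at the timeout and storage throughput rather than at guest write load.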