@paco Oh, that's great information, thank you. I'd much rather reset that flag instead of constantly adjusting the fans.

Posts

-

RE: Seeking advice on debugging unexplained change in server fan speed

-

Cool way to indicate retention reasons on backups

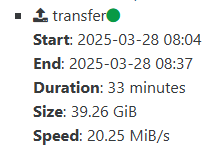

I recently implemented Kopia to replace Duplicati on some systems in my network. Now that I have more than a week of backups, I have gained appreciation for the retention tags they use in the KopiaUI program.

It does a good job of indicating why this version is being retained while also showing how many copies it is maintaining at each retention level. Really handy.

I just noticed the pin to the right. That lets you pin the snapshot so it won't be removed during cleanup. That's kinda cool to be able to single one out to hang on a bit longer, maybe while investigating something I suspect went wrong after that snapshot.

I'm not shilling for Kopia, I haven't used it enough to be sure I trust it yet. I am hoping XO could blatantly steal this kind of idea for backups, especially delta backups. I often get confused about how to get the retention I want when configuring backups and when I search for answers I see other people are also confused.
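To make the idea concrete, here is a minimal sketch of how retention tags like these could be computed. It is not Kopia's or XO's actual algorithm. The function name, the parameters, and the "newest snapshot per calendar period" rule are all assumptions for illustration, with tag names loosely modeled on what KopiaUI displays.

```python
from collections import defaultdict
from datetime import datetime

def retention_tags(snapshots, keep_latest=3, keep_daily=7, keep_weekly=4, keep_monthly=12):
    """Return {snapshot_time: [tags]} for the snapshots this toy policy keeps.

    Hypothetical helper: a snapshot with no tags would be eligible for cleanup.
    """
    ordered = sorted(snapshots, reverse=True)  # newest first
    tags = defaultdict(list)

    # The N most recent snapshots are always kept.
    for i, snap in enumerate(ordered[:keep_latest], start=1):
        tags[snap].append(f"latest-{i}")

    # For each calendar period, keep the newest snapshot that falls in it.
    rules = [
        ("daily", keep_daily, lambda t: t.date()),
        ("weekly", keep_weekly, lambda t: tuple(t.isocalendar())[:2]),
        ("monthly", keep_monthly, lambda t: (t.year, t.month)),
    ]
    for name, limit, bucket_of in rules:
        seen = []
        for snap in ordered:
            bucket = bucket_of(snap)
            if bucket in seen:
                continue  # an newer snapshot already represents this period
            if len(seen) == limit:
                break  # this retention level is full
            seen.append(bucket)
            tags[snap].append(f"{name}-{len(seen)}")
    return dict(tags)
```

With ten daily snapshots and a policy of 2 latest, 3 daily, 2 weekly and 1 monthly, only four snapshots end up tagged, and the newest one carries a tag from every level. That is the same "why is this one kept" information the UI shows.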

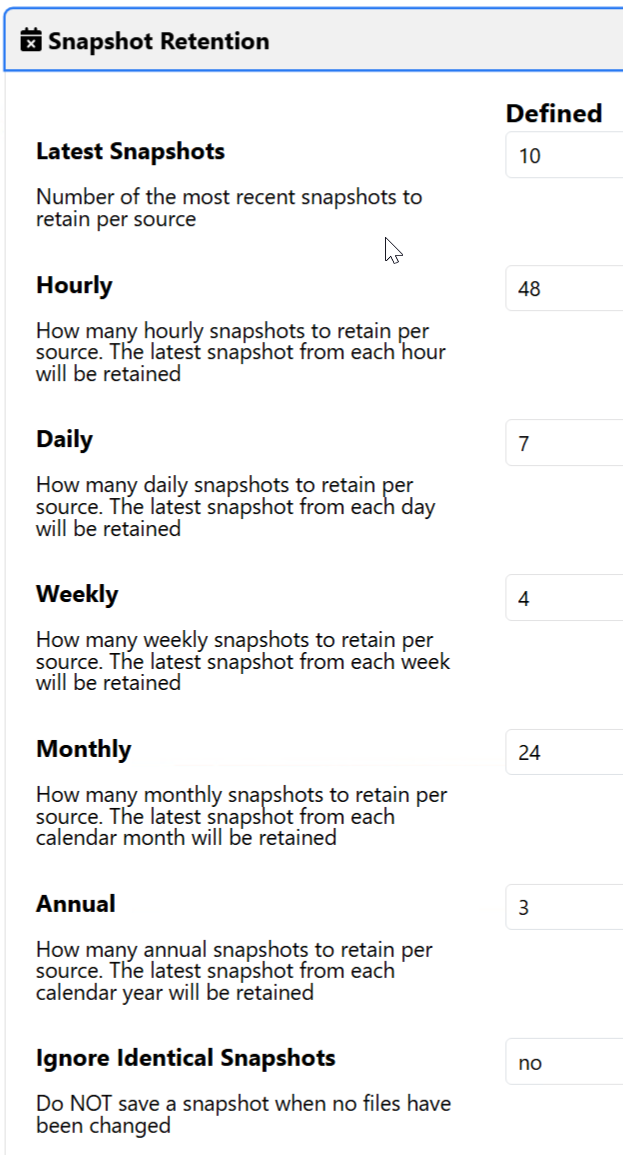

I guess for the sake of completeness, this is how KopiaUI lets me configure retention:

-

RE: Xen Orchestra from Sources unreachable after applying XCPng Patch updates

@JamfoFL Thank you for the follow-up. Now I feel like I can install those patches. Bonus that it appears there are 23 patches now rather than the 22 I saw last week. Now I'm glad I waited, though I think the newest patch doesn't apply to my hardware anyway.

-

RE: How do I diagnose a missing VDI error with Mirror backups

Thank you @florent. I'll do that.

Update: It worked.

-

RE: How do I diagnose a missing VDI error with Mirror backups

Those 5 mirror backups continued to fail over the weekend.

The good news is that the normal delta backups succeeded over the weekend and didn't have any of the random failures I've been seeing.

-

How do I diagnose a missing VDI error with Mirror backups

I have a couple Delta backups that target the same remote. Yesterday I set up a Mirror backup between that remote and another remote. When I ran the Mirror backup yesterday, it was successful.

Today 7 of the VMs succeed in the backup but 5 of them fail with:

Missing vdi <guid> which is a base for a delta

Could this be caused by the fact that the target for the Mirror backup used to be a target for the Delta backup, so it already has files on it? Do I need to purge the folder entirely? I thought keeping the existing files might give it a head start. The reason I'm using the Mirror backup is that the Delta backup used to fail randomly on random VMs when backing up to both remotes.

How would I go about diagnosing this? I assume since the guid for the vdi is missing, it's going to be hard for me to find since I can't find something that's missing. Alternatively, maybe it's because it's currently a weird mix of old Delta backups and new Mirror backups and maybe over the next few days it will purge enough old stuff that it will start working cleanly.

Should I delete the entire remote target of the Mirror to get it started cleanly? Or, more likely, just delete all backups on that Mirror for the 5 VMs that failed with this error?

-

RE: Xen Orchestra from Sources unreachable after applying XCPng Patch updates

@JamfoFL Any luck getting this to work? I've put off installing the patches because I don't want to lose my XO installs.

-

RE: Can I rsync backups to another server rather than using two remotes?

@olivierlambert I totally forgot about mirror backups, thanks for the reminder.

I see that a mirror will let me have different backup retention on the mirror target than I do on the mirror source. That's fantastic in my case because the mirror source has less storage space than the mirror target. Using rsync would have limited the backup retention on the Synology.

Now can you tell me how to recover the hours I spent figuring out I couldn't get it working to pull from the Synology side then the time to figure out how to schedule tasks in UNRAID and learn rsync and confirm it would do what I want it to do?

-

Can I rsync backups to another server rather than using two remotes?

I have two remotes that I use for delta backups. One is on a 10Gbe network and the other only has a single 1Gbe port. Since XO writes those backups simultaneously, the backup takes a really long time and frequently randomly fails on random VMs.

I was thinking it would be better if I have XO perform the backup to the 10Gbe remote running UNRAID then schedule an rsync job to mirror that backup to the 1Gbe remote on a Synology array. I've already NFS mounted the Synology share to UNRAID and confirmed I can rsync to it from a prompt, I just need to create a cron job to do it daily. In addition to, hopefully, preventing the random failures, it would let XO complete the backup a lot faster and my UNRAID server and Synology will take care of the rest, reducing the load on my main servers during the workday. The current backup takes long enough that it sometimes spills into the workday.

I'm wondering if that will cause any restore problems. When XO writes to two remotes, does it write exactly the same files to both remotes, such that it can restore from the secondary even if it never wrote the files to that remote itself? I would assume they are all the same but want to make sure I don't create a set of backup files that won't be usable. Granted, I can, and will, test a restore from that other location but I don't want to destroy all the existing backups on that remote if this would be a known-bad setup.

Note, I did a --dry-run with rsync and see quite a few files that it would delete and a bunch it would copy. That surprises me because that is still an active remote for the delta backups. I'm suspicious that the differences are due to previously failed backups.
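One way to look into those differences before letting rsync change anything is to list what differs between the two backup folders. The sketch below is a rough stand-in for that comparison, not a replacement for rsync. The function names are hypothetical, and it only compares relative paths and file sizes (rsync's default quick check also looks at modification times).

```python
import os

def list_files(root):
    """Map each file's path (relative to root) to its size in bytes."""
    found = {}
    for dirpath, _dirs, files in os.walk(root):
        for name in files:
            full = os.path.join(dirpath, name)
            found[os.path.relpath(full, root)] = os.path.getsize(full)
    return found

def compare_trees(source, target):
    """Report which files differ between two directory trees."""
    src, dst = list_files(source), list_files(target)
    return {
        # Files a mirror run would need to copy to the target.
        "only_in_source": sorted(set(src) - set(dst)),
        # Files a mirror run with deletion enabled would remove.
        "only_in_target": sorted(set(dst) - set(src)),
        # Files present on both sides but with different sizes.
        "size_differs": sorted(p for p in set(src) & set(dst) if src[p] != dst[p]),
    }
```

Calling `compare_trees` on the two mount points (whatever paths they have on your system) and checking whether the "only in target" entries line up with the dates of failed backup runs would help confirm or rule out that suspicion.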

-

RE: Delta backups (NBD) stuck on "Exporting content of VDI Backups.." failing after upgrade from 8.3 RC2 to 8.3 final.

This happens to me on 8.2 with one specific VM. It doesn't always happen but about once a week (it feels like) the backup will get stuck in Starting and it's always, or almost always, the same VM it's stuck on. I usually reboot the VM running XO and that makes the backup task change to Interrupted and then the next scheduled backup usually works.

Would be nice to know if there's something that's causing this that I can try to fix. Not a world-ending problem but it's a nuisance since it stops all future backups for that Job until it's resolved. I'm happy that all the other VMs in that job get backed up at least.

-

RE: Our future backup code: test it!

@flakpyro Oh thank you. I try to go through every update post but I must have missed that one.

It worked

Makes a big difference for this VM if I back up a partially filled 250GB disk vs a largely full 8,250GB set of disks when 8TB of that doesn't need to be backed up.

-

RE: Our future backup code: test it!

Will this add the ability to control which disks on the VM will be backed up? I'd love to be able to select specific disks on a VM to back up and leave others out.

Maybe configured at the VM level rather than the backup level. Flag a disk as not needing backup and then the regular backup procedure would ignore it. However, I could also see why it might be better to control it by creating a specific backup for that VM so you could have different backup schedules, some that back up those extra disks and some that don't. I have no need to ever back up the extra disks at the moment though.

-

RE: Help: Clean shutdown of Host, now no network or VMs are detected

Looking through the kern.log files I found stuff I thought might be interesting but as I scroll, I see it happening a lot so I then wonder if it's normal. I see sets of three events:

Out of memory: Kill process ###

Killed process ###

oom_reaper: reaped process ###

I wonder if it was starving for memory and having to kill off processes to survive then eventually died. I see these 90+ times in one log file and over 200 times in another. Don't know if this is just normal activity or indication of a problem.
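To judge whether the kills are spread around or always hit the same thing, it can help to tally them per process. This is a small sketch that assumes the usual kernel wording, where "Killed process" is followed by the PID and the process name in parentheses. The function name and the sample process names used to test it are made up.

```python
import re
from collections import Counter

# Assumed line shape: "... Killed process 1234 (some-name) total-vm:..."
KILLED = re.compile(r"Killed process (\d+) \(([^)]+)\)")

def count_oom_kills(lines):
    """Count OOM kills per process name from an iterable of log lines."""
    counts = Counter()
    for line in lines:
        match = KILLED.search(line)
        if match:
            counts[match.group(2)] += 1
    return counts
```

Feeding it an open kern.log file (a file object iterates line by line) and printing `counts.most_common()` shows which processes are being sacrificed most often.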

-

RE: Help: Clean shutdown of Host, now no network or VMs are detected

@olivierlambert Having trouble finding the reboot in the log files because I don't know what to look for. I have nearly 1GB of log files from that day and unfortunately, I don't recall when the reboot happened. Is there something I can grep for in the log that would indicate the reboot and I can backtrack from there?
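One marker that may help: on most Linux systems the kernel's first message at boot starts with "Linux version", so every occurrence in the kernel log should correspond to a boot. The sketch below scans a log folder for that string. The function names and the `kern.log*` file pattern are assumptions, and the marker itself should be checked against a known boot in your own logs.

```python
import gzip
from pathlib import Path

BOOT_MARKER = "Linux version"  # assumed to be logged once per boot

def open_log(path):
    """Open a plain or gzip-compressed log file as text."""
    if path.suffix == ".gz":
        return gzip.open(path, "rt", errors="replace")
    return open(path, "rt", errors="replace")

def find_boots(log_dir, pattern="kern.log*"):
    """Return (file name, line number, line) for every boot marker found."""
    hits = []
    for path in sorted(Path(log_dir).glob(pattern)):
        with open_log(path) as handle:
            for number, line in enumerate(handle, start=1):
                if BOOT_MARKER in line:
                    hits.append((path.name, number, line.rstrip()))
    return hits
```

Each hit gives a file and line number to backtrack from, so the lines just before it are the last things logged before the reboot.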

Tried feeding the GB of log files to Phi4 in my Ollama server but so far it has not been any help finding anything either. Well, it found how to make my office a lot louder by running the server fans at full speed for a few minutes but that wasn't helpful.

-

RE: What's the recommended way to reboot after applying XCP-ng patches?

@DustinB Yeah, that's what I will try to remember to do next time. This is still worlds easier for me than trying to keep my ESXi hosts up to date was back when I used them. So very much better than that. I very rarely updated those at all.

-

RE: Seeking advice on debugging unexplained change in server fan speed

So, a bit after I originally posted this, one of the two servers' fans slowed back down and I don't know why. I only noticed it weeks later.

Then this morning we had a power outage and all the servers were shut down. When I booted them back up when power was restored, the other server was running the fans at normal speed. No idea why it went back, I didn't do anything to fix it.

After that reboot though, a single fan in the server that originally didn't have the problem is now running fast. That makes me wonder if the fan is failing so I'm looking to find some spares to keep around.

Any future reboots are going to make me a bit stressed wondering if the fans will speed up again.

I did install the pool patches today and that reboot didn't impact the fans, thankfully. I wish I understood what happened but if it happens again I might use this docker container to take over control of them: https://github.com/tigerblue77/Dell_iDRAC_fan_controller_Docker

-

RE: What's the recommended way to reboot after applying XCP-ng patches?

With the newest patches I decided to try the way you guys suggested. Install Pool Patches followed by Rolling Pool Reboot.

The Rolling Pool Reboot gives me an error:

CANNOT_EVACUATE_HOST(VM_REQUIRES_SR:<OpaqueRef guid list>

So, it appears that I can't use that when I'm running local storage. I tried just rebooting the master and got the same error.

Shutting down all the VMs first allowed me to use the Rolling Pool Reboot. That's still better than my prior attempts. I only have about a dozen VMs running on three servers so it's not a huge deal to shut them down individually. However, it rebooted the master then rebooted one of the other hosts, then when it tried to reboot the third host I got the CANNOT_EVACUATE_HOST error again because the master had restarted all the VMs on the third host.

I think I'll just need to install the patches on the pool, disable all the hosts, reboot the pool then enable the hosts again. That's probably the smoothest method without having shared storage.

-

RE: Help: Clean shutdown of Host, now no network or VMs are detected

@olivierlambert I do have a /var/crash folder but it has nothing in it except a file from a year ago named .sacrificial-space-for-logs.

The Xen logs are verbose. Any suggestions of text to grep for to find what I'm looking for, other than the obvious "error"?

Currently looking in xensource.log.* for error lines to see if I can figure anything out.

-

RE: Help: Clean shutdown of Host, now no network or VMs are detected

Just want to document that this happened again, on the same host.

My XO (from source), which manages my backups, ran the backups last night. I know it was active up until at least around 5:30am but by the time I got into the office it was inaccessible by browser, ssh and ping. Other XO instances showed that it was running but the Console tab didn't give me access to its console.

A few hours later I found that other VMs on that same host had become inaccessible in the same fashion and also had no console showing in XO.

An hour or two later I found that XO showed the host as being missing from the pool, which it had not been earlier in the day. When I checked the physical console for that host, I found it had red text "<hostname> login: root (automatic login)" and did not respond to the keyboard other than putting more red text on screen of whatever I typed. I hit ctrl-alt-del and it didn't seem to do anything so I typed random things, then XCP-ng started rebooting. I'm guessing it was 60 to 120 seconds after my first ctrl-alt-delete.

When it came back up it could not find the pool master and said it had no network interfaces. I was able to solve it by doing another emergency network reset.

Would be nice if this wouldn't happen, makes me super nervous about stability. Thankful that the two times this has happened, it was on my least mission critical server. However, it's the server that handles backups so it's still stressful to have it go down. Also makes me wonder if there might be something wrong with that server's hardware.

-

RE: Common Virtualization Tasks in XCP-ng

A powershell SDK? Oh, you just made me a rather happy guy.