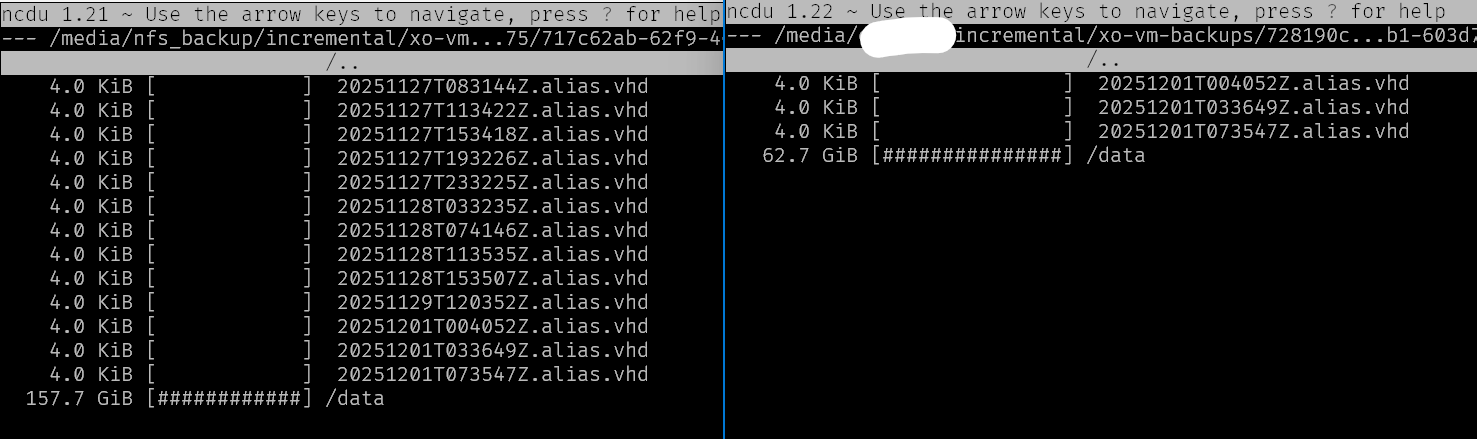

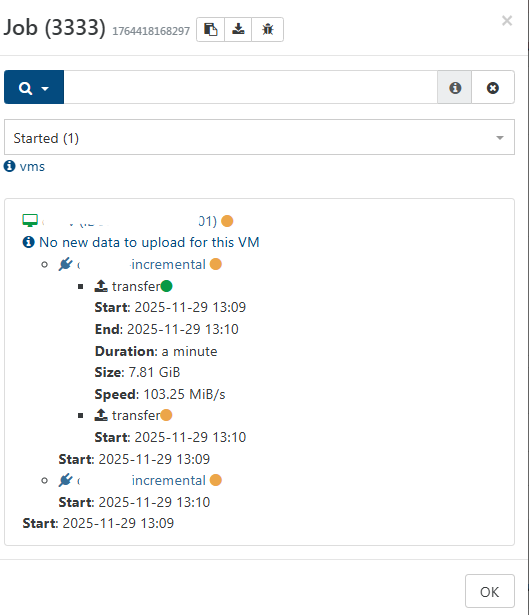

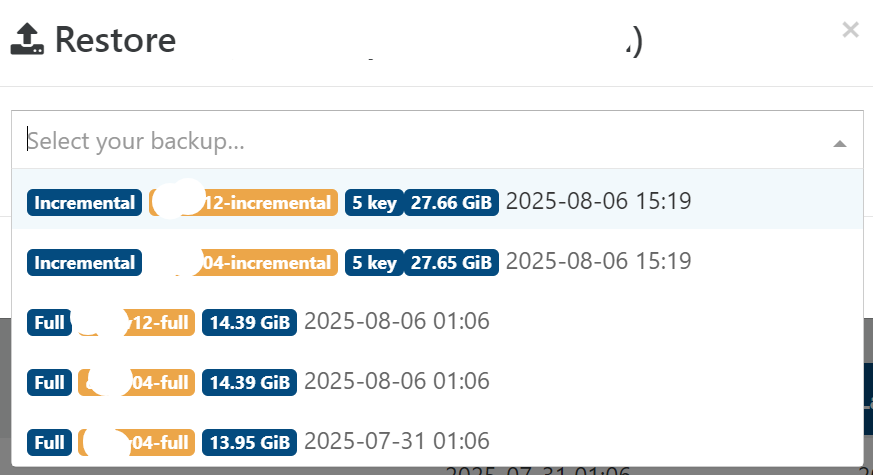

It looks like the job transfers one backup, deletes it, transfers the next backup, deletes that one too, and so on. This seems rather inefficient for full backups. I can understand that it has to transfer the full chain when dealing with incremental backups, even if it has to prune and merge them afterwards.

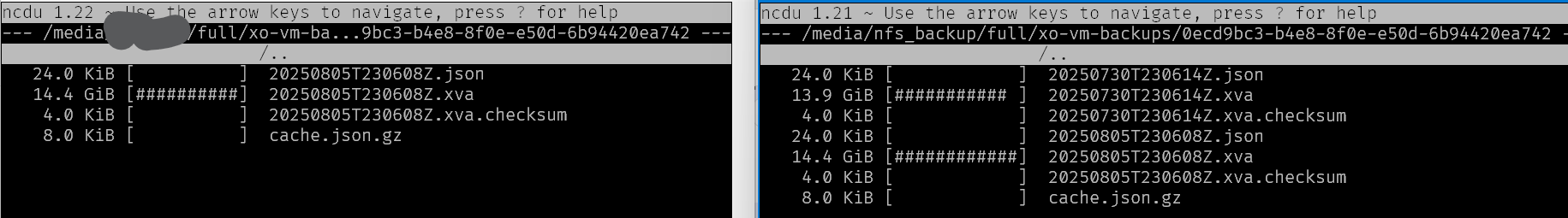

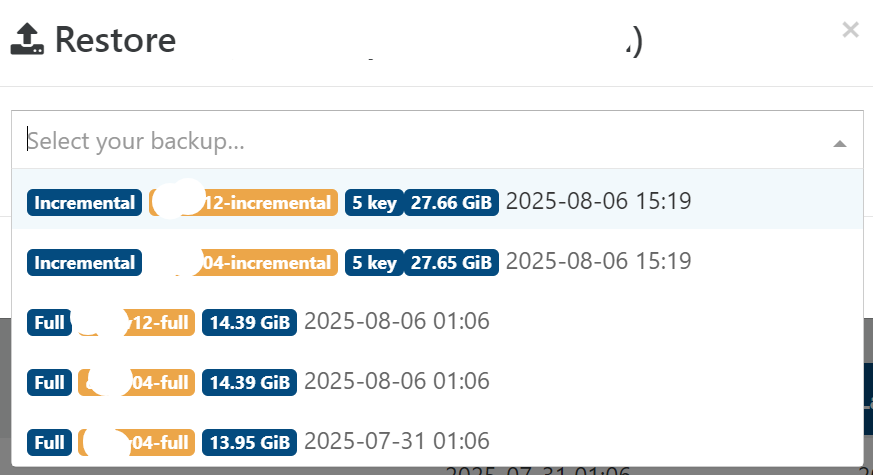

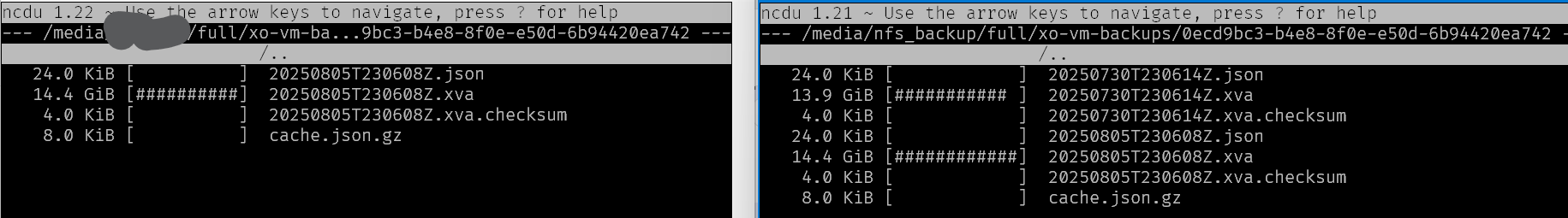

I also notice that even though I set the retention to 1000 in the full mirror job, not all backups are copied:

    {
      "data": {
        "type": "VM",
        "id": "0ecd9bc3-b4e8-8f0e-e50d-6b94420ea742"
      },
      "id": "1764584306124",
      "message": "backup VM",
      "start": 1764584306124,
      "status": "success",
      "infos": [
        {
          "message": "No new data to upload for this VM"
        },
        {
          "message": "No healthCheck needed because no data was transferred."
        }
      ],
      "tasks": [
        {
          "id": "1764584306137:1",
          "message": "clean-vm",
          "start": 1764584306137,
          "status": "success",
          "end": 1764584306150,
          "result": {
            "merge": false
          }
        }
      ],
      "end": 1764584306151
    },
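
In case it helps anyone check their own job logs, here is a rough Python sketch of how the skipped VMs could be listed. It assumes the per-VM entries have the same shape as the one above (`data.id` plus an `infos` list of messages). The function name `skipped_vm_ids` and the sample variable are my own, not part of XO.

```python
import json

# Message text taken verbatim from the log entry above.
SKIP_MESSAGE = "No new data to upload for this VM"


def skipped_vm_ids(vm_tasks):
    """Return the ids of VM entries whose infos contain the skip message."""
    skipped = []
    for task in vm_tasks:
        messages = [info.get("message") for info in task.get("infos", [])]
        if SKIP_MESSAGE in messages:
            skipped.append(task.get("data", {}).get("id"))
    return skipped


# Sample entry abridged from the log entry above (assumed to sit in a list).
sample = json.loads("""
[
  {
    "data": {"type": "VM", "id": "0ecd9bc3-b4e8-8f0e-e50d-6b94420ea742"},
    "message": "backup VM",
    "status": "success",
    "infos": [
      {"message": "No new data to upload for this VM"},
      {"message": "No healthCheck needed because no data was transferred."}
    ]
  }
]
""")

print(skipped_vm_ids(sample))
```

Running this over the full list of per-VM entries should make it easy to see which VMs the mirror job considered already up to date, even though their status is reported as "success".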

️ XO 6: dedicated thread for all your feedback!