@sotero could you post the output of:

# Collect xen info

xl info

# Collect C-States info

xenpm start 3

?

/opt/xensource/libexec/xen-cmdline --set-dom0 "pcie_port_pm=off"

reboot

As another option, you can also test pcie_aspm=off, as we did before for the Nvidia GPU (edit: added the command to remove pcie_port_pm):

/opt/xensource/libexec/xen-cmdline --delete-dom0 "pcie_port_pm"

/opt/xensource/libexec/xen-cmdline --set-dom0 "pcie_aspm=off"

reboot
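After the reboot, one way to confirm that the dom0 kernel actually picked up the option is to look at its command line in /proc/cmdline. A minimal sketch follows; `has_kernel_param` is a hypothetical helper name, not an XCP-ng tool:

```shell
#!/bin/sh
# has_kernel_param PARAM [CMDLINE_FILE]
# Succeeds if PARAM appears as a whole word in the kernel command line.
# CMDLINE_FILE defaults to /proc/cmdline (hypothetical helper for illustration).
has_kernel_param() {
    param=$1
    file=${2:-/proc/cmdline}
    # Split the command line on whitespace and look for an exact match.
    tr -s ' \t' '\n\n' < "$file" | grep -Fxq -- "$param"
}

# Usage, run in dom0 after the reboot:
#   has_kernel_param "pcie_aspm=off" && echo "pcie_aspm=off is active"
```

The match is on the whole word, so `pcie_aspm` alone will not match `pcie_aspm=off`.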

Tux

@steff22 I'm seeing that this new card has a large BAR. Could you disable in the BIOS:

Tux

@steff22 said in Gpu passthrough on Asrock rack B650D4U3-2L2Q will not work:

03:00.0 VGA compatible controller: Advanced Micro Devices, Inc. [AMD/ATI] Navi 32 [Radeon RX 7700 XT / 7800 XT] (rev ff) (prog-if ff)

!!! Unknown header type 7f

Kernel driver in use: pciback

03:00.1 Audio device: Advanced Micro Devices, Inc. [AMD/ATI] Navi 31 HDMI/DP Audio (rev ff) (prog-if ff)

!!! Unknown header type 7f

Kernel driver in use: pciback

Something went wrong with the card detection. Since it's a big file, could you upload /var/log/kern.log (last button) instead of posting it?

Searching around some old forums for this error, it's said that if the system has the AMD iGPU + AMD dGPU combo, the iGPU takes precedence and powers down the dGPU. Verify in the BIOS (under Advanced > AMD PBS > Graphics or something like that) whether there's any configuration regarding this hybrid mode.

Those last messages you posted are normal (guest net and disk info).
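For reference, the `Unknown header type 7f` and `rev ff` values quoted above usually mean the PCI config space reads are returning all ones, which fits a device that is powered down or not responding. A small sketch to list the affected devices from `lspci -v` output (`list_unreadable_pci` is a hypothetical helper name):

```shell
#!/bin/sh
# list_unreadable_pci: reads `lspci -v` style text on stdin and prints the
# address of every device whose block contains "Unknown header type".
list_unreadable_pci() {
    awk '
        # A device block starts with an address such as 03:00.0 (optionally
        # prefixed with a PCI domain, e.g. 0000:03:00.0) at the start of a line.
        /^([0-9a-fA-F]+:)?[0-9a-fA-F][0-9a-fA-F]:[0-9a-fA-F][0-9a-fA-F]\.[0-7] / { dev = $1 }
        # Report each affected device once.
        /Unknown header type/ && dev != "" { print dev; dev = "" }
    '
}

# Usage in dom0:
#   lspci -k -v | list_unreadable_pci
```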

Tux

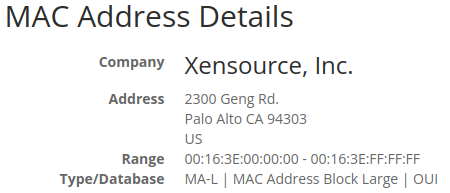

FYI, Xen has a registered OUI: 00:16:3E

edit: I didn't read @olivierlambert's previous post mentioning the OUI

Source: https://www.macvendorlookup.com
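If you want to check a MAC address against that prefix from a script, a minimal sketch could look like this (`is_xen_oui` is a hypothetical helper name; it only compares the first three octets):

```shell
#!/bin/sh
# is_xen_oui MAC: succeeds if MAC starts with the Xen OUI 00:16:3E.
is_xen_oui() {
    # Normalise to lower case and convert '-' separators to ':' before comparing.
    mac=$(printf '%s' "$1" | tr 'A-Z-' 'a-z:')
    case $mac in
        00:16:3e:*) return 0 ;;
        *)          return 1 ;;
    esac
}

# Usage:
#   is_xen_oui "00:16:3E:12:34:56" && echo "Xen OUI"
```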

@steff22 Any change made via the grub menu is non-persistent and will be reverted on the next (re)boot. It's useful for testing/troubleshooting purposes.

Regarding the dom0 memory increase, it was a simple boot test to rule out a possible lack of that resource. Given that IOMMU compat fix, I'd keep the default value for now.

For the new AMD dGPU failing, are there any PCI errors in dom0's /var/log/kern.log? Please post the output of lspci -k -v.

Tux

@steff22 Wow, great news! Kudos to the Xen & XCP-ng dev teams

@steff22 said in Gpu passthrough on Asrock rack B650D4U3-2L2Q will not work:

The bios disabled internal ipma when an Ext GPU card is connected even though int gpu is selected as primary gpu in the bios. So I only see xcp-ng startup on screen no xsconsole. Have tried without a screen connected extgpu same error then

I suggest contacting ASRock support and explaining this behavior.

@steff22 said in Gpu passthrough on Asrock rack B650D4U3-2L2Q will not work:

no. 2 Have tried pressing Detect only to be told that there is no more screen. Have only tried reboot

Could you try the shutdown/start after the driver installation?

@steff22 said in Gpu passthrough on Asrock rack B650D4U3-2L2Q will not work:

At first I thought there was something wrong with the bios. But this works with Vmware esxi and proxmox.

Considering it worked with the same XCP-ng version but on different hardware, I'm more inclined towards a Xen incompatibility issue with the combo of Nvidia + some AMD motherboards. If you search the forum, there are mixed results about that.

@steff22 I have some questions:

[Detect] button at the display settings window?

Nonetheless, if the same dGPU card works normally on another XCP-ng host, a possible Xen passthrough incompatibility with that AM5 board should be considered. For example:

Tux

@steff22 After reading this Blue Iris topic, I wonder if it's related. As of Xen 4.15, MSR handling changed in a way that can crash a guest that tries to access those registers. XCP-ng 8.3 ships Xen 4.17. The issue seems to depend on the CPU vendor and model too.

https://xcp-ng.org/forum/topic/8873/windows-blue-iris-xcp-ng-8-3

It's worth testing the solution provided there (a VM shutdown/start cycle is required for it to take effect):

xe vm-param-add uuid=<VM-UUID> param-name=platform msr-relaxed=true

Replace <VM-UUID> with the UUID of your W10 VM.

Tux

@steff22 weird bug. Is that W10 VM a fresh install on Xen? It seems that the driver or the dGPU are timing out somehow. Could be related to PCI power management (ASPM), but I'm not sure. You could try booting dom0 with pcie_aspm=off just for testing.

/opt/xensource/libexec/xen-cmdline --set-dom0 "pcie_aspm=off"

reboot

Another option that comes to mind is to compare the VM attributes on Proxmox and try to spot any VM config differences by setting/unsetting the PCI Express option.

Tux

@steff22 Ah, you should try to reproduce the BSOD and then run xl dmesg. I was wondering why there was no error in the log this time.

@steff22 Ok, let's boot Xen in verbosity=all mode:

/opt/xensource/libexec/xen-cmdline --set-xen "loglvl=all guest_loglvl=all"

reboot

After the VM BSODs, post the xl dmesg output.

Tux

@Teddy-Astie @steff22 For the Windows VM, Xen is indeed triggering a guest crash:

(XEN) [ 1022.240112] d1v2 VIRIDIAN GUEST_CRASH: 0x116 0xffffdb8ffaf76010 0xfffff8077938e9f0 0xffffffffc0000001 0x4
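The first value after `GUEST_CRASH:` appears to be the Windows bug check code. 0x116 is VIDEO_TDR_FAILURE, i.e. the display driver failed to recover from a timeout. A small sketch to pull that code out of the Xen log (`viridian_bugcheck` is a hypothetical helper name):

```shell
#!/bin/sh
# viridian_bugcheck: reads `xl dmesg` style text on stdin and prints
# "<domain/vcpu> <first crash parameter>" for each VIRIDIAN GUEST_CRASH line.
viridian_bugcheck() {
    awk '
        /VIRIDIAN GUEST_CRASH:/ {
            for (i = 3; i < NF; i++) {
                if ($i == "GUEST_CRASH:") {
                    # Two fields back is the domain/vCPU tag (e.g. d1v2);
                    # the next field is the first crash parameter.
                    print $(i - 2), $(i + 1)
                }
            }
        }
    '
}

# Usage in dom0:
#   xl dmesg | viridian_bugcheck
```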

I also noticed that dom0 memory was autoset to only 2.6G (out of 32G total), which might be low for a more resource-hungry dGPU. Before booting Xen in debug mode, could we rule this out by testing a non-persistent boot change to a higher value (eg. 8G)?

Press <e> to edit the boot line

Set dom0_mem=8192M,max:8192M

Press <F10> to boot

Check the dom0 memory (free -m). It must be within the 7000-8000 range.

Tux
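The 7000-8000 range check can also be scripted. A minimal sketch, assuming the usual `free -m` layout where the total is the second column of the `Mem:` line (`dom0_mem_in_range` is a hypothetical helper name):

```shell
#!/bin/sh
# dom0_mem_in_range [MIN_MB] [MAX_MB]: reads `free -m` output on stdin and
# succeeds if the total on the "Mem:" line lies within [MIN_MB, MAX_MB].
# Defaults follow the 7000-8000 range mentioned above.
dom0_mem_in_range() {
    min=${1:-7000}
    max=${2:-8000}
    awk -v min="$min" -v max="$max" '
        $1 == "Mem:" { total = $2; found = 1 }
        # Exit status 0 only if a Mem: line was seen and the total is in range.
        END { exit !(found && total >= min && total <= max) }
    '
}

# Usage in dom0 after booting with the edited line:
#   free -m | dom0_mem_in_range && echo "dom0 memory looks right"
```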

@steff22 what's the output of lspci -k and xl pci-assignable-list ?

Also, the outputs of the system logs re. GPU and IOMMU initialization would be very useful:

egrep -i '(nvidia|vga|video|pciback)' /var/log/kern.log

xl dmesg

Tux

@steff22 Assuming xen-pciback.hide was previously set, could you try this workaround (no guarantee that it'll work, since every motherboard and BIOS has its quirks):

/opt/xensource/libexec/xen-cmdline --set-dom0 pci=realloc

reboot

@jshiells said in MAP_DUPLICATE_KEY error in XOA backup - VM's wont START now!:

@tuxen no sorry, great idea but we are not seeing any errors like that in kern.log. this problem when it happens is across several xen hosts all at the same time. it would be wild if all of the xen hosts were having hardware issues during the small window of time this problem happened in. if it was one xen server then i would look at hardware but its all of them, letting me believe its XOA, a BUG in xcp-ng or a storage problem (even though we have seen no errors or monitoring blips at all on the truenas server)

In a cluster with shared resources, it only takes one unstable host or a crashed PID left with an open shared resource to cause some unclean state cluster-wide. If the hosts and VMs aren't protected by an HA/STONITH mechanism to automatically release the resources, a qemu crash might keep one or more VDIs in an invalid, blocked state and affect SR scans done by the master. Failed SR scans may prevent SR-dependent actions (eg. VM boot, snapshots, GC kicking in, etc).

I'm not saying there isn't a bug somewhere but running MEMTEST/CPU tests on the host that triggered the bad RIP error would be my starting point of investigation. Just to be sure.

@jshiells Did you also check /var/log/kern.log for hardware errors? I'm seeing the qemu process crashing with a bad RIP (instruction pointer) value, which screams hardware issue, IMO. A single bit flip in memory is enough to cause unpleasant surprises. I hope the servers are using ECC memory. I'd run a memtest and some CPU stress tests on that server.

Some years ago, I had a two-socket Dell server with one bad core (no errors reported at boot). When the Xen scheduler ran a task on that core... Boom. Host crash.
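As a starting point for the kern.log check, something like the sketch below can surface the usual hardware-error markers. The pattern list is an illustrative assumption, not an exhaustive catalogue, and `scan_hw_errors` is a hypothetical helper name:

```shell
#!/bin/sh
# scan_hw_errors: reads kernel log text on stdin and prints the lines that
# match a few common hardware-error markers (machine checks, EDAC reports,
# uncorrected/uncorrectable errors). Returns non-zero if nothing matches.
scan_hw_errors() {
    grep -Ei 'machine check|\[hardware error\]|mce:|edac|uncorrect(ed|able)'
}

# Usage in dom0:
#   scan_hw_errors < /var/log/kern.log
```

An empty result doesn't prove the hardware is healthy, so memtest and CPU stress tests are still worth running.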

@cunrun @jorge-gbs any init errors in dom0 /var/log/kern.log re. the GIM driver? Also, if you search some topics here covering this specific GPU, there were mixed results booting dom0 with pci=realloc,assign-busses. Maybe it's worth a try.

@KPS one thing is clear to me. The reboot is triggering a VM shutdown due to a system crash (kernel errors and memory dump files being a lead). Without a detailed stack trace (like Linux's kernel panic), and given the difficulty in reproducing the issue, troubleshooting is a very hard task. One last thing I'd check is /var/log/daemon.log around the time of the VM shutdown.