XO - Files Restore

-

That's 100% unrelated. If you do file level restore, you need to have an XO environment that's able to mount volumes from LVM. That's why I said you should test with XOA to validate your environment (just import a fresh one, connect to your backup repo and try to file restore).

-

@xcplak you can try installing the lvm2 package on your XO from sources.
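Before reinstalling anything, it can help to confirm that the LVM command-line tools are visible to the user running xo-server. The following is a minimal sketch; the tool list is an assumption about what the lvm2 package provides, and `missing_lvm_tools` is a hypothetical helper name, not part of XO.

```python
import shutil

# Assumed list of binaries provided by the lvm2 package; adjust as needed.
LVM_TOOLS = ("pvs", "vgs", "lvs", "vgchange")


def missing_lvm_tools(tools=LVM_TOOLS):
    """Return the names from `tools` that cannot be found on PATH."""
    return [tool for tool in tools if shutil.which(tool) is None]


if __name__ == "__main__":
    missing = missing_lvm_tools()
    if missing:
        print("Not found on PATH:", ", ".join(missing))
    else:
        print("All listed LVM tools were found on PATH")
```

Note that the tools may be installed but absent from the PATH of a non-root user, so run the check as the same user that runs xo-server.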

-

Ok, now it's clear!

@AtaxyaNetwork

Already installed: lvm2 is already the newest version (2.03.16-2).

-

@olivierlambert

Just tried on XOA, and I can't mount the NFS share on it. I get: "Test remote Unsupported state or unable to authenticate data"

-

I just deployed a new NFS share for testing.

The share is mounted on both XO and the newly deployed XOA.

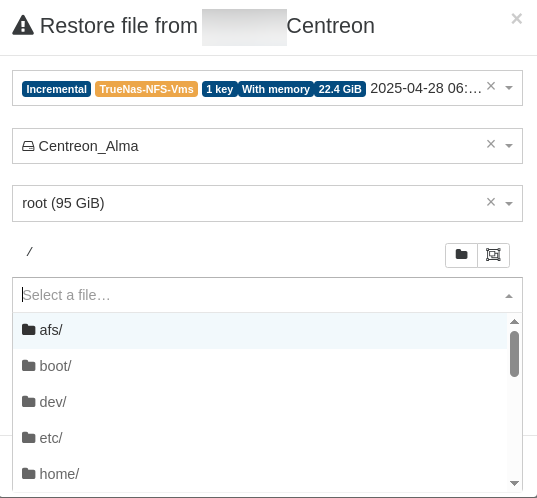

I backed up a VM that I can see the files in using XO.

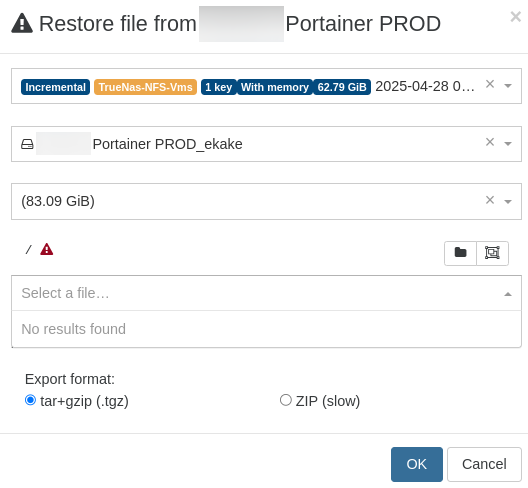

I faced the same issue with both XOA and XO: I can't see the files.

I backed up the same VM using XOA and tried to restore the files, with the same result.

-

You have a remote (backup repo) configuration issue. That's unrelated, check your permissions.

-

Why would this issue be related to my NFS share? I can access the share without any problems, read and write operations work fine. Other NFS shares with multiple applications running are also functioning normally.

I checked the XO logs and found the following:

xo-server[679]: 2025-04-29T21:45:58.276Z xo:api WARN | backupNg.listFiles(...) [114ms] =!> Error: Command failed: vgchange -ay vg_iredmail
xo-server[679]: File descriptor 22 (/var/lib/xo-server/data/leveldb/LOG) leaked on vgchange invocation. Parent PID 679: node
xo-server[679]: File descriptor 24 (/var/lib/xo-server/data/leveldb/LOCK) leaked on vgchange invocation. Parent PID 679: node
xo-server[679]: File descriptor 25 (/dev/fuse) leaked on vgchange invocation. Parent PID 679: node
xo-server[679]: File descriptor 26 (/var/lib/xo-server/data/leveldb/MANIFEST-000103) leaked on vgchange invocation. Parent PID 679: node
xo-server[679]: File descriptor 31 (/dev/fuse) leaked on vgchange invocation. Parent PID 679: node
xo-server[679]: File descriptor 34 (/var/lib/xo-server/data/leveldb/000356.log) leaked on vgchange invocation. Parent PID 679: node
xo-server[679]: WARNING: Couldn't find device with uuid oWTE8A-LNny-Ifsc-Jmtp-lTkB-dSjt-2AqhwY.
xo-server[679]: WARNING: VG vg_iredmail is missing PV oWTE8A-LNny-Ifsc-Jmtp-lTkB-dSjt-2AqhwY (last written to /dev/xvda4).
xo-server[679]: Refusing activation of partial LV vg_iredmail/lv_root. Use '--activationmode partial' to override.

To me, this looks more like an issue with file restore than the NFS itself.
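The relevant part of that log is buried under the "File descriptor ... leaked" noise. As a rough sketch, the snippet below pulls out only the lines that explain the failure: which volume group is missing a physical volume, and which logical volume LVM refused to activate. The regular expressions are written against the messages quoted above and are an assumption about their format, not a stable LVM interface.

```python
import re

# The three meaningful lines from the log above, with the syslog prefix removed.
LOG = """\
WARNING: Couldn't find device with uuid oWTE8A-LNny-Ifsc-Jmtp-lTkB-dSjt-2AqhwY.
WARNING: VG vg_iredmail is missing PV oWTE8A-LNny-Ifsc-Jmtp-lTkB-dSjt-2AqhwY (last written to /dev/xvda4).
Refusing activation of partial LV vg_iredmail/lv_root. Use '--activationmode partial' to override.
"""

MISSING_PV = re.compile(r"VG (\S+) is missing PV (\S+) \(last written to (\S+)\)")
PARTIAL_LV = re.compile(r"Refusing activation of partial LV (\S+)\.")


def summarize(log):
    """Return the missing PVs and the LVs that LVM refused to activate."""
    return {
        # Tuples of (volume group, PV uuid, device the PV was last seen on).
        "missing_pvs": [m.groups() for m in MISSING_PV.finditer(log)],
        "refused_lvs": PARTIAL_LV.findall(log),
    }


if __name__ == "__main__":
    print(summarize(LOG))
```

One possible reading of the result is that vg_iredmail spans more than one physical volume and only part of it was visible when `vgchange -ay` ran, which is why lv_root counts as a partial LV. That interpretation is a guess from the log alone.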

It seems similar to what's reported here:

https://github.com/vatesfr/xen-orchestra/issues/7029

-

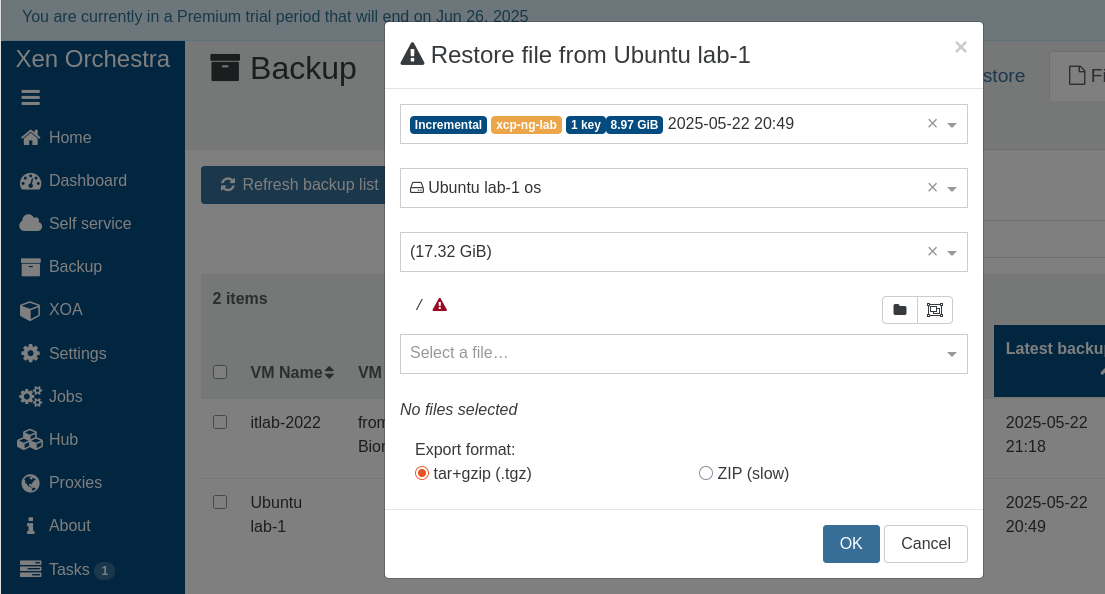

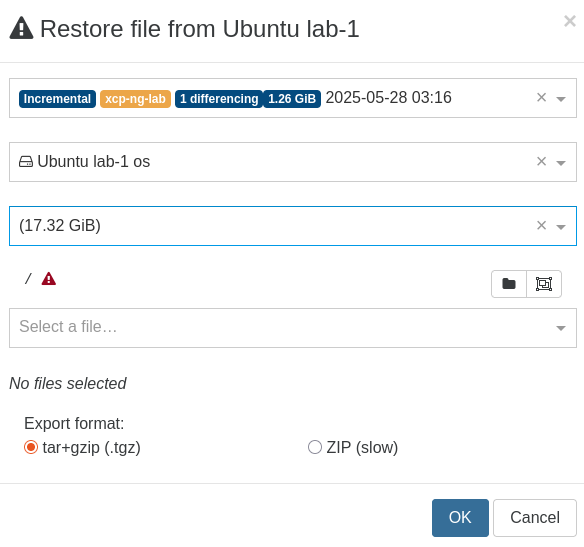

@xcplak for what it's worth, I confirm that file restore from LVM partitions does not work using XOA (I activated my trial just to test this):

-

From the log on the XOA-VM (/var/lib/xo-server/data/leveldb/001205.log):

{"method":"backupNg.listFiles","params":{"remote":"3f679c65-1290-4264-8ede-d91d08b2abf0","disk":"/xo-vm-backups/f94129f5-5c2c-6d6b-51e7-eeed24bf965b/vdis/6f239189-6971-4d3a-8e6a-5d43bd8bdce3/e163632c-9dca-4947-9c44-b2b794008294/20250522T184952Z.alias.vhd","path":"/","partition":"23a71973-6022-44f5-b51a-6d48b93c6cfd"},"name":"API call: backupNg.listFiles","userId":"3ffcc4df-8f09-41ce-99dc-8a1f1c568c56","type":"api.call"},"start":1748214110665,"status":"failure","updatedAt":1748214110991,"end":1748214110991,"result":{"code":32,"killed":false,"signal":null,"cmd":"mount --options=loop,ro,norecovery,sizelimit=18594398208,offset=2879389696 --source=/tmp/axvvrbout2/vhd0 --target=/tmp/4rksxkr68si","message":"Command failed: mount --options=loop,ro,norecovery,sizelimit=18594398208,offset=2879389696 --source=/tmp/axvvrbout2/vhd0 --target=/tmp/4rksxkr68si\nmount: /tmp/4rksxkr68si: unknown filesystem type 'LVM2_member'.\n dmesg(1) may have more information after failed mount system call.\n","name":"Error","stack":"Error: Command failed: mount --options=loop,ro,norecovery,sizelimit=18594398208,offset=2879389696 --source=/tmp/axvvrbout2/vhd0 --target=/tmp/4rksxkr68si\nmount: /tmp/4rksxkr68si: unknown filesystem type 'LVM2_member'.\n dmesg(1) may have more information after failed mount system call.\n\n at genericNodeError (node:internal/errors:984:15)\n at wrappedFn (node:internal/errors:538:14)\n at ChildProcess.exithandler (node:child_process:422:12)\n at ChildProcess.emit (node:events:518:28)\n at ChildProcess.patchedEmit [as emit] (/usr/local/lib/node_modules/xo-server/node_modules/@xen-orchestra/log/configure.js:52:17)\n at maybeClose (node:internal/child_process:1104:16)\n at Socket.<anonymous> (node:internal/child_process:456:11)\n at Socket.emit (node:events:518:28)\n at Socket.patchedEmit [as emit] (/usr/local/lib/node_modules/xo-server/node_modules/@xen-orchestra/log/configure.js:52:17)\n at Pipe.<anonymous> (node:net:343:12)\n at Pipe.callbackTrampoline (node:internal/async_hooks:130:17)"}}

-
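The key detail in this entry is that the partition was handed straight to `mount`, which reported the type `LVM2_member`. That is the signature of an LVM physical volume, which cannot be mounted as a filesystem: the volume group on it has to be activated first, and the logical volume inside is what gets mounted. The sketch below extracts the loop options from the logged command and flags that case. `parse_mount_options` is a hypothetical helper written for this log line only.

```python
def parse_mount_options(cmd):
    """Extract the comma-separated --options=... values from a mount command."""
    prefix = "--options="
    for token in cmd.split():
        if token.startswith(prefix):
            opts = {}
            for item in token[len(prefix):].split(","):
                key, _, value = item.partition("=")
                # Numeric values become ints, bare flags become True.
                opts[key] = int(value) if value.isdigit() else (value or True)
            return opts
    return {}


# Copied from the "cmd" and "message" fields of the log entry above.
CMD = (
    "mount --options=loop,ro,norecovery,sizelimit=18594398208,offset=2879389696 "
    "--source=/tmp/axvvrbout2/vhd0 --target=/tmp/4rksxkr68si"
)
MESSAGE = "mount: /tmp/4rksxkr68si: unknown filesystem type 'LVM2_member'."

opts = parse_mount_options(CMD)
is_lvm_pv = "unknown filesystem type 'LVM2_member'" in MESSAGE

if __name__ == "__main__":
    print("partition starts at byte", opts["offset"], "and is", opts["sizelimit"], "bytes long")
    print("partition is an LVM physical volume:", is_lvm_pv)
```

The offset and size identify where the physical volume sits inside the disk image, which is the information a manual restore would need.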

@peo Still an existing problem:

https://github.com/vatesfr/xen-orchestra/issues/7029

-

Pinging @lsouai-vates

-

@olivierlambert better ask @florent

-

@peo Thanks for the info!

I also tested it with XOA and got the same result.

However, when deploying the same VM without LVM, file restore worked perfectly with both XO and XOA.

It definitely seems related to the GitHub issue. In the meantime, I've switched to a commercial backup solution until it's resolved.

-

@xcplak With an LVM guest, you can restore the whole VM now. The backup data is actually saved correctly, and it is possible to manually restore a single file. So once XO is fixed, the existing backup data will still be good.
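The post does not say how the manual restore is done, so the following is only one plausible outline, not necessarily the procedure meant here. It builds (but does not run) the commands to expose the partition through a read-only loop device, activate the volume group, and mount a logical volume read-only. It assumes the backed-up disk is already available as a raw image; the image path, mount point and loop device name in the example are hypothetical placeholders, while the offset and size are the ones from the XOA log entry above.

```python
def manual_restore_plan(image, offset, sizelimit, vg, lv, mountpoint, loopdev="/dev/loopN"):
    """Return (setup, teardown) command lists for a manual file restore.

    Nothing is executed. `loopdev` is a placeholder: the real device name is
    printed by `losetup --find --show` when the first setup command is run.
    """
    setup = [
        # Expose only the LVM partition of the disk image, read-only.
        ["losetup", "--find", "--show", "--read-only",
         "--offset", str(offset), "--sizelimit", str(sizelimit), image],
        # Activate the volume group found on that loop device.
        ["vgchange", "-ay", vg],
        # Mount the logical volume; options mirror those in the logged mount command.
        ["mount", "-o", "ro,norecovery", f"/dev/{vg}/{lv}", mountpoint],
    ]
    teardown = [
        ["umount", mountpoint],
        ["vgchange", "-an", vg],
        ["losetup", "--detach", loopdev],
    ]
    return setup, teardown


if __name__ == "__main__":
    setup, teardown = manual_restore_plan(
        image="/path/to/disk.raw",      # hypothetical raw image of the backed-up disk
        offset=2879389696,              # from the logged mount command
        sizelimit=18594398208,          # from the logged mount command
        vg="ubuntu-vg",
        lv="ubuntu-lv",                 # hypothetical LV name
        mountpoint="/mnt/restore",      # hypothetical mount point
    )
    for cmd in setup + teardown:
        print(" ".join(cmd))
```

If the volume group name already exists on the machine doing the restore, activation will conflict, so a host without LVM volumes of its own is the safer place to try this.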

-

@Andrew

I'm not sure I understand what you meant.

Full VM restore is working; the issue is with file-level restore. So restoring a full 200 GB VM just to retrieve 2 or 3 files isn't really a viable solution.

-

Great that this bug/problem is being confirmed by others. Reproducing it is as simple as creating a new Linux VM (using only the defaults when installing Linux), backing it up, then trying to restore files.

Restoring single files is a feature that is at least needed in production environments (anything outside the "home lab"). Personally, I have no problem with waiting an hour or two for a full restore to a temporary VM to be able to access a file deleted or modified by mistake.

There are people willing to help find and pinpoint problems like this, but having to use XOA to get attention to problems, given that it requires a license beyond the free trial month, makes it less appealing for us to spend our free time helping with this.

-

I can confirm this as well.

Several new Ubuntu 24 VMs with LVM have this problem. I thought I was going crazy.

Existing Debian 10, 11 and 12 VMs using LVM are not affected.

VMs not using LVM are not affected.

-

@xcplak @peo @Andrew a reply from an XO dev:

"From Ubuntu 20 (I think), the default partition scheme includes an LVM volume group named ubuntu-vg. Any duplicate of this name will be unmountable in XO.

The first one will work, but not the next one, at least until the first is unmounted (after 10 minutes unused).

Decreasing this delay won't change the root cause, and will cause other issues when the user deselects/reselects a disk."

-
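To make the described behaviour concrete, here is a toy model of it: a volume group name can be held by only one backup at a time, and the name is released after 10 minutes without use. This is an illustration of the explanation quoted above, not XO's actual code; `VgRegistry` and its methods are hypothetical names.

```python
IDLE_TIMEOUT_S = 10 * 60  # "after 10 minutes unused", per the quote above


class VgRegistry:
    """Toy model: one active holder per volume group name."""

    def __init__(self):
        self._active = {}  # vg name -> (backup id, time of last use in seconds)

    def _expire(self, now):
        # Release every volume group that has been idle for the full timeout.
        for name, (_, last_used) in list(self._active.items()):
            if now - last_used >= IDLE_TIMEOUT_S:
                del self._active[name]

    def activate(self, vg_name, backup_id, now):
        """Return True if `backup_id` may use `vg_name` at time `now`."""
        self._expire(now)
        holder = self._active.get(vg_name)
        if holder is not None and holder[0] != backup_id:
            return False  # same VG name already held by another backup
        self._active[vg_name] = (backup_id, now)
        return True


if __name__ == "__main__":
    registry = VgRegistry()
    print(registry.activate("ubuntu-vg", "vm-a", now=0))    # first backup
    print(registry.activate("ubuntu-vg", "vm-b", now=60))   # second backup, same VG name
    print(registry.activate("ubuntu-vg", "vm-b", now=601))  # after the idle timeout
```

In this model the second backup is refused only because both guests were installed with the same default volume group name, which matches the reports that freshly installed Ubuntu VMs are the ones affected.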

@lsouai-vates Is this a description of the cause of the problem? From what you describe, if one selects a backup containing an LVM partition scheme, it should be mountable at least the first time?

As in here: I verified that this machine is using LVM first, and when selecting it, I immediately selected the large LVM partition to try to restore a file from that (which failed).

The machine (Debian 12) running XO does not itself use LVM, so "ubuntu-vg" should be free for mounting this first time.