XCP-ng 8.3 updates announcements and testing

-

@marcoi said in XCP-ng 8.3 updates announcements and testing:

also noticed a new issue: it seems the changes I had made to the /etc/xensource/usb-policy.conf file for USB were lost during the upgrade.

I have some USB comm devices I use with a Home Assistant VM and they were gone after the upgrade.

Any way to make those changes persist after an upgrade? Maybe make them options in the GUI so the config file always reflects the GUI settings?

This one is known. I had opened an issue about it, but it didn't get much traction yet. We also have a related item in our backlog, but it's a matter of finding resources to handle it.

-

@stormi https://xcp-ng.org/forum/post/101015 V kind, thanks

-

@stormi Yes, I'm also affected here, due to a USB device that's disabled by default.

-

I did post an alternative script here:

https://xcp-ng.org/forum/topic/8620/usb-passthrough-override-script-to-ensure-usb-policy-conf-consistency

But it was later removed at the request of @stormi.

I'm currently using my script, which basically backs up your settings and overrides the default file (after backing it up on first install) on every boot. I know it's a crude way to handle this, but it was only meant to be temporary until you guys find a solution.
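For readers wondering what such a boot-time override can look like, here is a minimal sketch. It is not the script from the linked topic. Only /etc/xensource/usb-policy.conf comes from this thread; the CUSTOM and BACKUP paths and the function name restore_usb_policy are hypothetical, and how it gets run at boot is left open.

```shell
#!/bin/sh
# Hypothetical sketch: re-apply a saved usb-policy.conf after an update replaced it.
# POLICY is the path named in this thread; CUSTOM and BACKUP are assumed locations.
POLICY="${POLICY:-/etc/xensource/usb-policy.conf}"
CUSTOM="${CUSTOM:-/root/usb-policy.custom.conf}"
BACKUP="${BACKUP:-/root/usb-policy.conf.orig}"

restore_usb_policy() {
    # Nothing to do if no custom copy has been saved.
    [ -f "$CUSTOM" ] || return 0
    # Keep a copy of the shipped file once, on the first run only.
    if [ -f "$POLICY" ] && [ ! -f "$BACKUP" ]; then
        cp -p "$POLICY" "$BACKUP"
    fi
    # Overwrite the active policy only when it differs from the custom copy.
    if ! cmp -s "$CUSTOM" "$POLICY"; then
        cp -p "$CUSTOM" "$POLICY"
    fi
}
```

Calling restore_usb_policy from a boot hook of your choice would restore the custom file after each update. As with the original script, anything this breaks is outside official support.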

I have reposted it.

Please note: issues caused by this script (if any) shall not be covered by the XCP-ng Support Team.

-

@gb.123 Please mention in your post that any issue caused by this script will not be covered by official support. The concerns I voiced then still hold.

-

Great Idea!

Post updated !

Update: I also added 'Automatic Backup' which backs up your original file in case something goes wrong.

-

New security and maintenance update candidates for you to test!

Security vulnerabilities have been detected and fixed for xen and varstored. We also publish other non-urgent updates which we had in the pipe for the next update release.

Security updates:

- xen:
  - XSA-477 / VSA-2026-001: A buffer overflow in the Xen shadow tracing code could allow a DomU virtual machine to crash Xen, or potentially escalate privileges.
  - XSA-479 / VSA-2026-003: Some Xen optimizations to avoid clearing internal CPU buffers when not required could allow one guest to leak data of another guest. A mitigation can be applied without the fix by rebooting vulnerable Xen with "spec-ctrl=ibpb-entry=hvm,ibpb-entry=pv" on the Xen command line, at the cost of decreased performance.
- varstored:
  - XSA-478 / VSA-2026-002: Within varstored, there were insufficient compiler barriers, creating TOCTOU issues with data in the shared buffer. An attacker with kernel level access in a VM can escalate privilege via gaining code execution within varstored.
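The XSA-479 mitigation above requires changing the Xen boot command line. One possible way to do that on a single host is sketched here. It assumes the xen-cmdline helper is present at /opt/xensource/libexec/xen-cmdline and accepts --set-xen; the APPLY variable and the mitigation_command function are illustrative names, not part of any tool. Hosts that install the fixed xen packages should not need this.

```shell
#!/bin/sh
# Sketch only: add the XSA-479 mitigation to the Xen command line on one host.
# Assumption: the xen-cmdline helper lives at this path and supports --set-xen.
XEN_CMDLINE="${XEN_CMDLINE:-/opt/xensource/libexec/xen-cmdline}"
MITIGATION='spec-ctrl=ibpb-entry=hvm,ibpb-entry=pv'

# Print the command that would be run, so it can be reviewed first.
mitigation_command() {
    printf '%s --set-xen %s\n' "$XEN_CMDLINE" "$MITIGATION"
}

# Dry run by default; set APPLY=1 to actually change the boot configuration.
if [ "${APPLY:-0}" = "1" ]; then
    "$XEN_CMDLINE" --set-xen "$MITIGATION"
    echo "Reboot the host for the new Xen command line to take effect."
else
    mitigation_command
fi
```

If the host already has a spec-ctrl option set, this would be expected to replace it, so check the current value first and merge the settings by hand if needed.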

Maintenance updates:

- guest-templates-json:
  - Update VM template labels
  - Sync RHEL10 template with XenServer's
- intel-microcode:
  - Update to publicly released microcode-20251111
  - Updates for multiple functional issues
- kernel: Bug fixes in the NFS and NBD stacks for various deadlocks and other race conditions.
- qemu: Backport for CVE-2021-3929, fixing a DMA reentrancy flaw in NVMe emulation, that could lead to use-after-free from a malicious guest and potential arbitrary code execution.
- smartmontools: Update to minor release 7.5
- swtpm: Synchronize with release 0.7.3-12 from XenServer. No functional changes.
- xapi: Fix regression on dynamic memory management during live migration, causing VMs not to balloon down before the migration.
- xcp-ng-release: Prevent remote syslog from being overwritten by system updates.

XOSTOR

In addition to the changes in common packages, the following XOSTOR-specific packages received updates:

- drbd: Reduces the I/O load and time during resync.
- drbd-reactor: Misc improvements regarding drbd-reactor and events
- linstor:
  - Resource delete: Fixed rare race condition where a delayed DRBD event causes "resource not found" ErrorReports
  - Misc changes to robustify LINSTOR API calls and checks

If you are using Xostor, please refer to our documentation for the update method.

Test on XCP-ng 8.3

    yum clean metadata --enablerepo=xcp-ng-testing,xcp-ng-candidates
    yum update --enablerepo=xcp-ng-testing,xcp-ng-candidates
    reboot

The usual update rules apply: pool coordinator first, etc.

Versions:

- guest-templates-json: 2.0.15-1.1.xcpng8.3
- intel-microcode: 20251029-1.xcpng8.3
- kernel: 4.19.19-8.0.44.1.xcpng8.3
- qemu: 4.2.1-5.2.15.2.xcpng8.3
- smartmontools: 7.5-1.xcpng8.3
- swtpm: 0.7.3-12.xcpng8.3
- xapi: 25.33.1-2.3.xcpng8.3
- xcp-ng-release: 8.3.0-36
- xcp-python-libs: 3.0.10-1.1.xcpng8.3
- xen: 4.17.5-23.2.xcpng8.3
- varstored: 1.2.0-3.5.xcpng8.3

XOSTOR

- drbd: 9.33.0-1.el7_9
- drbd-reactor: 1.9.0-1
- kmod-drbd: 9.2.16-1.0.xcpng8.3
- linstor: 1.33.0~rc.2-1.el8
- linstor-client: 1.27.0-1.xcpng8.3
- python-linstor: 1.27.0-1.xcpng8.3
- xcp-ng-linstor: 1.2-4.xcpng8.3

What to test

Normal use and anything else you want to test.

Test window before official release of the updates

2 days max.

-

Installed on my usual selection of hosts (a mixture of AMD and Intel hosts: SuperMicro, Asus, and Minisforum). No issues after a reboot; PCI passthrough, backups, etc. continue to work smoothly.

-

@gduperrey Standard XCP 8.3 pools updated and running.

-

Thank you everyone for your tests and your feedback!

The updates are live now: https://xcp-ng.org/blog/2026/01/29/january-2026-security-and-maintenance-updates-for-xcp-ng-8-3-lts/

-

@gduperrey updated 2 hosts @home. 5 @office.

Had to run yum clean metadata ; yum update on the CLI (cancelling to run RPU in XO) for updates to appear.

-

@gduperrey we had the XOA update alert, upgraded to XOA 6.1.0

but no sign of XCP hosts updates ?

When patches are available, it usually pops up on its own, is there something to do on cli now ?

EDIT : my bad, we had a DNS resolution problem... I now see a bunch of updates available...

-

Yesterday, from memory: Up-to-date XO (CE) said there were Pool updates available, but the three individual XCP-ng hosts showed nothing available. I went to https://xcp-ng.org/blog/tag/security/ and did not see any new patches published for January, and I feared that the previous updates from October had somehow not been fully installed. I put hosts into Maintenance Mode and rebooted them, and patches were seemingly installed as part of the reboot. I don't recall if I rebooted (and therefore patched) the Master first or not as you are supposed to do. This was a bit unsettling.

As of this morning, Central Time US, for our three-node XS 8.4 Pool also managed by the same XO (CE), I see patches available in XO both at the Pool level and at the Host level as expected. (Yesterday, I did not see any patches reported by XO for our XS 8.4 Pool.)

-

@robertblissitt You can check /var/log/yum.log on the XCP-ng hosts to see when the updates were actually applied, but there isn't anything in a standard installation of XO / XCP-ng that would trigger an "automated" update of missing patches.

-

@Danp Thank you, and I could easily be misremembering how the patches got installed - I may have clicked a button (at the Host level?) to install them even though I could not see any to install. The other events I mention, however, I am more certain of.
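For anyone wanting to do the /var/log/yum.log check suggested above, a small sketch follows. It assumes the usual yum.log line shape ("Mon DD HH:MM:SS Updated: <package>"); the list_updates function name and the YUM_LOG variable are illustrative.

```shell
#!/bin/sh
# Sketch: show when packages were installed or updated, based on yum's log.
# Assumes lines shaped like "Jan 29 10:12:33 Updated: <package>".
YUM_LOG="${YUM_LOG:-/var/log/yum.log}"

list_updates() {
    # $1: optional package-name filter (extended regex); defaults to all packages.
    grep -E '^[A-Z][a-z]{2} +[0-9]+ [0-9:]+ (Updated|Installed): ' "$YUM_LOG" \
        | grep -E -- "${1:-.}"
}
```

Running something like list_updates 'xen|varstored' on each host would show whether, and when, the January packages actually landed.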

-

applied latest patches to my two host pool without issue.

-

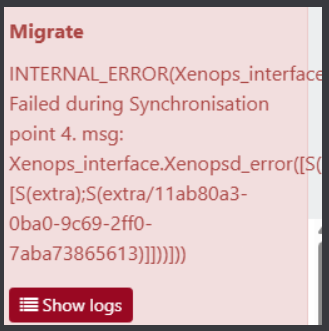

currently having heavy issues with a production cluster of 3 hosts

RPU launched, all VMs except one did evacuate the Master. we managed to shutdown this VM/restart it on another host

we had

Master patch & reboot proceeded

Then RPU tried to evacuate a slave host and all VMs are now locked; we can't shut them down or hard shut them down.

we have a critical VM on this host that is still running. We tried to snapshot it in case the host needs a hard reboot, but got OPERATION NOT SUPPORTED DURING AN UPGRADE

we manually installed patches on the host without rebooting, and then the snapshot proceeded

I hope this VM is secured by this snapshot...ticket is open with pro support but quite stalled for now... no news since yesterday Ticket#7751752

-

That's a weird one

Ping @Team-Hypervisor-Kernel

-

@olivierlambert shoutout to @danp that did a takeover of the incident ticket

he pointed me in the right direction to resolve the problem, and my production pool is back up & running with its VMs.

there was indeed a diff between what was seen by "xl list"/"xenops-cli list" and what was seen by XOA in the web ui.

a couple of "xl destroy pid" calls to destroy zombie VMs and a few toolstack restarts later, all is now up. I don't know how the hell a simple RPU got me into this situation though...
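To spot this kind of mismatch between Xen and XAPI, one read-only approach is to compare the UUIDs from xl vm-list with the VMs XAPI considers resident on the host. This is a sketch under assumptions, not what support ran here: the function names are made up, and it only reports differences. It destroys nothing.

```shell
#!/bin/sh
# Sketch: report domains Xen is running that XAPI does not list on this host.
# Read-only; review the output before considering any "xl destroy".

# Print UUIDs present in the first file but missing from the second.
# Both arguments are files with one UUID per line.
uuids_only_in_first() {
    work=$(mktemp -d) || return 1
    sort -u "$1" > "$work/first"
    sort -u "$2" > "$work/second"
    comm -23 "$work/first" "$work/second"
    rm -rf "$work"
}

# $1: UUID of the host this is run on (hypothetical helper, run in dom0).
report_stale_domains() {
    host_uuid="$1"
    tmp=$(mktemp -d) || return 1
    # Domains known to Xen, skipping the header line and dom0 (ID 0).
    xl vm-list | awk 'NR > 1 && $2 != 0 { print $1 }' > "$tmp/xen"
    # VMs XAPI believes are resident on this host.
    xe vm-list resident-on="$host_uuid" is-control-domain=false \
        params=uuid --minimal | tr ',' '\n' | sed '/^$/d' > "$tmp/xapi"
    uuids_only_in_first "$tmp/xen" "$tmp/xapi"
    rm -rf "$tmp"
}
```

A UUID printed here is only a candidate: a VM in the middle of a migration can legitimately show up for a short time, so it is worth checking with support before destroying anything.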

-

Oh wow. Indeed, that's strange. And big kudos to @danp then!!