@florent Awesome, I have patched a second location's XOA and am no longer experiencing the issue there either.

Posts

-

RE: XOA 6.2 parent VHD is missing followed by full Backup

-

RE: XOA 6.2 parent VHD is missing followed by full Backup

@florent Thanks for the quick work on this. I have installed the patch and am running a test job. So far I do not see the rename error in the logs. The only error I see is:

Feb 27 08:26:45 xoa xo-server[1792434]: 2026-02-27T14:26:45.711Z vhd-lib:VhdFile INFO double close {
Feb 27 08:26:45 xoa xo-server[1792434]: path: {
Feb 27 08:26:45 xoa xo-server[1792434]: fd: 18,
Feb 27 08:26:45 xoa xo-server[1792434]: path: '/xo-vm-backups/2e8d1b6c-5fe8-0f41-22b9-777eb61f582a/vdis/08dce402-b472-4fd5-ab38-549b4c263c31/6b1191c3-8052-4e16-9cfa-ede59bf790ea/20260131T040121Z.vhd'
Feb 27 08:26:45 xoa xo-server[1792434]: },
Feb 27 08:26:45 xoa xo-server[1792434]: firstClosedBy: 'Error\n' +
Feb 27 08:26:45 xoa xo-server[1792434]: ' at VhdFile.dispose (/usr/local/lib/node_modules/xo-server/node_modules/vhd-lib/Vhd/VhdFile.js:134:22)\n' +
Feb 27 08:26:45 xoa xo-server[1792434]: ' at RemoteVhdDisk.dispose (/usr/local/lib/node_modules/xo-server/node_modules/vhd-lib/Vhd/VhdFile.js:104:26)\n' +
Feb 27 08:26:45 xoa xo-server[1792434]: ' at RemoteVhdDisk.close (file:///usr/local/lib/node_modules/xo-server/node_modules/@xen-orchestra/backups/disks/RemoteVhdDisk.mjs:101:24)\n' +
Feb 27 08:26:45 xoa xo-server[1792434]: ' at RemoteVhdDisk.unlink (file:///usr/local/lib/node_modules/xo-server/node_modules/@xen-orchestra/backups/disks/RemoteVhdDisk.mjs:427:16)\n' +
Feb 27 08:26:45 xoa xo-server[1792434]: ' at RemoteVhdDiskChain.unlink (file:///usr/local/lib/node_modules/xo-server/node_modules/@xen-orchestra/backups/disks/RemoteVhdDiskChain.mjs:269:18)\n' +
Feb 27 08:26:45 xoa xo-server[1792434]: ' at #step_cleanup (file:///usr/local/lib/node_modules/xo-server/node_modules/@xen-orchestra/backups/disks/MergeRemoteDisk.mjs:279:23)\n' +
Feb 27 08:26:45 xoa xo-server[1792434]: ' at async MergeRemoteDisk.merge (file:///usr/local/lib/node_modules/xo-server/node_modules/@xen-orchestra/backups/disks/MergeRemoteDisk.mjs:159:11)\n' +
Feb 27 08:26:45 xoa xo-server[1792434]: ' at async _mergeVhdChain (file:///usr/local/lib/node_modules/xo-server/node_modules/@xen-orchestra/backups/_cleanVm.mjs:56:20)',
Feb 27 08:26:45 xoa xo-server[1792434]: closedAgainBy: 'Error\n' +
Feb 27 08:26:45 xoa xo-server[1792434]: ' at VhdFile.dispose (/usr/local/lib/node_modules/xo-server/node_modules/vhd-lib/Vhd/VhdFile.js:129:24)\n' +
Feb 27 08:26:45 xoa xo-server[1792434]: ' at RemoteVhdDisk.dispose (/usr/local/lib/node_modules/xo-server/node_modules/vhd-lib/Vhd/VhdFile.js:104:26)\n' +
Feb 27 08:26:45 xoa xo-server[1792434]: ' at RemoteVhdDisk.close (file:///usr/local/lib/node_modules/xo-server/node_modules/@xen-orchestra/backups/disks/RemoteVhdDisk.mjs:101:24)\n' +
Feb 27 08:26:45 xoa xo-server[1792434]: ' at file:///usr/local/lib/node_modules/xo-server/node_modules/@xen-orchestra/backups/disks/RemoteVhdDiskChain.mjs:85:52\n' +
Feb 27 08:26:45 xoa xo-server[1792434]: ' at Array.map (<anonymous>)\n' +
Feb 27 08:26:45 xoa xo-server[1792434]: ' at RemoteVhdDiskChain.close (file:///usr/local/lib/node_modules/xo-server/node_modules/@xen-orchestra/backups/disks/RemoteVhdDiskChain.mjs:85:35)\n' +
Feb 27 08:26:45 xoa xo-server[1792434]: ' at #cleanup (file:///usr/local/lib/node_modules/xo-server/node_modules/@xen-orchestra/backups/disks/MergeRemoteDisk.mjs:309:21)\n' +
Feb 27 08:26:45 xoa xo-server[1792434]: ' at async _mergeVhdChain (file:///usr/local/lib/node_modules/xo-server/node_modules/@xen-orchestra/backups/_cleanVm.mjs:56:20)'
Feb 27 08:26:45 xoa xo-server[1792434]: }

I'm guessing this is unrelated?

-

RE: XOA 6.2 parent VHD is missing followed by full Backup

Some additional information since my original post.

The first time a backup runs after upgrading, I see the log entries I posted above. The second time the backup runs, I see the messages in the screenshot I posted, and a full backup runs as the result. So it is easy to think everything is working fine after updating and running a job only once, unless you are watching xo-server via journalctl or run the job a second time. I have run into this across 2 XOA appliances as well as 2 XOCE installs, all on unrelated networks / locations.
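Since the first run only shows the problem in the journal, a quick count of the messages quoted in this thread can help spot it before the second run. This is a minimal sketch: the function name `count_merge_signatures` is made up for illustration, and the three patterns are taken only from the log excerpts in this thread, so other versions may word them differently.

```shell
# Count the three log signatures quoted in this thread in text read from stdin.
# Hypothetical helper; the patterns come from the excerpts posted here.
count_merge_signatures() {
    awk '
        /vhd-lib:VhdFile INFO double close/   { dc++ }
        /EBADF: bad file descriptor/          { ebadf++ }
        /xo:backups:mergeWorker FATAL ENOENT/ { enoent++ }
        END {
            printf "double_close=%d ebadf=%d fatal_enoent_rename=%d\n", dc, ebadf, enoent
        }
    '
}

# Example on the XOA itself (the time window is an arbitrary choice):
#   sudo journalctl -u xo-server --since "1 hour ago" --no-pager | count_merge_signatures
```

Non-zero `ebadf` or `fatal_enoent_rename` counts after a job would match what was seen before the patch; `double_close` alone is logged at INFO level.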

-

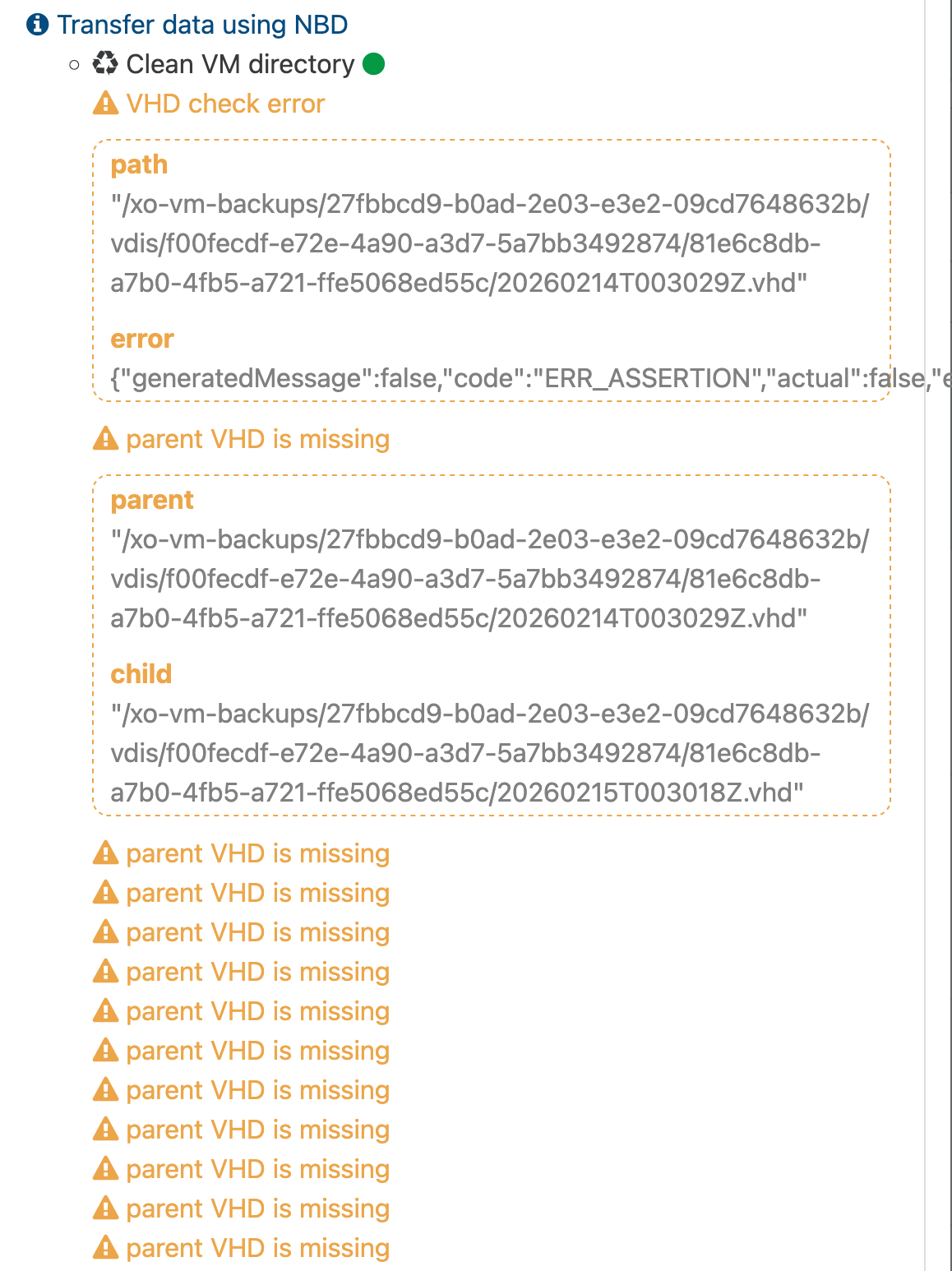

XOA 6.2 parent VHD is missing followed by full Backup

Since installing today's XOA appliance update I am noticing strange behaviour on multiple XO instances while backups are running (2 XOA and my own XOCE in my home lab).

A number of VMs will display the error attached and then run a full backup. These jobs have been running flawlessly for quite some time now. This wipes out the whole backup chain and leaves you with a single full backup in the end.

Looking at sudo journalctl -u xo-server -f -n 50 on XOA, I see the following happening during the merge of each VM:

Feb 26 21:20:24 xoa xo-server[1731622]: 2026-02-27T03:20:24.207Z xo:backups:mergeWorker INFO merge in progress {
Feb 26 21:20:24 xoa xo-server[1731622]: done: 2647,
Feb 26 21:20:24 xoa xo-server[1731622]: parent: '/xo-vm-backups/d6befe36-fea7-04f3-25f1-feada684702b/vdis/8ca7c07e-4509-463d-8867-87e6b2026ad6/5afe2bcd-42d4-4b58-ba95-2c77e5107692/20260207T014453Z.vhd',
Feb 26 21:20:24 xoa xo-server[1731622]: progress: 93,
Feb 26 21:20:24 xoa xo-server[1731622]: total: 2845
Feb 26 21:20:24 xoa xo-server[1731622]: }
Feb 26 21:20:32 xoa xo-server[1731622]: 2026-02-27T03:20:32.199Z xo:fs:abstract WARN retrying method on fs {
Feb 26 21:20:32 xoa xo-server[1731622]: method: '_write',
Feb 26 21:20:32 xoa xo-server[1731622]: attemptNumber: 0,
Feb 26 21:20:32 xoa xo-server[1731622]: delay: 100,
Feb 26 21:20:32 xoa xo-server[1731622]: error: Error: EBADF: bad file descriptor, write
Feb 26 21:20:32 xoa xo-server[1731622]: From:
Feb 26 21:20:32 xoa xo-server[1731622]: at LocalHandler.addSyncStackTrace (/usr/local/lib/node_modules/xo-server/node_modules/@xen-orchestra/fs/dist/local.js:21:26)
Feb 26 21:20:32 xoa xo-server[1731622]: at LocalHandler._writeFd (/usr/local/lib/node_modules/xo-server/node_modules/@xen-orchestra/fs/dist/local.js:209:35)
Feb 26 21:20:32 xoa xo-server[1731622]: at LocalHandler._write (/usr/local/lib/node_modules/xo-server/node_modules/@xen-orchestra/fs/dist/abstract.js:680:25)
Feb 26 21:20:32 xoa xo-server[1731622]: at loopResolver (/usr/local/lib/node_modules/xo-server/node_modules/promise-toolbox/retry.js:83:46)
Feb 26 21:20:32 xoa xo-server[1731622]: at new Promise (<anonymous>)
Feb 26 21:20:32 xoa xo-server[1731622]: at loop (/usr/local/lib/node_modules/xo-server/node_modules/promise-toolbox/retry.js:85:22)
Feb 26 21:20:32 xoa xo-server[1731622]: at LocalHandler.retry (/usr/local/lib/node_modules/xo-server/node_modules/promise-toolbox/retry.js:87:10)
Feb 26 21:20:32 xoa xo-server[1731622]: at LocalHandler._write (/usr/local/lib/node_modules/xo-server/node_modules/promise-toolbox/retry.js:103:18)
Feb 26 21:20:32 xoa xo-server[1731622]: at LocalHandler.write (/usr/local/lib/node_modules/xo-server/node_modules/@xen-orchestra/fs/dist/abstract.js:482:16)
Feb 26 21:20:32 xoa xo-server[1731622]: at LocalHandler.write (/usr/local/lib/node_modules/xo-server/node_modules/limit-concurrency-decorator/index.js:97:24) {
Feb 26 21:20:32 xoa xo-server[1731622]: errno: -9,
Feb 26 21:20:32 xoa xo-server[1731622]: code: 'EBADF',
Feb 26 21:20:32 xoa xo-server[1731622]: syscall: 'write'
Feb 26 21:20:32 xoa xo-server[1731622]: },
Feb 26 21:20:32 xoa xo-server[1731622]: file: {
Feb 26 21:20:32 xoa xo-server[1731622]: fd: 17,
Feb 26 21:20:32 xoa xo-server[1731622]: path: '/xo-vm-backups/d6befe36-fea7-04f3-25f1-feada684702b/vdis/8ca7c07e-4509-463d-8867-87e6b2026ad6/5afe2bcd-42d4-4b58-ba95-2c77e5107692/20260206T014545Z.vhd'
Feb 26 21:20:32 xoa xo-server[1731622]: },
Feb 26 21:20:32 xoa xo-server[1731622]: retryOpts: {}
Feb 26 21:20:32 xoa xo-server[1731622]: }
Feb 26 21:20:32 xoa xo-server[1731622]: 2026-02-27T03:20:32.200Z vhd-lib:VhdFile INFO double close {
Feb 26 21:20:32 xoa xo-server[1731622]: path: {
Feb 26 21:20:32 xoa xo-server[1731622]: fd: 18,
Feb 26 21:20:32 xoa xo-server[1731622]: path: '/xo-vm-backups/d6befe36-fea7-04f3-25f1-feada684702b/vdis/8ca7c07e-4509-463d-8867-87e6b2026ad6/5afe2bcd-42d4-4b58-ba95-2c77e5107692/20260207T014453Z.vhd'
Feb 26 21:20:32 xoa xo-server[1731622]: },
Feb 26 21:20:32 xoa xo-server[1731622]: firstClosedBy: 'Error\n' +
Feb 26 21:20:32 xoa xo-server[1731622]: ' at VhdFile.dispose (/usr/local/lib/node_modules/xo-server/node_modules/vhd-lib/Vhd/VhdFile.js:134:22)\n' +
Feb 26 21:20:32 xoa xo-server[1731622]: ' at RemoteVhdDisk.dispose (/usr/local/lib/node_modules/xo-server/node_modules/vhd-lib/Vhd/VhdFile.js:104:26)\n' +
Feb 26 21:20:32 xoa xo-server[1731622]: ' at RemoteVhdDisk.close (file:///usr/local/lib/node_modules/xo-server/node_modules/@xen-orchestra/backups/disks/RemoteVhdDisk.mjs:101:24)\n' +
Feb 26 21:20:32 xoa xo-server[1731622]: ' at RemoteVhdDisk.unlink (file:///usr/local/lib/node_modules/xo-server/node_modules/@xen-orchestra/backups/disks/RemoteVhdDisk.mjs:426:16)\n' +
Feb 26 21:20:32 xoa xo-server[1731622]: ' at RemoteVhdDiskChain.unlink (file:///usr/local/lib/node_modules/xo-server/node_modules/@xen-orchestra/backups/disks/RemoteVhdDiskChain.mjs:268:18)\n' +
Feb 26 21:20:32 xoa xo-server[1731622]: ' at #step_cleanup (file:///usr/local/lib/node_modules/xo-server/node_modules/@xen-orchestra/backups/disks/MergeRemoteDisk.mjs:279:23)\n' +
Feb 26 21:20:32 xoa xo-server[1731622]: ' at async MergeRemoteDisk.merge (file:///usr/local/lib/node_modules/xo-server/node_modules/@xen-orchestra/backups/disks/MergeRemoteDisk.mjs:159:11)\n' +
Feb 26 21:20:32 xoa xo-server[1731622]: ' at async _mergeVhdChain (file:///usr/local/lib/node_modules/xo-server/node_modules/@xen-orchestra/backups/_cleanVm.mjs:56:20)',
Feb 26 21:20:32 xoa xo-server[1731622]: closedAgainBy: 'Error\n' +
Feb 26 21:20:32 xoa xo-server[1731622]: ' at VhdFile.dispose (/usr/local/lib/node_modules/xo-server/node_modules/vhd-lib/Vhd/VhdFile.js:129:24)\n' +
Feb 26 21:20:32 xoa xo-server[1731622]: ' at RemoteVhdDisk.dispose (/usr/local/lib/node_modules/xo-server/node_modules/vhd-lib/Vhd/VhdFile.js:104:26)\n' +
Feb 26 21:20:32 xoa xo-server[1731622]: ' at RemoteVhdDisk.close (file:///usr/local/lib/node_modules/xo-server/node_modules/@xen-orchestra/backups/disks/RemoteVhdDisk.mjs:101:24)\n' +
Feb 26 21:20:32 xoa xo-server[1731622]: ' at file:///usr/local/lib/node_modules/xo-server/node_modules/@xen-orchestra/backups/disks/RemoteVhdDiskChain.mjs:85:52\n' +
Feb 26 21:20:32 xoa xo-server[1731622]: ' at Array.map (<anonymous>)\n' +
Feb 26 21:20:32 xoa xo-server[1731622]: ' at RemoteVhdDiskChain.close (file:///usr/local/lib/node_modules/xo-server/node_modules/@xen-orchestra/backups/disks/RemoteVhdDiskChain.mjs:85:35)\n' +
Feb 26 21:20:32 xoa xo-server[1731622]: ' at #cleanup (file:///usr/local/lib/node_modules/xo-server/node_modules/@xen-orchestra/backups/disks/MergeRemoteDisk.mjs:309:21)\n' +
Feb 26 21:20:32 xoa xo-server[1731622]: ' at async _mergeVhdChain (file:///usr/local/lib/node_modules/xo-server/node_modules/@xen-orchestra/backups/_cleanVm.mjs:56:20)'
Feb 26 21:20:32 xoa xo-server[1731622]: }
Feb 26 21:20:32 xoa xo-server[1731622]: 2026-02-27T03:20:32.274Z xo:backups:mergeWorker FATAL ENOENT: no such file or directory, rename '/run/xo-server/mounts/cd3f089c-7064-412e-86e3-b62c5647021a/xo-vm-backups/.queue/clean-vm/_20260227T020329Z-ukxolxktxyl' -> '/run/xo-server/mounts/cd3f089c-7064-412e-86e3-b62c5647021a/xo-vm-backups/.queue/clean-vm/__20260227T020329Z-ukxolxktxyl' {
Feb 26 21:20:32 xoa xo-server[1731622]: error: Error: ENOENT: no such file or directory, rename '/run/xo-server/mounts/cd3f089c-7064-412e-86e3-b62c5647021a/xo-vm-backups/.queue/clean-vm/_20260227T020329Z-ukxolxktxyl' -> '/run/xo-server/mounts/cd3f089c-7064-412e-86e3-b62c5647021a/xo-vm-backups/.queue/clean-vm/__20260227T020329Z-ukxolxktxyl'
Feb 26 21:20:32 xoa xo-server[1731622]: From:
Feb 26 21:20:32 xoa xo-server[1731622]: at LocalHandler.addSyncStackTrace (/usr/local/lib/node_modules/xo-server/node_modules/@xen-orchestra/fs/dist/local.js:21:26)
Feb 26 21:20:32 xoa xo-server[1731622]: at LocalHandler._rename (/usr/local/lib/node_modules/xo-server/node_modules/@xen-orchestra/fs/dist/local.js:191:35)
Feb 26 21:20:32 xoa xo-server[1731622]: at /usr/local/lib/node_modules/xo-server/node_modules/@xen-orchestra/fs/dist/utils.js:29:26
Feb 26 21:20:32 xoa xo-server[1731622]: at new Promise (<anonymous>)
Feb 26 21:20:32 xoa xo-server[1731622]: at LocalHandler.<anonymous> (/usr/local/lib/node_modules/xo-server/node_modules/@xen-orchestra/fs/dist/utils.js:24:12)
Feb 26 21:20:32 xoa xo-server[1731622]: at loopResolver (/usr/local/lib/node_modules/xo-server/node_modules/promise-toolbox/retry.js:83:46)
Feb 26 21:20:32 xoa xo-server[1731622]: at new Promise (<anonymous>)
Feb 26 21:20:32 xoa xo-server[1731622]: at loop (/usr/local/lib/node_modules/xo-server/node_modules/promise-toolbox/retry.js:85:22)
Feb 26 21:20:32 xoa xo-server[1731622]: at LocalHandler.retry (/usr/local/lib/node_modules/xo-server/node_modules/promise-toolbox/retry.js:87:10)
Feb 26 21:20:32 xoa xo-server[1731622]: at LocalHandler._rename (/usr/local/lib/node_modules/xo-server/node_modules/promise-toolbox/retry.js:103:18) {
Feb 26 21:20:32 xoa xo-server[1731622]: errno: -2,
Feb 26 21:20:32 xoa xo-server[1731622]: code: 'ENOENT',
Feb 26 21:20:32 xoa xo-server[1731622]: syscall: 'rename',
Feb 26 21:20:32 xoa xo-server[1731622]: path: '/run/xo-server/mounts/cd3f089c-7064-412e-86e3-b62c5647021a/xo-vm-backups/.queue/clean-vm/_20260227T020329Z-ukxolxktxyl',
Feb 26 21:20:32 xoa xo-server[1731622]: dest: '/run/xo-server/mounts/cd3f089c-7064-412e-86e3-b62c5647021a/xo-vm-backups/.queue/clean-vm/__20260227T020329Z-ukxolxktxyl'
Feb 26 21:20:32 xoa xo-server[1731622]: }
Feb 26 21:20:32 xoa xo-server[1731622]: }

These files do exist prior to the job running, but are erased during the job execution.

Remotes are all NFS-based: my home lab and one location use TrueNAS, and another location uses NFS backed by Ubuntu 24.04 LTS. The storage behind the remote does not seem to make any difference.

I submitted a ticket for this since I have pro support, but wanted to put this out to the community to see if anyone else is running into it. I have reverted to 6.1.2 and things are operating smoothly again.

-

RE: backup mail report says INTERRUPTED but it's not ?

@florent Not using the API but we have two Proxies.

-

RE: backup mail report says INTERRUPTED but it's not ?

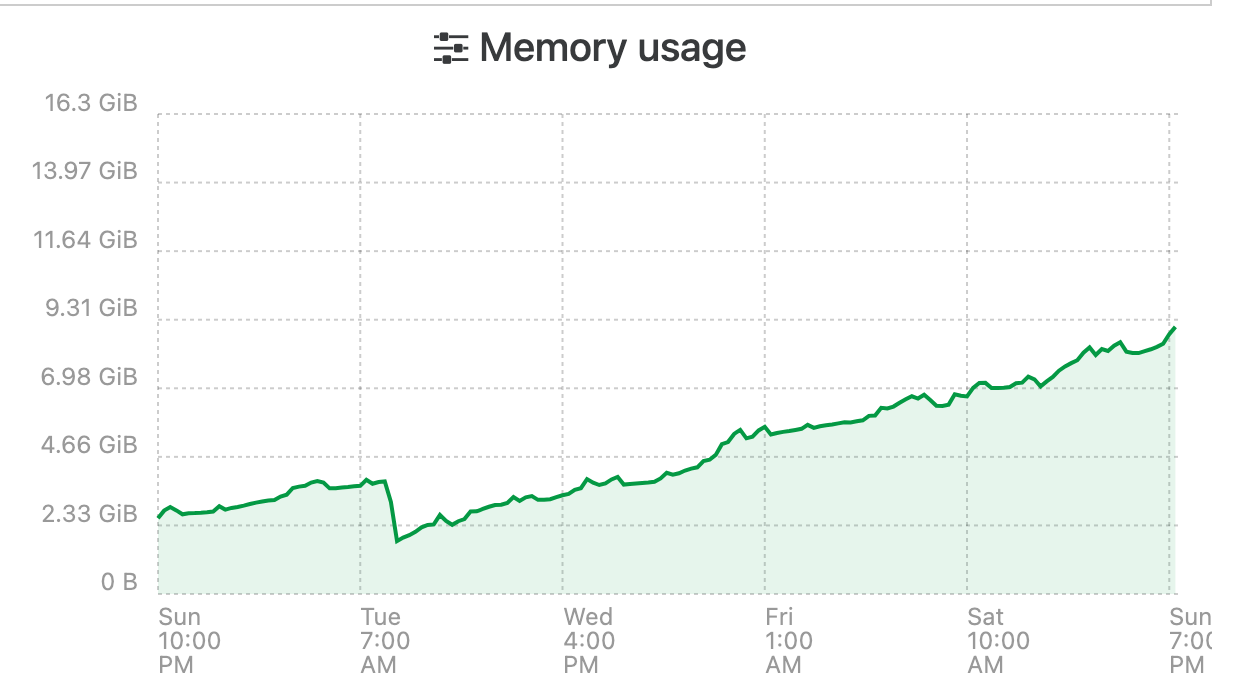

@florent I am also experiencing this on XOA and can send you a support tunnel number if you'd like. I rebooted it Sunday night after sharing the screenshot of memory usage above in this thread, but it is slowly growing again. It used to crash and restart every 3 days, but with --max-old-space-size=12288 I now get around 7-8 days before it's an issue.

-

RE: backup mail report says INTERRUPTED but it's not ?

@olivierlambert Using the prebuilt XOA appliance which reports:

[08:39 23] xoa@xoa:~$ node --version
v20.18.3

-

RE: backup mail report says INTERRUPTED but it's not ?

I have been having the same issue and have been watching it for the last couple of weeks. Initially my XOA only had 8GB of RAM assigned; I have bumped it up to 16GB to try and alleviate the issue. It seems to be some sort of memory leak. This is the official XO Appliance too, not XO CE.

I changed the systemd file to make use of the extra memory as per the docs:

ExecStart=/usr/local/bin/node --max-old-space-size=12288 /usr/local/bin/xo-server

It seems that over time it will just consume all of its memory until it crashes and restarts, no matter how much I assign.
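To put numbers on the growth between restarts, the resident memory of the xo-server process can be sampled periodically. This is a minimal sketch assuming a Linux host with /proc; the helper name `rss_mib` is hypothetical, and it only reads the VmRSS value that the kernel reports in kB.

```shell
# Print the resident set size of a process in whole MiB.
# $1: PID. Hypothetical helper; relies on the Linux /proc/<pid>/status file.
rss_mib() {
    awk '/^VmRSS:/ { print int($2 / 1024); found = 1 }
         END { exit !found }' "/proc/$1/status"
}

# Example: one timestamped sample for the xo-server unit, suitable for cron.
#   pid=$(systemctl show -p MainPID --value xo-server)
#   echo "$(date -u +%FT%TZ) $(rss_mib "$pid") MiB"
```

A series of these samples over a few days would show whether usage climbs steadily toward the configured heap limit or jumps during specific backup jobs.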

-

RE: XCP-ng 8.3 updates announcements and testing

Installed on my usual selection of hosts. (A mixture of AMD and Intel hosts, SuperMicro, Asus, and Minisforum). No issues after a reboot, PCI Passthru, backups, etc continue to work smoothly

-

RE: XCP-ng 8.3 updates announcements and testing

@stormi Installed on my usual test hosts probably an hour after your initial post and let them run throughout the day (Intel Minisforum MS-01, and Supermicro running a Xeon E-2336 CPU). Also installed onto a 2-host AMD EPYC pool. Updates went smoothly, and backups continue to function as before.

A couple of Windows VMs had Secure Boot enabled on our test pool. After the initial reboot I ran "secureboot-certs clear" on the pool master, then in XOA I clicked "Copy pool's default UEFI certificates to the VM". The VMs continued to reboot without issue afterwards. Strange to see someone else having issues with VMs not booting.

-

RE: CBT disabling itself / bug ?

@Pilow Sounds very similar to my experiences with CBT.

Check out my post here (and keep reading from that point down)

https://xcp-ng.org/forum/post/88968

Can you check with the same commands I used?

-

RE: log_fs_usage / /var/log directory on pool master filling up constantly

One of our pools (5 hosts, 6 NFS SRs) had this issue when we first deployed it. I engaged with support from Vates and they changed a setting that reduced the frequency of the SR.scan job from every 30 seconds to every 2 minutes. This totally fixed the issue for us, going on a year and a half later.

I dug back in our documentation and found the command they gave us:

xe host-param-set other-config:auto-scan-interval=120 uuid=<Host UUID>

Where <Host UUID> is the UUID of your pool master.
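For anyone scripting this, here is a minimal dry-run sketch that fills in the command above. The function name `scan_interval_cmd` is made up for illustration; it only prints the command so it can be reviewed before running, and the UUID check is a simple format test, not a lookup against the pool.

```shell
# Print (do not run) the xe command from this post for a host UUID and interval.
# $1: host UUID, $2: interval in seconds (defaults to the 120 used above).
scan_interval_cmd() {
    uuid=$1
    seconds=${2:-120}
    # Reject anything that does not look like a UUID, so a typo never reaches xe.
    echo "$uuid" | grep -Eq '^[0-9a-f]{8}-[0-9a-f]{4}-[0-9a-f]{4}-[0-9a-f]{4}-[0-9a-f]{12}$' || {
        echo "not a UUID: $uuid" >&2
        return 1
    }
    echo "xe host-param-set other-config:auto-scan-interval=$seconds uuid=$uuid"
}

# On a pool member, the master's UUID can be read from the pool record:
#   master=$(xe pool-list params=master --minimal)
#   scan_interval_cmd "$master" 120
```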

-

RE: Lock file is already being held whereas no backup are running.

@henri9813 This is 100% related to the merge process still running in the background. I have seen it happen myself.

-

RE: [Old thread] XCP-ng Windows PV tools 9.0.9030 Testsign released: now with Rust-based Xen Guest Agent

@dinhngtu Thanks. We plan to migrate all Windows VMs from the Citrix tools down the road and only have a handful of VMs running these so far, so we will maybe hold off until the next version. We have been running the Linux Rust tools for over a year with zero issues.

-

RE: [Old thread] XCP-ng Windows PV tools 9.0.9030 Testsign released: now with Rust-based Xen Guest Agent

@dinhngtu An issue I ran into while testing the final signed release:

On a Server 2025 VM, after installing the tools the version is properly displayed in XOA. After a migration, however, the version is not displayed and "Management agent not detected" is shown instead; memory stats also stop displaying in XOA. After restarting the Rust tools service in Windows, XOA picks up on the tools running within the VM again.

-

RE: VM start stuck on "Guest has not initialized the display (yet)."

@dinhngtu said in VM start stuck on "Guest has not initialized the display (yet).":

You must run secureboot-certs clear if you're updating from 1.2.0-2.4 or 1.2.0-3.1 and have previously run secureboot-certs install with the above versions installed.

Should we run this before installing the update or after 1.2.0-3.2 has been installed?

-

RE: XCP-ng 8.3 updates announcements and testing

@dinhngtu That command seems to output "Scanning" for every UUID in my pool, most of which do not use SB. I'm guessing that's normal and I do not have any affected VMs any longer?

-

RE: XCP-ng 8.3 updates announcements and testing

Awesome, thanks for the response. I took a snapshot and tried rebooting a VM, and it booted back up without issue simply by clicking the propagate button on each affected VM after reverting and running "secureboot-certs install".

-

RE: XCP-ng 8.3 updates announcements and testing

@dinhngtu Ah okay, I was wondering if "d719b2cb-3d3a-4596-a3bc-dad00e67656f" indicated I was back on the safe certs, since it is the same on all VMs since reverting and clicking "Copy the pools default UEFI Certificates to the VM".

So I need to run

varstore-rm f9166a11-3c3f-33f1-505c-542ce8e1764d d719b2cb-3d3a-4596-a3bc-dad00e67656f dbx

while powered off to be safe?

-

RE: XCP-ng 8.3 updates announcements and testing

I reverted the package; however, I initially followed the directions provided by Vates in the release blog post and ran "secureboot-certs clear", then on each VM with Secure Boot enabled I clicked "Copy the pools default UEFI Certificates to the VM".

After reverting the updates and running secureboot-certs install again, I went back and clicked "Copy the pools default UEFI Certificates to the VM" again, thinking it would put the old certs back.

It sounds like this may not be enough and I need to remove the dbx record from each of these VMs. Am I correct, or was that enough to fix these VMs?

Per the docs:

varstore-rm <vm-uuid> d719b2cb-3d3a-4596-a3bc-dad00e67656f dbx

Note that the GUID may be found by using varstore-ls <vm-uuid>.

When I run the command I see

Example:

varstore-ls f9166a11-3c3f-33f1-505c-542ce8e1764d
8be4df61-93ca-11d2-aa0d-00e098032b8c SecureBoot
8be4df61-93ca-11d2-aa0d-00e098032b8c DeployedMode
8be4df61-93ca-11d2-aa0d-00e098032b8c AuditMode
8be4df61-93ca-11d2-aa0d-00e098032b8c SetupMode
8be4df61-93ca-11d2-aa0d-00e098032b8c SignatureSupport
8be4df61-93ca-11d2-aa0d-00e098032b8c PK
8be4df61-93ca-11d2-aa0d-00e098032b8c KEK
d719b2cb-3d3a-4596-a3bc-dad00e67656f db
d719b2cb-3d3a-4596-a3bc-dad00e67656f dbx
605dab50-e046-4300-abb6-3dd810dd8b23 SbatLevel
fab7e9e1-39dd-4f2b-8408-e20e906cb6de HDDP
e20939be-32d4-41be-a150-897f85d49829 MemoryOverwriteRequestControl
bb983ccf-151d-40e1-a07b-4a17be168292 MemoryOverwriteRequestControlLock
9d1947eb-09bb-4780-a3cd-bea956e0e056 PPIBuffer
9d1947eb-09bb-4780-a3cd-bea956e0e056 Tcg2PhysicalPresenceFlagsLock
eb704011-1402-11d3-8e77-00a0c969723b MTC
8be4df61-93ca-11d2-aa0d-00e098032b8c Boot0000
8be4df61-93ca-11d2-aa0d-00e098032b8c Timeout
8be4df61-93ca-11d2-aa0d-00e098032b8c Lang
8be4df61-93ca-11d2-aa0d-00e098032b8c PlatformLang
8be4df61-93ca-11d2-aa0d-00e098032b8c ConIn
8be4df61-93ca-11d2-aa0d-00e098032b8c ConOut
8be4df61-93ca-11d2-aa0d-00e098032b8c ErrOut
9d1947eb-09bb-4780-a3cd-bea956e0e056 Tcg2PhysicalPresenceFlags
8be4df61-93ca-11d2-aa0d-00e098032b8c Key0000
8be4df61-93ca-11d2-aa0d-00e098032b8c Key0001
5b446ed1-e30b-4faa-871a-3654eca36080 0050569B1890
937fe521-95ae-4d1a-8929-48bcd90ad31a 0050569B1890
9fb9a8a1-2f4a-43a6-889c-d0f7b6c47ad5 ClientId
8be4df61-93ca-11d2-aa0d-00e098032b8c Boot0003
8be4df61-93ca-11d2-aa0d-00e098032b8c Boot0004
8be4df61-93ca-11d2-aa0d-00e098032b8c Boot0005
4c19049f-4137-4dd3-9c10-8b97a83ffdfa MemoryTypeInformation
8be4df61-93ca-11d2-aa0d-00e098032b8c Boot0006
8be4df61-93ca-11d2-aa0d-00e098032b8c BootOrder
8c136d32-039a-4016-8bb4-9e985e62786f SecretKey
8be4df61-93ca-11d2-aa0d-00e098032b8c Boot0001
8be4df61-93ca-11d2-aa0d-00e098032b8c Boot0002

So the command would be:

varstore-rm f9166a11-3c3f-33f1-505c-542ce8e1764d d719b2cb-3d3a-4596-a3bc-dad00e67656f dbx

Correct? Does "d719b2cb-3d3a-4596-a3bc-dad00e67656f" indicate the old certs have been re-installed?
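For doing this across several VMs, the GUID lookup described in the docs can be scripted rather than read by eye. This is a minimal sketch assuming varstore-ls prints one "<guid> <name>" pair per line, as in the listing above; the helper name `var_guid` is hypothetical, and the usage prints the varstore-rm command for review instead of running it.

```shell
# Read `varstore-ls <vm-uuid>` output ("<guid> <name>" per line) on stdin
# and print the GUID that owns the named variable (default: dbx).
# Hypothetical helper; matches the name exactly, so "db" never matches "dbx".
var_guid() {
    name=${1:-dbx}
    awk -v name="$name" '$2 == name { print $1; exit }'
}

# Usage on a host, with the VM UUID supplied by you:
#   vm="<vm-uuid>"
#   guid=$(varstore-ls "$vm" | var_guid dbx)
#   echo varstore-rm "$vm" "$guid" dbx
```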