Let me create a card to check this hypothesis (and do the fix, @andrew).

Posts

-

RE: Delta Backup not deleting old snapshots

-

RE: VDI not showing in XO 5 from Source.

@andrewperry Hi, this is a known issue on the XAPI / storage side.

This commit is making it visible. Pinging @anthoineb here for more info.

-

RE: Delta Backup not deleting old snapshots

@Pa3docypris Are the other snapshots correctly deleted?

Are these VMs on a specific storage? Do you see anything like VDI_IN_USE in the xo-server logs (e.g. in journalctl)?

Can you post the full job JSON (there is a download button at the top of the backup log)? You can also send it to me by direct chat if you prefer to keep it private.

-

RE: backup mail report says INTERRUPTED but it's not ?

In tomorrow's release we will:

- reduce the polling for the patches list

- add more debug logging to know exactly which requests go through the XO6 API (REST API)

Following this, we will add core XO metrics to the OpenMetrics (Prometheus) export, so that users and support can dig through real-world data when needed.
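For readers unfamiliar with the OpenMetrics (Prometheus) text format, here is a minimal sketch of what such an export looks like for a few gauges. It is an illustration only: the function, and any metric names you pass to it, are hypothetical and not the metrics XO will actually expose.

```python
from __future__ import annotations

from typing import Mapping


def _escape(text: str) -> str:
    # OpenMetrics escapes backslash, double quote and line feed in HELP text.
    return text.replace("\\", "\\\\").replace('"', '\\"').replace("\n", "\\n")


def render_openmetrics(gauges: Mapping[str, tuple[str, float]]) -> str:
    """Render gauges as OpenMetrics text; `gauges` maps name -> (help, value)."""
    lines: list[str] = []
    for name, (help_text, value) in sorted(gauges.items()):
        lines.append(f"# TYPE {name} gauge")
        lines.append(f"# HELP {name} {_escape(help_text)}")
        lines.append(f"{name} {value}")
    lines.append("# EOF")  # an OpenMetrics exposition must end with this line
    return "\n".join(lines) + "\n"
```

For example, `render_openmetrics({"xo_example_heap_used_bytes": ("Heap used (hypothetical metric)", 1.5e8)})` yields a TYPE line, a HELP line, the sample line and the closing `# EOF`, which is what a Prometheus scraper reads.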

-

RE: Import from VMware err: name: AssertionError: Expected "actual" to be strictly unequal to: undefined

@benapetr said in Import from VMware err: name: AssertionError: Expected "actual" to be strictly unequal to: undefined:

Hello, since this never had a clear resolution, here is an explanation of the bug and why it happens:

There is a bug in disk iteration in that XO VMware plugin (somewhere in that esxi.mjs, I don't remember the exact location): it basically expects all disks of a VM to exist in the same datastore, and if they don't, it crashes because the next disk is unexpectedly missing (undefined).

The workaround is rather simple: select the VM in VMware, Migrate -> Storage, disable DRS (important), and then select any DS on which none of the disks currently exist. If you select a DS that is already used by the same VM, it will sometimes not get fixed! It can also happen even if the VM "apparently" looks like it's on a single DS. Even if it reports as such, still try to migrate it to another DS, and disable DRS so that really all files, even metadata files, are in the same directory.

Then run the XO import again; it will magically work.

Thank you for the input.

We were under the assumption that all the disks of a VM are in the same datastore.

The fix shouldn't be too hard now that you did the hard work.

Edit: card created in our backlog. I will take a look after the release to see how hard it is to implement.
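To make the faulty assumption concrete, here is a small Python sketch. It is not the plugin's code (the real logic lives somewhere in esxi.mjs); the `Disk` model and the `listings` map (datastore name to the set of file paths it contains) are hypothetical. The first function looks every disk up in the first disk's datastore and so produces a missing entry for a disk stored elsewhere; the second looks each disk up in its own datastore.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Disk:
    # Hypothetical model: the datastore holding the disk and its path in it.
    datastore: str
    path: str


def resolve_assuming_single_datastore(
    disks: list[Disk], listings: dict[str, set[str]]
) -> list[Optional[str]]:
    # Mirrors the faulty assumption: every disk is looked up in the first
    # disk's datastore, so a disk stored elsewhere comes back as None.
    home = disks[0].datastore
    files = listings[home]
    return [f"[{home}] {d.path}" if d.path in files else None for d in disks]


def resolve_per_disk(
    disks: list[Disk], listings: dict[str, set[str]]
) -> list[str]:
    # Looks each disk up in its own datastore and fails loudly otherwise.
    resolved: list[str] = []
    for disk in disks:
        files = listings.get(disk.datastore, set())
        if disk.path not in files:
            raise LookupError(f"{disk.path!r} not found in datastore {disk.datastore!r}")
        resolved.append(f"[{disk.datastore}] {disk.path}")
    return resolved
```

The `None` from the first function plays the role of the `undefined` that trips the assertion in the error message; the second raises a descriptive error instead of passing a missing disk further down.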

-

RE: S3 Timeout

@frank-s said in S3 Timeout:

Apologies if this question has already been asked.

I have a site which is performing mirror backups to minio s3. Generally this is fine, but unfortunately the HITRON router for Virgin Media Business has a habit of hanging from time to time. The router has been changed to no avail. Apparently this is a common problem. So here is the question. If the router hangs while the s3 backup is active, how long have I got to power cycle the HITRON before the s3 connection times out?

Also is there a config file somewhere that can be used to change the timeout?

Thanks.

The timeout for an S3 connection is 10 minutes by default, but it should fail faster if the link is down.

For now, the only way to force-stop a backup job is to restart XO(A).

This timeout is not configurable.

-

RE: backup mail report says INTERRUPTED but it's not ?

Thanks, guys. I will connect in a few hours, and we will investigate all day tomorrow with @mathieura.

-

RE: backup mail report says INTERRUPTED but it's not ?

@flakpyro Yes, with pleasure.

Do you also use the REST API / XO6?

Do you use proxies?

-

RE: backup mail report says INTERRUPTED but it's not ?

@Pilow I think I remember that you have an XOA? Would it be OK to open a support tunnel and give me the number by chat (or by a support ticket)?

Are you querying one of the dashboards or only the individual CRUD API? REST API or xo-cli?

We could add some debug logging.

-

RE: backup mail report says INTERRUPTED but it's not ?

@Pilow said in backup mail report says INTERRUPTED but it's not ?:

@florent Not really, it is activated and I tried to fiddle a bit with it. Why?

The smaller the changes, the more we can focus on the right component(s). And the December release was quite massive (a good consequence of the team growing).

Can you disable it to be sure?

Again, thank you for your help here.

-

RE: backup mail report says INTERRUPTED but it's not ?

@Pilow At least this can rule out the disk data and backup archive handling (which changed a lot in 2025 for qcow2).

So if it's backup-related, it could be the task log and/or the scheduler.

Are you using the OpenMetrics plugin?

-

RE: backup mail report says INTERRUPTED but it's not ?

@Pilow said in backup mail report says INTERRUPTED but it's not ?:

@florent do you have (heavy?) use of backup jobs on your XOA ?

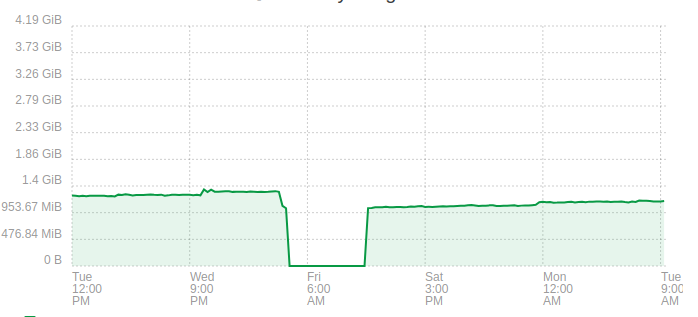

we all noticed this because of correlation between backup jobs & memory growthI do have all my backup jobs through XO PROXIES though, and XOA still explodes in RAM Usage...

I back up a only few VM on this one , it was to illustrate that we try to reproduce it with a scope small enough to improve the situation

is the memory stable on the proxy ?

-

RE: backup mail report says INTERRUPTED but it's not ?

We are trying to reproduce it here, without success for now.

It would be good for us too if we could identify what causes this before the upcoming release (Thursday).

For example, here is my XOA at home, which seems quite OK running by itself:

To be fair, debugging is not helped by the fact that we have one big process with so many changing objects that exploring it and pinpointing exactly what is growing is tricky (or we may not be looking in the right place).
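A generic way to look for "what is growing" in one big process is to count live objects by type at two points in time and diff the counts. XO runs on Node.js, where the comparable tool would be heap snapshots; the Python sketch below only illustrates the diffing idea with the standard `gc` module and is not how XO is actually being debugged.

```python
import gc
from collections import Counter


def count_objects_by_type() -> Counter:
    # Only objects tracked by the cyclic GC are counted (containers and
    # class instances); plain ints and strings are not.
    return Counter(type(obj).__name__ for obj in gc.get_objects())


def growth(before: Counter, after: Counter, top: int = 5) -> list:
    # Counter subtraction keeps only positive differences, i.e. growth.
    return (after - before).most_common(top)
```

Typical use: take `before = count_objects_by_type()`, run the suspected workload (for instance a few backup runs), take `after`, then inspect `growth(before, after)`. A type whose count keeps rising across repeated runs is a good candidate for a leak.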

-

RE: Detached VM Snapshots after Warm Migration

@DustyArmstrong I think we won't do much on XO5 for now, and for XO6 we are trying to do everything with a UX designer to ensure coherence, at least until we have a fully working app.

-

RE: bug about provoked BACKUP FELL BACK TO A FULL due to DR job

@LoTus111 The investigation is ongoing, no success for now.

-

RE: Detached VM Snapshots after Warm Migration

I think at least showing them could be a good idea. This is planned (but low priority).

-

RE: Detached VM Snapshots after Warm Migration

@Pilow said in Detached VM Snapshots after Warm Migration:

@DustyArmstrong I have a hard time understanding how the VM is aware of the XOA it is/was attached to...

a VM resides on a host

a Host is in a pool

a Pool is attached to one (or many) XO/XOA

How the hell is your VM aware of its previous XO?

- a running VM has a resident_on property exposed by XAPI

- the queries from pools are handled separately, so we can decorate all the objects of a XAPI with a $pool property

- this is the server collection that tracks which servers' data we know, and which ones are connected or not

Most of the data lives in XAPI, which means that multiple XO instances can handle multiple pools. The exceptions are the SDN Controller (best handled by only one XO) and the backups of the same VM.
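The three points above can be sketched as a tiny in-memory cache. Only resident_on and $pool come from the explanation; the class, its methods and the "ref" key are hypothetical, and this is not XO's actual implementation.

```python
from __future__ import annotations

from typing import Optional


class ObjectCache:
    """Hypothetical cache of XAPI objects coming from several pools."""

    def __init__(self) -> None:
        self.objects: dict[str, dict] = {}
        self.servers: dict[str, bool] = {}  # server id -> connected?

    def ingest(self, server_id: str, pool_uuid: str, records: list[dict]) -> None:
        # Each server (pool master) is queried on its own connection, so every
        # record can be tagged with the pool it came from.
        self.servers[server_id] = True
        for record in records:
            self.objects[record["ref"]] = {**record, "$pool": pool_uuid}

    def mark_disconnected(self, server_id: str) -> None:
        # Keep the server known, but remember it is not connected any more.
        self.servers[server_id] = False

    def host_of(self, vm_ref: str) -> Optional[str]:
        # In this simplified model a missing resident_on means "not running"
        # (real XAPI uses a NULL reference instead).
        return self.objects[vm_ref].get("resident_on")
```

Because the pool tag and host link come from whichever XAPI answered, any XO connected to that pool rebuilds the same view; nothing about "which XO" is stored on the VM itself.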

-

RE: Detached VM Snapshots after Warm Migration

Nice catch.

You now know the internal magic of XO backups.

-

RE: vmware vm migration error cause ?

@yeopil21 You can target vSphere; it should work.

The issue here is that XO fails to link one of your datastores to the datacenter.

Is it XO from source or an XOA? You should have something like this in your server logs:

can't find datacenter for datastore

along with the datacenters and the datastore name as detected by XO.

Are you using an admin account on vSphere, or a limited one?
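The lookup that fails here can be pictured as building a datastore-to-datacenter index and finding a datastore with no entry. The sketch below is hypothetical (function names and data shapes are made up, and the error text only echoes the log line quoted above, not XO's exact format). One plausible cause, suggested by the question about account permissions, is a limited account that cannot see every datacenter, which leaves some datastores unmapped.

```python
from __future__ import annotations


def build_datastore_index(datacenters: dict[str, list[str]]) -> dict[str, str]:
    # Map each datastore name to the datacenter that lists it.
    index: dict[str, str] = {}
    for datacenter, datastores in datacenters.items():
        for datastore in datastores:
            index[datastore] = datacenter
    return index


def datacenter_for(datastore: str, datacenters: dict[str, list[str]]) -> str:
    index = build_datastore_index(datacenters)
    try:
        return index[datastore]
    except KeyError:
        # Report what was detected, so the mismatch is visible in the logs.
        raise LookupError(
            f"can't find datacenter for datastore {datastore!r} "
            f"(datacenters: {sorted(datacenters)})"
        ) from None
```

Comparing the datastore name in the error with the datacenters listed next to it is usually enough to tell whether the datastore is genuinely missing or just not visible to the account.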