Do read the specific section in docs about virtualizing xcp-ng.

https://docs.xcp-ng.org/guides/xcpng-in-a-vm/

Posts

-

RE: Can only connect to one server at a time

-

RE: Other 2 hosts reboot when 1 host in HA enabled pool is powered off

@ha_tu_su

Here are the details, albeit a little later than I had promised. Mondays are... not that great.

The commands below were executed on all 3 hosts after installing XCP-ng.

yum update

wget https://gist.githubusercontent.com/Wescoeur/7bb568c0e09e796710b0ea966882fcac/raw/052b3dfff9c06b1765e51d8de72c90f2f90f475b/gistfile1.txt -O install && chmod +x install

./install --disks /dev/sdd --thin --force

Then XO was installed on one of the hosts and a pool consisting of the 3 hosts was created. Before that, the NICs on VW1 were renumbered to match the NIC numbering on the other 2 hosts.

Then the XOSTOR SR was created by executing the following on the master host:

xe sr-create type=linstor name-label=XOSTOR host-uuid=<MASTER_UUID> device-config:group-name=linstor_group/thin_device device-config:redundancy=2 shared=true device-config:provisioning=thin

Then the commands below were executed on the host that is the LINSTOR controller. Each of the networks has a /30 subnet.

linstor node interface create xcp-ng-vh1 strg1 192.168.255.1

linstor node interface create xcp-ng-vh1 strg2 192.168.255.10

linstor node interface create xcp-ng-vh2 strg1 192.168.255.5

linstor node interface create xcp-ng-vh2 strg2 192.168.255.2

linstor node interface create xcp-ng-vw1 strg1 192.168.255.9

linstor node interface create xcp-ng-vw1 strg2 192.168.255.6

linstor node-connection path create xcp-ng-vh1 xcp-ng-vh2 strg_path strg1 strg2

linstor node-connection path create xcp-ng-vh2 xcp-ng-vw1 strg_path strg1 strg2

linstor node-connection path create xcp-ng-vw1 xcp-ng-vh1 strg_path strg1 strg2

After this, HA was enabled on the pool by executing the commands below on the master host:

xe pool-ha-enable heartbeat-sr-uuids=<XOSTOR_SR_UUID>

xe pool-param-set ha-host-failures-to-tolerate=2 uuid=<POOL_UUID>

After this, some test VMs were created as mentioned in the original post. The host failure case works as expected for VH1 and VH2. When VW1 is switched off, however, VH1 and VH2 also reboot.
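As a sanity check on the storage addressing, here is a minimal Python sketch that confirms each LINSTOR path joins two interfaces in the same /30 network. The addresses and node pairs are copied from the linstor commands in this post. The helper names (`subnet_of`, `check_paths`) are made up for illustration, and the script only does address arithmetic; it does not talk to LINSTOR.

```python
import ipaddress

# Interface addresses copied from the `linstor node interface create` commands.
interfaces = {
    ("xcp-ng-vh1", "strg1"): "192.168.255.1",
    ("xcp-ng-vh1", "strg2"): "192.168.255.10",
    ("xcp-ng-vh2", "strg1"): "192.168.255.5",
    ("xcp-ng-vh2", "strg2"): "192.168.255.2",
    ("xcp-ng-vw1", "strg1"): "192.168.255.9",
    ("xcp-ng-vw1", "strg2"): "192.168.255.6",
}

# Node pairs from the `linstor node-connection path create` commands:
# the first node uses strg1, the second node uses strg2.
paths = [
    ("xcp-ng-vh1", "xcp-ng-vh2"),
    ("xcp-ng-vh2", "xcp-ng-vw1"),
    ("xcp-ng-vw1", "xcp-ng-vh1"),
]


def subnet_of(ip, prefix=30):
    """Return the network that contains `ip` for the given prefix length."""
    return ipaddress.ip_interface(f"{ip}/{prefix}").network


def check_paths(interfaces, paths):
    """Map each node pair to (same_subnet, subnet of the first node's strg1)."""
    results = {}
    for a, b in paths:
        net_a = subnet_of(interfaces[(a, "strg1")])
        net_b = subnet_of(interfaces[(b, "strg2")])
        results[(a, b)] = (net_a == net_b, str(net_a))
    return results


if __name__ == "__main__":
    for (a, b), (ok, net) in check_paths(interfaces, paths).items():
        status = "OK" if ok else "MISMATCH"
        print(f"{a}:strg1 <-> {b}:strg2  {net}  {status}")
```

If a pair reports MISMATCH, the two ends of that link are not in the same /30 and the path cannot carry traffic without routing.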

Let me know if any other information is required.

Thanks.

-

RE: Other 2 hosts reboot when 1 host in HA enabled pool is powered off

@Danp

I followed the commands for XOSTOR thin provisioning from the XOSTOR hyperconvergence thread.

For the storage network I am experimenting with LINSTOR storage paths. Each node has 3 NICs defined using linstor commands: default, strg1 and strg2. Then I create specific paths between nodes using linstor commands.

VH1 strg1 nic -> VH2 strg2 nic

VH2 strg1 nic -> VW1 strg2 nic

VW1 strg1 nic -> VH1 strg2 nic

All these NICs are 10G. Each line above is a separate network with a /30 subnet. The idea is basically to connect the 3 hosts in a 'mesh' storage network.

Sorry for not being clear with the commands. I am typing from my phone without access to the setup. I will be able to give a clearer picture on Monday.

Hope you guys have a great Sunday.

-

RE: Other 2 hosts reboot when 1 host in HA enabled pool is powered off

@olivierlambert

This doesn't happen when any of the other 2 hosts are individually rebooted for the same test.

Also I had been seeing this issue yesterday and today multiple times. After writing the post, I repeated same test for other 2 hosts and then one more time for VW1. This time everything went as expected.

I will give it one more round on Monday and update the post.

-

RE: Ubuntu 24.04 VMs not reporting IP addresses to XCP-NG 8.2.1

I checked terminal history of my Ubuntu VM and the guest agent was installed from guest-tools.iso

-

Other 2 hosts reboot when 1 host in HA enabled pool is powered off

Hello,

Before I elaborate on the problem, below are some details of the test setup.

Hardware

VH1: Dell PowerEdge R640, 2x Intel(R) Xeon(R) Gold 6240 CPU @ 2.60GHz, 256 GB RAM

VH2: Dell PowerEdge R640, 2x Intel(R) Xeon(R) Gold 6240 CPU @ 2.60GHz, 256 GB RAM

VW1: Dell PowerEdge R350, 1x Intel(R) Xeon(R) E-2378 CPU @ 2.60GHz, 32 GB RAM

Software

XCP-ng 8.2.1 installed on all 3 hosts. All hosts updated 3 weeks back using yum update.

XO from sources updated to commit 6fcb8.

Configuration

All the 3 hosts are added to a pool.

I have created XOSTOR shared storage using disks from all 3 hosts.

Enabled HA on the pool, using the XOSTOR storage as the heartbeat SR.

Created a few Windows Server 2022 and Ubuntu 24.04 VMs.

Enabled HA on some of the VMs (best-effort + restart). Made sure that sum of RAM on HA enabled VMs is less than 32 GB (it is 20 GB) to account for the smallest host.
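The sizing reasoning above can be sketched as a rough calculation. This is a deliberately simplified model and not the planner XAPI actually uses: it assumes the worst case is losing the largest hosts first, treats the survivors' RAM as one pool, and ignores dom0 memory and per-VM overhead. The function name `max_failures_tolerated` is hypothetical; the RAM figures are the ones from this post.

```python
def max_failures_tolerated(host_ram_gb, protected_vm_ram_gb):
    """Return how many host failures the protected VMs can survive.

    Simplified worst case: the largest hosts fail first, and the protected
    VMs must fit in the combined RAM of the remaining hosts.
    """
    hosts = sorted(host_ram_gb.values())  # ascending, smallest host first
    tolerated = 0
    for failures in range(1, len(hosts)):
        survivors = hosts[: len(hosts) - failures]  # drop the largest hosts
        if protected_vm_ram_gb <= sum(survivors):
            tolerated = failures
        else:
            break
    return tolerated


if __name__ == "__main__":
    hosts = {"VH1": 256, "VH2": 256, "VW1": 32}  # GB of RAM per host
    print(max_failures_tolerated(hosts, 20))  # 20 GB of HA-protected VMs
```

With 20 GB of protected VMs the model still fits everything on the 32 GB host alone, which is why the smallest host is the limiting factor in the sizing.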

Checked the maximum number of host failures that can be tolerated by running:

[12:31 xcp-ng-vh1 ~]# xe pool-ha-compute-max-host-failures-to-tolerate

2

Test Case

Power off VW1 from iDRAC (Power Off System)

Expected Output: The 2 Ubuntu VMs running on VW1 will be restarted on the surviving hosts.

Observed Output: After VW1 is powered off, the other 2 surviving hosts in the pool get rebooted. I have repeated this test case many times and the same behaviour is observed.

Disabling HA on the pool and repeating the test case does not exhibit the same behaviour. When VW1 is powered off, the other hosts are unaffected.

Anyone have any idea why this can be happening?

Thanks.

-

RE: Ubuntu 24.04 VMs not reporting IP addresses to XCP-NG 8.2.1

I have Ubuntu 24.04 installed and Management Agent version listed in XO UI is 7.30.0-12.

I installed the agent some time back, so I don't remember whether I installed it from the guest-tools ISO or from apt.

-

RE: XCP-ng 8.3 betas and RCs feedback 🚀

@olivierlambert

Thanks for the info. No hurry. Better to do it right than fast.

-

RE: Three-node Networking for XOSTOR

@ronan-a

Unfortunately, I am in the process of reinstalling XCP-ng on the nodes to start from scratch. I had tried too many things and thought I might have forgotten to undo some of the ‘wrong’ configs, so I can’t run the command now. I did run this command before, when I posted all the screenshots. From memory, the output had 2 entries:

1. StltCon <mgmt_ip> 3366 Plain

2. <storage_nw_ip> 3366 Plain

I will repost with the required data when I get everything configured again.

Thanks.

-

Removing xcp-persistent-database resource

Hi

I am experimenting with XOSTOR. I removed the SR related to XOSTOR and then started deleting the resources from the command line using linstor resource-definition delete <rd_name>. I am now left with only xcp-persistent-database, but I am not able to delete it because it is always in use by the host that is the linstor-controller. Can anyone suggest how I can delete this resource?

I did read this reply from @ronan-a: https://xcp-ng.org/forum/topic/5361/xostor-hyperconvergence-preview/205

As per this, I ran wipefs -a -f /dev/<disk> on the disks which were previously part of XOSTOR, but I don't see them in the XOA UI when I go to create a new XOSTOR.

Thanks.

-

RE: Three-node Networking for XOSTOR

@ha_tu_su

@olivierlambert @ronan-a : Any insight into this?

-

RE: How to associate a VIF to a Network

@martinpedros

If you want to create an internal network for the VMs to talk to each other without that traffic going out of a physical NIC, a private network is what you are looking for.

Take a look at this post: https://xcp-ng.org/forum/topic/7309/option-to-create-host-only-private-network

-

RE: Import from VMWare vSAN fails with 404 Not Found

@Danp

Thanks for the info. I will retest after the next update to XOA and let you know. Thanks for all the wonderful work that you guys are doing.

Cheers!!

-

RE: Import from VMWare vSAN fails with 404 Not Found

Hello

I am using XOA which comes with 15 days of trial which is still ongoing. Current version: 5.95.0 - XOA build: 20240401. I updated it using the latest branch.

After @456Q's response I tested migration from esxi to xcp with a VM which was not on vsan and this migration worked perfectly.

-

RE: Import from VMWare vSAN fails with 404 Not Found

Hello

Yes I am using vsan on esxi. I am using the latest XOA. I read some forum posts to confirm this capability exists in the migration tool but failed to find a definitive answer.

I don't have a NFS storage but can use the clonezilla method if vsan is not supported at the moment. Thanks for your response @456Q .

-

RE: Three-node Networking for XOSTOR

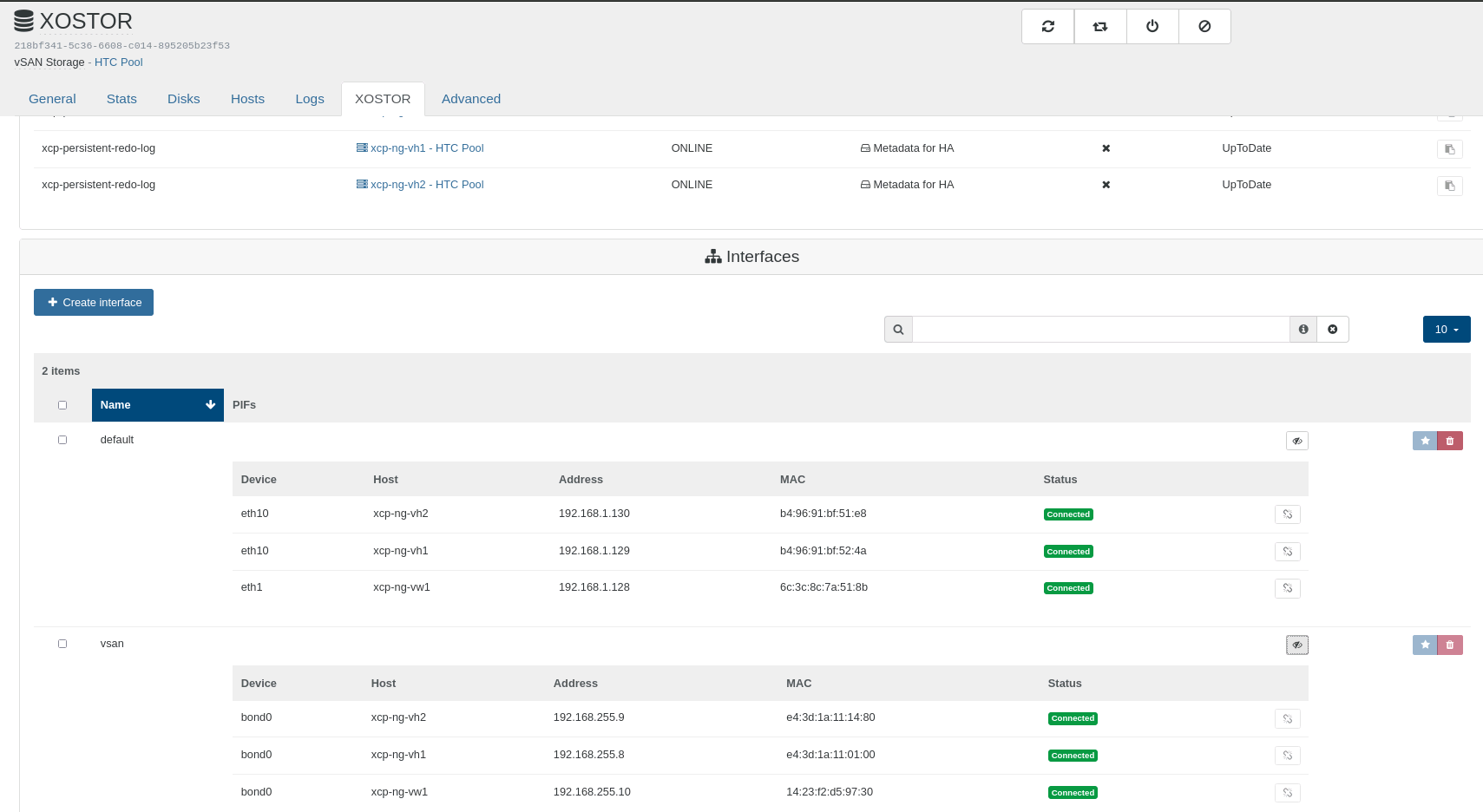

I have executed above steps and currently my XOSTOR network looks like this:

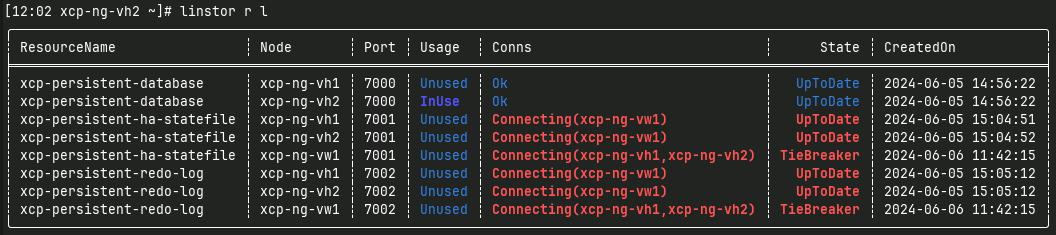

When I set 'vsan' as my preferred NIC, I get below output on linstor-controller node:

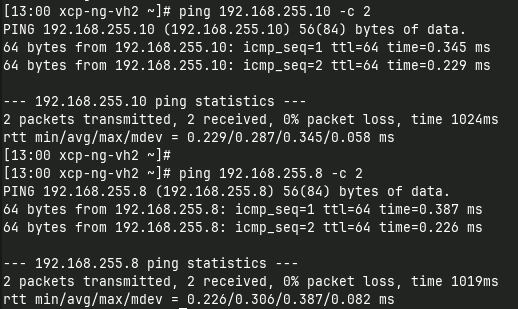

Connectivity between all 3 nodes is present:

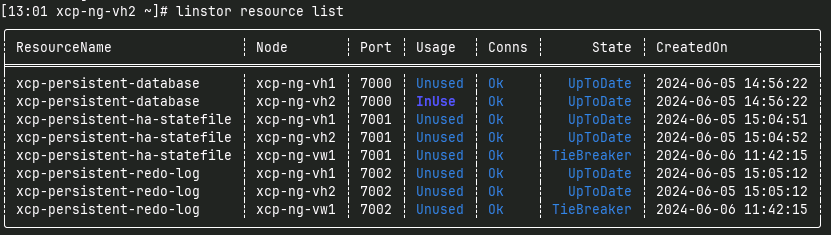

When I set 'default' as my preferred NIC, I get correct output on linstor-controller:

@ronan-a: Can you help out here?

Thanks.

-

Import from VMWare vSAN fails with 404 Not Found

Hello

I am testing importing a VM from a VMWare cluster with hosts having ESXi version 7.0.3.

I followed the process from here:

https://www.starwindsoftware.com/blog/why-you-should-consider-xcp-ng-as-an-alternative-to-vmware

since this has screenshots which helped.

The VM already had a snapshot taken a month before and was not powered on.

After I click import, the task fails with the log below:

{ "id": "0lx2q9g9l", "properties": { "name": "importing vms vm-10006", "userId": "20dd4394-9f78-4eec-b5fb-52c33f1d83ca", "total": 1, "done": 0, "progress": 0 }, "start": 1717646306457, "status": "failure", "updatedAt": 1717646350874, "tasks": [ { "id": "iepm5pwfirp", "properties": { "name": "importing vm vm-10006", "done": 1, "progress": 100 }, "start": 1717646306458, "status": "failure", "tasks": [ { "id": "nusjc9sexcs", "properties": { "name": "connecting to 172.16.199.17" }, "start": 1717646306458, "status": "success", "end": 1717646306535, "result": { "_events": {}, "_eventsCount": 0 } }, { "id": "p9pua2ou99i", "properties": { "name": "get metadata of vm-10006" }, "start": 1717646306535, "status": "success", "end": 1717646308351, "result": { "name_label": "CA1", "memory": 4294967296, "nCpus": 2, "guestToolsInstalled": false, "guestId": "windows9Server64Guest", "guestFullName": [ "Microsoft Windows Server 2016 or later (64-bit)" ], "firmware": "uefi", "powerState": "poweredOff", "snapshots": { "lastUID": "2", "current": "2", "numSnapshots": "1", "snapshots": [ { "uid": "2", "filename": "CA1-Snapshot2.vmsn", "displayName": "VM Snapshot 5%2f8%2f2024, 6:00:35 PM", "description": "Before configuring Reverse Proxy", "createTimeHigh": "399344", "createTimeLow": "2036996630", "numDisks": "1", "disks": [ { "node": "scsi0:0", "capacity": 53687091200, "isFull": true, "uid": "282eeab9", "fileName": "vsan://52c3a7299b70f961-4464791c3837099a/d0c68a65-b66d-a07f-ae1c-bc97e1f3cb00", "parentId": "ffffffff", "vmdkFormat": "VMFS", "nameLabel": "vsan://52c3a7299b70f961-4464791c3837099a/d0c68a65-b66d-a07f-ae1c-bc97e1f3cb00", "datastore": "vsanDatastore", "path": "d0c68a65-2e21-8c59-9012-bc97e1f3cb00", "descriptionLabel": " from esxi" } ] } ] }, "disks": [ { "capacity": 53687091200, "isFull": false, "uid": "bb46d6c1", "fileName": "vsan://52c3a7299b70f961-4464791c3837099a/4b9d4c66-d886-9a92-03f2-bc97e1f3cb00", "parentId": "282eeab9", "parentFileName": "CA1.vmdk", "vmdkFormat": 
"VSANSPARSE", "nameLabel": "vsan://52c3a7299b70f961-4464791c3837099a/4b9d4c66-d886-9a92-03f2-bc97e1f3cb00", "datastore": "vsanDatastore", "path": "d0c68a65-2e21-8c59-9012-bc97e1f3cb00", "descriptionLabel": " from esxi", "node": "scsi0:0" } ], "networks": [ { "label": "UDH", "macAddress": "00:50:56:a4:6e:a2", "isGenerated": false }, { "label": "PDH", "macAddress": "00:50:56:a4:10:ed", "isGenerated": false } ] } }, { "id": "zp5lyelzz1f", "properties": { "name": "creating VM on XCP side" }, "start": 1717646308351, "status": "success", "end": 1717646315400, "result": { "uuid": "728671ef-4fa8-4ea8-2963-b876a4d6d0e0", "allowed_operations": [ "changing_NVRAM", "changing_dynamic_range", "changing_shadow_memory", "changing_static_range", "make_into_template", "migrate_send", "destroy", "export", "start_on", "start", "clone", "copy", "snapshot" ], "current_operations": {}, "name_label": "CA1", "name_description": "from esxi -- source guest id :windows9Server64Guest -- template used:Windows Server 2019 (64-bit)", "power_state": "Halted", "user_version": 1, "is_a_template": false, "is_default_template": false, "suspend_VDI": "OpaqueRef:NULL", "resident_on": "OpaqueRef:NULL", "scheduled_to_be_resident_on": "OpaqueRef:NULL", "affinity": "OpaqueRef:NULL", "memory_overhead": 37748736, "memory_target": 0, "memory_static_max": 4294967296, "memory_dynamic_max": 4294967296, "memory_dynamic_min": 4294967296, "memory_static_min": 4294967296, "VCPUs_params": {}, "VCPUs_max": 2, "VCPUs_at_startup": 2, "actions_after_shutdown": "destroy", "actions_after_reboot": "restart", "actions_after_crash": "restart", "consoles": [], "VIFs": [], "VBDs": [], "VUSBs": [], "crash_dumps": [], "VTPMs": [], "PV_bootloader": "", "PV_kernel": "", "PV_ramdisk": "", "PV_args": "", "PV_bootloader_args": "", "PV_legacy_args": "", "HVM_boot_policy": "BIOS order", "HVM_boot_params": { "order": "cdn" }, "HVM_shadow_multiplier": 1, "platform": { "videoram": "8", "hpet": "true", "secureboot": "auto", 
"viridian_apic_assist": "true", "apic": "true", "device_id": "0002", "cores-per-socket": "2", "viridian_crash_ctl": "true", "pae": "true", "vga": "std", "nx": "true", "viridian_time_ref_count": "true", "viridian_stimer": "true", "viridian": "true", "acpi": "1", "viridian_reference_tsc": "true" }, "PCI_bus": "", "other_config": { "mac_seed": "fb483627-5717-c52d-42be-cc434c2d87e6", "vgpu_pci": "", "base_template_name": "Other install media", "install-methods": "cdrom" }, "domid": -1, "domarch": "", "last_boot_CPU_flags": {}, "is_control_domain": false, "metrics": "OpaqueRef:f5c2ebc6-3b7d-49fc-9692-bee2aba8f123", "guest_metrics": "OpaqueRef:NULL", "last_booted_record": "", "recommendations": "<restrictions><restriction field=\"memory-static-max\" max=\"137438953472\" /><restriction field=\"vcpus-max\" max=\"32\" /><restriction property=\"number-of-vbds\" max=\"255\" /><restriction property=\"number-of-vifs\" max=\"7\" /><restriction field=\"has-vendor-device\" value=\"false\" /></restrictions>", "xenstore_data": {}, "ha_always_run": false, "ha_restart_priority": "", "is_a_snapshot": false, "snapshot_of": "OpaqueRef:NULL", "snapshots": [], "snapshot_time": "19700101T00:00:00Z", "transportable_snapshot_id": "", "blobs": {}, "tags": [], "blocked_operations": {}, "snapshot_info": {}, "snapshot_metadata": "", "parent": "OpaqueRef:NULL", "children": [], "bios_strings": {}, "protection_policy": "OpaqueRef:NULL", "is_snapshot_from_vmpp": false, "snapshot_schedule": "OpaqueRef:NULL", "is_vmss_snapshot": false, "appliance": "OpaqueRef:NULL", "start_delay": 0, "shutdown_delay": 0, "order": 0, "VGPUs": [], "attached_PCIs": [], "suspend_SR": "OpaqueRef:NULL", "version": 0, "generation_id": "0:0", "hardware_platform_version": 0, "has_vendor_device": false, "requires_reboot": false, "reference_label": "", "domain_type": "hvm", "NVRAM": {} } }, { "id": "wnneakglc7r", "properties": { "name": "build disks and snapshots chains for vm-10006" }, "start": 1717646315401, "status": 
"success", "end": 1717646315401, "result": { "scsi0:0": [ { "node": "scsi0:0", "capacity": 53687091200, "isFull": true, "uid": "282eeab9", "fileName": "vsan://52c3a7299b70f961-4464791c3837099a/d0c68a65-b66d-a07f-ae1c-bc97e1f3cb00", "parentId": "ffffffff", "vmdkFormat": "VMFS", "nameLabel": "vsan://52c3a7299b70f961-4464791c3837099a/d0c68a65-b66d-a07f-ae1c-bc97e1f3cb00", "datastore": "vsanDatastore", "path": "d0c68a65-2e21-8c59-9012-bc97e1f3cb00", "descriptionLabel": " from esxi" }, { "capacity": 53687091200, "isFull": false, "uid": "bb46d6c1", "fileName": "vsan://52c3a7299b70f961-4464791c3837099a/4b9d4c66-d886-9a92-03f2-bc97e1f3cb00", "parentId": "282eeab9", "parentFileName": "CA1.vmdk", "vmdkFormat": "VSANSPARSE", "nameLabel": "vsan://52c3a7299b70f961-4464791c3837099a/4b9d4c66-d886-9a92-03f2-bc97e1f3cb00", "datastore": "vsanDatastore", "path": "d0c68a65-2e21-8c59-9012-bc97e1f3cb00", "descriptionLabel": " from esxi", "node": "scsi0:0" } ] } }, { "id": "dt84vks1pa", "properties": { "name": "Cold import of disks scsi0:0" }, "start": 1717646315401, "status": "failure", "end": 1717646325555, "result": { "cause": { "size": 0 }, "message": "404 Not Found https://172.16.199.17/folder/d0c68a65-2e21-8c59-9012-bc97e1f3cb00/vsan://52c3a7299b70f961-4464791c3837099a/d0c68a65-b66d-a07f-ae1c-bc97e1f3cb00?dcPath=Datacenter1&dsName=vsanDatastore", "name": "Error", "stack": "Error: 404 Not Found https://172.16.199.17/folder/d0c68a65-2e21-8c59-9012-bc97e1f3cb00/vsan://52c3a7299b70f961-4464791c3837099a/d0c68a65-b66d-a07f-ae1c-bc97e1f3cb00?dcPath=Datacenter1&dsName=vsanDatastore\n at Esxi.#fetch (file:///usr/local/lib/node_modules/xo-server/node_modules/@xen-orchestra/vmware-explorer/esxi.mjs:106:21)\n at Esxi.download (file:///usr/local/lib/node_modules/xo-server/node_modules/@xen-orchestra/vmware-explorer/esxi.mjs:139:21)\n at DatastoreSoapEsxi.getSize (file:///usr/local/lib/node_modules/xo-server/node_modules/@xen-orchestra/vmware-explorer/DatastoreSoapEsxi.mjs:19:17)\n at 
VhdEsxiRaw.readHeaderAndFooter (file:///usr/local/lib/node_modules/xo-server/node_modules/@xen-orchestra/vmware-explorer/VhdEsxiRaw.mjs:54:20)\n at Function.open (file:///usr/local/lib/node_modules/xo-server/node_modules/@xen-orchestra/vmware-explorer/VhdEsxiRaw.mjs:31:5)\n at importDiskChain (file:///usr/local/lib/node_modules/xo-server/src/xo-mixins/vmware/importDisksfromDatastore.mjs:22:13)\n at Task.runInside (/usr/local/lib/node_modules/xo-server/node_modules/@vates/task/index.js:175:22)\n at Task.run (/usr/local/lib/node_modules/xo-server/node_modules/@vates/task/index.js:158:20)" } } ], "end": 1717646350873, "result": { "cause": { "size": 0 }, "message": "404 Not Found https://172.16.199.17/folder/d0c68a65-2e21-8c59-9012-bc97e1f3cb00/vsan://52c3a7299b70f961-4464791c3837099a/d0c68a65-b66d-a07f-ae1c-bc97e1f3cb00?dcPath=Datacenter1&dsName=vsanDatastore", "name": "Error", "stack": "Error: 404 Not Found https://172.16.199.17/folder/d0c68a65-2e21-8c59-9012-bc97e1f3cb00/vsan://52c3a7299b70f961-4464791c3837099a/d0c68a65-b66d-a07f-ae1c-bc97e1f3cb00?dcPath=Datacenter1&dsName=vsanDatastore\n at Esxi.#fetch (file:///usr/local/lib/node_modules/xo-server/node_modules/@xen-orchestra/vmware-explorer/esxi.mjs:106:21)\n at Esxi.download (file:///usr/local/lib/node_modules/xo-server/node_modules/@xen-orchestra/vmware-explorer/esxi.mjs:139:21)\n at DatastoreSoapEsxi.getSize (file:///usr/local/lib/node_modules/xo-server/node_modules/@xen-orchestra/vmware-explorer/DatastoreSoapEsxi.mjs:19:17)\n at VhdEsxiRaw.readHeaderAndFooter (file:///usr/local/lib/node_modules/xo-server/node_modules/@xen-orchestra/vmware-explorer/VhdEsxiRaw.mjs:54:20)\n at Function.open (file:///usr/local/lib/node_modules/xo-server/node_modules/@xen-orchestra/vmware-explorer/VhdEsxiRaw.mjs:31:5)\n at importDiskChain (file:///usr/local/lib/node_modules/xo-server/src/xo-mixins/vmware/importDisksfromDatastore.mjs:22:13)\n at Task.runInside 
(/usr/local/lib/node_modules/xo-server/node_modules/@vates/task/index.js:175:22)\n at Task.run (/usr/local/lib/node_modules/xo-server/node_modules/@vates/task/index.js:158:20)" } } ], "end": 1717646350874, "result": { "cause": { "size": 0 }, "succeeded": {}, "message": "404 Not Found https://172.16.199.17/folder/d0c68a65-2e21-8c59-9012-bc97e1f3cb00/vsan://52c3a7299b70f961-4464791c3837099a/d0c68a65-b66d-a07f-ae1c-bc97e1f3cb00?dcPath=Datacenter1&dsName=vsanDatastore", "name": "Error", "stack": "Error: 404 Not Found https://172.16.199.17/folder/d0c68a65-2e21-8c59-9012-bc97e1f3cb00/vsan://52c3a7299b70f961-4464791c3837099a/d0c68a65-b66d-a07f-ae1c-bc97e1f3cb00?dcPath=Datacenter1&dsName=vsanDatastore\n at Esxi.#fetch (file:///usr/local/lib/node_modules/xo-server/node_modules/@xen-orchestra/vmware-explorer/esxi.mjs:106:21)\n at Esxi.download (file:///usr/local/lib/node_modules/xo-server/node_modules/@xen-orchestra/vmware-explorer/esxi.mjs:139:21)\n at DatastoreSoapEsxi.getSize (file:///usr/local/lib/node_modules/xo-server/node_modules/@xen-orchestra/vmware-explorer/DatastoreSoapEsxi.mjs:19:17)\n at VhdEsxiRaw.readHeaderAndFooter (file:///usr/local/lib/node_modules/xo-server/node_modules/@xen-orchestra/vmware-explorer/VhdEsxiRaw.mjs:54:20)\n at Function.open (file:///usr/local/lib/node_modules/xo-server/node_modules/@xen-orchestra/vmware-explorer/VhdEsxiRaw.mjs:31:5)\n at importDiskChain (file:///usr/local/lib/node_modules/xo-server/src/xo-mixins/vmware/importDisksfromDatastore.mjs:22:13)\n at Task.runInside (/usr/local/lib/node_modules/xo-server/node_modules/@vates/task/index.js:175:22)\n at Task.run (/usr/local/lib/node_modules/xo-server/node_modules/@vates/task/index.js:158:20)" } } -
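Since the same error message is repeated at every level of the log above, it can help to pull out only the innermost failed step. The sketch below walks a task tree of that shape and reports failed tasks that have no failed subtask. The function name `failed_leaf_tasks` is made up for illustration, and the sample is a trimmed stand-in that copies only the nesting, task names and statuses from the log, with the error message shortened.

```python
import json


def failed_leaf_tasks(task):
    """Yield (name, message) for failed tasks that have no failed subtask."""
    failed_children = [
        t for t in task.get("tasks", []) if t.get("status") == "failure"
    ]
    if task.get("status") == "failure" and not failed_children:
        name = task.get("properties", {}).get("name", task.get("id"))
        result = task.get("result")
        message = result.get("message", "") if isinstance(result, dict) else ""
        yield name, message
    for child in failed_children:
        yield from failed_leaf_tasks(child)


# Trimmed stand-in with the same nesting as the real log (message shortened).
sample = json.loads("""
{
  "properties": {"name": "importing vms vm-10006"},
  "status": "failure",
  "tasks": [
    {
      "properties": {"name": "importing vm vm-10006"},
      "status": "failure",
      "tasks": [
        {"properties": {"name": "connecting to 172.16.199.17"}, "status": "success"},
        {"properties": {"name": "get metadata of vm-10006"}, "status": "success"},
        {"properties": {"name": "creating VM on XCP side"}, "status": "success"},
        {
          "properties": {"name": "Cold import of disks scsi0:0"},
          "status": "failure",
          "result": {"message": "404 Not Found"}
        }
      ]
    }
  ]
}
""")

if __name__ == "__main__":
    for name, message in failed_leaf_tasks(sample):
        print(f"{name}: {message}")
```

On the real log this points at the "Cold import of disks scsi0:0" step, where the request for the vsan:// disk path returns 404.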

RE: Three-node Networking for XOSTOR

@ha_tu_su

Ok, I have set up everything as I said I would. The only thing I wasn't able to set up was the loopback adapter on the witness node mentioned in step 4. I assigned the storage network IP to a spare physical interface instead. This doesn't change the logic of how everything should work together.

So far, I have created XOSTOR using disks from the 2 main nodes. I have enabled routing and added the necessary static routes on all hosts, enabled HA on the pool, created a test VM and tested migration of that VM between the main nodes. I haven't yet tested HA by disconnecting the network or powering off hosts. I am planning to do this testing in the next 2 days.

@olivierlambert: Can you answer the question on the enterprise support for such topology? And do you see any technical pitfalls with this approach?

I admit I am fairly new to HA stuff related to virtualization, so any feedback from the community is appreciated, just to enlighten me.

Thanks.