@DwightHat I am using xoa from sources and i updated it to the latest commit which seemed to fix it for me..

Posts

-

RE: VDI_IO_ERROR(Device I/O errors) after resizing a VM's disk

-

RE: XCP-ng 8.3 updates announcements and testing

@Greg_E neither. 8.2 is still LTS currently! They haven't switched it yet, but presumably it will be soon!

-

RE: VDI_IO_ERROR(Device I/O errors) after resizing a VM's disk

Woohoo it worked! I updated to latest commit and the backup completed this time. Thanks, and apologies for the noise!

-

VDI_IO_ERROR(Device I/O errors) after resizing a VM's disk

Hi,

I have a CR job that backups a VM. The VM was running low in disk so I resized it up to 100GB from 50GB. Following this resize the backup job for this VM has been failing with Error: VDI_IO_ERROR(Device I/O errors).Is this a known or expected issue following a resize? Do I need to setup a new backup replication job for this?

Thanks,

John

-

RE: Mirror backup with Continuous Replication

@florent said in Mirror backup with Continuous Replication:

Backup from Storage Repository 2 to Backup Repository (Remote)

We are looking at a similar setup. We currently do continuous replication from one host to another host and then want to take a weekly backup off from SR2 effectively to a NAS.

Looking at one of these jobs, there is 31 days retention, and a fullbackup every 20 days. Is there a way I can match only the latest backup automatically so I can off-site just that to the NAS, and it come out as a full-backup, as I don't need to take 31 days worth, just the latest. I could adjust the full-backup interval perhaps to help with this and just take the most recent full backup although I don't think there is a tag for those?

SR2 on the second host also has live VM's running on it as well as being a CR target.

Or is there another way I can achieve this? I could take a backup directly from the running VM/SR1 direct to the NAS but I think that would interfere with the CR snapshot chain?

Thanks,

John

-

Storage Migrate a CR backup chain

Hi,

I have a VM that uses continuous replication to a backup host, it runs nightly, and has 31days of retention. The backup host needs more disk space so I was planning on adding some more disks and configuring the raid array and creating a new SR then storage migrating the VDI's running on here across to the new storage repository, including the CR backups for this VM.I'm not quite sure how to storage migrate the CR snapshot chain which appears as 31 shutdown VM's on the backup host, without breaking the chain. I could migrate each individual VDI but I imagine that might create a full disk on the SR?

I'd like to reclaim the disks from the old SR quickly to reuse them, so ideally don't want to wait 31days..

Is there a way I should be going about doing this?

Thanks,

John

-

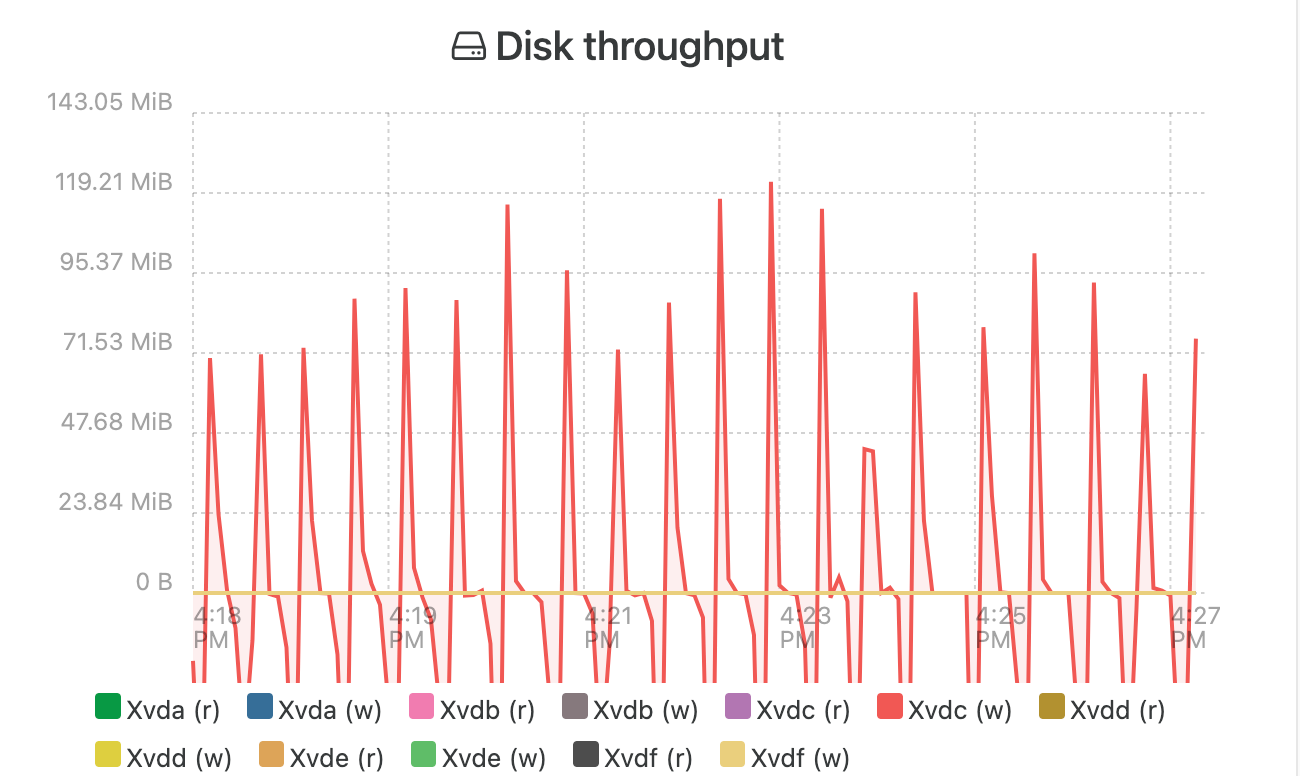

Negative values in stats graphs

Hi,

I am watching a VM that is doing a disk restore and it's Disk throughput stats graph seem to flip / flop between positive and negative values. It did the same for a different partition as well which was restored before this one. Other graphs seem to all be positive. Any known cause of this?Built from sources:

xo-server 5.124.0

xo-web 5.126.0

Xen Orchestra, commit 99e36

Thanks,

John

-

RE: Continuous Replication on a large VM

@Gheppy cool thanks. Good to see some real-world experience with a similar setup!

-

RE: Continuous Replication on a large VM

@olivierlambert Testing is a pain due to the resources required for this (!) and duplicating the setup, particularly as its something we were looking at as a proposal to the client atm.

I meant corruption could occur on boot up of the replicated VM as if it didn't have memory included then it would be booted like it had crashed effectively so there could be some corruption potentially from that effect.

Where does the saving of the memory occur to if its 180GB?! I.e. where do we need that 180GB of resource? Is the VM 'paused' whilst the memory is saved?

cheers

john

-

Continuous Replication on a large VM

Hi,

We have a large Windows Server 2012R2 VM that comes in at 4.5TB of storage across several disks (largest disk is 2TB) and has 180GB of RAM. It runs SQL Server. We were wondering about whether we could enable continuous replication on this to speed up recovery times in a failure situation.CR supports snapshot with memory - I was wondering what it has been tested to and if there is a limit to size on this and whether 180GB of RAM would end up being problematic if we wanted to do it hourly?

Does 'normal' snapshot in this process do quiescing with VSS? I'm a bit nervous that if we can't do the memory CR then we'll end up with corrupted databases effectively when it boots up. Oh this is running on XenServer 7.0 still although I want to get it over to XCP-ng ideally.

Thanks,

John

-

RE: UEFI Bootloader and KB5012170

@christopher-petzel Thanks for those tips. This seemed to work well on two Windows Server 2019 VM's, but not on a Windows 10 VM which sat there saying "Preparing Automatic Repair" for ages, so I reverted back to non-secure boot.

john

-

RE: XCP-ng 7.5.0 final is here

Hm.. I did a toolstack restart and it still had the high memory usage after, but it has now since dropped..

root 1920 0.2 2.0 108860 39516 ? Ssl Aug16 2:39 /usr/sbin/message-switch --config /etc/message-switch.conf

Perhaps it takes a bit of time to clear it out.

-

RE: XCP-ng 7.5.0 final is here

@olivierlambert said in XCP-ng 7.5.0 final is here:

Ah damn! I think you spotted the issue then!

I think that is different to the original problem as there was enough disk space in that instance, but this non-production VM I was using to test doesn't have the space on the source host.

New problem I've come across since doing that failed migration, the message-switch process is now eating approx half of the memory on the server:

[root@host log]# free -m

total used free shared buff/cache available

Mem: 1923 1601 27 12 295 221

Swap: 511 26 485

[root@host log]# ps axuww | grep message

root 1920 0.2 52.4 1094032 1033144 ? Ssl Aug16 2:36 /usr/sbin/message-switch --config /etc/message-switch.conf

root 32669 0.0 0.1 112656 2232 pts/24 S+ 12:43 0:00 grep --color=auto messageAnyone know how to restart that?

-

RE: XCP-ng 7.5.0 final is here

Ok migration of W2K8R2 with 6.2 drivers from XCP-ng 7.5 to XCP-ng 7.5 failed with msg:

Storage_Interface.Does_not_exist(_)

It also crashed the VM.

I think this could be related to https://bugs.xenserver.org/browse/XSO-785 as there is only 10GB free on the source storage repository so I don't think I can actually do the migration because of that (it's recommended to have 2x space available to migrate a VM, something that becomes increasingly harder with large VMs!).

-

RE: XCP-ng 7.5.0 final is here

ok, I've got one running XS 6.2 drivers I can test although it's got 100GB of disk so will take some time to do. Both ends are XCP-ng 7.5. I'll live migrate it and back with this driver first as a test and then try some others, see how it goes.

Edit: this vm is Win 2008R2 also where as previous which failed was 2K12.

-

RE: XCP-ng 7.5.0 final is here

@borzel unfortunately this is a production client server so can't do that easily. The two linux VM's moved over fine. Most of the VM's we have are Linux based also but I might be able to do it with an internal one on a different host but need to see if it can be moved easily as it has multiple network interfaces.

I think the one with the issue I mentioned before had XS7.1 drivers installed.

-

RE: XCP-ng 7.5.0 final is here

I had a live migration issue with windows too.

Secondary host running XCP-ng 7.5, primary XS7.1, live-migrated the Win 2K12 VM from primary host to secondary fine, took 14mins. I updated XS7.1 to XCP-ng 7.5 and installed new Dell BIOS on primary host. Migrate back hung at 99%. I left it about 45mins (3-times as long as the original migrate took) and noted it was still sending data between the two.. I wonder if it was stuck in some form of loop.

The logs on src host had this in logs a lot:

Aug 16 13:55:05 xen52 xenopsd-xc: [debug|xen52|37 |Async.VM.migrate_send R:c6ab32165e47|xenops_server] TASK.signal 1305 = ["Pending",0.99] Aug 16 13:55:05 xen52 xapi: [debug|xen52|389 |org.xen.xapi.xenops.classic events D:333a26fc4942|xenops] Processing event: ["Task","1305"] Aug 16 13:55:05 xen52 xapi: [debug|xen52|389 |org.xen.xapi.xenops.classic events D:333a26fc4942|xenops] xenops event on Task 1305 Aug 16 13:55:05 xen52 xenopsd-xc: [debug|xen52|37 |Async.VM.migrate_send R:c6ab32165e47|xenops] VM = 66ebe30b-2bd2-ae59-c225-54a625655d52; domid = 2; progress = 99 / 100I read a bit on Citrix forums and decided to shutdown the VM. Doing so from XCP-ng center didn't work, so I did it from within the VM.

Upon shutdown it seemed to sort itself, but the VM came up as 'paused' on the primary node. This couldn't be resumed. A force restart from XCP-ng center jsut seemed to hang. I tried to cancel the task with xe task-cancel on hard_reboot and it didn't work so did a xe-toolstack-restart and this reset the VM state back to shutdown. It then booted normally...

Now the secondary host was running an old BIOS still with earlier microcode so not sure if it was related to that in anyway..

john