Xen Orchestra backup RAM consumption still does not look OK in my case, even after downgrading Node.js to 20 and all other dependencies to the versions used in XOA.

I am currently running XO commit "91c5d98489b5981917ca0aabc28ac37acd448396" (feat: release 6.1.1), so I expected the RAM fixes mentioned by @florent to be included.

Despite all of that, the backup jobs were terminated again (Xen Orchestra backup status "interrupted").

The Xen Orchestra log shows:

<--- JS stacktrace --->

FATAL ERROR: Reached heap limit Allocation failed - JavaScript heap out of memory

----- Native stack trace -----

1: 0xb76db1 node::OOMErrorHandler(char const*, v8::OOMDetails const&) [/usr/local/bin/node]

2: 0xee62f0 v8::Utils::ReportOOMFailure(v8::internal::Isolate*, char const*, v8::OOMDetails const&) [/usr/local/bin/node]

3: 0xee65d7 v8::internal::V8::FatalProcessOutOfMemory(v8::internal::Isolate*, char const*, v8::OOMDetails const&) [/usr/local/bin/node]

4: 0x10f82d5 [/usr/local/bin/node]

5: 0x1110158 v8::internal::Heap::CollectGarbage(v8::internal::AllocationSpace, v8::internal::GarbageCollectionReason, v8::GCCallbackFlags) [/usr/local/bin/node]

6: 0x10e6271 v8::internal::HeapAllocator::AllocateRawWithLightRetrySlowPath(int, v8::internal::AllocationType, v8::internal::AllocationOrigin, v8::internal::AllocationAlignment) [/usr/local/bin/node]

7: 0x10e7405 v8::internal::HeapAllocator::AllocateRawWithRetryOrFailSlowPath(int, v8::internal::AllocationType, v8::internal::AllocationOrigin, v8::internal::AllocationAlignment) [/usr/local/bin/node]

8: 0x10c3b26 v8::internal::Factory::AllocateRaw(int, v8::internal::AllocationType, v8::internal::AllocationAlignment) [/usr/local/bin/node]

9: 0x10b529c v8::internal::FactoryBase<v8::internal::Factory>::AllocateRawArray(int, v8::internal::AllocationType) [/usr/local/bin/node]

10: 0x10b5404 v8::internal::FactoryBase<v8::internal::Factory>::NewFixedArrayWithFiller(v8::internal::Handle<v8::internal::Map>, int, v8::internal::Handle<v8::internal::Oddball>, v8::internal::AllocationType) [/usr/local/bin/node]

11: 0x10d1e45 v8::internal::Factory::NewJSArrayStorage(v8::internal::ElementsKind, int, v8::internal::ArrayStorageAllocationMode) [/usr/local/bin/node]

12: 0x10d1f4e v8::internal::Factory::NewJSArray(v8::internal::ElementsKind, int, int, v8::internal::ArrayStorageAllocationMode, v8::internal::AllocationType) [/usr/local/bin/node]

13: 0x12214a9 v8::internal::JsonParser<unsigned char>::BuildJsonArray(v8::internal::JsonParser<unsigned char>::JsonContinuation const&, v8::base::SmallVector<v8::internal::Handle<v8::internal::Object>, 16ul, std::allocator<v8::internal::Handle<v8::internal::Object> > > const&) [/usr/local/bin/node]

14: 0x122c35e [/usr/local/bin/node]

15: 0x122e999 v8::internal::JsonParser<unsigned char>::ParseJson(v8::internal::Handle<v8::internal::Object>) [/usr/local/bin/node]

16: 0xf78171 v8::internal::Builtin_JsonParse(int, unsigned long*, v8::internal::Isolate*) [/usr/local/bin/node]

17: 0x1959df6 [/usr/local/bin/node]

{"level":"error","message":"Forever detected script was killed by signal: SIGABRT"}

{"level":"error","message":"Script restart attempt #1"}

Warning: Ignoring extra certs from `/host-ca.pem`, load failed: error:80000002:system library::No such file or directory

2026-02-10T15:49:15.008Z xo:main WARN could not detect current commit {

error: Error: spawn git ENOENT

at Process.ChildProcess._handle.onexit (node:internal/child_process:285:19)

at onErrorNT (node:internal/child_process:483:16)

at processTicksAndRejections (node:internal/process/task_queues:82:21) {

errno: -2,

code: 'ENOENT',

syscall: 'spawn git',

path: 'git',

spawnargs: [ 'rev-parse', '--short', 'HEAD' ],

cmd: 'git rev-parse --short HEAD'

}

}

2026-02-10T15:49:15.012Z xo:main INFO Starting xo-server v5.196.2 (https://github.com/vatesfr/xen-orchestra/commit/91c5d9848)

2026-02-10T15:49:15.032Z xo:main INFO Configuration loaded.

2026-02-10T15:49:15.036Z xo:main INFO Web server listening on http://[::]:80

2026-02-10T15:49:15.043Z xo:main INFO Web server listening on https://[::]:443

2026-02-10T15:49:15.455Z xo:mixins:hooks WARN start failure {

error: Error: spawn xenstore-read ENOENT

at Process.ChildProcess._handle.onexit (node:internal/child_process:285:19)

at onErrorNT (node:internal/child_process:483:16)

at processTicksAndRejections (node:internal/process/task_queues:82:21) {

errno: -2,

code: 'ENOENT',

syscall: 'spawn xenstore-read',

path: 'xenstore-read',

spawnargs: [ 'vm' ],

cmd: 'xenstore-read vm'

}

}

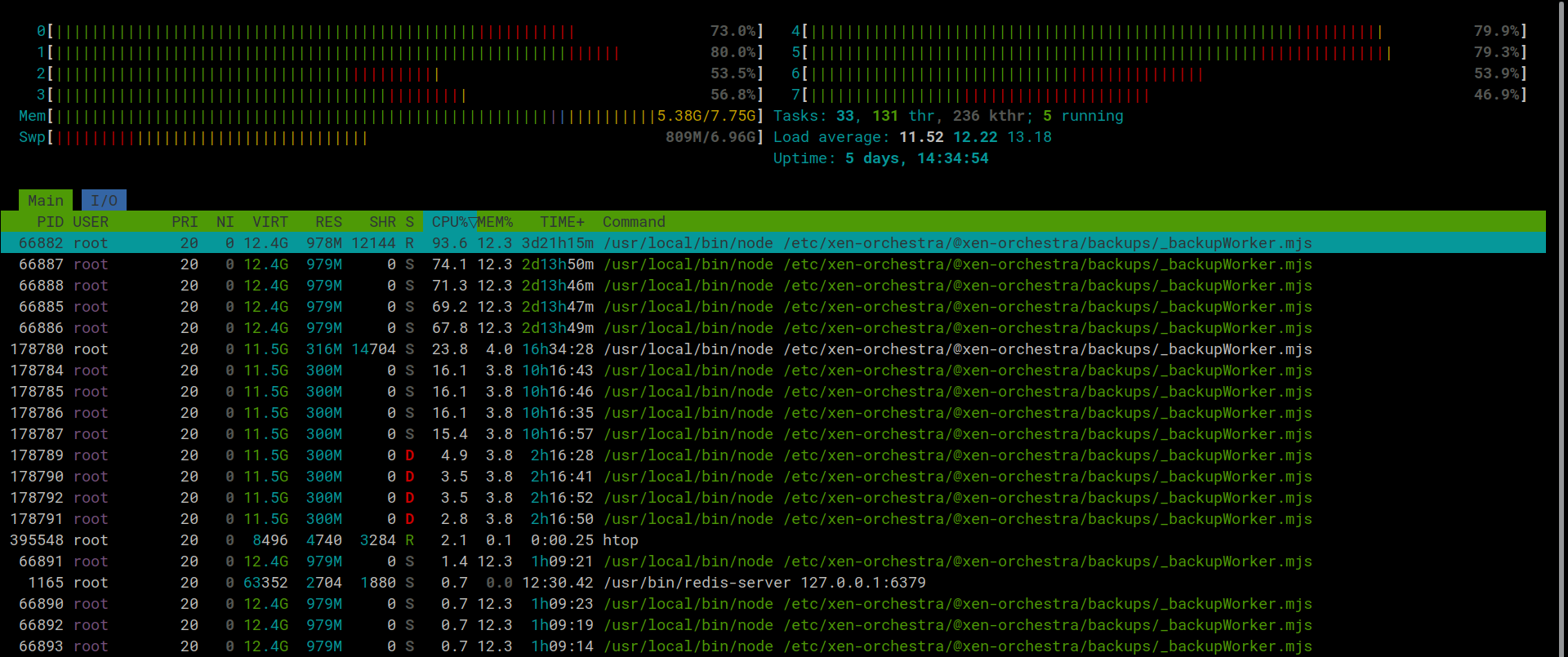

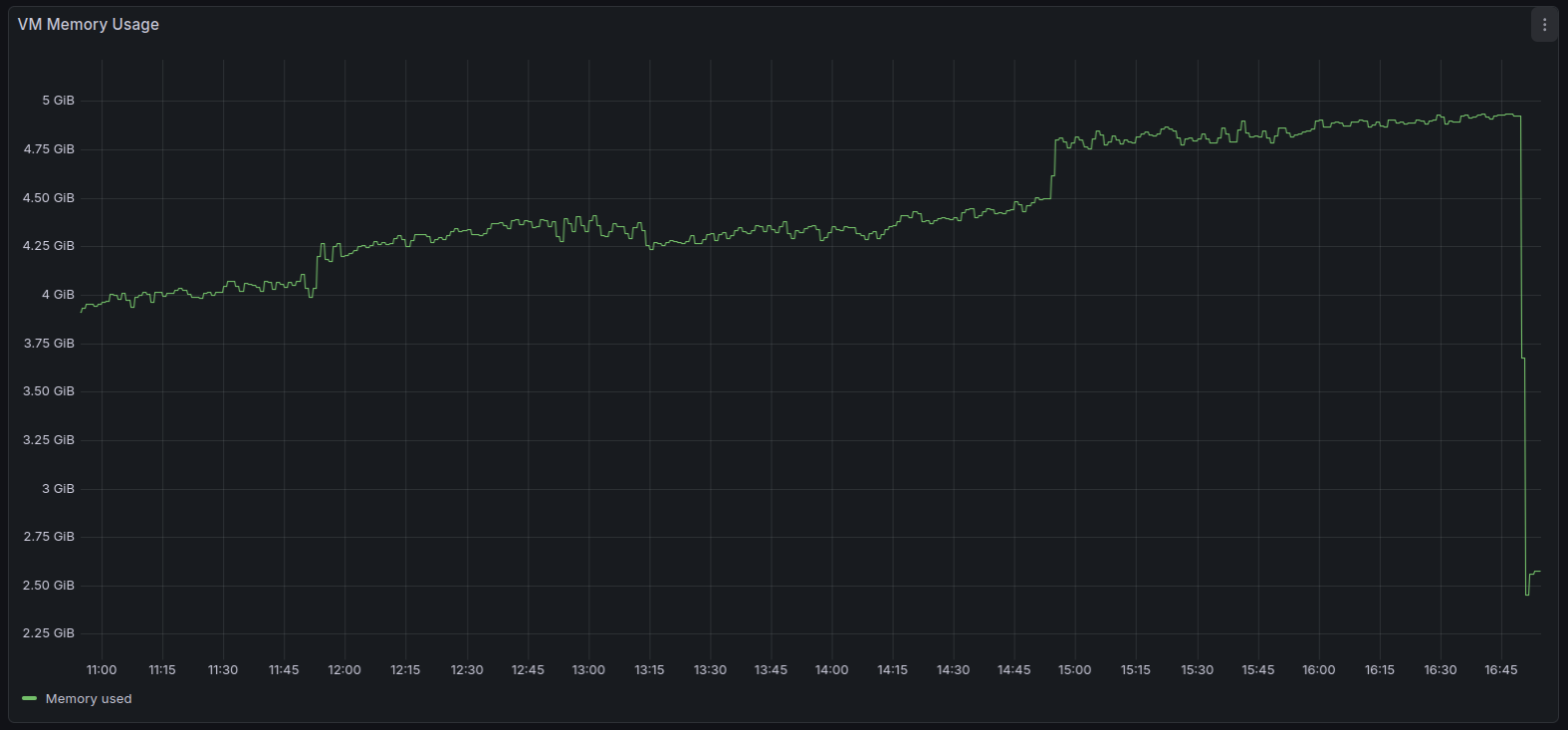

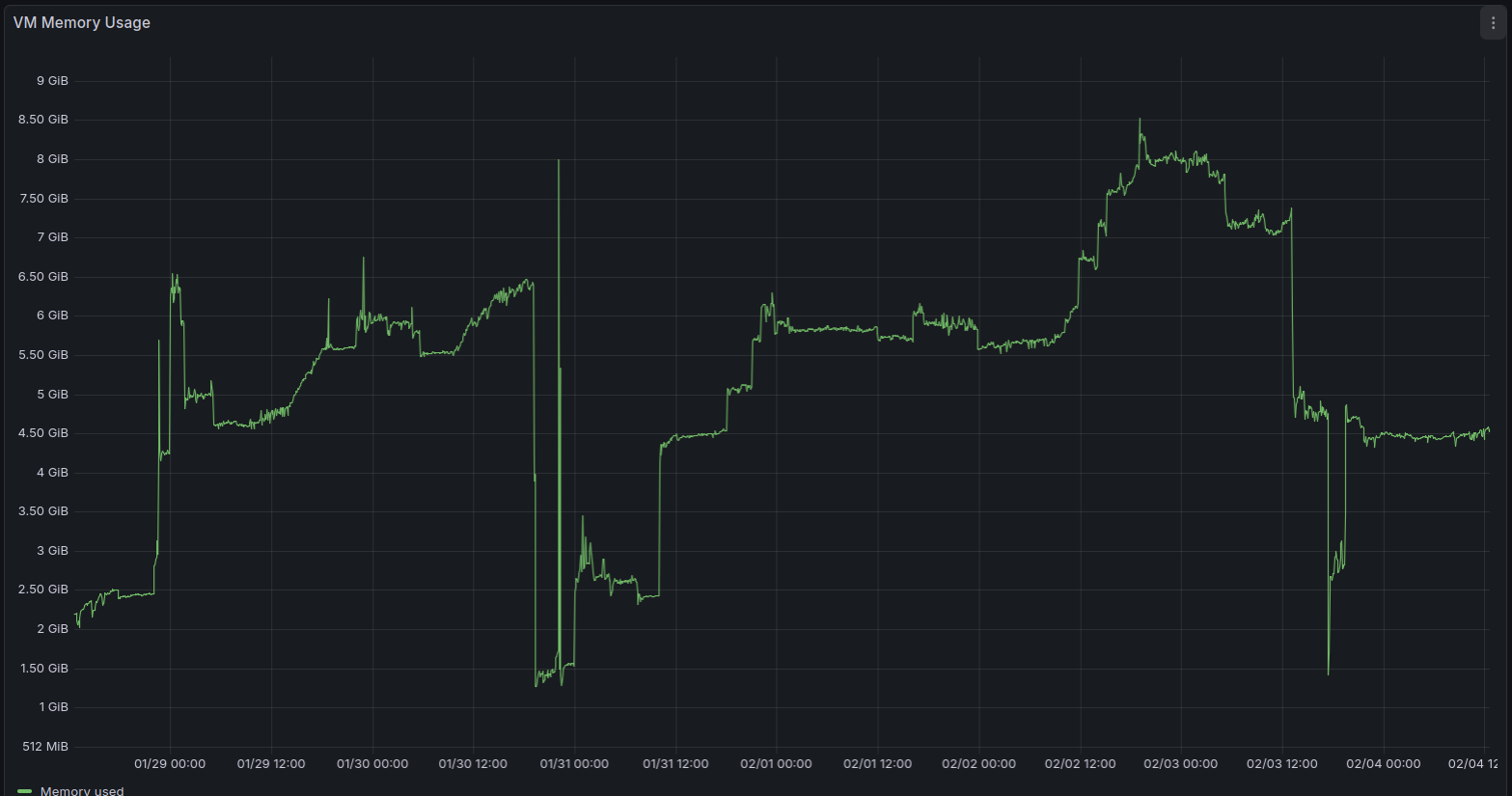

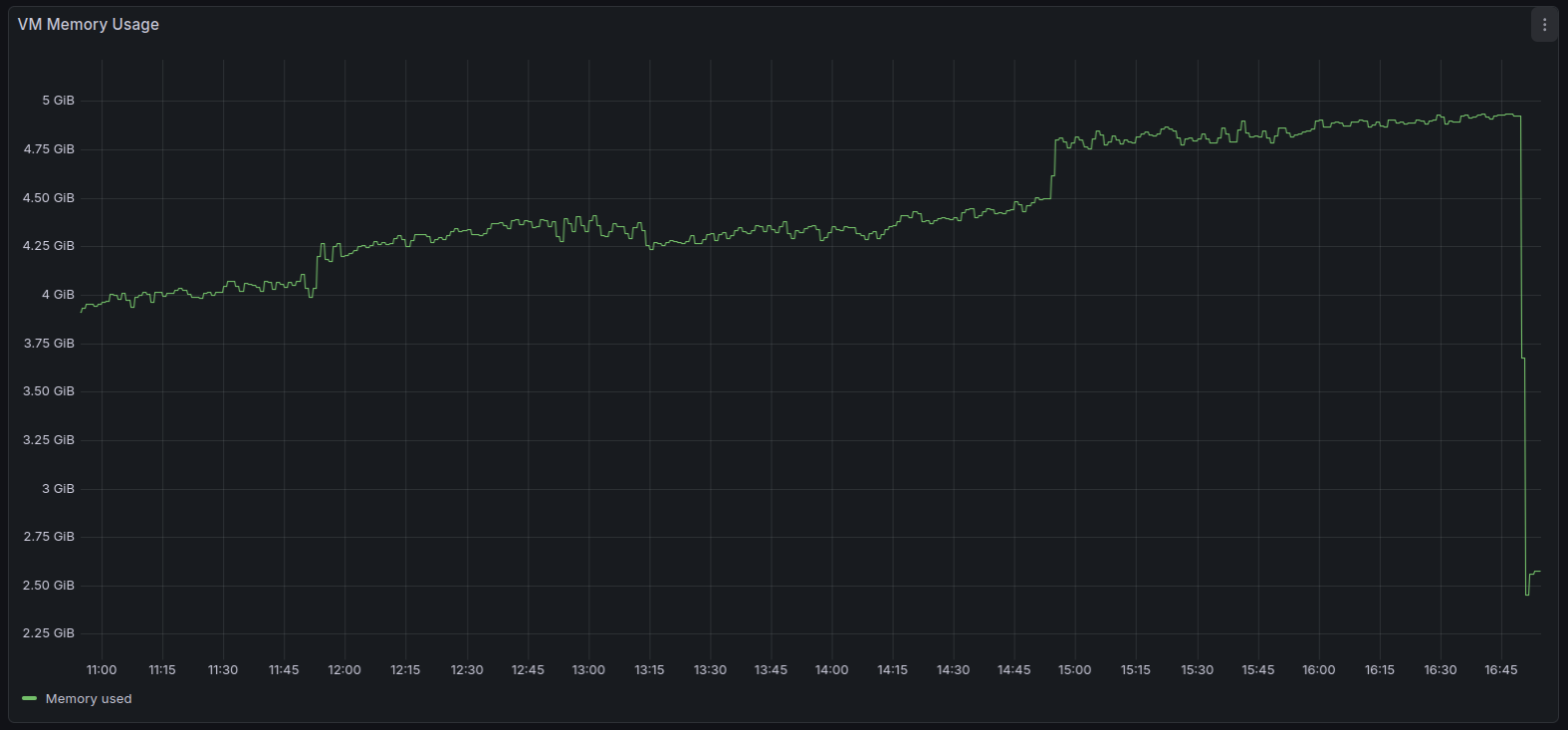

The XO virtual machine's RAM usage climbed again, even after updating to the "feat: release 6.1.1" commit. The VM has 8 GB of RAM, which never gets fully exhausted.

So this seems to be related to the Node.js heap size limit rather than to the VM itself running out of memory.

You can see the exact moment when the backup jobs went into status "interrupted": that is where RAM usage drops.
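One way to check the heap-size suspicion is to compare V8's heap ceiling with the RAM the machine actually has. The following is only a minimal sketch using Node's built-in `v8` and `os` modules. The helper name `heapReport` is made up for illustration, and it has to run with the same Node binary and flags as xo-server for the numbers to be comparable.

```javascript
// Sketch: report V8's heap ceiling next to the machine's total RAM.
// heapReport is a hypothetical helper name, not part of Xen Orchestra.
const v8 = require('node:v8')
const os = require('node:os')

function heapReport() {
  const toMiB = (bytes) => Math.round(bytes / 1024 / 1024)
  const stats = v8.getHeapStatistics()
  return {
    // Maximum heap size V8 will grow to before aborting with an OOM error
    heapLimitMiB: toMiB(stats.heap_size_limit),
    // Heap currently in use by this process
    heapUsedMiB: toMiB(stats.used_heap_size),
    // Total physical memory visible to the OS
    systemTotalMiB: toMiB(os.totalmem()),
  }
}

console.log(heapReport())
```

If `heapLimitMiB` is well below `systemTotalMiB`, the process can abort with "heap out of memory" while the VM still has free RAM. Node's `--max-old-space-size=<MiB>` flag raises that ceiling. Where to set it depends on how xo-server is started in a given installation, which I am not assuming here.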

I am trying to fix these backup issues and am really running out of ideas...

My backup jobs used to run reliably.

Something about RAM usage seems to have changed around the release of XO6, as previously mentioned in this thread.