@andriy.sultanov said in Potential bug with Windows VM backup: "Body Timeout Error":

xe-toolstack-restart

Okay I was able to replicate the issue.

This is the setup that I used and that resulted in the "body timeout error" previously discussed in this thread:

OS: Windows Server 2019 Datacenter

The versions of the packages in question that were used in order to replicate the issue (XCP-ng 8.3, fully upgraded):

[11:58 dat-xcpng-test01 ~]# rpm -q xapi-core

xapi-core-25.27.0-2.2.xcpng8.3.x86_64

[11:59 dat-xcpng-test01 ~]# rpm -q qcow-stream-tool

qcow-stream-tool-25.27.0-2.2.xcpng8.3.x86_64

[11:59 dat-xcpng-test01 ~]# rpm -q vhd-tool

vhd-tool-25.27.0-2.2.xcpng8.3.x86_64

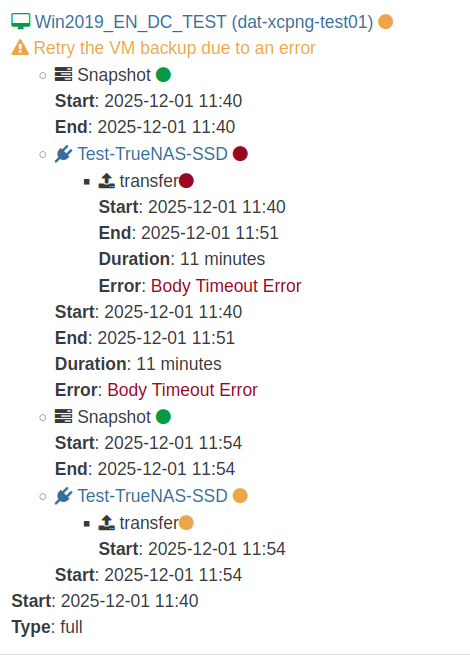

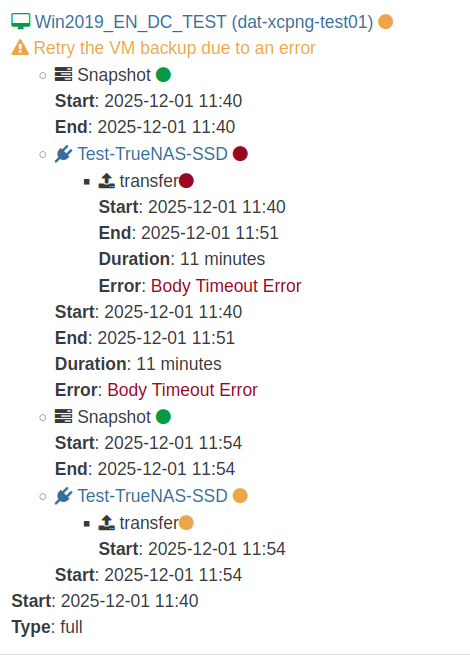

Result:

Backup log:

{

"data": {

"mode": "full",

"reportWhen": "failure"

},

"id": "1764585634255",

"jobId": "b19ed05e-a34f-4fab-b267-1723a7195f4e",

"jobName": "Full-Backup-Test",

"message": "backup",

"scheduleId": "579d937a-cf57-47b2-8cde-4e8325422b15",

"start": 1764585634255,

"status": "failure",

"infos": [

{

"data": {

"vms": [

"36c492a8-e321-ef2b-94dc-a14e5757d711"

]

},

"message": "vms"

}

],

"tasks": [

{

"data": {

"type": "VM",

"id": "36c492a8-e321-ef2b-94dc-a14e5757d711",

"name_label": "Win2019_EN_DC_TEST"

},

"id": "1764585635692",

"message": "backup VM",

"start": 1764585635692,

"status": "failure",

"tasks": [

{

"id": "1764585635919",

"message": "snapshot",

"start": 1764585635919,

"status": "success",

"end": 1764585644161,

"result": "0f548c1f-ce5c-56e3-0259-9c59b7851a17"

},

{

"data": {

"id": "f1bc8d14-10dd-4440-bb1d-409b91f3b550",

"type": "remote",

"isFull": true

},

"id": "1764585644192",

"message": "export",

"start": 1764585644192,

"status": "failure",

"tasks": [

{

"id": "1764585644201",

"message": "transfer",

"start": 1764585644201,

"status": "failure",

"end": 1764586308921,

"result": {

"name": "BodyTimeoutError",

"code": "UND_ERR_BODY_TIMEOUT",

"message": "Body Timeout Error",

"stack": "BodyTimeoutError: Body Timeout Error\n at FastTimer.onParserTimeout [as _onTimeout] (/opt/xo/xo-builds/xen-orchestra-202511080402/node_modules/undici/lib/dispatcher/client-h1.js:646:28)\n at Timeout.onTick [as _onTimeout] (/opt/xo/xo-builds/xen-orchestra-202511080402/node_modules/undici/lib/util/timers.js:162:13)\n at listOnTimeout (node:internal/timers:588:17)\n at process.processTimers (node:internal/timers:523:7)"

}

}

],

"end": 1764586308922,

"result": {

"name": "BodyTimeoutError",

"code": "UND_ERR_BODY_TIMEOUT",

"message": "Body Timeout Error",

"stack": "BodyTimeoutError: Body Timeout Error\n at FastTimer.onParserTimeout [as _onTimeout] (/opt/xo/xo-builds/xen-orchestra-202511080402/node_modules/undici/lib/dispatcher/client-h1.js:646:28)\n at Timeout.onTick [as _onTimeout] (/opt/xo/xo-builds/xen-orchestra-202511080402/node_modules/undici/lib/util/timers.js:162:13)\n at listOnTimeout (node:internal/timers:588:17)\n at process.processTimers (node:internal/timers:523:7)"

}

},

{

"id": "1764586443440",

"message": "clean-vm",

"start": 1764586443440,

"status": "success",

"end": 1764586443459,

"result": {

"merge": false

}

},

{

"id": "1764586443624",

"message": "snapshot",

"start": 1764586443624,

"status": "success",

"end": 1764586451966,

"result": "c3e9736e-d6eb-3669-c7b8-f603333a83bf"

},

{

"data": {

"id": "f1bc8d14-10dd-4440-bb1d-409b91f3b550",

"type": "remote",

"isFull": true

},

"id": "1764586452003",

"message": "export",

"start": 1764586452003,

"status": "success",

"tasks": [

{

"id": "1764586452008",

"message": "transfer",

"start": 1764586452008,

"status": "success",

"end": 1764586686887,

"result": {

"size": 10464489322

}

}

],

"end": 1764586686900

},

{

"id": "1764586690122",

"message": "clean-vm",

"start": 1764586690122,

"status": "success",

"end": 1764586690140,

"result": {

"merge": false

}

}

],

"warnings": [

{

"data": {

"attempt": 1,

"error": "Body Timeout Error"

},

"message": "Retry the VM backup due to an error"

}

],

"end": 1764586690142

}

],

"end": 1764586690143

}

I then enabled your test repository and installed the packages that you mentioned:

[12:01 dat-xcpng-test01 ~]# rpm -q xapi-core

xapi-core-25.27.0-2.3.0.xvafix.1.xcpng8.3.x86_64

[12:08 dat-xcpng-test01 ~]# rpm -q vhd-tool

vhd-tool-25.27.0-2.3.0.xvafix.1.xcpng8.3.x86_64

[12:08 dat-xcpng-test01 ~]# rpm -q qcow-stream-tool

qcow-stream-tool-25.27.0-2.3.0.xvafix.1.xcpng8.3.x86_64

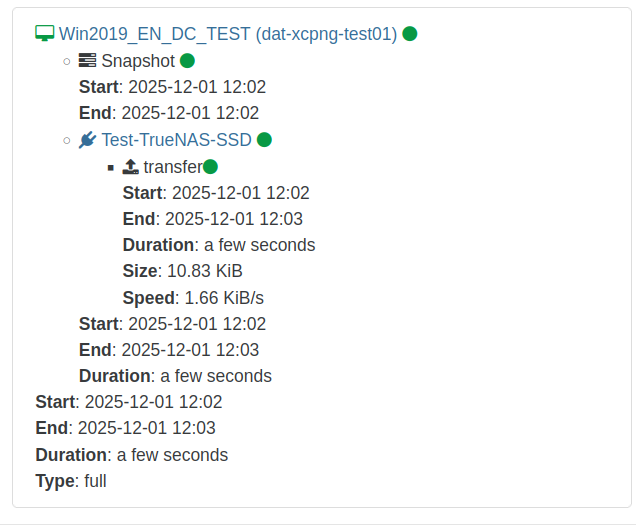

I restarted tool-stack and re-ran the backup job.

Unfortunately it did not solve the issue and made the backup behave very strangely:

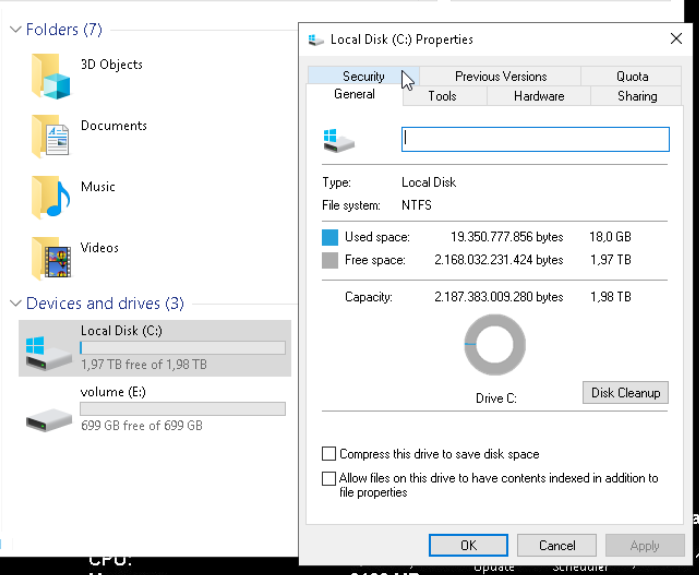

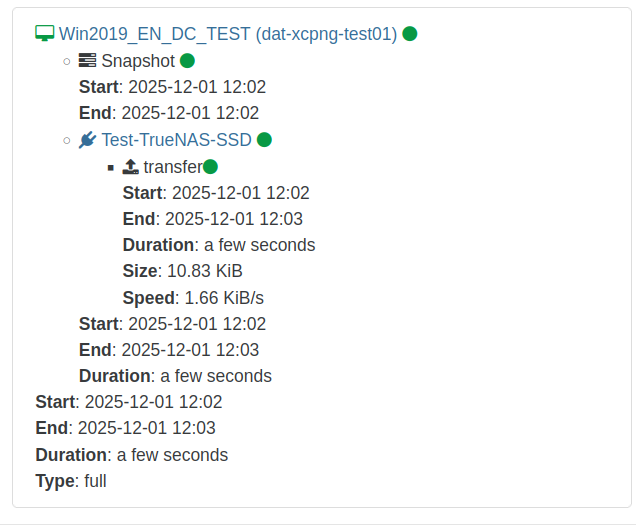

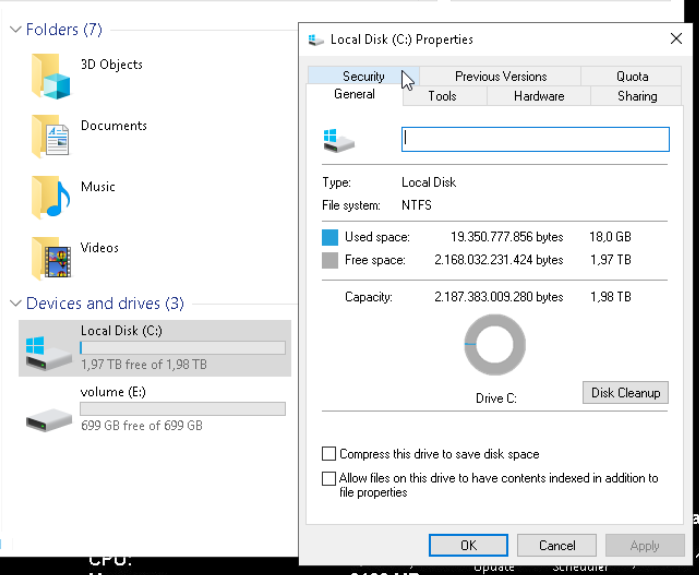

The backup job ran only a few seconds and reported that it was "successful". But only 10.83KiB were transferred. There are 18GB used space on this VM. So the data unfortunately was not transferred by the backup job.

Here is the backup log:

{

"data": {

"mode": "full",

"reportWhen": "failure"

},

"id": "1764586964999",

"jobId": "b19ed05e-a34f-4fab-b267-1723a7195f4e",

"jobName": "Full-Backup-Test",

"message": "backup",

"scheduleId": "579d937a-cf57-47b2-8cde-4e8325422b15",

"start": 1764586964999,

"status": "success",

"infos": [

{

"data": {

"vms": [

"36c492a8-e321-ef2b-94dc-a14e5757d711"

]

},

"message": "vms"

}

],

"tasks": [

{

"data": {

"type": "VM",

"id": "36c492a8-e321-ef2b-94dc-a14e5757d711",

"name_label": "Win2019_EN_DC_TEST"

},

"id": "1764586966983",

"message": "backup VM",

"start": 1764586966983,

"status": "success",

"tasks": [

{

"id": "1764586967194",

"message": "snapshot",

"start": 1764586967194,

"status": "success",

"end": 1764586975429,

"result": "ebe5c4e2-5746-9cb3-7df6-701774a679b5"

},

{

"data": {

"id": "f1bc8d14-10dd-4440-bb1d-409b91f3b550",

"type": "remote",

"isFull": true

},

"id": "1764586975453",

"message": "export",

"start": 1764586975453,

"status": "success",

"tasks": [

{

"id": "1764586975473",

"message": "transfer",

"start": 1764586975473,

"status": "success",

"end": 1764586981992,

"result": {

"size": 11093

}

}

],

"end": 1764586982054

},

{

"id": "1764586985271",

"message": "clean-vm",

"start": 1764586985271,

"status": "success",

"end": 1764586985290,

"result": {

"merge": false

}

}

],

"end": 1764586985291

}

],

"end": 1764586985292

}

If you need me to test something else or if I should provide some log file from the XCP-ng system please let me know.

Best regards