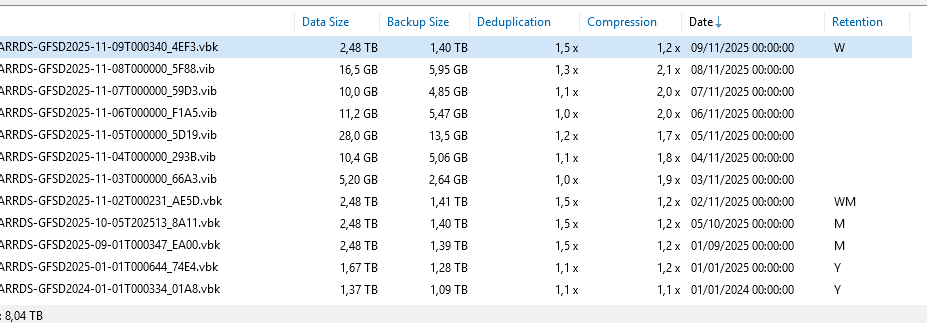

just to #brag

Deployment of the worker is okay (one big Rocky Linux VM with default settings: 6 vCPU, 6 GB RAM, 100 GB VDI).

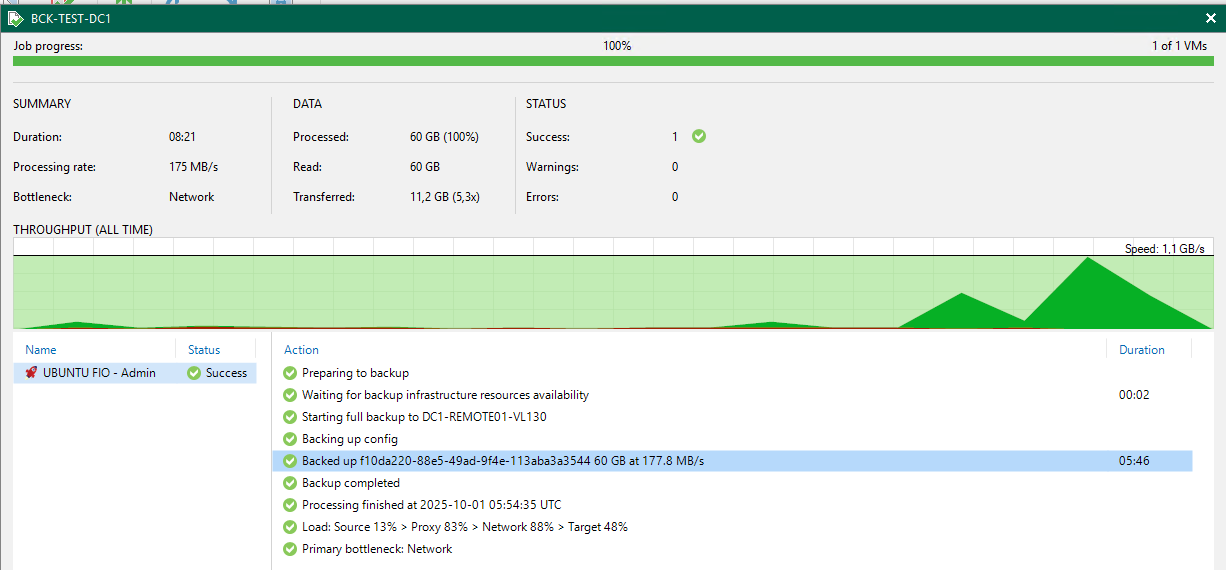

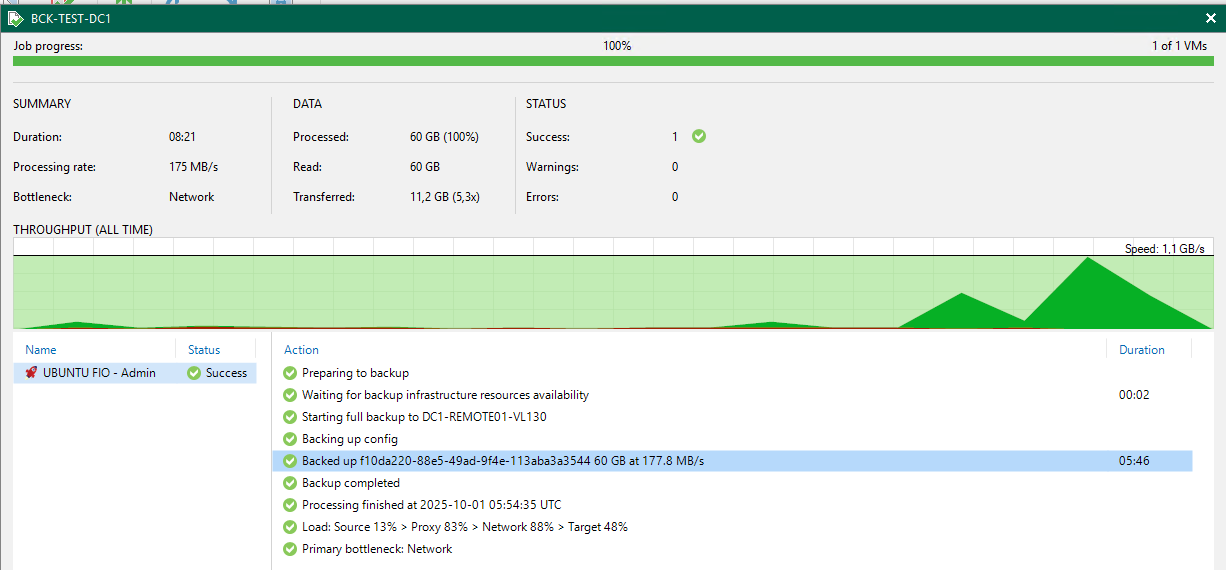

First backup is fine, with decent speed! (to a MinIO S3 hosted on XCP-ng)

Will keep testing.

Hey all,

We are proud of our new setup: a full XCP-ng hosting solution we racked in a datacenter today.

This is the production node; tomorrow I'll post the replica node!

XCP-ng 8.3, HPE hardware obviously, and we are preparing full API-driven automation of client provisioning (from switch VLANs to firewall public IPs and automatic VM deployment).

This needs a "Vates Inside" sticker. #vent

@sluflyer06 and I wish HPE would be added too

Is there anywhere we can check the backlog / work in progress / to-do list for XO6?

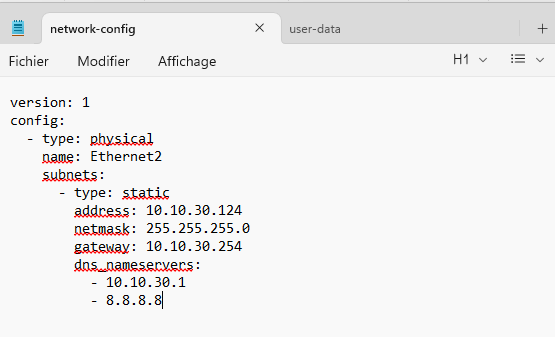

I stuck to `version: 1` in my working configuration.

I had to rename my "Ethernet 2" NIC to "Ethernet2" (without the space).

You have to use the exact NIC name from the template for this to work.
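For reference, here is a minimal sketch that builds such a network config (cloud-init `version: 1` format) in Python and prints it as JSON, which YAML parsers should accept as-is. The NIC name, addresses and the "no space" check are illustrative assumptions: I only know that renaming to `Ethernet2` worked here, not that cloudbase-init formally rejects names with spaces.

```python
import json


def build_network_config(nic_name: str, address: str, gateway: str, dns: list[str]) -> dict:
    """Build a cloud-init network config (version 1) for one static NIC.

    nic_name must match the NIC name in the template exactly, e.g. "Ethernet2".
    """
    if " " in nic_name:
        # Assumption based on this thread: "Ethernet 2" did not work, "Ethernet2" did
        raise ValueError(f"NIC name {nic_name!r} contains a space; rename it in the template")
    return {
        "version": 1,
        "config": [
            {
                "type": "physical",
                "name": nic_name,
                "subnets": [
                    {
                        "type": "static",
                        "address": address,  # CIDR notation, e.g. 192.0.2.10/24
                        "gateway": gateway,
                        "dns_nameservers": dns,
                    }
                ],
            }
        ],
    }


if __name__ == "__main__":
    cfg = build_network_config("Ethernet2", "192.0.2.10/24", "192.0.2.1", ["192.0.2.53"])
    # JSON is (practically) a subset of YAML, so this can be pasted as network-config
    print(json.dumps(cfg, indent=2))
```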

@MajorP93 Throw in multiple garbage collections during snapshot creation and removal for backups on an XOSTOR SR, and these SR scans really get in the way.

Hi,

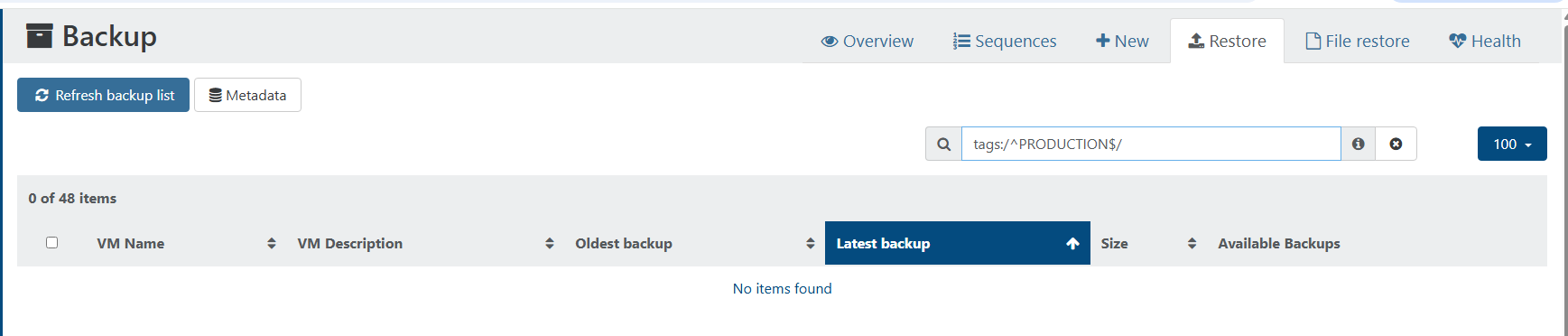

Smart backups are wonderful for managing the selection of VMs to back up.

When we browse the RESTORE section, it would be cool to see the tags again and to be able to filter by them.

I'd like an "all VMs restorable with this particular tag" type of filter; hope I'm clear.
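To make the request concrete, here is a rough sketch of the filter semantics I have in mind. `RestorePoint` and its fields are hypothetical, not XO's data model: the function keeps only VMs carrying a given tag and lists their restore points newest first.

```python
from dataclasses import dataclass, field


@dataclass
class RestorePoint:
    """Hypothetical restore point record (not XO's actual data model)."""
    vm_name: str
    timestamp: str  # ISO 8601, so string order equals chronological order
    tags: set[str] = field(default_factory=set)


def restorable_with_tag(points: list[RestorePoint], tag: str) -> dict[str, list[RestorePoint]]:
    """Group restore points by VM, keeping only VMs that carry `tag`."""
    result: dict[str, list[RestorePoint]] = {}
    for p in points:
        if tag in p.tags:
            result.setdefault(p.vm_name, []).append(p)
    # Newest first, like a restore list would usually show them
    for vm_points in result.values():
        vm_points.sort(key=lambda p: p.timestamp, reverse=True)
    return result


if __name__ == "__main__":
    points = [
        RestorePoint("web01", "2025-01-01T02:00", {"prod"}),
        RestorePoint("web01", "2025-01-02T02:00", {"prod"}),
        RestorePoint("db01", "2025-01-01T02:00", {"dev"}),
    ]
    for vm, vm_points in restorable_with_tag(points, "prod").items():
        print(vm, [p.timestamp for p in vm_points])
```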

Perhaps something to add to XO6 ?

Could we have a way to know which backup is part of LTR (long-term retention)?

In Veeam B&R, when doing LTR/GFS, a letter such as W (weekly), M (monthly) or Y (yearly) flags this in the UI.

That's purely cosmetic, but practical.
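A sketch of how such flags could be computed, assuming a backup is flagged W, M or Y when it is the latest one of its ISO week, month or year. That is a common GFS convention, but XO's actual LTR selection rules may well differ.

```python
from datetime import date


def gfs_labels(backup_dates: list[date]) -> dict[date, str]:
    """Flag each backup with W/M/Y if it is the latest of its ISO week/month/year."""
    week: dict[tuple[int, int], date] = {}
    month: dict[tuple[int, int], date] = {}
    year: dict[int, date] = {}
    for d in sorted(backup_dates):
        iso_year, iso_week, _ = d.isocalendar()
        # Iterating in ascending order, later dates overwrite earlier ones
        week[(iso_year, iso_week)] = d
        month[(d.year, d.month)] = d
        year[d.year] = d
    kept = {"W": set(week.values()), "M": set(month.values()), "Y": set(year.values())}
    return {d: "".join(flag for flag, days in kept.items() if d in days) for d in backup_dates}


if __name__ == "__main__":
    backups = [date(2025, 1, 30), date(2025, 1, 31), date(2025, 2, 1)]
    for d, flags in gfs_labels(backups).items():
        print(d, flags or "-")
```

Note that the most recent backup always gets every flag, since nothing newer exists yet; a real UI would probably treat the current period as provisional.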

@Bastien-Nollet Okay, I'll do that tonight and report back.

@Bastien-Nollet said in backup mail report says INTERRUPTED but it's not ?:

file packages/xo-server-backup-reports/dist/index.js by a

Modification done, will give feedback.

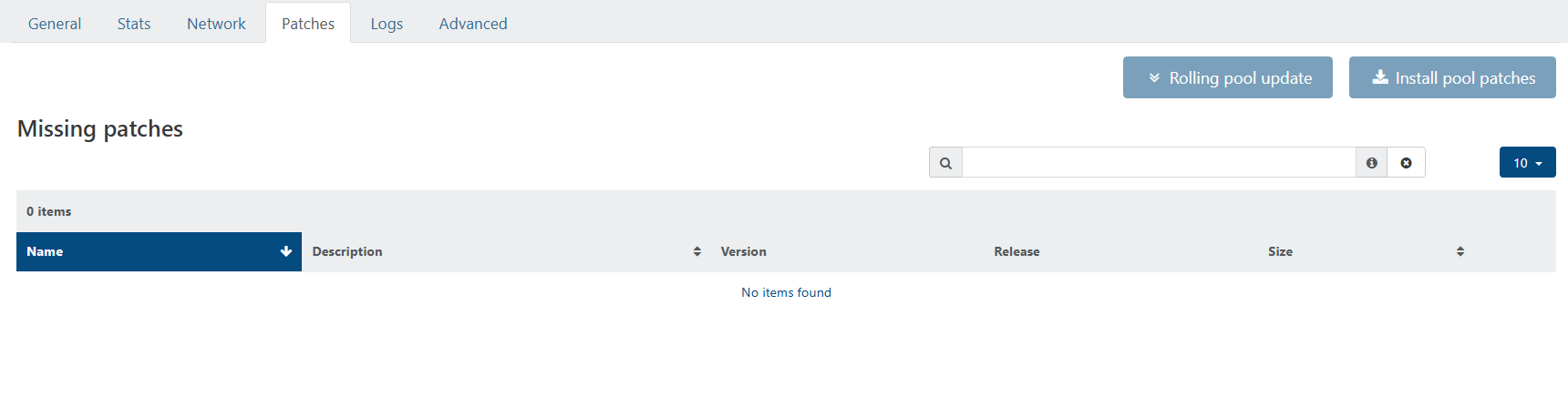

@gduperrey We had the XOA update alert and upgraded to XOA 6.1.0,

but there's no sign of XCP-ng host updates?

When patches are available, they usually pop up on their own. Is there something to do on the CLI now?

EDIT: my bad, we had a DNS resolution problem... I now see a bunch of updates available.
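If anyone else hits this, a quick way to rule out DNS is to check that the update server resolves, both from XOA and from each host. This is a minimal sketch using only the standard library; which hostname you need to test is an assumption on my side (I'd try `updates.xcp-ng.org`).

```python
import socket


def resolves(hostname: str) -> bool:
    """Return True if the local resolver can resolve `hostname`."""
    try:
        socket.getaddrinfo(hostname, None)
        return True
    except (socket.gaierror, UnicodeError):
        # gaierror: resolution failed; UnicodeError: invalid hostname label
        return False
```

Usage: `print(resolves("updates.xcp-ng.org"))` should print `True` on a machine with working DNS and outbound access.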

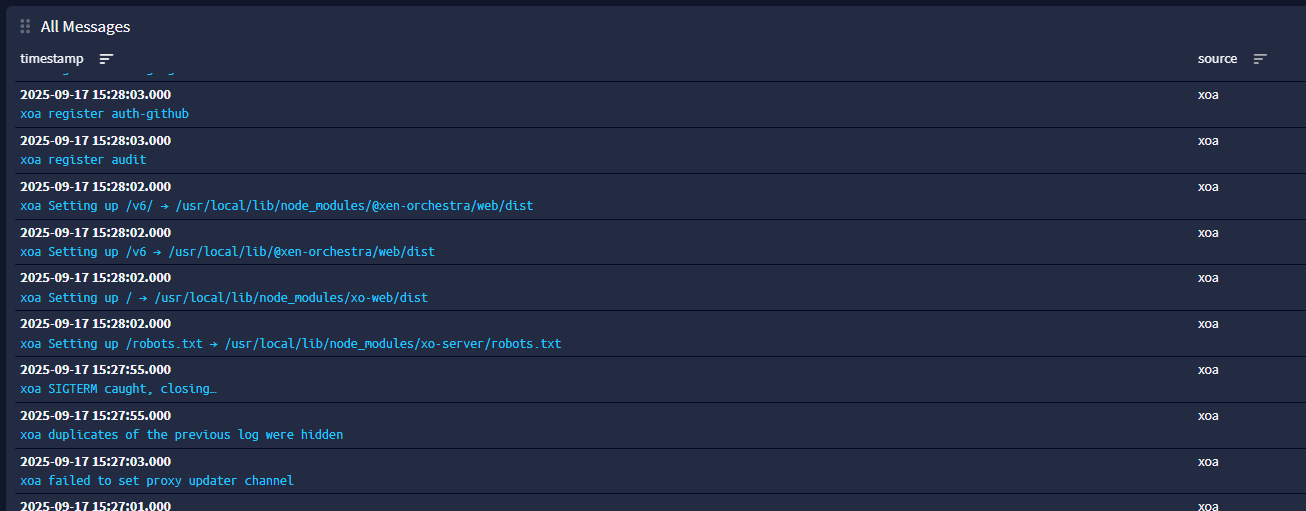

@Forza I didn't try, as my default Graylog input was UDP and worked with the hosts...

But guys, that was it: in TCP mode, it works. I quickly set up a TCP input, and voilà.
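To check a Graylog TCP input on its own, here is a minimal sketch that sends one RFC 5424 syslog line over TCP with newline framing (assumed to be what the input expects by default). The server name, port and app name are placeholders, not values from this setup.

```python
import socket
from datetime import datetime, timezone


def format_syslog(message: str, hostname: str, app: str,
                  facility: int = 1, severity: int = 6) -> str:
    """Format an RFC 5424 line. PRI = facility * 8 + severity (user.info -> 14)."""
    pri = facility * 8 + severity
    timestamp = datetime.now(timezone.utc).isoformat()
    # <PRI>VERSION TIMESTAMP HOSTNAME APP-NAME PROCID MSGID STRUCTURED-DATA MSG
    return f"<{pri}>1 {timestamp} {hostname} {app} - - - {message}"


def send_syslog_tcp(server: str, port: int, line: str) -> None:
    """Send one syslog line over TCP, terminated by a newline (non-transparent framing)."""
    with socket.create_connection((server, port), timeout=5) as sock:
        sock.sendall(line.encode("utf-8") + b"\n")
```

Usage: `send_syslog_tcp("graylog.example", 1514, format_syslog("test from dom0", "xcp-host1", "tcp-check"))`, then look for the message in the input's search view.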

@MK.ultra I don't think so; it's working without it for me.

@tmk Hi!

Many thanks, your modified Python file did the trick: my static IP address now works as intended.

I can confirm this works on Windows Server 2025 as well.

@MajorP93 I guess so. If someone from the Vates team could explain why it happens so frequently, perhaps it would enlighten us.

@iamsumeshks As I said on Reddit, it seems you are good to go.

Monitor your NICs' bandwidth in the host stats to make sure the traffic goes over the right PIF.

I guess PREFNIC is the NIC DRBD uses.
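Besides the XO stats graphs, here is a rough sketch to sample per-interface throughput from `/proc/net/dev` in dom0. Which `ethX` device backs your storage PIF is something to check on your own host; nothing here is XOSTOR- or DRBD-specific.

```python
import time


def read_net_bytes(text: str) -> dict[str, tuple[int, int]]:
    """Parse /proc/net/dev content into {interface: (rx_bytes, tx_bytes)}."""
    counters = {}
    for line in text.splitlines()[2:]:  # skip the two header lines
        if ":" not in line:
            continue
        name, data = line.split(":", 1)
        fields = data.split()
        # Field 0 is received bytes, field 8 is transmitted bytes
        counters[name.strip()] = (int(fields[0]), int(fields[8]))
    return counters


def sample_throughput(interval: float = 1.0) -> dict[str, tuple[float, float]]:
    """Return per-interface (rx, tx) throughput in bytes/s over `interval` seconds."""
    with open("/proc/net/dev") as f:
        before = read_net_bytes(f.read())
    time.sleep(interval)
    with open("/proc/net/dev") as f:
        after = read_net_bytes(f.read())
    return {
        nic: ((after[nic][0] - rx) / interval, (after[nic][1] - tx) / interval)
        for nic, (rx, tx) in before.items()
        if nic in after
    }
```

Running `sample_throughput(5.0)` during a large disk write on the XOSTOR SR should show which interface carries the replication traffic.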

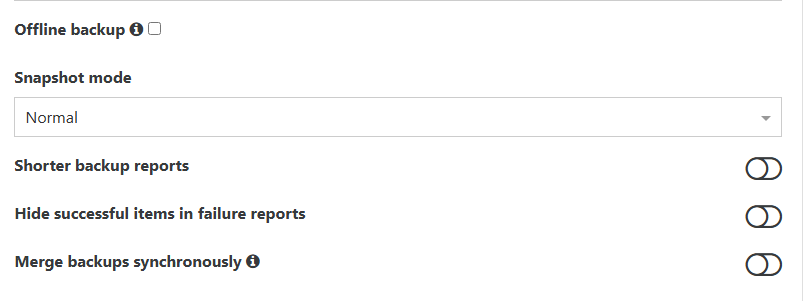

@Bastien-Nollet Where is this "offline backups" option?

I'm aware of the offline snapshot mode, but not of offline backups.

EDIT: my bad, I found it. It's only available for FULL BACKUPS, not DELTA BACKUPS.

@Bastien-Nollet Hi, here is the feedback: having two sequences for two schedules keeps chain continuity and behaves like two enabled schedules on one backup job.

Today's backup was indeed a delta and ran the health check as intended.

Question answered!
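A toy model of the behaviour observed above, not XO's implementation: the delta chain belongs to the job, so any schedule attached to it (whatever sequence it sits in) continues the chain, while a separate job would start over with a full.

```python
from dataclasses import dataclass, field


@dataclass
class BackupJob:
    """Toy model (assumption): one delta chain per job, shared by all its schedules."""
    name: str
    chain: list[str] = field(default_factory=list)

    def run(self, schedule: str, health_check: bool = False) -> str:
        # The first run of the job is a full; later runs extend the same chain
        kind = "full" if not self.chain else "delta"
        self.chain.append(f"{kind}:{schedule}")
        return kind + (" + health check" if health_check else "")


if __name__ == "__main__":
    job = BackupJob("nightly")
    print(job.run("daily"))                            # first run of the job
    print(job.run("daily"))
    print(job.run("weekly-hc", health_check=True))     # same job, other schedule
    print(BackupJob("standalone").run("weekly-hc", health_check=True))
```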

@Bastien-Nollet Ah yeah, sequences are a set of schedules... so I have to put the two schedules in two sequences.

My fear is that it's still not clear whether two separate sequences of schedules will behave like two schedules in one job. See what I mean? (chain continuity of two schedules attached to one job)

I don't want to get a new full backup from the standalone sequence/schedule that has the health check configured.

I'll need to try it and report back here.

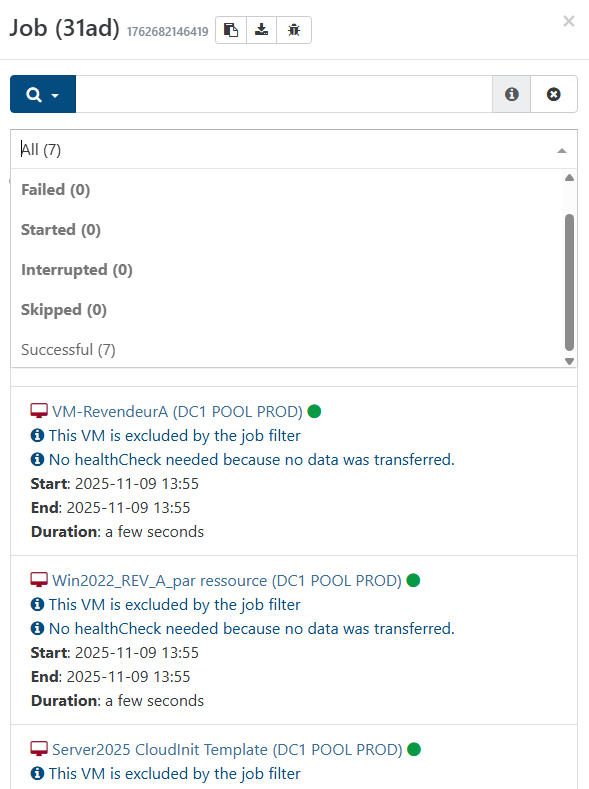

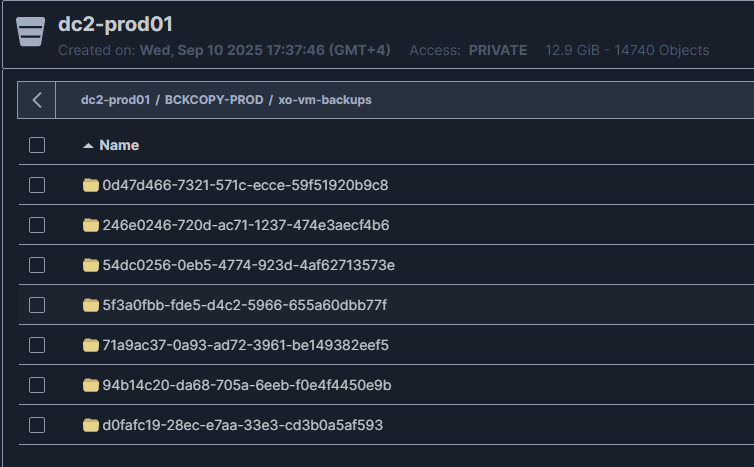

And I noticed a possible bug with the mirror copy job:

Even if I manually select only one VM out of the 7 on the remote, all of them are "checked" by the job but excluded by the job filter.

On the remote, folders for all 7 VMs are created, but only the copied VM's folder contains data.

The copy is OK for the selected VM, but why check the other VMs and create their folders?
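For clarity, this is the behaviour I'd expect, as a rough sketch: apply the VM filter first and only create target folders for the selected VMs. Function and file names are hypothetical, and the placeholder file stands in for the actual backup copy.

```python
import tempfile
from pathlib import Path


def mirror_selected(source_vms: list[str], selected: set[str], target: Path) -> list[str]:
    """Copy only the selected VMs; folders are created only for VMs that pass the filter."""
    copied = []
    for vm in source_vms:
        if vm not in selected:
            continue  # filter first: no folder at all for excluded VMs
        vm_dir = target / vm
        vm_dir.mkdir(parents=True, exist_ok=True)
        (vm_dir / "backup.placeholder").write_text("data")  # stands in for the real copy
        copied.append(vm)
    return copied


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        target = Path(tmp)
        vms = [f"vm{i}" for i in range(1, 8)]  # 7 VMs on the source remote
        print(mirror_selected(vms, {"vm3"}, target))
        print(sorted(p.name for p in target.iterdir()))  # only the selected VM's folder
```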