@Greg_E hi there,

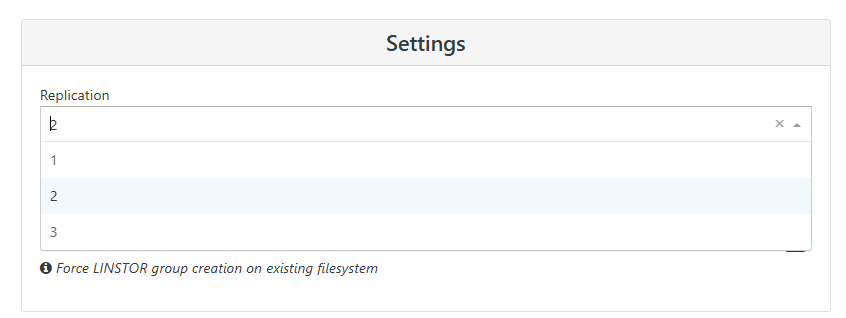

Beyond the minimum number of hosts supported (I think it's 3) and the minimum number of disks for good redundancy (I think it's at least 3 per host, and they must be identical), there is a replication parameter you set when creating an XOSTOR.

It defaults to 2 (you get two copies of each workload), and it directly affects your total usable space: the more copies you keep, the less usable capacity you have.
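To get a feel for the impact, here is a rough back-of-the-envelope sketch. It assumes every replica is a full copy stored on a different host, so usable space is roughly raw space divided by the replication count. DRBD metadata, LVM overhead and thin provisioning are ignored, and the function name is just for illustration, not part of any XOSTOR tooling.

```python
# Rough XOSTOR usable-capacity estimate (a sketch, not an official formula).
# Assumption: each replica is a full copy placed on a different host, so
# usable space ~= raw space / replication count.
# DRBD metadata, LVM overhead and thin provisioning are deliberately ignored.


def usable_capacity_gib(disks_per_host: int, disk_size_gib: float,
                        hosts: int, replication: int = 2) -> float:
    """Estimate usable XOSTOR capacity in GiB (hypothetical helper)."""
    if replication < 1:
        raise ValueError("replication must be at least 1")
    if replication > hosts:
        # Each copy needs its own host, so you cannot keep more copies than hosts.
        raise ValueError("replication cannot exceed the number of hosts")
    raw = disks_per_host * disk_size_gib * hosts
    return raw / replication


if __name__ == "__main__":
    # 3 hosts with 3 x 1000 GiB disks each: 9000 GiB raw.
    for rep in (1, 2, 3):
        print(f"replication={rep}: ~{usable_capacity_gib(3, 1000, 3, rep):.0f} GiB usable")
    # -> ~9000, ~4500 and ~3000 GiB usable
```

So with the default of 2, plan for roughly half of your raw disk space.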

Also, mind the network requirements (for the satellite connections and for DRBD replication):

A minimum of 2 NICs per server, and DRBD replication should run on NICs of at least 10 Gb.
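If you want a quick way to check the link speed of your NICs against that 10 Gb recommendation, something like this sketch works on a Linux host. It assumes the negotiated speed is read from `/sys/class/net/<iface>/speed` (reported in Mb/s, unreadable or -1 when the link is down). The interface names `eth0`/`eth1` are hypothetical, so replace them with yours.

```python
# Sketch: check whether local NICs meet the ~10 Gb/s suggested for DRBD replication.
# Assumptions: Linux host; link speed read from /sys/class/net/<iface>/speed,
# which reports Mb/s and is unreadable or -1 when the link is down.
from pathlib import Path
from typing import Optional

DRBD_MIN_MBPS = 10_000  # 10 Gb/s


def link_speed_mbps(iface: str) -> Optional[int]:
    """Return the negotiated link speed in Mb/s, or None if unknown."""
    try:
        value = int(Path(f"/sys/class/net/{iface}/speed").read_text().strip())
    except (OSError, ValueError):
        return None  # missing interface, link down, or unparsable value
    return value if value > 0 else None


def fast_enough_for_drbd(speed_mbps: Optional[int]) -> bool:
    """True only if the speed is known and at least 10 Gb/s."""
    return speed_mbps is not None and speed_mbps >= DRBD_MIN_MBPS


if __name__ == "__main__":
    for iface in ("eth0", "eth1"):  # hypothetical: management + replication NICs
        speed = link_speed_mbps(iface)
        verdict = "OK" if fast_enough_for_drbd(speed) else "below 10 Gb/s or unknown"
        print(f"{iface}: {speed} Mb/s -> {verdict}")
```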

Tip: the linstor-controller is not always running on the pool master...

Sleep on it.

Also, check the troubleshooting section of the documentation here: