EDIT: DISCLAIMER: I'm using the below config in a home lab, using spare parts, and where there are no financial or service-level consequences if it all burns down.

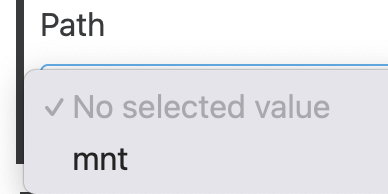

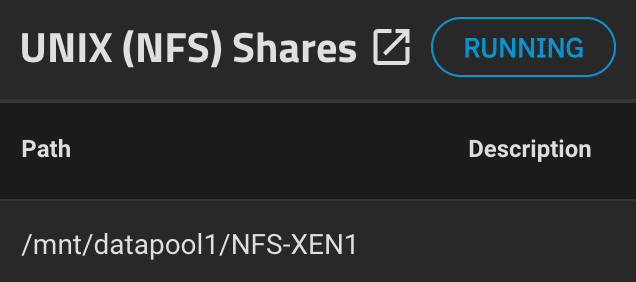

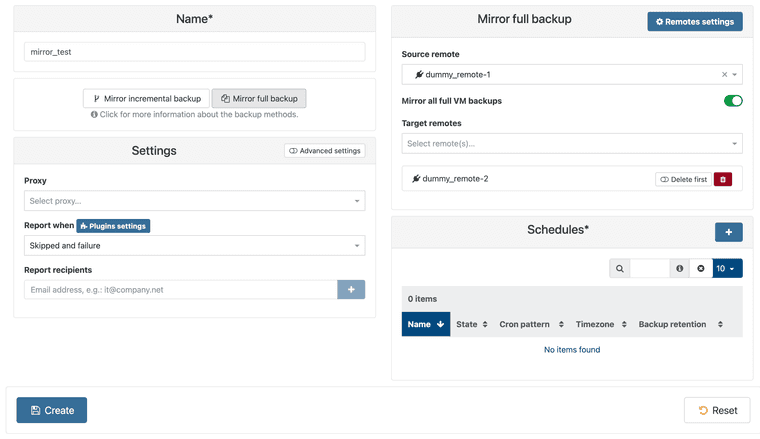

@TechGrips From one of your posts I see your XO is separate from the XCP-ng host (running on VirtualBox). I tried something similar, and went through some of the same pains as you when it came to backup remote (BR) setups. In the end, I used a spare mini-PC running Ubuntu, installed XO from sources, attached a USB drive formatted as EXT4, mounted it (/mnt/USB1 or something) and used that as a 'Local BR'.

I then noticed that XO sometimes reported 0 bytes free on the remote. It turned out that the USB drive was going to sleep. Rather than spend proper time on power management, I wrote a cron job that simply writes the date to a text file on /mnt/USB1 every 5 minutes. Lazy, but it kept the USB drive awake and XO backups worked great.
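For anyone wanting to try the same trick, here is a minimal sketch of that kind of keepalive, assuming Python 3 is available on the XO machine. It is not the exact job described above: the script name, the `keepalive.txt` file name and the `write_keepalive` function are made up for illustration.

```python
#!/usr/bin/env python3
"""Keep a USB backup drive awake by rewriting a small timestamp file."""
import os
import sys
from datetime import datetime
from pathlib import Path


def write_keepalive(mount_dir: str, filename: str = "keepalive.txt") -> Path:
    """Overwrite a small file with the current time and force it to disk."""
    target = Path(mount_dir) / filename  # file name is an assumption
    with open(target, "w") as handle:
        handle.write(datetime.now().isoformat() + "\n")
        handle.flush()
        # fsync asks the OS to push the data to the device rather than
        # leaving it in the page cache, so the drive actually sees a write.
        os.fsync(handle.fileno())
    return target


# Only runs when a directory is passed on the command line.
if __name__ == "__main__" and len(sys.argv) > 1:
    write_keepalive(sys.argv[1])
```

Saved as, say, `/usr/local/bin/usb_keepalive.py` (a hypothetical path), it could be scheduled with a crontab entry such as `*/5 * * * * /usr/bin/python3 /usr/local/bin/usb_keepalive.py /mnt/USB1`.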

The biggest risk is that, if the USB drive disconnects / fails / unmounts, the /mnt/USB1 folder still exists on the root filesystem, and the root filesystem could fill up if a backup job consumes all the free space. So definitely look into controls for that (perhaps quotas, or something more intelligent than the lazy keepawake method I have).
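One possible control, sketched here under assumptions rather than taken from my setup, is a small check that the path really is a mount point and still has room. The function name `backup_target_ready` and the 10 GiB threshold are hypothetical; `os.path.ismount` and `shutil.disk_usage` are standard library calls.

```python
import os
import shutil


def backup_target_ready(mount_dir: str, min_free_bytes: int = 10 * 1024**3) -> bool:
    """Return True only if mount_dir is a real mount point with enough free space."""
    # If the USB drive has dropped off, /mnt/USB1 is just a plain directory
    # on the root filesystem and ismount() returns False.
    if not os.path.ismount(mount_dir):
        return False
    # The threshold is an assumption; pick one that suits your backup sizes.
    return shutil.disk_usage(mount_dir).free >= min_free_bytes
```

Note that this only detects the problem. It does not stop XO from writing to the bare folder, so it would be most useful in a cron job or monitoring check that alerts you when it returns `False`.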

Side note: please remember that many people on this forum speak languages other than English, so sometimes their written messages don't carry the full context or intention. Sorry to see you've felt condescended to. I've found it helps me stay positive to assume that people intend to help, even if a message seems blunt or odd, or if they're asking questions that seem to blame (they're probably just trying to get more info to help). We're all here to help each other, and I've only had good, helpful service from the Vates team (@danp specifically) to date.

Sorry I can't be more helpful.
