Prioritization of VM startup AND shutdown sequencing, PLEASE, in the GUI (XO)! That way, without code, I can finally shut down the servers accessing my DBs before shutting down the DB server VMs themselves, thereby saving myself from table corruption.
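Until something like this exists in the XO GUI, the ordering can be scripted with the `xe` CLI. Below is a minimal sketch, assuming it runs in dom0 on the pool master and that you maintain the tier lists by hand. `APP_VMS`, `DB_VMS`, the `XE` dry-run override and the function names are hypothetical, not XO or XAPI features:

```shell
#!/usr/bin/env bash
# Sketch: tiered VM shutdown/startup with the xe CLI (not an XO feature).
# Hypothetical inputs: APP_VMS and DB_VMS are bash arrays of VM UUIDs.
# Set XE=echo to print the xe commands instead of running them (dry run).

# Run one xe subcommand for each VM UUID, strictly one after another,
# stopping at the first failure.
for_each_vm() {
  local action="$1" uuid
  shift
  for uuid in "$@"; do
    "${XE:-xe}" "$action" uuid="$uuid" || return 1
  done
}

# App servers first, so they close their DB connections, then the DB servers.
ordered_shutdown() {
  for_each_vm vm-shutdown "${APP_VMS[@]}" &&
    for_each_vm vm-shutdown "${DB_VMS[@]}"
}

# Reverse order on the way up: DB servers first, then the app servers.
ordered_startup() {
  for_each_vm vm-start "${DB_VMS[@]}" &&
    for_each_vm vm-start "${APP_VMS[@]}"
}
```

Populate the two arrays with your VM UUIDs, then call `ordered_shutdown` or `ordered_startup`. Because the loop is sequential, the DB tier is only touched after every app VM command has returned. As far as I know, `xe vm-start` returns once the VM is running, not once the database inside it accepts connections, so this ordering alone is not a health check.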

Posts

-

RE: DevOps Megathread: what you need and how we can help!

-

RE: VM qcow2 --> vhd Drive Migration Failures

@AtaxyaNetwork I appreciate the sentiment. I think this one is all on me, as @Danp pointed out.

My VDIs would not register without this step. I'm unsure why, because the error logs within XO were completely blank. Your post, in conjunction with the docs, was extremely helpful though!

-

RE: VM qcow2 --> vhd Drive Migration Failures

Okay. I have cracked this nut!

Answered all my questions by muddling my way through things.

I will document my steps after I finish the next batch of VMs/VDIs. I will say, though, that having figured this out and looked for ways to automate and streamline the process, this is hella labour intensive! Honestly, for many of these VMs it is less work to re-create them from scratch. I wish there were a way as easy as VMware's.

In short, I re-read the docs over and over. Then I followed their advice to use a "staging/buffer" environment to carry out all of the `rsync` and `qemu-img convert` tasks. The tricky part (for me) was locating the `uuid` info etc. for the disk images. I copied the converted images via `rsync` to the local datastore, booted my first VM, and everything checked out. However, I am not able to migrate the VDI off the local `sr-mount` repo and onto my NAS NFS volume. It fails every time.

-

RE: VM qcow2 --> vhd Drive Migration Failures

@olivierlambert literally in the midst of replying! Yes, got it.

After reading over the referenced post, I realized `sr-mount` (i.e. the Storage Repository) was where all my *.vhd files are. Duh. So, now only one question remains for me ..

Do I copy the `qcow2` to my "buffer" machine (as noted in the docs), perform the conversion to `vhd` there, and then `rsync` the resulting output to the NFS share directory identified under `sr-mount`, or to the XCP-ng host directly? There shouldn't be any difference here, as they are the same NFS mount.

Sorry, one additional follow-up: do I name the `vhd` image exactly the same as the existing 'dummy' `vhd` that was created with the VDI? Following this, do I then disconnect the drive from the VDI and re-import it? Perhaps my confusion stems from the fact that I have already created a VDI for the drive I am migrating, whereas the docs say not to do this until after the image has been converted.
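For comparison, here is a sketch of the "create an empty VDI, then replace its file" approach being asked about. It assumes dom0 on the XCP-ng host, a file-based SR (NFS or local ext) mounted at `/run/sr-mount/<SR UUID>` where each VDI is stored as `<VDI UUID>.vhd`, and that `vhd-util query -v` reports the virtual size in MiB. The function names are hypothetical, and the exact sequence should be checked against the official migration docs:

```shell
#!/usr/bin/env bash
# Sketch: make a converted VHD the backing file of a new, empty VDI.
# Assumptions: run in dom0; the SR is file-based (NFS/ext) and mounted at
# /run/sr-mount/<SR UUID>; the VHD is not attached to anything yet.

# vhd-util query -v is assumed to print the virtual size in MiB;
# xe vdi-create takes the size in bytes.
mib_to_bytes() {
  echo $(( $1 * 1024 * 1024 ))
}

# File-based SRs store each VDI as <SR mount>/<VDI UUID>.vhd.
vdi_path() {
  echo "/run/sr-mount/$1/$2.vhd"
}

# Create a VDI of matching size, overwrite its (empty) file with the converted
# VHD, then rescan the SR so XAPI picks up the real contents. Prints the VDI UUID.
adopt_vhd() {
  local vhd="$1" sr_uuid="$2" label="$3" size_mib vdi_uuid
  size_mib="$(vhd-util query -v -n "$vhd")" || return 1
  vdi_uuid="$(xe vdi-create sr-uuid="$sr_uuid" name-label="$label" \
    type=user virtual-size="$(mib_to_bytes "$size_mib")")" || return 1
  cp "$vhd" "$(vdi_path "$sr_uuid" "$vdi_uuid")" || return 1
  xe sr-scan uuid="$sr_uuid" || return 1
  echo "$vdi_uuid"
}
```

The point of the sketch is that, in a file-based SR, the file name is what ties a VHD to its VDI record, which is why the naming question matters.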

-

RE: VM qcow2 --> vhd Drive Migration Failures

Also, where does XCP-ng mount NFS volumes? Not in `/mnt` or `/media`, and I cannot seem to find anything in `/dev` either. If I am going to `rsync` the image to the host for repair, I need to be able to access the files on the NFS mount.

-

RE: VM qcow2 --> vhd Drive Migration Failures

@Danp right, this:

- Use rsync to copy the converted files (VHD) to your XCP-ng host.

- After the rsync operation, the VHD are not valid for the XAPI, so repair them
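The two documented steps can be wrapped in one per-disk helper run from the staging machine. Below is a minimal sketch, assuming root SSH access to the XCP-ng host and that `vhd-util` is available in dom0. `XCP_HOST`, the destination directory and the function names are hypothetical:

```shell
#!/usr/bin/env bash
# Sketch: push one converted VHD to the XCP-ng host, then repair and check it there.
# Hypothetical: XCP_HOST is the XCP-ng host, reachable as root over ssh;
# the destination directory is the SR directory on that host.

# Remote path the file will land at: <dest_dir>/<file name>.
remote_dest() {
  local vhd="$1" dest_dir="$2"
  echo "${dest_dir%/}/$(basename "$vhd")"
}

push_and_repair() {
  local vhd="$1" dest_dir="$2" dest qdest
  dest="$(remote_dest "$vhd" "$dest_dir")"
  rsync -av --progress "$vhd" "root@${XCP_HOST}:${dest}" || return 1
  # Quote the path for the remote shell before embedding it in the command.
  qdest="$(printf '%q' "$dest")"
  # Per the docs quoted above, the copied VHD must be repaired before XAPI
  # accepts it; vhd-util check then reports whether the result is valid.
  ssh "root@${XCP_HOST}" "vhd-util repair -n ${qdest} && vhd-util check -n ${qdest}"
}
```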

But `rsync` to where? @AtaxyaNetwork mentions `/run/sr-mount/uuid-of-vm`, but I don't see a folder for the UUID of the VM I created there. Is that because it resides on an NFS mount? Or does the *.vhd just need to exist somewhere on the XCP-ng host? Confused.

I want the *.vhd files to be on the NFS mount/share. Though, could I rsync to a local folder, then migrate once added?
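As far as I know, XCP-ng mounts file-based SRs (NFS included) under `/run/sr-mount/`, in a directory named after the SR's UUID rather than the VM's. That would explain why no folder matches the VM UUID. A small sketch for resolving the directory from the SR's name follows. The helper names are hypothetical, and it assumes the SR is plugged on the host where it runs:

```shell
#!/usr/bin/env bash
# Sketch: find the dom0 directory backing a file-based SR (e.g. an NFS SR).
# Assumption: the SR is plugged on this host and mounted at /run/sr-mount/<SR UUID>.

# Mount directory for a given SR UUID.
sr_mount_dir() {
  echo "/run/sr-mount/$1"
}

# Resolve an SR by name-label and print its mount directory.
# xe --minimal prints matching UUIDs comma-separated, so reject 0 or >1 matches.
sr_mount_dir_by_name() {
  local uuid
  uuid="$(xe sr-list name-label="$1" --minimal)" || return 1
  if [[ -z "$uuid" || "$uuid" == *,* ]]; then
    echo "expected exactly one SR named '$1', got '$uuid'" >&2
    return 1
  fi
  sr_mount_dir "$uuid"
}
```

For example, `ls -lh "$(sr_mount_dir_by_name "NAS NFS")"` would list the VHD files of an SR labelled `NAS NFS` (a placeholder label).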

-

VM qcow2 --> vhd Drive Migration Failures

Hello again ..

I have batched transferred and exported a series of *.qcow2 VHD's from Proxmox to remote NFS storage:

qemu-img convert -O qcow2 /dev/zvol/zpool1/name-of-vm-plus-id /mnt/pve/nfs-share/dump/name-of-vm-plus-id.qcow2I then converted those exported images to *.vhd using the

qemu-imgcommand:qemu-img convert -O vpc /mnt/pve/nfs-share/dump/name-of-vm-plus-id.qcow2 /mnt/pve/nfs-share/dump/'uuid-target-vdi-xcp-ng'.vhdFinally, I attempted to "IMPORT" the

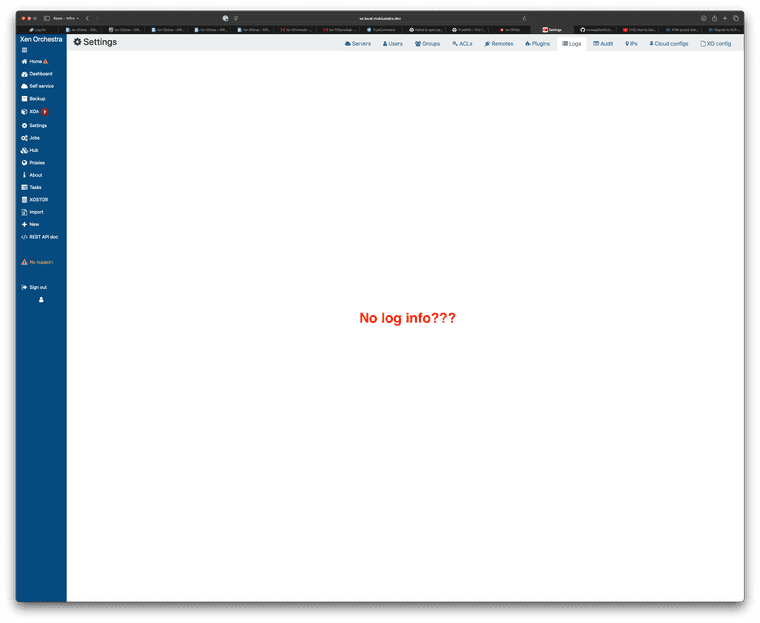

'uuid-target-vdi-xcp-ng'.vhdimages into XCP-ng and they fail, every single time. When I attempt to comb the log for errors from within XO there is nothing (see screenshot). Which is very, very weird.
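For reference, the batch conversion step can be written as a loop over the dump directory. Below is a minimal sketch, assuming every *.qcow2 in the directory should become a same-named *.vhd next to it. The function names are hypothetical, and the naming is only a placeholder if your import procedure expects the target VDI's UUID as the file name:

```shell
#!/usr/bin/env bash
# Sketch: batch-convert every *.qcow2 in a directory to VHD (qemu-img's "vpc" format).
# Assumption: each output lands next to its input as <same name>.vhd; rename it
# afterwards if the import procedure expects the target VDI's UUID as the file name.

# Output path for an input image: swap the .qcow2 suffix for .vhd.
vhd_name() {
  echo "${1%.qcow2}.vhd"
}

convert_all() {
  local dir="$1" img
  for img in "$dir"/*.qcow2; do
    [ -e "$img" ] || continue   # no match: the glob stays literal, so skip it
    # -p shows progress; -f/-O state the input and output formats explicitly.
    qemu-img convert -p -f qcow2 -O vpc "$img" "$(vhd_name "$img")" || return 1
  done
  return 0
}
```

Usage: `convert_all /mnt/pve/nfs-share/dump`. The loop stops at the first failed conversion, so a bad image does not go unnoticed in a long batch.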

I was originally referencing this post when searching for a way to convert Proxmox virtual disks for XCP-ng. Prior to finding the forum post, I did peruse and review the official docs here.

Hopefully there's a simple way for me to view the logs and understand why XCP-ng is failing to import the converted *.vhd files.

Thanks in advance for your help!

-

RE: VM Boot Order via XO?

@sid thanks for this! Not what I was looking for, as you stated, but useful nonetheless.

-

RE: VM Boot Order via XO?

@Greg_E exactly! I'm thinking of my junior/int sys-admins who are very comfortable with the VMware process (vCenter). It's dead simple, yet even vCenter doesn't have a facility that can scan for app/service feedback as a health check. So, this would be a first.

-

RE: VM Boot Order via XO?

@sid this is how my K8S/K3S and Stack yml configs work: they leverage interdependencies and health checks across clusters (ping via IP and listen for a reply, etc.). An API or potentially webhooks could work. Though, what I’m asking/looking for is a way that XO could facilitate, even if the “script” were added via the XO UI and I could expose apps/services/VMs dynamically via said UI. Proxmox has something similar to this; it’s buried within the VM config options in the UI.

-

RE: VM Boot Order via XO?

@ThasianXi this is a solution, just not the one I was hoping for. I was explicitly hoping for an XO GUI-led solution, something similar to the way VMware lets one drag VMs into a boot preference order. @olivierlambert if this is not a feature request already, how do I make it one?

-

VM Boot Order via XO?

Hello ~

I found this article within the forums. Is it only possible to prioritize the boot order of VMs via the CLI? Or is there a facility within XO to do so? Thanks in advance for your help.

USE CASE

- Multiple DB types and clusters of DB's

- K3S cluster

- K8S cluster

- Swarm cluster

- DBs are required to be up prior to the app clusters (as listed), and in some cases there are interdependencies between various apps across K3S, K8S, and Swarm

All I am looking to do at the moment is ensure the DB VMs are up and operational before initializing my clusters. I can create `yml` configs to ensure the interdependencies are met within my app infra at a later date. With ESXi this was super easy, and even with Proxmox to a degree. I'm still very much new to XCP-ng and need some assistance finding this within the XO GUI.
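From the CLI side, "DB VMs up and operational first" can be approximated by starting the DB VMs, waiting until the database port actually accepts TCP connections, and only then starting the cluster VMs. Below is a minimal sketch, assuming bash in dom0 and a DB port reachable from the host. `DB_VMS`, `APP_VMS`, `DB_HOST`, `DB_PORT`, `WAIT_INTERVAL` and the function names are hypothetical:

```shell
#!/usr/bin/env bash
# Sketch: start DB VMs, wait for the DB port to answer, then start the app clusters.
# Hypothetical inputs: DB_VMS / APP_VMS arrays of VM UUIDs, DB_HOST, DB_PORT.

# Succeeds as soon as host:port accepts a TCP connection; gives up after N tries.
wait_for_port() {
  local host="$1" port="$2" tries="${3:-60}" i
  for ((i = 0; i < tries; i++)); do
    # bash's /dev/tcp pseudo-path opens a TCP connection; the subshell closes it.
    if (exec 3<>"/dev/tcp/${host}/${port}") 2>/dev/null; then
      return 0
    fi
    sleep "${WAIT_INTERVAL:-5}"
  done
  return 1
}

start_in_order() {
  local uuid
  for uuid in "${DB_VMS[@]}"; do xe vm-start uuid="$uuid" || return 1; done
  wait_for_port "$DB_HOST" "$DB_PORT" || { echo "DB never came up" >&2; return 1; }
  for uuid in "${APP_VMS[@]}"; do xe vm-start uuid="$uuid" || return 1; done
}
```

An open port is only a rough proxy for "operational". A real setup might replace the probe with the database's own client check, and might wrap it in `timeout` so that a host silently dropping packets cannot stall each attempt.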

-

RE: Pre-Setup for Migration of 75+ VM's from Proxmox VE to XCP-ng

@DustinB it's there on mine as well. Going to test it in a couple of weeks. Lots on the docket! Thanks again, everyone.

-

RE: Pre-Setup for Migration of 75+ VM's from Proxmox VE to XCP-ng

@john.c said in Pre-Setup for Migration of 75+ VM's from Proxmox VE to XCP-ng:

@DustinB said in Pre-Setup for Migration of 75+ VM's from Proxmox VE to XCP-ng:

@cichy said in Pre-Setup for Migration of 75+ VM's from Proxmox VE to XCP-ng:

@john.c correct. It's the auto updates and automations that come with the appliance that I am after. I believe the trial is only one month? Or possibly only 15 days? The support element is mostly irrelevant to me/us. I do a fair bit of automation via Ansible/Terraform, so developing our own unique library of Templates is ideal. Again, new to all this. So, it may just be that I've not come across this within my XO from "sources" build

Take a look at my profile, or look up Jarli01 on GitHub, if you want a simple yet effective approach to installing and maintaining XOCE.

@DustinB They mentioned needing the updates and related automations. Also, given the size of the organisation they are working for, they’ll likely need the QA of XOA in production.

If you check out the Hok website (https://www.hok.com/) and scroll down to the bottom, they list all of their offices around the world. You can also get statistics about the number of employees.

@cichy Am I correct about the above please?

This is correct. @DustinB ~ I am using this XO sources script to install/update/maintain XO at the moment. However, I am on a Mac (ARM), which means I am unable to install XO locally to manage pools/hosts.

I'm going to push the CE/Sources version as far as I can. It has been noted (by @john.c) that two incredibly useful 'plugins', Netdata and NetBox, are not available in the XO Sources edition.

Once again, I appreciate the feedback and comments - all very helpful.

-

RE: Pre-Setup for Migration of 75+ VM's from Proxmox VE to XCP-ng

@john.c thanks again for all this info!

I plan to meet up with the team this week to assess our objectives and KPIs; in the meantime, all of the above has helped tremendously. I'm currently messing around with establishing K8S + Swarm clusters and testing the automation capabilities, and XCP-ng is proving to be quite flexible. I'm also learning the nuances of dynamic resource allocation (CPU/RAM, etc.); there are some subtle differences from vSphere/Proxmox.

Again, thanks very much for your help. I've made note of all your comments above. Especially references to Terraform/Vault alternatives! These are gold.

-

RE: Pre-Setup for Migration of 75+ VM's from Proxmox VE to XCP-ng

@john.c said in Pre-Setup for Migration of 75+ VM's from Proxmox VE to XCP-ng:

@cichy said in Pre-Setup for Migration of 75+ VM's from Proxmox VE to XCP-ng:

@john.c correct. It's the auto updates and automations that come with the appliance that I am after. I believe the trial is only one month? Or possibly only 15 days? The support element is mostly irrelevant to me/us. I do a fair bit of automation via Ansible/Terraform, so developing our own unique library of Templates is ideal. Again, new to all this. So, it may just be that I've not come across this within my XO from "sources" build.

To your point: by XO Community, I was referring to XO Sources.

We may be unique in our internal policy of testing for a minimum of 9-12 months prior to subscribing. Proxmox licensing worked very well for us because, even when our three-node HA edge cluster was in production, we were still able to license at the lowest tier, which made the whole stack so much more financially viable. I think we have 2 of 3 nodes on basic licensing, and 1 may even be on Community! We are a very technically savvy bunch that has managed to get by on this thus far.

Thanks for the comments/feedback. Much appreciated!

@cichy Do note, though, that you can make your case to Vates staff during the trial if you find you don’t have enough time to test. Just don’t string them along by gaming the trial offer until it becomes effectively infinite, in a similar fashion to another organisation which won’t be named.

Also, Netdata is a valuable plugin available in the appliance version of Xen Orchestra (XOA). It’s a useful part of any monitoring solution.

The updating functionality in Xen Orchestra has recently gained the capability to be scheduled to run regularly. Finally, in the last couple of months, Vates has completely redone the backup feature in Xen Orchestra. If your workplace operates in a regulated industry or your policies call for air-gapped infrastructure, then Vates has most definitely got you covered with the Pro and Enterprise plans!!

The XO Hub feature is only available in the appliance version, as it is tied to the Vates IT infrastructure and likely to the user account as well. Another feature present in the appliance version is the ability to synchronise your Xen Orchestra settings with your Vates account!

You and/or your team may be very technically minded, but as you state, you’re new to the Vates VMS stack. Access to their paid support through the subscription support plan will pay for itself. Additionally, you’re supporting (funding) future work on the software Vates releases. As the developers, they can help you track down issues and root out what causes problems. Plus, as members of the Xen Project and, through that, the Linux Foundation, they have influence on future development, which will likely prove valuable.

If you commit to a multi-year term, you’ll get the following, depending on the term chosen:

- 1 Year - No Savings

- 3 Years - Up to 10% Savings in 3 years

- 5 Years - Up to 15% Savings in 5 years

Check the comparison between the plans; it will show what you’re getting for what you pay. Also note that as new features are added at particular tiers of the paid plans, they will become available to you when ready, based on the update channel and plan chosen.

With Proxmox you’re paying per CPU socket, potentially per host as well, and this is per year. With Vates VMS, however, the most basic plans are priced per year and the higher ones per host per year. There are no per-CPU-socket or per-core costs to deal with at Vates, so costs are more predictable, making it much cheaper for you in the long run!!

So if your infrastructure has no more than 3 hosts, then, depending on requirements, either Essentials or Essentials+. More than 3 hosts, however, requires either the Pro or Enterprise plan. Note that the Enterprise plan requires a minimum of 4 hosts!

This is all incredibly helpful. Thank you!

I will keep testing and then reach out to Vates once I have assessed the qty of servers I’ll be migrating to the new infra.

One question: were you referring to “Netbox” integration? I’m currently using it to keep track of everything from racks (multiple) to power to server builds (GPU, drives, RAM, CPU, etc.). If that can be fully automated, it may be worth the cost alone!

-

RE: Pre-Setup for Migration of 75+ VM's from Proxmox VE to XCP-ng

@john.c correct. It's the auto updates and automations that come with the appliance that I am after. I believe the trial is only one month? Or possibly only 15 days? The support element is mostly irrelevant to me/us. I do a fair bit of automation via Ansible/Terraform, so developing our own unique library of Templates is ideal. Again, new to all this. So, it may just be that I've not come across this within my XO from "sources" build.

To your point: by XO Community, I was referring to XO Sources.

We may be unique in our internal policy of testing for a minimum of 9-12 months prior to subscribing. Proxmox licensing worked very well for us because, even when our three-node HA edge cluster was in production, we were still able to license at the lowest tier, which made the whole stack so much more financially viable. I think we have 2 of 3 nodes on basic licensing, and 1 may even be on Community! We are a very technically savvy bunch that has managed to get by on this thus far.

Thanks for the comments/feedback. Much appreciated!

-

RE: Pre-Setup for Migration of 75+ VM's from Proxmox VE to XCP-ng

@nikade thanks for your question!

Just out of curiosity, why are you migrating from proxmox to xcp-ng? Are you ex. vmware?

We used both vmware and xcp-ng for a long time and xcp-ng was the obvious alternative for us for workloads that we didn't want in our vmware environment, mostly because of using shared storage and the general similarities.

So, in short, yes. Ex-VMware. Though, we are still running VMware on core infrastructure; there's no way to escape this. I am investigating XCP-ng because I'm primarily looking for cost-effective 'edge' and/or 'AI' hypervisor infrastructure solutions. Initially, I used Harvester (by SUSE) for its flexible composability and KubeVirt integration; we were orchestrating Windows clients for a scalable (400+ simultaneous users) viz app. Unfortunately, Harvester's UI AND CLI lack a lot of the basic, common functionality required for our use case. So, I leaned on Proxmox. After about a year, I've started to realize that although LXC containers are a major convenience, they run directly on the host, sharing its kernel, which is absolutely nuts. In addition, ZFS was eating 50% of the system's RAM, etc. Great for a "homelab", not necessarily for production.

This brings us to how I wound up with XCP-ng. There are certainly functional eccentricities: the XO UI leaves A LOT to be desired. However, outside of this, and as I become more comfortable with the way it operates, it is the closest thing to ESXi/vSphere I've used thus far. This, in conjunction with my honed K8S && Swarm skills, has me thinking I may have just found THE solution I've been looking for!

I do have a minor gripe, @olivierlambert: currently I am testing this for scaled deployment across the org, BUT there are no pricing options in the sub-$1k range that allow me to test enterprise/production features long-term prior to deploying. We never launch solutions without testing for at least 9-12 months. So, spending $4k+ just to POC an edge cluster is nearly impossible to justify as an expense. I am currently using XO Community but have already run into the paywall with certain features I want to test 'long-term' prior to deployment.

Thanks for your assistance! It looks like I'll be pretty active here until I iron everything out, gradually start diving in a little deeper, and migrate VMs off Proxmox and onto XCP-ng.

-

RE: Pre-Setup for Migration of 75+ VM's from Proxmox VE to XCP-ng

OMG!

I can't believe I had not tried this. I guess I was avoiding using a template, period. Now I understand, it's just a base point. Thank you so much!