@DustyArmstrong that's super strange. I actually have the same setup at home: 2 HP Z240 machines running XCP-ng in a small pool.

xcp1 is always up and running, and xcp2 is powered down when I don't need it. Everything important runs on xcp1, so maybe that's the reason I don't run into these issues.

Posts

-

RE: Network Management lost, No Nic display Consol

-

RE: Network Management lost, No Nic display Consol

@DustyArmstrong said in Network Management lost, No Nic display Consol:

@nikade sorry to drag this up but, is there a particular process or methodology to avoid this in the first place? Just had it happen on two brand new hosts, I had to re-install XCP from scratch. Bit worried if I reboot one of them now for any reason this will happen again. It happened on both the pool master and the slave, network completely wiped out on both.

Genuinely one of the most bizarre series of events I've ever experienced with server infrastructure, I could not understand what was going on until I found this thread.

What exactly happened? Could you try to explain it in 1-2-3 steps?

-

RE: Recommended DELL Hardware ?

I second all of @pilow's points. We have been running a lot of Dell R630s with Intel CPUs, Intel NICs and PERC H730i RAID cards, and it has been flawless. Now we're using Dell R660s, but with newer specs.

Tried Broadcom NICs once. They worked, but had some weird performance issues from time to time.

Go with shared storage, NFS if possible (thin provisioning), and avoid XOSAN/XOSTOR; I don't think it's battle-tested quite yet.

-

RE: An error occurred while fetching the patches

@olivierlambert great news, I had a feeling this was in the works! Thanks for the info.

-

RE: An error occurred while fetching the patches

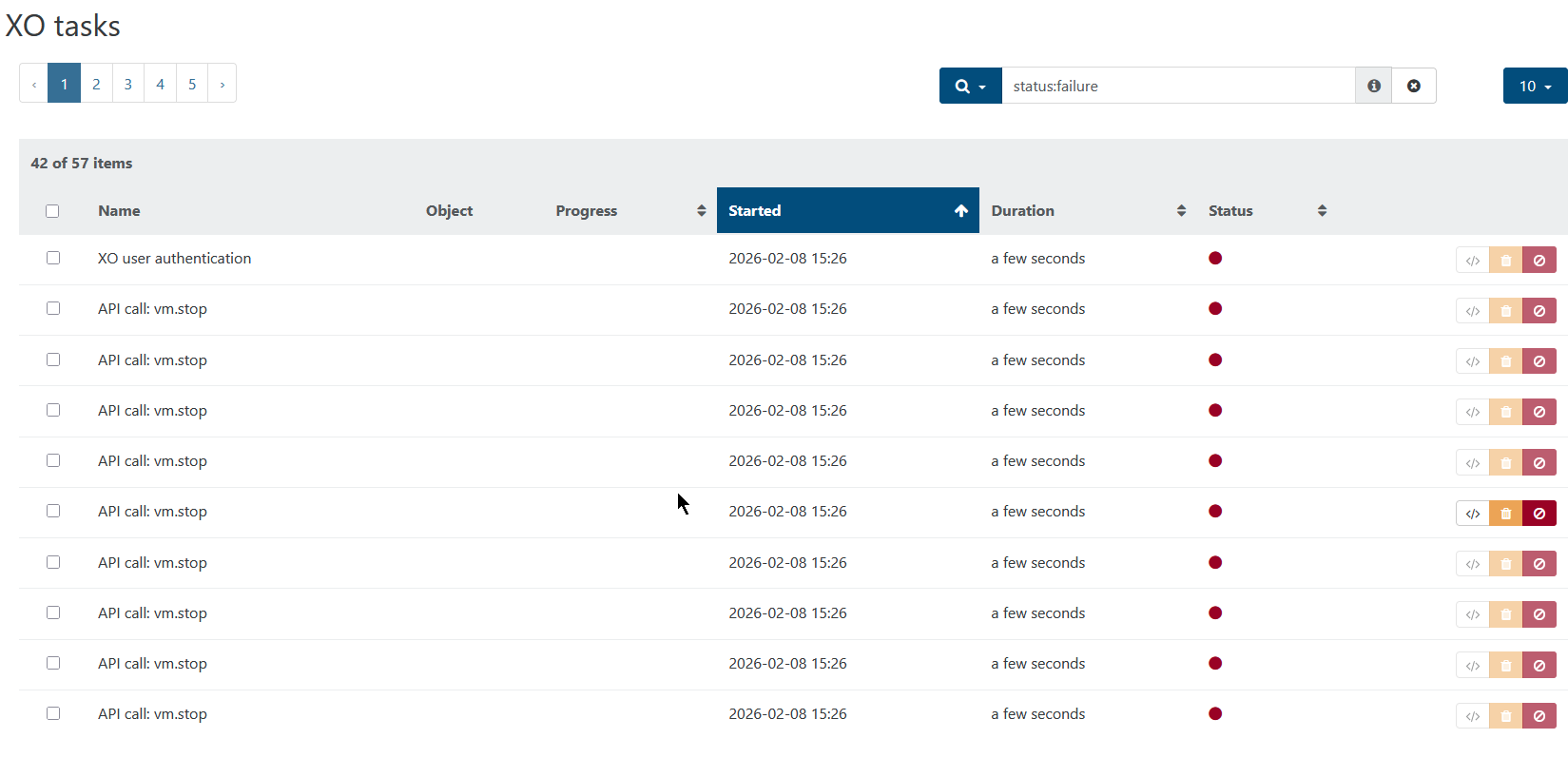

@Pilow Well yeah, it was indeed shown under TASK but man, there's a lot of tasks:

Some kind of status would be nice on the "update" tab of the vm, as well on the pool "update" tab.

-

An error occurred while fetching the patches

So I'm playing around with a test pool I've recently set up to try and get my skillset up to date with XCP-ng and XO, due to the latest Broadcom VMware changes to their partner program.

I haven't been using xcp-ng a lot lately, pretty much only in my homelab as well as a colo machine that has basically been running on its own for quite some time.

I went to do what I always do when setting up a new XCP-ng machine: I went to the update tab and noticed there were 70+ updates in line. Nothing strange with that, this is a brand new installation, so I pressed the update button and after a while I saw this:

An error occurred while fetching the patches. Please make sure the updater plugin is installed by running `yum install xcp-ng-updater` on the host.

I didn't bother too much, maybe something just went wrong? I then went on to install the 2nd machine (I'm setting up a POC of 8 XCP-ng hosts in total) and did the same thing, only to see the exact same error.

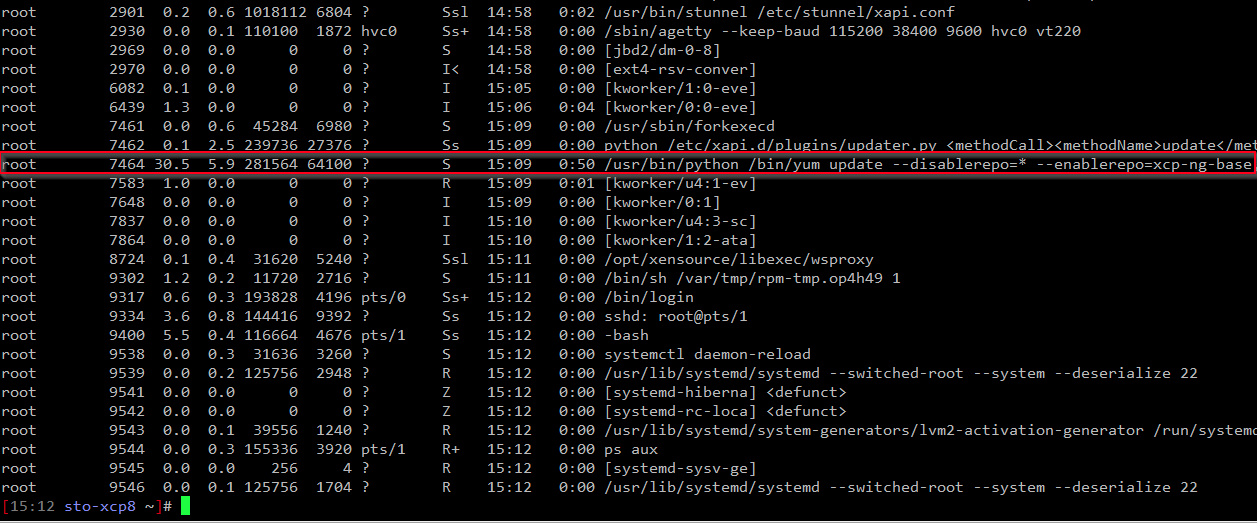

This time I went to the stats tab, only to notice that there is indeed some load on the machine. This led me to SSH into the host, where I noticed that it is actually running yum to update:

Is this by design? Why didn't it just tell me something like "Update in progress % done"? This makes it very hard to know what's actually going on, unless you SSH to the box and check ps.
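For anyone who wants to script the "SSH to the box and check ps" part, here is a minimal sketch in Python. It only assumes the standard `ps -eo pid,args` output format; the helper name `find_yum_processes` is made up for this example and is not part of XCP-ng or XO.

```python
import subprocess


def find_yum_processes(ps_output):
    """Return (pid, command line) for every process that is running yum.

    Expects text in the format printed by `ps -eo pid,args`.
    """
    matches = []
    for line in ps_output.splitlines():
        parts = line.strip().split(None, 1)
        # Skip the header line and anything that does not start with a PID.
        if len(parts) != 2 or not parts[0].isdigit():
            continue
        pid, args = int(parts[0]), parts[1]
        # Match "yum" as an executable or script name, with or without a path.
        if any(token.rsplit("/", 1)[-1] == "yum" for token in args.split()):
            matches.append((pid, args))
    return matches


if __name__ == "__main__":
    # Run this on the host itself (for example over SSH).
    result = subprocess.run(
        ["ps", "-eo", "pid,args"],
        stdout=subprocess.PIPE,
        universal_newlines=True,
        check=True,
    )
    running = find_yum_processes(result.stdout)
    if running:
        for pid, args in running:
            print("yum is still running: pid {} ({})".format(pid, args))
    else:
        print("no yum process found")
```

It does not tell you how far along the update is, only whether yum is still busy, which is the same information I got from ps by hand.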

-

RE: VM metadata import fail & stuck

@henri9813 Ahh alright, I understand now! Thanks for clarifying.

-

RE: VM metadata import fail & stuck

@henri9813 Maybe I'm not understanding the problem here? But a warm migration is an online migration, in other words a live migration without shutting the VM down, and that is exactly how it should work.

-

RE: VM metadata import fail & stuck

@henri9813 Did you reboot the master after it was updated? If yes, I think you should be able to migrate the VMs back to the master, and then continue patching the rest of the hosts.

-

RE: Create new virtual machine?

@DRWhite85 then choose "other" as the template to create a "generic" VM, then you don't have to worry about the template stuff.

-

RE: Large VM Migration fails, host has free disk - Storage_error

Are you using EXT or LVM?

I've seen this as well with large VMs and not enough free space on the source host. The only solution is to either free up some space or do an offline copy. If you can't free up space, migration won't work, so copy is your only option.

-

RE: V2V - Stops at 99%

@florent I'll send you a link in DM, hope it helps with something.

If not, it wasn't too much of an effort, thanks for your time so far

-

RE: V2V - Stops at 99%

Thanks @florent - I can see some of the export logs, but not for this particular Windows VM, unfortunately. Probably because it's too far back in time:

Dec 27 21:33:19 xoa xo-server[845]: 2025-12-27T21:33:19.667Z xo:vmware-explorer:esxi INFO nbdkit logs of [datastore2] DEBIAN 11 observium.iextreme.org/DEBIAN 11 observium.iextreme.org.vmdk are in /tmp/xo-serverG5cHF7

Dec 27 21:36:22 xoa xo-server[845]: 2025-12-27T21:36:22.559Z xo:vmware-explorer:esxi INFO nbdkit logs of [datastore2] Windows Server 2022 veeam2.iextreme.org/Windows Server 2022 veeam2.iextreme.org-000005.vmdk are in /tmp/xo-serverqv0XVK

Dec 28 00:32:35 xoa xo-server[845]: 2025-12-28T00:32:35.287Z xo:vmware-explorer:esxi INFO nbdkit logs of [datastore2] DEBIAN 11 observium.iextreme.org/DEBIAN 11 observium.iextreme.org.vmdk are in /tmp/xo-serverfqb8lP

Dec 28 15:28:09 xoa xo-server[845]: 2025-12-28T15:28:09.050Z xo:vmware-explorer:esxi INFO nbdkit logs of [datastore2] DEBIAN 11 observium.iextreme.org/DEBIAN 11 observium.iextreme.org.vmdk are in /tmp/xo-serverxcV6N7

Dec 29 20:13:27 xoa xo-server[845]: 2025-12-29T20:13:27.226Z xo:vmware-explorer:esxi INFO nbdkit logs of [datastore2] DEBIAN 11 observium.iextreme.org/DEBIAN 11 observium.iextreme.org.vmdk are in /tmp/xo-servermnF0TD

The observium VM worked at last, but I had to give it 4 tries before it actually worked.

Edit: Right now all my important VMs have been imported, so I don't think we have to spend any more time on this, unless you want the logs for the observium import.
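If someone wants to dig the nbdkit log directories out of a longer log, a rough sketch like the one below can help. The regex is only based on the "nbdkit logs of ... are in ..." lines I pasted in this post, so treat the line format as an assumption; the function names are made up for the example.

```python
import re
from collections import Counter

# Pattern derived from the log lines quoted in this post, not from any XO spec.
NBDKIT_LINE = re.compile(
    r"nbdkit logs of \[(?P<datastore>[^\]]+)\] "
    r"(?P<vmdk>.+?\.vmdk) are in (?P<logdir>/tmp/\S+)"
)


def parse_nbdkit_lines(lines):
    """Return one dict (datastore, vmdk, logdir) per matching log line."""
    entries = []
    for line in lines:
        match = NBDKIT_LINE.search(line)
        if match:
            entries.append(match.groupdict())
    return entries


def attempts_per_vmdk(entries):
    """Count how many import attempts were logged for each VMDK."""
    return Counter(entry["vmdk"] for entry in entries)


if __name__ == "__main__":
    import sys

    # Pipe the xo-server log lines into the script on stdin.
    found = parse_nbdkit_lines(sys.stdin)
    for vmdk, count in attempts_per_vmdk(found).items():
        print("{} attempt(s): {}".format(count, vmdk))
    for entry in found:
        print("{logdir}  <-  [{datastore}] {vmdk}".format(**entry))
```

Counting the attempts per VMDK this way is how you would spot a VM that needed several tries, like the observium one.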

-

RE: V2V - Stops at 99%

@Danp Yeah? How?

Let me know if you want me to grab some logs for you guys, if it's still interesting.

-

RE: V2V - Stops at 99%

@florent Oh shoot! So only during the actual vmware import? I guess they're gone now then

Sorry I couldn't be of more help!

-

RE: V2V - Stops at 99%

@florent Sorry to disappoint you

Can you remind me where the vmware-import logs are stored on the XO machine? I can see if I still have them for you, it could be an alignment issue.

-

RE: V2V - Stops at 99%

@florent it was 2 Windows VMs, both with 100 GB but not even 50% filled.

Edit: All Linux VMs worked tho.

-

RE: V2V - Stops at 99%

Got most of them working, except 2 of the bigger ones.

-

RE: SR.Scan performance withing XOSTOR

@irtaza9 it scans all the VDIs on the SR to see if something has changed, if there's a need for coalesce and so on.

I don't think it will be a big issue if you increase the auto-scan-interval value to, let's say, 5 minutes (300 seconds). But do remember that everything regarding the VDIs on the SR will then take up to 5 minutes to update, and the same goes for triggering coalesce after removing snapshots.

-

RE: Network Management lost, No Nic display Consol

@acebmxer It's not pretty, but it's failsafe. The procedure looked like this in our case:

- Disable HA in the "old pool"

- Put a host in the "old pool" into maintenance mode

- Reinstall that host and connect it to XOA and then patch it

- Create a "new pool" from that host

- Create a new LUN or NFS share in the SAN for "new pool" and attach it to "new pool"

- Live migrate VMs over from "old pool" to "new pool"

- Once you've freed up another host, you repeat steps 2 and 3 and then join that host to "new pool". It is important that you patch it before joining it to the pool; that is done by going to Settings -> Servers in XOA and connecting to it manually.

And then just continue until you're done. Live migration is pretty reliable nowadays, so this works pretty well, and since we had a 10G network it's not taking as long as it used to with a 1G network.

We did this after a major incident on our primary production site where 2 out of 4 hosts in a pool "suddenly" lost their NICs after updating them. Since then we never updated the pools again. Standalone hosts are fine tho, they never did this. Luckily we had 2 other pools where we could migrate the VMs to, but we couldn't really trust the updating after that.
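To make the ordering of the steps above a bit more explicit, here is a small Python sketch that just prints the plan for a given list of hosts. It does not talk to XCP-ng or XOA at all; the function name and the host names are made up for the example, and the step texts are simply the steps from my list.

```python
def rolling_rebuild_plan(old_pool_hosts):
    """Return the ordered steps for moving hosts one by one into a new pool."""
    if not old_pool_hosts:
        return []
    steps = ["Disable HA in the old pool"]
    last_index = len(old_pool_hosts) - 1
    for index, host in enumerate(old_pool_hosts):
        steps.append("Put {} into maintenance mode".format(host))
        steps.append("Reinstall {}, connect it to XOA and patch it".format(host))
        if index == 0:
            # The first rebuilt host becomes the start of the new pool.
            steps.append("Create the new pool from {}".format(host))
            steps.append("Create a new LUN or NFS share and attach it to the new pool")
        else:
            # Every later host is patched first and only then joined.
            steps.append("Join {} to the new pool".format(host))
        if index < last_index:
            next_host = old_pool_hosts[index + 1]
            steps.append(
                "Live migrate VMs to the new pool until {} is free".format(next_host)
            )
    return steps


if __name__ == "__main__":
    for number, step in enumerate(rolling_rebuild_plan(["host1", "host2", "host3"]), 1):
        print("{}. {}".format(number, step))
```

The point of the sketch is only the order: patch before join, and migrate VMs off a host before you take it out of the old pool.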