@gduperrey The new OpenSSL/OpenSSH update blocks existing, previously working RSA keys from older SSH clients. You can still log in over SSH with a password, but old keys stop working, which will break things (not good for existing LTS installs). To maintain compatibility, add `PubkeyAcceptedAlgorithms +ssh-rsa` to `/etc/ssh/sshd_config`.
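
A minimal sketch of the change, assuming a stock OpenSSH server in dom0. Here `ssh-rsa` means RSA signatures using SHA-1, which is what older clients offer. OpenSSH releases before 8.5 name this option `PubkeyAcceptedKeyTypes`.

```text
# /etc/ssh/sshd_config
# Re-allow RSA/SHA-1 ("ssh-rsa") signatures so keys from older clients keep working
PubkeyAcceptedAlgorithms +ssh-rsa
```

After editing, `sshd -t` checks the file for syntax errors, `sshd -T | grep -i pubkeyacceptedalgorithms` shows the effective value, and `systemctl restart sshd` applies it. This re-enables SHA-1 signatures, which OpenSSH turned off by default for security reasons, so it is a compatibility trade-off.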

Posts

-

RE: XCP-ng 8.3 updates announcements and testing

-

RE: Delta Backup not deleting old snapshots

@Pa3docypris I also had this problem with a single Debian VM. I would delete the snapshots, and they would just keep accumulating with every backup. I have many identical VMs and lots of other VMs on the pool; three VMs were basically the same, but only one had the issue.

I tried deleting all snapshots on the VM, changing the CBT state, and doing a full backup of the VM. Same problem: the snapshots just built up.

I found a CD in the VM's drive that would not eject (XCP returned an error), so something was wrong with the VM state. I shut down the VM, cleared the CD, and restarted the VM. After that, backups worked normally for that VM. So I won't blame XCP or XO; the VM just seems to have been in a strange state that caused problems.
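
To spot other VMs that still have an ISO inserted, you can list non-empty CD drives with `xe` from any host in the pool. A minimal sketch; the helper name is hypothetical, and the two output fields are just the ones assumed to be useful here.

```shell
#!/bin/sh
# list_vms_with_cd (hypothetical helper): print every CD-type VBD that still
# has a disc (VDI) inserted, with the owning VM's name and the ISO's VDI UUID.
list_vms_with_cd() {
    xe vbd-list type=CD empty=false params=vm-name-label,vdi-uuid
}
```

Run `list_vms_with_cd` in dom0. For a VM it lists, `xe vm-cd-eject vm=<vm-name>` ejects the disc; if that fails, as it did here, shutting the VM down first may be needed.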

-

RE: Install XO from sources.

@dcskinner @acebmxer I totally agree. XOA is the XO appliance provided by Vates.

If you compile XO yourself, it's XO from sources, XO community edition, or anything other than XOA. Calling it XOA adds confusion because it's not the same thing Vates provides.

-

RE: ASUS NUC NUC14MNK-B LAN problems

@MajorP93 @olivierlambert I think 8.3 has reached LTS status, so I don't expect the drivers to get major upgrades (it's up to Vates). It seems reasonable to add the alternate 8125 driver and the new 8126/8127 drivers as installable options.

Realtek keeps releasing new chips and new versions of existing chips (like the 8125 in @paha's machine) that don't work with the existing older drivers. It's an ongoing issue even with mainline Linux kernels.
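
Because these variants share similar names, the PCI IDs are the reliable way to tell which Realtek chip a machine has before picking a driver. A minimal sketch; the helper name is hypothetical.

```shell
#!/bin/sh
# realtek_nics (hypothetical helper): filter `lspci -nn` output down to
# Realtek Ethernet devices. Realtek's PCI vendor ID is 10ec; the device ID
# after the colon (e.g. 8125) and the "(rev xx)" suffix tell variants apart.
realtek_nics() {
    lspci -nn | grep -i 'ethernet' | grep -i '\[10ec:'
}
```

Once you know the chip, `ethtool -i <interface>` shows which driver is currently bound to that NIC.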

-

RE: Old DELL 2950 with E5430@2.66GHz

@whyberg Nope, that feature is part of the CPU. Is that the actual problem? Maybe. That machine is too old to do any real work, but it's a fine toy. There are better, faster, cheap test machines available.

-

RE: Replication is leaving VDIs attached to Control Domain, again

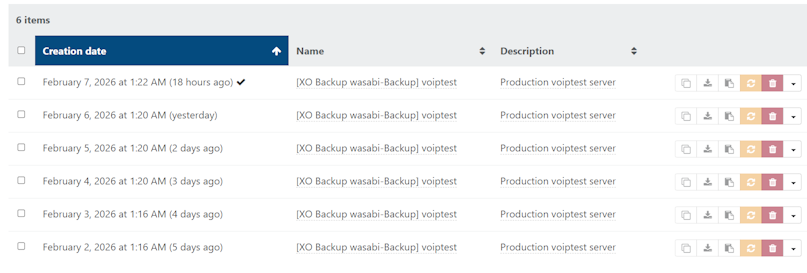

@florent Delta backup is also leaving old snapshots on some VMs. Each VM should only have one (current) snapshot for the nightly backup. This affects 1 of 100 VMs.

XCP (Jan 2026 update) and XO (91c5d) are current.

-

RE: Old DELL 2950 with E5430@2.66GHz

@whyberg The E5430 does not support EPT/SLAT. Without EPT, the system falls back to shadow paging, so virtual machines will be slower. Also, you can't run XCP 8.3 on it.
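
To check a CPU for EPT yourself, look for the `ept` flag in `/proc/cpuinfo`. A minimal sketch; the helper name is hypothetical, and it assumes you boot a bare-metal Linux live system, since a Xen dom0 may not expose the virtualization flags.

```shell
#!/bin/sh
# has_ept (hypothetical helper): succeed if the CPU flags list "ept" as a
# whole word (so "ept_ad" or "ept_x_only" alone do not count).
# Takes an optional file argument; defaults to /proc/cpuinfo.
has_ept() {
    grep -qw 'ept' "${1:-/proc/cpuinfo}"
}
```

Usage: `has_ept && echo 'EPT supported' || echo 'no EPT: shadow paging only'`.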

-

RE: Windows 11 (Win11_25H2_English_x64.iso) Fails to Install

@busthead Try turning off Secure Boot.
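
Secure Boot is a per-VM platform setting, so besides XO it can also be switched off from dom0 with `xe`. A sketch; `<vm-uuid>` is a placeholder, and the change takes effect the next time the VM boots.

```shell
# Disable UEFI Secure Boot for the VM (applies on its next boot)
xe vm-param-set uuid=<vm-uuid> platform:secureboot=false
```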

-

RE: Replication is leaving VDIs attached to Control Domain, again

@florent With CR running and NBD enabled for 2, I see both exports and one import per disk. When it happens, it's never the import that's stuck, and only one of the two exports (not both) is stuck.

I have updated XCP 8.3 to the new January 2026 patch and XO to current master and will keep an eye on it again.

-

RE: XCP-ng 8.3 updates announcements and testing

@gduperrey Standard XCP 8.3 pools updated and running.

-

RE: Replication is leaving VDIs attached to Control Domain, again

@florent Different random ones.

-

Replication is leaving VDIs attached to Control Domain, again

Using updated XCP 8.3 and XO (current master, 21767), Continuous Replication sometimes (about 1% of the time) leaves VDIs attached to the Control Domain... again. The backups and tasks do finish correctly.

I don't know exactly when it started happening (maybe around 8448b4d, the 6.0 release, but not exactly that version), and it doesn't happen all the time: sometimes once a day, other days several times.

This problem happened a long time ago, then it was fixed in XO (about a year ago) and everything was good. This may be a timing issue again, where XO just doesn't finish detaching the VDI used for the backup (does it need longer waits and retries?).

I know it's not a lot of info. This is more of a "be on notice" that something is happening, maybe others have the same issue again.
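
For anyone checking whether they see the same thing, the VBDs plugged into each host's control domain can be listed with `xe`. A minimal sketch; the helper name is hypothetical. It assumes that, once backup and replication jobs have finished, entries here are likely the leftovers XO reports as "attached to Control Domain".

```shell
#!/bin/sh
# dom0_vbds (hypothetical helper): for every control domain in the pool
# (one per host), print its VBDs with their VDI and attach state.
dom0_vbds() {
    # --minimal prints a comma-separated UUID list; split it into words
    for dom0 in $(xe vm-list is-control-domain=true params=uuid --minimal | tr ',' ' '); do
        echo "control domain $dom0:"
        xe vbd-list vm-uuid="$dom0" params=uuid,vdi-uuid,currently-attached
    done
}
```

If a leftover shows up after all jobs are done, `xe vbd-unplug uuid=<vbd-uuid>` followed by `xe vbd-destroy uuid=<vbd-uuid>` detaches it; make sure no backup or replication task is still using it first.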

-

RE: Does dom0 require a GPU?

@tjkreidl You can move the Dom0 console to a serial port using `console=ttyS1` (in grub.cfg) and then hide the VGA/GPU from Dom0 using `video=efifb:off xen-pciback.hide=(0000:01:00.1)`.
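
On XCP-ng, these dom0 boot options can also be set with the `xen-cmdline` helper that the XCP-ng PCI passthrough documentation uses, instead of editing grub.cfg by hand. A sketch, assuming the device is at 0000:01:00.1 as above; confirm your own address with `lspci -D` first, then reboot.

```shell
# Hide the GPU function from dom0 so xen-pciback claims it at boot
/opt/xensource/libexec/xen-cmdline --set-dom0 "xen-pciback.hide=(0000:01:00.1)"
# Keep the EFI framebuffer from grabbing the display
/opt/xensource/libexec/xen-cmdline --set-dom0 "video=efifb:off"
```

Several devices can be hidden at once by chaining them, e.g. `xen-pciback.hide=(0000:01:00.0)(0000:01:00.1)`.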

-

RE: Does dom0 require a GPU?

@Johny So, you just want a low-power VGA card. I did not find many new ones. I do see the ASPEED AST2400 chipset, which many use for a PCIe x1 VGA card (non-GPU). It's reported to use about 2-3 watts, costs about $30 USD, and ships from China. The reported issues with it are firmware-related: newer systems booting with UEFI may not see the card and may need a firmware flash on the video card, which is a known problem.

Option 2 is to just not have a VGA display for dom0.

-

RE: Red Hat Linux 10.1 ISO Won't Boot in UEFI Mode

@kagbasi-ngc HP DL360 G9 and newer will work...

-

RE: VDI not showing in XO 5 from Source.

@Danp I have also seen this problem with XO, but I currently don't have an example to test. It happened on both local SRs and shared NFS SRs.

-

RE: XCP-ng 8.3 and Dell R660 - crash during boot, halts remainder of installer process (bnxt_en?)

@dcskinner The correct link is: xcp-ng-8.3.0-20250606.2.iso

@olivierlambert The December 2025 blog post has the wrong link...