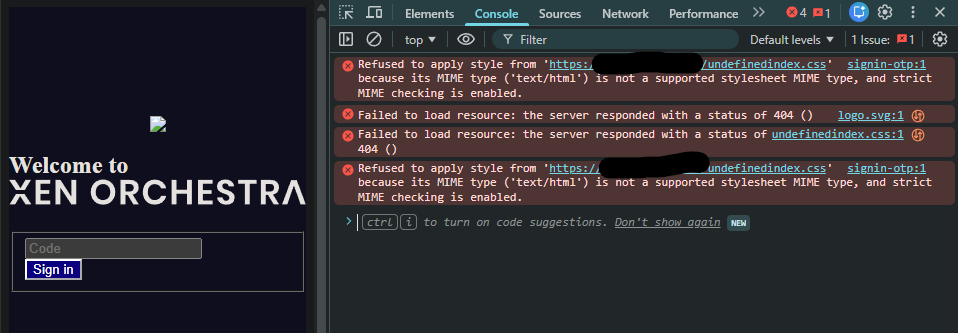

Also...

Thank you for taking a look at it and solving it so quickly, @florent.

It's a really nice experience when the bugs I report get looked at and triaged. I'm not saying everything needs to be priority 1, but I do appreciate being close to the process. If reporting bugs in a (hopefully) constructive way helps make things better, I'll keep contributing in my own way.

XO 6: dedicated thread for all your feedback!