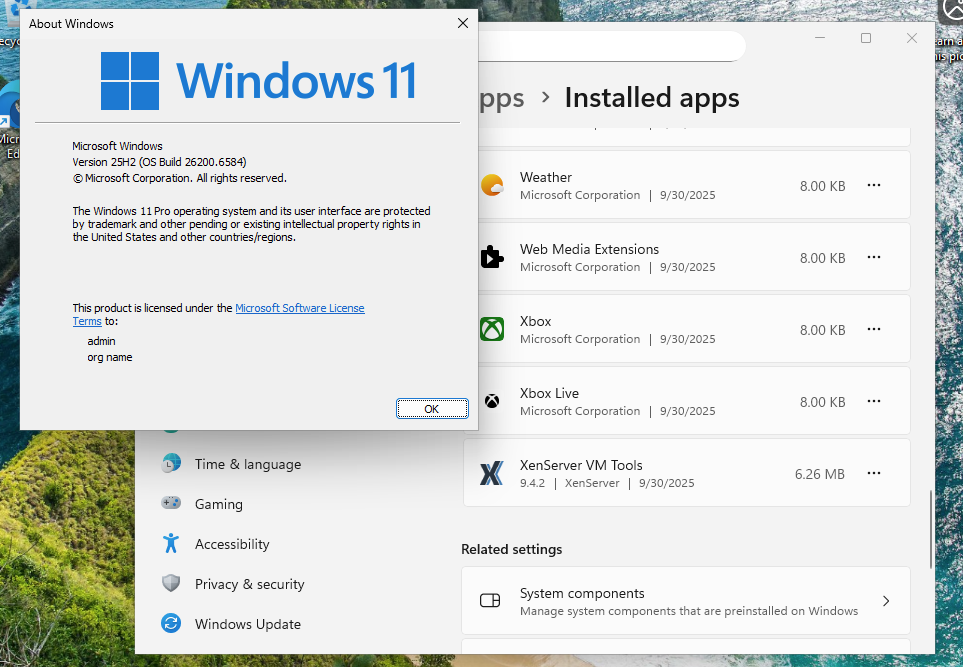

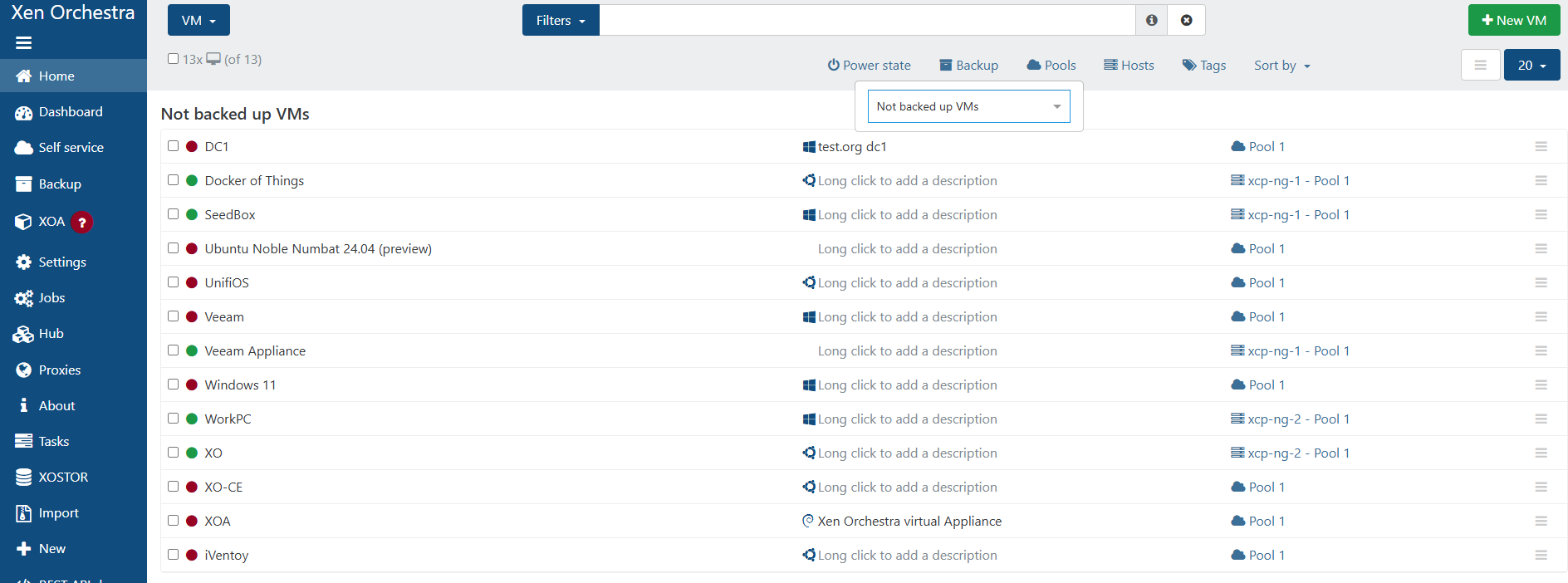

Finally just finished our migration from VMware to XCP-NG.

VMs - 34, a mix of Windows Server and Ubuntu Linux.

Pools - 3

Hosts - 6

Dell R660 - 2

Dell R640 - 4

While this project is mostly for my own use, it is open to others. Please use it at your own risk, and double-check my script before using it in a production environment. I am open to suggestions; please report any issues here - https://github.com/acebmxer/install_xen_orchestra/issues

With that said, I wanted to create my own script to install Xen Orchestra (XO) from sources using the information provided by https://docs.xen-orchestra.com/installation#from-the-sources. It took many tries to get it working just to see the login screen.

So far I have only tested it on Ubuntu 24.04.4.

https://github.com/acebmxer/install_xen_orchestra

# Xen Orchestra Installation Script

Automated installation script for [Xen Orchestra](https://xen-orchestra.com/) from source, based on the [official documentation](https://docs.xen-orchestra.com/installation#from-the-sources).

## Features

- Installs all required dependencies and prerequisites automatically

- Uses Node.js 20 LTS (with npm v10)

- Yarn package manager installed globally

- Self-signed SSL certificate generation for HTTPS

- Direct port binding (80 and 443) - no proxy required

- Systemd service for automatic startup

- Update functionality with commit comparison

- Automatic backups before updates (keeps last 5)

- Interactive restore from any available backup

- Rebuild functionality — fresh clone + clean build on the current branch, preserves settings

- Configurable via simple config file

- **Customizable service user** - run as any username or root, defaults to 'xo'

- **Automatic swap space management** - creates 2GB swap if needed for builds

- **NFS mount support** - automatically configures sudo permissions for remote storage

- **Memory-efficient builds** - prevents out-of-memory errors on low-RAM systems

## Quick Start

### 1. Clone this repository

```bash
git clone https://github.com/acebmxer/install_xen_orchestra.git
cd install_xen_orchestra
```

### 2. Configure the installation

Copy the sample configuration file and customize it:

```bash
cp sample-xo-config.cfg xo-config.cfg
```

Edit `xo-config.cfg` with your preferred settings:

```bash
nano xo-config.cfg
```

**Note:** If `xo-config.cfg` is not found when running the script, it will automatically be created from `sample-xo-config.cfg` with default settings.

### 3. Run the installation script

**Important:** Do NOT run this script with sudo. Run as a normal user with sudo privileges - the script will use sudo internally for commands that require elevated permissions.

```bash
./install-xen-orchestra.sh
```
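The two rules above (do not run as root, and fall back to the sample config when none exists) could be sketched roughly as follows. This is only an illustration: the function names `is_root_uid` and `ensure_config` are hypothetical and are not taken from `install-xen-orchestra.sh`, whose actual checks may differ.

```bash
# Hypothetical preflight helpers; not taken from install-xen-orchestra.sh.

# Succeeds (exit status 0) when the given numeric UID is root's.
is_root_uid() {
    [ "$1" -eq 0 ]
}

# Copies the sample config into place only when no config exists yet.
# $1 = directory that contains sample-xo-config.cfg
ensure_config() {
    dir=$1
    if [ ! -f "$dir/xo-config.cfg" ]; then
        cp "$dir/sample-xo-config.cfg" "$dir/xo-config.cfg"
    fi
}

# Possible usage at the top of an installer:
#   if is_root_uid "$(id -u)"; then
#       echo "Run as a normal user, not with sudo" >&2
#       exit 1
#   fi
#   ensure_config .
```

An existing `xo-config.cfg` is never overwritten in this sketch, so customized settings survive repeated runs.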

The `xo-config.cfg` file supports the following options:

| Option | Default | Description |
|---|---|---|
| `HTTP_PORT` | `80` | HTTP port for web interface |
| `HTTPS_PORT` | `443` | HTTPS port for web interface |
| `INSTALL_DIR` | `/opt/xen-orchestra` | Installation directory |
| `SSL_CERT_DIR` | `/etc/ssl/xo` | SSL certificate directory |
| `SSL_CERT_FILE` | `xo-cert.pem` | SSL certificate filename |
| `SSL_KEY_FILE` | `xo-key.pem` | SSL private key filename |
| `GIT_BRANCH` | `master` | Git branch (master, stable, or tag) |
| `BACKUP_DIR` | `/opt/xo-backups` | Backup directory for updates |
| `BACKUP_KEEP` | `5` | Number of backups to retain |
| `NODE_VERSION` | `20` | Node.js major version |
| `SERVICE_USER` | `xo` | Service user (any username, leave empty for root) |
| `DEBUG_MODE` | `false` | Enable debug logging |
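For orientation, a config file carrying the documented defaults might look like the sketch below. The `KEY=value` syntax is an assumption, inferred from the `SERVICE_USER=` hint in the troubleshooting notes; check `sample-xo-config.cfg` in the repository for the authoritative format.

```bash
# xo-config.cfg (illustrative only; KEY=value syntax is assumed,
# values are the documented defaults)
HTTP_PORT=80
HTTPS_PORT=443
INSTALL_DIR=/opt/xen-orchestra
SSL_CERT_DIR=/etc/ssl/xo
SSL_CERT_FILE=xo-cert.pem
SSL_KEY_FILE=xo-key.pem
GIT_BRANCH=master
BACKUP_DIR=/opt/xo-backups
BACKUP_KEEP=5
NODE_VERSION=20
SERVICE_USER=xo
DEBUG_MODE=false
```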

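To update an existing installation:

```bash
./install-xen-orchestra.sh --update
```

The update process backs up the current installation first:

- Backups are saved to `BACKUP_DIR` (default: `/opt/xo-backups`)
- The newest `BACKUP_KEEP` backups are retained (default: 5)
- Backups are numbered newest first: `[1]` is the most recent, `[5]` is the oldest

To restore a previous installation:

```bash
./install-xen-orchestra.sh --restore
```

The restore process lists the available backups and prompts for the one to restore.

Example output: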

```
==============================================
Available Backups
==============================================
[1] xo-backup-20260221_233000 (2026-02-21 06:30:00 PM EST) commit: a1b2c3d4e5f6 (newest)
[2] xo-backup-20260221_141500 (2026-02-21 09:15:00 AM EST) commit: 9f8e7d6c5b4a
[3] xo-backup-20260220_162000 (2026-02-20 11:20:00 AM EST) commit: 1a2b3c4d5e6f
[4] xo-backup-20260219_225200 (2026-02-19 05:52:00 PM EST) commit: 3c4d5e6f7a8b
[5] xo-backup-20260219_133000 (2026-02-19 08:30:00 AM EST) commit: 7d8e9f0a1b2c (oldest)

Enter the number of the backup to restore [1-5], or 'q' to quit:
```

After a successful restore the confirmed commit is displayed:

```
[SUCCESS] Restore completed successfully!
[INFO] Restored commit: a1b2c3d4e5f6
```
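The retention behaviour (keep only the newest `BACKUP_KEEP` backups) can be illustrated with a small sketch. It is not the script's actual implementation: `prune_backups` is a hypothetical name, and the approach assumes the `xo-backup-YYYYMMDD_HHMMSS` directory naming seen in the example output, so that a reverse text sort lists the newest backup first.

```bash
# Hypothetical helper: keep the newest $2 backups under $1, delete the rest.
# Assumes directories are named xo-backup-YYYYMMDD_HHMMSS, so sorting the
# names in reverse order puts the newest backup first.
prune_backups() {
    backup_dir=$1
    keep=$2
    ls -1d "$backup_dir"/xo-backup-* 2>/dev/null \
        | sort -r \
        | tail -n +"$((keep + 1))" \
        | while IFS= read -r old; do
            rm -rf -- "$old"
        done
}

# Possible usage with the documented defaults:
#   prune_backups /opt/xo-backups 5
```

Because the timestamp is zero-padded and ordered from year down to second, text order and chronological order coincide, which is why no date parsing is needed here.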

If your installation becomes corrupted or broken, use `--rebuild` to do a fresh clone and clean build of your current branch without losing any settings:

```bash
./install-xen-orchestra.sh --rebuild
```

The rebuild process will:

- Take a backup first (same as `--update`, saved to `BACKUP_DIR`)
- Clear `INSTALL_DIR` and do a fresh `git clone` of the same branch

**Note:** Settings stored in `/etc/xo-server` (config.toml) and `/var/lib/xo-server` (databases and state) are not touched during a rebuild, so all your connections, users, and configuration are preserved.

After installation, Xen Orchestra runs as a systemd service:

```bash
# Start the service
sudo systemctl start xo-server

# Stop the service
sudo systemctl stop xo-server

# Check status
sudo systemctl status xo-server

# View logs
sudo journalctl -u xo-server -f
```

After installation, access the web interface:

- HTTP: `http://your-server-ip`
- HTTPS: `https://your-server-ip`

**Note:** If you changed `HTTP_PORT` or `HTTPS_PORT` in `xo-config.cfg` from the defaults (80/443), append the port to the URL, e.g. `http://your-server-ip:8080`
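The port rule in the note can be written down as a tiny helper. `xo_url` is a hypothetical name used only for illustration; it simply omits the port when it matches the scheme's default.

```bash
# Hypothetical helper: print the URL for a scheme, host and port,
# omitting the port when it is the scheme's default (80 for http, 443 for https).
xo_url() {
    scheme=$1
    host=$2
    port=$3
    case "$scheme:$port" in
        http:80|https:443) printf '%s://%s\n' "$scheme" "$host" ;;
        *)                 printf '%s://%s:%s\n' "$scheme" "$host" "$port" ;;
    esac
}

# Possible usage:
#   xo_url http  your-server-ip 8080
#   xo_url https your-server-ip 443
```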

Default credentials:

- Username: `admin@admin.net`
- Password: `admin`

**Warning:** Change the default password immediately after first login!

To switch to a different branch (e.g., from master to stable):

1. Edit `xo-config.cfg` and change `GIT_BRANCH`
2. Run `./install-xen-orchestra.sh --update`

The script will automatically fetch and checkout the new branch during the update process.

Note: The script automatically creates 2GB swap space if insufficient memory is detected during builds to prevent out-of-memory errors.

The script automatically installs all required dependencies on Debian/Ubuntu and RHEL/CentOS/Fedora.

If the service does not start, check the service logs:

```bash
sudo journalctl -u xo-server -n 50
```

If running as non-root, the service uses `CAP_NET_BIND_SERVICE` to bind to privileged ports. Ensure systemd is configured correctly.
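One way to check the systemd side is to look for the capability in the unit file. The sketch below assumes the capability is granted through systemd's `AmbientCapabilities=` directive; whether this script's unit file uses exactly that directive is an assumption, and `unit_grants_bind_cap` is a hypothetical name.

```bash
# Hypothetical check: does a unit file grant CAP_NET_BIND_SERVICE through
# AmbientCapabilities= ? (Assumed directive; verify against your unit file.)
# $1 = path to the unit file
unit_grants_bind_cap() {
    grep -Eq '^[[:space:]]*AmbientCapabilities=.*CAP_NET_BIND_SERVICE' "$1"
}

# Possible usage:
#   if unit_grants_bind_cap /etc/systemd/system/xo-server.service; then
#       echo "capability present"
#   else
#       echo "capability missing: ports 80/443 will fail for a non-root user"
#   fi
```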

If a build is broken or incomplete, the easiest fix is to use the built-in rebuild command, which takes a backup first:

```bash
./install-xen-orchestra.sh --rebuild
```

Or manually (if running as non-root `SERVICE_USER`):

```bash
cd /opt/xen-orchestra
rm -rf node_modules

# Replace 'xo' with your SERVICE_USER if different
sudo -u xo yarn
sudo -u xo yarn build
```

If the build process fails with exit code 137 (killed), your system ran out of memory. The script handles this automatically by creating 2GB of swap space when it detects insufficient memory.
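Exit code 137 is not arbitrary: a shell reports 128 plus the signal number when a process is killed by a signal, and 137 - 128 = 9, which is SIGKILL (the signal the kernel's OOM killer sends). A minimal sketch of that decoding, with `signal_from_exit` as a hypothetical helper name:

```bash
# For a process killed by a signal, the shell reports 128 + signal number.
# Prints the signal number, or nothing if the code is outside that range.
signal_from_exit() {
    code=$1
    if [ "$code" -gt 128 ] && [ "$code" -le 255 ]; then
        echo "$((code - 128))"
    fi
}

# Possible usage after a failed build:
#   yarn build
#   sig=$(signal_from_exit "$?")
#   [ "$sig" = "9" ] && echo "build was killed (SIGKILL), likely out of memory"
```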

To manually check/add swap:

```bash
# Check current swap
free -h

# Create 2GB swap file if needed
sudo fallocate -l 2G /swapfile
sudo chmod 600 /swapfile
sudo mkswap /swapfile
sudo swapon /swapfile
echo '/swapfile none swap sw 0 0' | sudo tee -a /etc/fstab
```
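A check of the kind "is there enough memory for a build?" might look like the sketch below. How the script actually decides is not documented here, so the threshold and the function name `needs_swap` are assumptions made for illustration; the sketch simply adds up `MemTotal` and `SwapTotal` from `/proc/meminfo`.

```bash
# Hypothetical check: is RAM + swap below a threshold?
# $1 = threshold in kB (the right value for XO builds is an assumption)
# $2 = meminfo file to read (defaults to /proc/meminfo)
needs_swap() {
    threshold_kb=$1
    meminfo=${2:-/proc/meminfo}
    total_kb=$(awk '/^MemTotal:|^SwapTotal:/ { sum += $2 } END { printf "%d\n", sum }' "$meminfo")
    [ "$total_kb" -lt "$threshold_kb" ]
}

# Possible usage (4194304 kB = 4 GB, an illustrative threshold):
#   if needs_swap 4194304; then
#       echo "Low memory: consider adding swap before building"
#   fi
```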

If you get an error when adding NFS remote storage:

```
mount.nfs: not installed setuid - "user" NFS mounts not supported
```

The script automatically handles this by configuring sudo permissions for your service user (default: xo) to run mount/umount commands including NFS-specific helpers.

If you encounter this issue on an existing installation:

```bash
# Update sudoers configuration (replace 'xo' with your SERVICE_USER if different)
sudo tee /etc/sudoers.d/xo-server-xo > /dev/null << 'EOF'
# Allow xo-server user to mount/unmount without password
Defaults:xo !requiretty
xo ALL=(ALL:ALL) NOPASSWD:SETENV: /bin/mount, /usr/bin/mount, /bin/umount, /usr/bin/umount, /bin/findmnt, /usr/bin/findmnt, /sbin/mount.nfs, /usr/sbin/mount.nfs, /sbin/mount.nfs4, /usr/sbin/mount.nfs4, /sbin/umount.nfs, /usr/sbin/umount.nfs, /sbin/umount.nfs4, /usr/sbin/umount.nfs4
EOF

sudo chmod 440 /etc/sudoers.d/xo-server-xo
sudo systemctl restart xo-server
```

If NFS mounts succeed but you get permission errors when writing:

```
EACCES: permission denied, open '/run/xo-server/mounts/.keeper_*'
```

This is a UID/GID mismatch between the xo-server user and your NFS export permissions:

**Option 1: Run as root (recommended for simplicity)**

```bash
# Edit config
nano xo-config.cfg
# Set: SERVICE_USER=
# (leave empty to run as root)

# Update service (replace 'xo' with your SERVICE_USER if different)
sudo sed -i 's/User=xo/User=root/' /etc/systemd/system/xo-server.service
sudo chown -R root:root /opt/xen-orchestra /var/lib/xo-server /etc/xo-server
sudo systemctl daemon-reload
sudo systemctl restart xo-server
```

Option 2: Configure NFS for your service user's UID

On your NFS server, adjust exports to allow your service user's UID (check with id <username>), or use appropriate squash settings in your NFS export configuration.
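To see the mismatch for yourself, compare the service user's numeric UID with the numeric owner of the mounted directory. The sketch below uses GNU `stat` (`-c %u`), as found on Ubuntu; `owner_uid` is a hypothetical helper name, and the mount path is a placeholder for whatever appears in your own error message.

```bash
# Hypothetical helper: print the numeric UID that owns a path.
# Uses GNU stat (-c %u); BSD/macOS stat takes different flags.
owner_uid() {
    stat -c %u "$1"
}

# Possible usage (replace 'xo' and the path with your own values):
#   svc_uid=$(id -u xo)
#   dir_uid=$(owner_uid /run/xo-server/mounts/<your-mount>)
#   [ "$svc_uid" = "$dir_uid" ] || echo "UID mismatch: $svc_uid vs $dir_uid"
```

A match here does not prove the export is writable (squash settings on the server can still remap the UID), but a mismatch is a quick confirmation of the problem described above.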

Ensure Redis is running:

```bash
redis-cli ping
# Should respond with: PONG
```

- Runs as the `xo` user by default (customizable to any username; leave empty for root)
- Sudo access is limited to mount (`/bin/mount`, `/usr/bin/mount`, `/sbin/mount.nfs`, etc.), umount (`/bin/umount`, `/usr/bin/umount`, `/sbin/umount.nfs`, etc.), and findmnt (`/bin/findmnt`, `/usr/bin/findmnt`)
- Sudoers rules are written to `/etc/sudoers.d/xo-server-<username>` with NOPASSWD for specific commands only

This installation script is provided as-is. Xen Orchestra itself is licensed under AGPL-3.0.

[info] Updating Xen Orchestra from 'cb85e44ae' to 'eed3d72f7'

The first log is from the automatic schedule, before the latest update.

2026-02-17T18_00_00.012Z - backup NG.txt

The latest log is from after the update. Backup completed successfully.

2026-02-17T18_22_52.199Z - backup NG.txt

Updated the AMD Ryzen pool at home and the two Intel Dell R660 and R640 pools at work. No issues to report back.

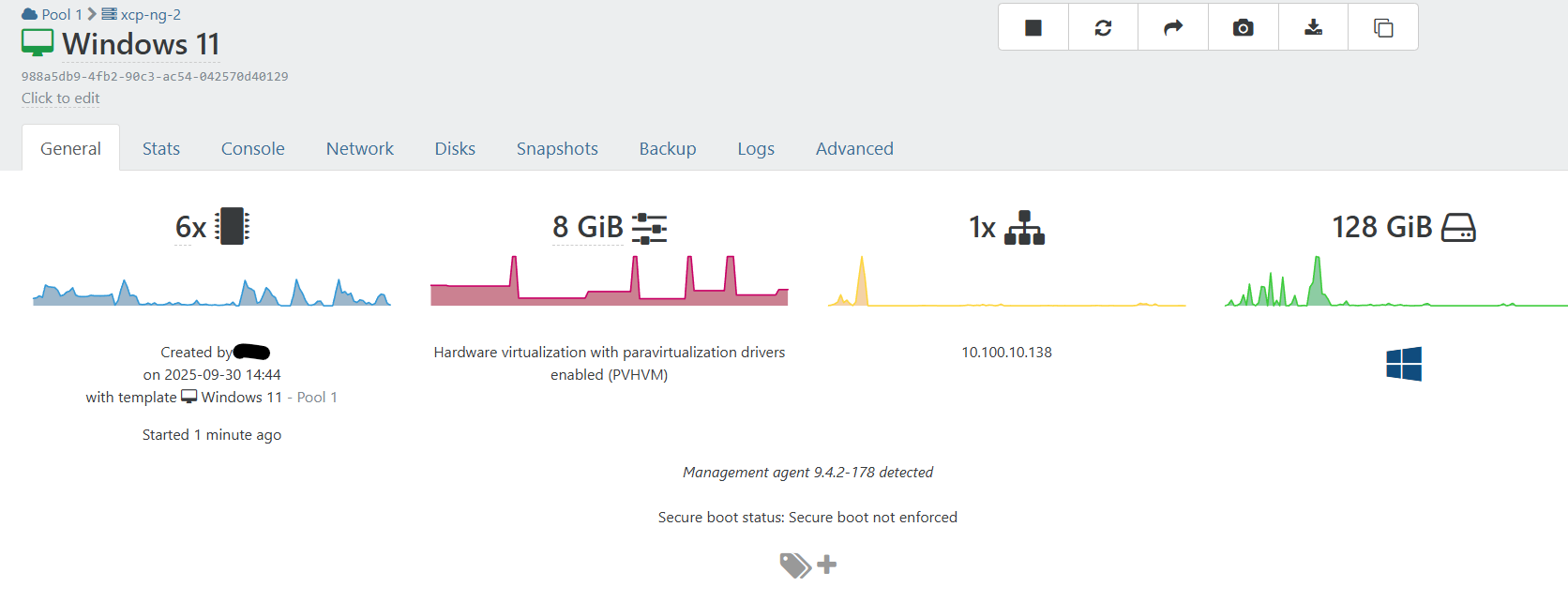

Thanks for the reply. The update was successful. The Windows Server 2025 ISO now installs properly.

At work I was not able to install the default certs for UEFI because one failed to download. I ran these updates and was able to successfully install the certs on the host.

I have applied the updates to my 2 AMD home lab hosts: Ryzen 7700X and 7950X on AMD X670E motherboards. No issues to report. A Windows VM migrated between hosts with no issues. No issues booting a Windows VM that had UEFI enabled prior to the update.

Reach out to the author of whichever script you are using to deploy XO from sources.

With this script - https://github.com/ronivay/XenOrchestraInstallerUpdater - there is an option in the config file to enable XOv6. There are concerns that it is not fully ready, so it is disabled by default. I have not had any issues related to that so far.

Again, thank you so much for your time trying to assist with this. The script's author has responded and updated the script, and it works correctly now.

Again, thanks to all the Vates staff for all the hard work you do that goes unnoticed.

@dinhngtu Well, those directions didn't work, as I was unable to locate the setup.exe file in any of the VMware directories. I asked Google AI how to force-uninstall VMware Tools and started to follow those directions, manually deleting the services, folders, and registry entries. However, the VM became completely unbootable. It would not even show the BIOS logo on startup. The VM would appear to be starting up, but no console screen would load. I even tried to restore from a backup taken two days ago. That VM started up, and of course Windows wanted to do updates, and the VM became unbootable again.

I deleted all the previous VMs and did a fresh migration. This time I removed the tools prior to the migration. The VM is back up and working.

Did a dirty upgrade, left all boxes checked, and the VM is set up to update drivers via Windows Update....

I have rebooted the VM and it restarts with no issues.

@dinhngtu Same here. Mine were two fresh VMs with a fresh install of Server 2025. The older ISO works, the newer ISO does not. For me, with the newer ISO the system rebooted 2, maybe 3 times, then crashed to a hard power-off of the VM.

Again, no Xen tools were installed, as the OS never finished installing on a fresh VM. No OS upgrade.

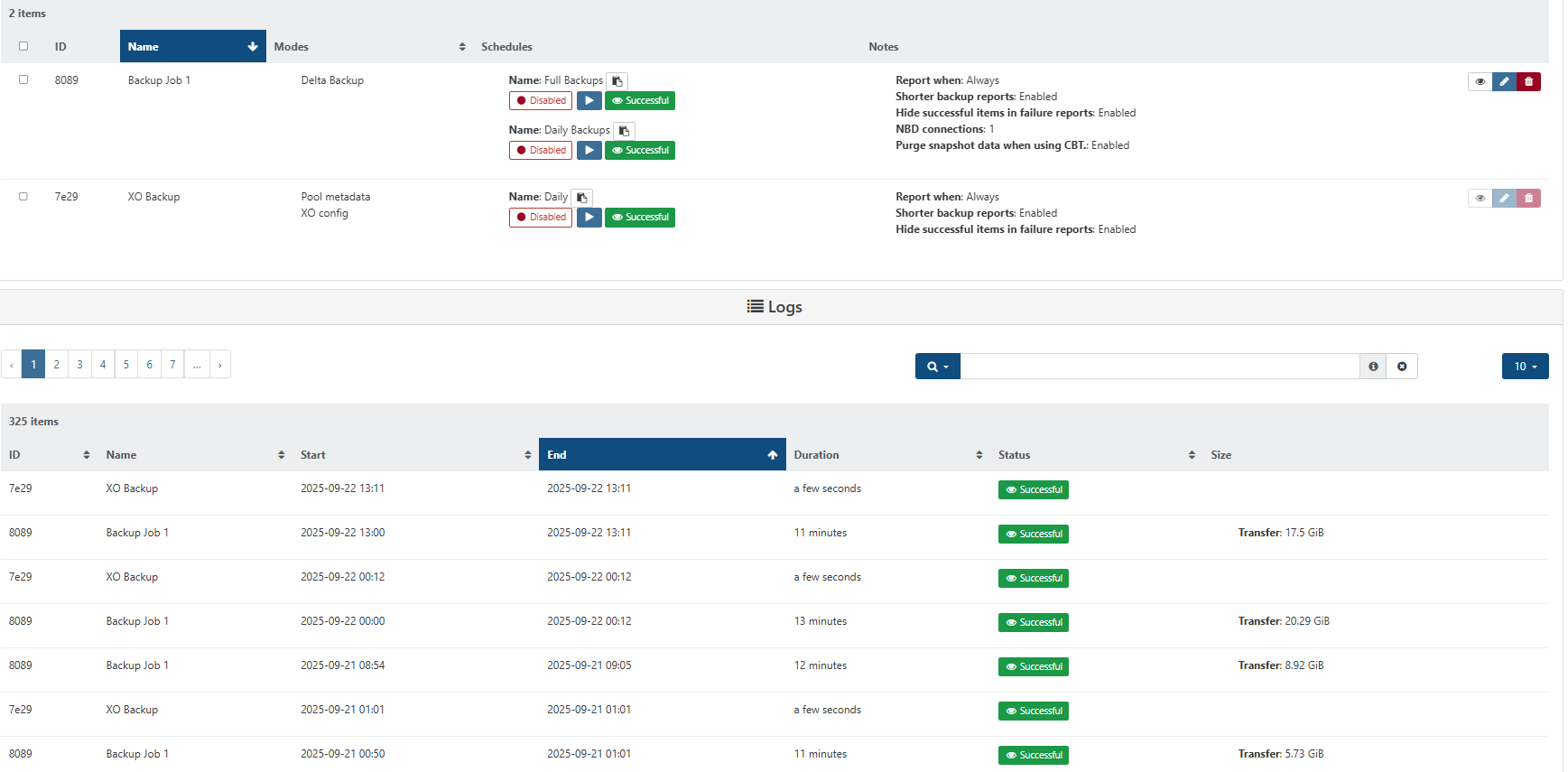

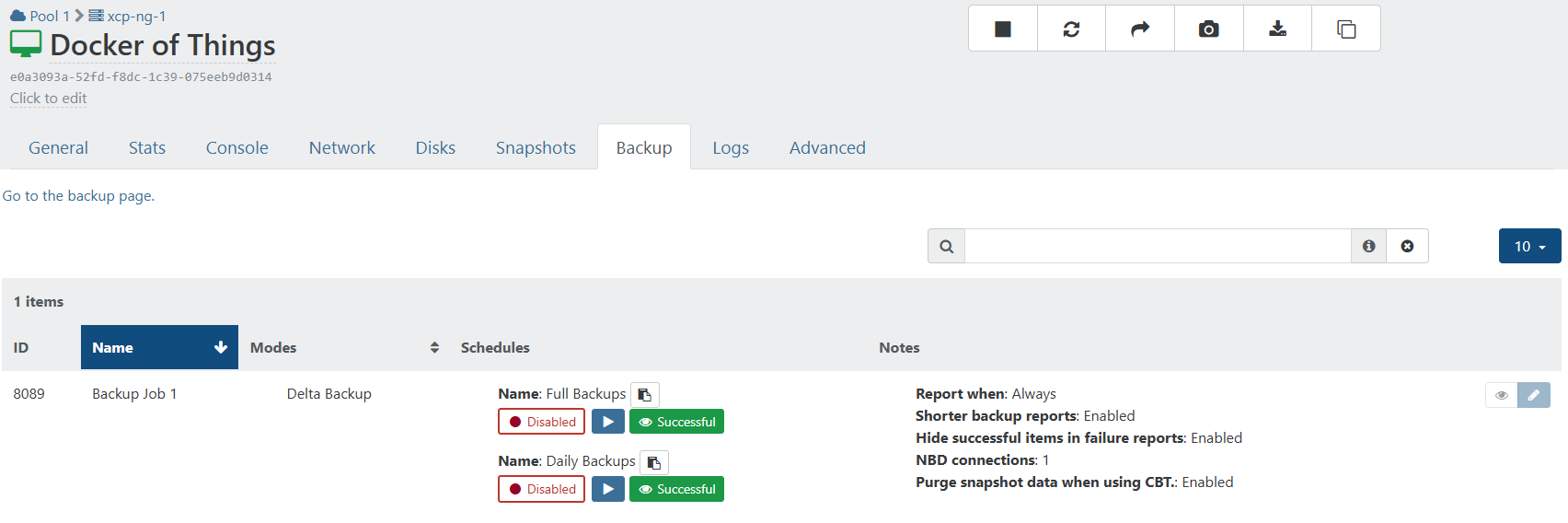

As @MBNext stated, disabling "Purge snapshot data when using CBT" worked for me.

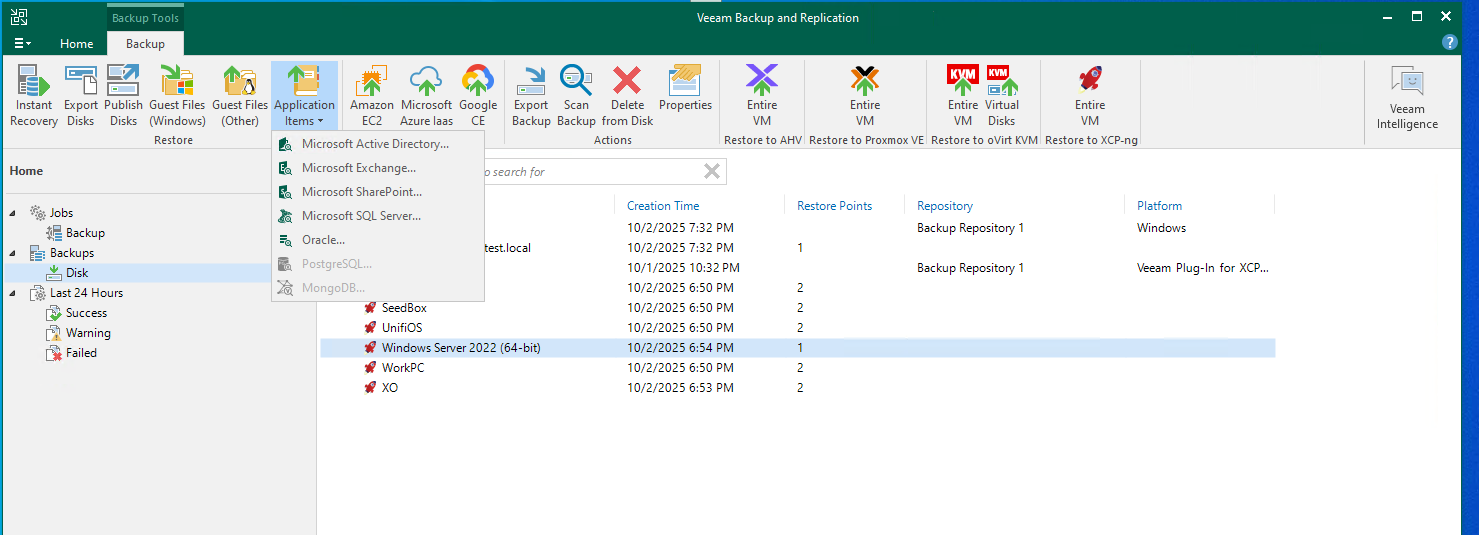

Correction: XCP-NG backups are application aware... just not accessible from the right-click menu on the backup. If you click from the menu bar at the top, it is available...

I thought restarting the toolstack affected both hosts? Either way, I did try that, but on host 1 originally. So I restarted it on host 2 (where the problem is). Still no change. I rebooted host 2 and that seemed to do the trick. It took a few minutes for garbage collection to complete. All clean now.

Thanks.

@Pilow I didn't realize Veeam support for XCP-ng had entered public beta. Downloading now and going to start testing.

Not sure if this has been mentioned before. You can create a tag called "Backup", and in your backup job you can have it back up every VM with that tag. Why is this not an option for mirror jobs? I have some VMs I want to mirror to another remote, while others not so much.

Having tags would make this a lot easier to manage, so you don't have to re-edit the mirror job to add or remove a VM to be mirrored.

I just checked this on XOCE commit d76e7 and it shows the same.

Updated to commit 04338 and the backups are fixed. Thank you.

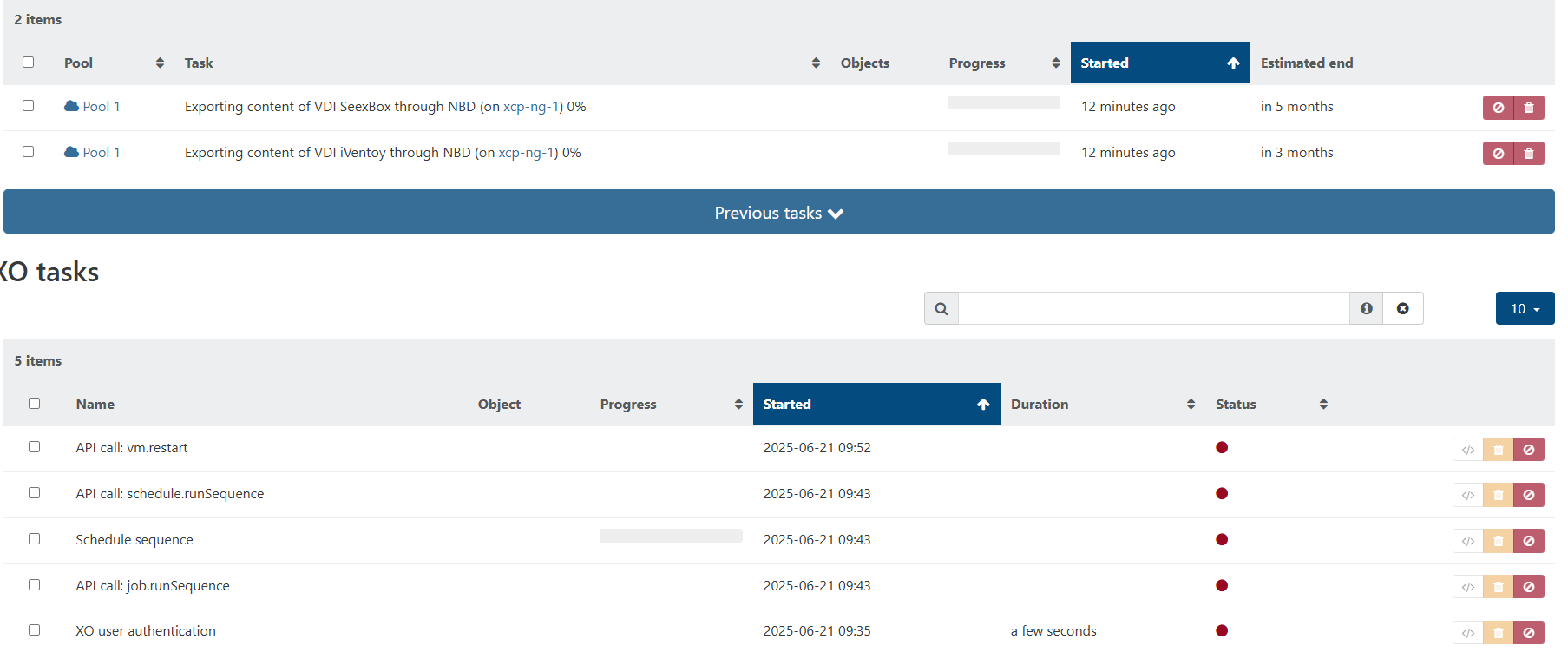

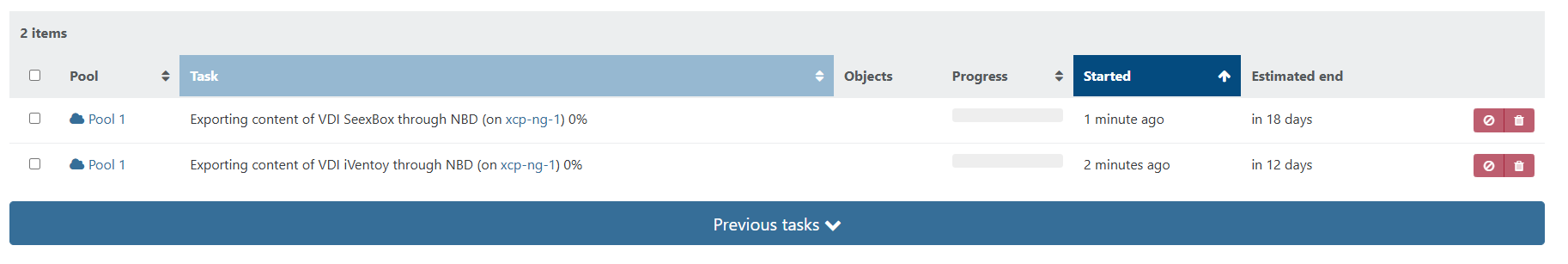

Last night 2 of my VMs failed to complete a delta backup. As the tasks could not be cancelled in any way, I rebooted XO (built from sources). The tasks still showed "running", so I restarted the toolstack on host 1 and the tasks cleared. I attempted to restart the failed backups, and again the backup just hangs. It creates the snapshot but never transfers data. The remote is in the same location as the NFS storage the VMs are running from, so I know the storage is good.

After a few more reboots of XO and the toolstack, I rebooted both hosts, and each time the backups get stuck. If I try to start a new backup (same job), all VMs hang. I tried to run a full delta backup, with the same result. I tried to update XO, but I am on the current master build as of today (6b263). I tried a forced update, and the backup still never completes.

I built a new VM for XO and installed from sources, and the backups still fail.

Here is one of the logs from the backups...

```json
{
  "data": {
    "mode": "delta",
    "reportWhen": "always",
    "hideSuccessfulItems": true
  },
  "id": "1750503695411",
  "jobId": "95ac8089-69f3-404e-b902-21d0e878eec2",
  "jobName": "Backup Job 1",
  "message": "backup",
  "scheduleId": "76989b41-8bcf-4438-833a-84ae80125367",
  "start": 1750503695411,
  "status": "failure",
  "infos": [
    {
      "data": {
        "vms": [
          "b25a5709-f1f8-e942-f0cc-f443eb9b9cf3",
          "3446772a-4110-7a2c-db35-286c73af4ab4",
          "bce2b7f4-d602-5cdf-b275-da9554be61d3",
          "e0a3093a-52fd-f8dc-1c39-075eeb9d0314",
          "afbef202-af84-7e64-100a-e8a4c40d5130"
        ]
      },
      "message": "vms"
    }
  ],
  "tasks": [
    {
      "data": {
        "type": "VM",
        "id": "b25a5709-f1f8-e942-f0cc-f443eb9b9cf3",
        "name_label": "SeedBox"
      },
      "id": "1750503696510",
      "message": "backup VM",
      "start": 1750503696510,
      "status": "interrupted",
      "tasks": [
        {
          "id": "1750503696519",
          "message": "clean-vm",
          "start": 1750503696519,
          "status": "success",
          "end": 1750503696822,
          "result": {
            "merge": false
          }
        },
        {
          "id": "1750503697911",
          "message": "snapshot",
          "start": 1750503697911,
          "status": "success",
          "end": 1750503699564,
          "result": "6e2edbe9-d4bd-fd23-28b9-db4b03219e96"
        },
        {
          "data": {
            "id": "1575a1d8-3f87-4160-94fc-b9695c3684ac",
            "isFull": false,
            "type": "remote"
          },
          "id": "1750503699564:0",
          "message": "export",
          "start": 1750503699564,
          "status": "success",
          "tasks": [
            {
              "id": "1750503701979",
              "message": "clean-vm",
              "start": 1750503701979,
              "status": "success",
              "end": 1750503702141,
              "result": {
                "merge": false
              }
            }
          ],
          "end": 1750503702142
        }
      ],
      "warnings": [
        {
          "data": {
            "attempt": 1,
            "error": "invalid HTTP header in response body"
          },
          "message": "Retry the VM backup due to an error"
        }
      ]
    },
    {
      "data": {
        "type": "VM",
        "id": "3446772a-4110-7a2c-db35-286c73af4ab4",
        "name_label": "XO"
      },
      "id": "1750503696512",
      "message": "backup VM",
      "start": 1750503696512,
      "status": "interrupted",
      "tasks": [
        {
          "id": "1750503696518",
          "message": "clean-vm",
          "start": 1750503696518,
          "status": "success",
          "end": 1750503696693,
          "result": {
            "merge": false
          }
        },
        {
          "id": "1750503712472",
          "message": "snapshot",
          "start": 1750503712472,
          "status": "success",
          "end": 1750503713915,
          "result": "a1bdef52-142c-5996-6a49-169ef390aa2e"
        },
        {
          "data": {
            "id": "1575a1d8-3f87-4160-94fc-b9695c3684ac",
            "isFull": false,
            "type": "remote"
          },
          "id": "1750503713915:0",
          "message": "export",
          "start": 1750503713915,
          "status": "success",
          "tasks": [
            {
              "id": "1750503716280",
              "message": "clean-vm",
              "start": 1750503716280,
              "status": "success",
              "end": 1750503716383,
              "result": {
                "merge": false
              }
            }
          ],
          "end": 1750503716385
        }
      ],
      "warnings": [
        {
          "data": {
            "attempt": 1,
            "error": "invalid HTTP header in response body"
          },
          "message": "Retry the VM backup due to an error"
        }
      ]
    },
    {
      "data": {
        "type": "VM",
        "id": "bce2b7f4-d602-5cdf-b275-da9554be61d3",
        "name_label": "iVentoy"
      },
      "id": "1750503702145",
      "message": "backup VM",
      "start": 1750503702145,
      "status": "interrupted",
      "tasks": [
        {
          "id": "1750503702148",
          "message": "clean-vm",
          "start": 1750503702148,
          "status": "success",
          "end": 1750503702233,
          "result": {
            "merge": false
          }
        },
        {
          "id": "1750503702532",
          "message": "snapshot",
          "start": 1750503702532,
          "status": "success",
          "end": 1750503704850,
          "result": "05c5365e-3bc5-4640-9b29-0684ffe6d601"
        },
        {
          "data": {
            "id": "1575a1d8-3f87-4160-94fc-b9695c3684ac",
            "isFull": false,
            "type": "remote"
          },
          "id": "1750503704850:0",
          "message": "export",
          "start": 1750503704850,
          "status": "interrupted",
          "tasks": [
            {
              "id": "1750503706813",
              "message": "transfer",
              "start": 1750503706813,
              "status": "interrupted"
            }
          ]
        }
      ],
      "infos": [
        {
          "message": "Transfer data using NBD"
        }
      ]
    },
    {
      "data": {
        "type": "VM",
        "id": "e0a3093a-52fd-f8dc-1c39-075eeb9d0314",
        "name_label": "Docker of Things"
      },
      "id": "1750503716389",
      "message": "backup VM",
      "start": 1750503716389,
      "status": "interrupted",
      "tasks": [
        {
          "id": "1750503716395",
          "message": "clean-vm",
          "start": 1750503716395,
          "status": "success",
          "warnings": [
            {
              "data": {
                "path": "/xo-vm-backups/e0a3093a-52fd-f8dc-1c39-075eeb9d0314/20250604T160135Z.json",
                "actual": 6064872448,
                "expected": 6064872960
              },
              "message": "cleanVm: incorrect backup size in metadata"
            }
          ],
          "end": 1750503716886,
          "result": {
            "merge": false
          }
        },
        {
          "id": "1750503717182",
          "message": "snapshot",
          "start": 1750503717182,
          "status": "success",
          "end": 1750503719640,
          "result": "9effb56d-68e6-8015-6bd5-64fa65acbada"
        },
        {
          "data": {
            "id": "1575a1d8-3f87-4160-94fc-b9695c3684ac",
            "isFull": false,
            "type": "remote"
          },
          "id": "1750503719640:0",
          "message": "export",
          "start": 1750503719640,
          "status": "interrupted",
          "tasks": [
            {
              "id": "1750503721601",
              "message": "transfer",
              "start": 1750503721601,
              "status": "interrupted"
            }
          ]
        }
      ],
      "infos": [
        {
          "message": "Transfer data using NBD"
        }
      ]
    }
  ],
  "end": 1750504870213,
  "result": {
    "message": "worker exited with code null and signal SIGTERM",
    "name": "Error",
    "stack": "Error: worker exited with code null and signal SIGTERM\n at ChildProcess.<anonymous> (file:///opt/xo/xo-builds/xen-orchestra-202506202218/@xen-orchestra/backups/runBackupWorker.mjs:24:48)\n at ChildProcess.emit (node:events:518:28)\n at ChildProcess.patchedEmit [as emit] (/opt/xo/xo-builds/xen-orchestra-202506202218/@xen-orchestra/log/configure.js:52:17)\n at Process.ChildProcess._handle.onexit (node:internal/child_process:293:12)\n at Process.callbackTrampoline (node:internal/async_hooks:130:17)"
  }
}
```
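The `start` and `end` fields in the log are epoch timestamps in milliseconds, so the time the job spent before the worker received SIGTERM can be worked out directly. A small sketch (`elapsed_seconds` is just an illustrative helper name):

```bash
# Elapsed whole seconds between two epoch-millisecond timestamps.
elapsed_seconds() {
    start_ms=$1
    end_ms=$2
    echo "$(( (end_ms - start_ms) / 1000 ))"
}

# Top-level "start" and "end" values from the log above.
elapsed_seconds 1750503695411 1750504870213   # prints 1174
```

That is 1174 seconds, roughly 19.5 minutes, between the job starting and the worker being terminated, while the two `transfer` tasks stayed in the `interrupted` state with no `end` timestamp.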