Posts

-

RE: Problem: Encrypted Remotes

@florent I couldn't map it directly to XO, so I mounted it on Linux.

-

RE: Problem: Encrypted Remotes

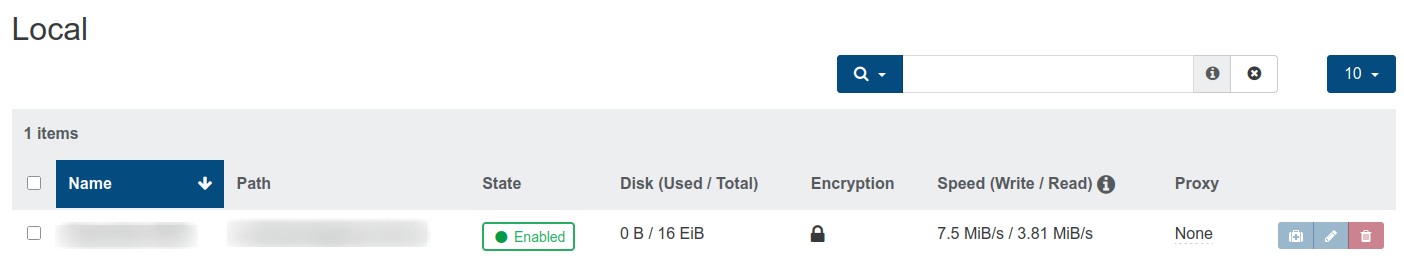

@florent It's actually only 3TB. It's a local S3 mount on the XO server that I mapped. The size shown is wrong.

-

RE: Problem: Encrypted Remotes

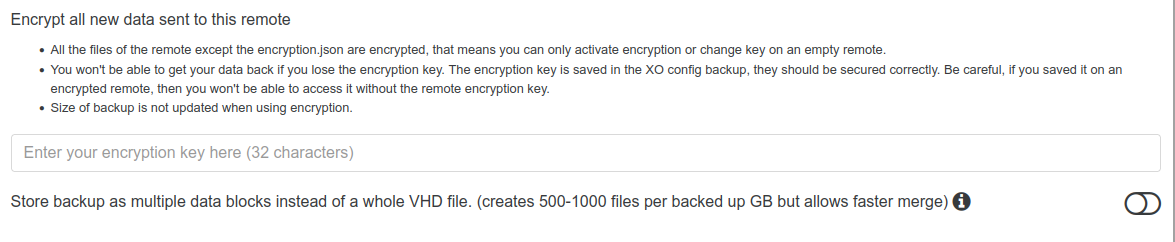

@florent After setting a 32-character hexadecimal password, encryption was applied.

My question now is whether encryption is applied only to "the remote drive that acts as a vault" or to each stored file.
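For anyone hitting the same problem: the post above reports that a 32-character hexadecimal string was accepted as the key. A minimal sketch of one way to generate such a string on Linux follows. The function name `gen_hex_key` is made up for illustration, and whether XO accepts other key formats is not something this thread confirms.

```shell
#!/bin/sh
# Hypothetical helper: print 32 random hexadecimal characters (16 bytes).
# Assumption: a 32-character hex string is what the key field accepted in
# the post above; other formats were not tested there.
gen_hex_key() {
    # Read 16 bytes from the kernel RNG, print them as hex without
    # offsets (-An), then strip the spaces and the newline od adds.
    od -An -tx1 -N16 /dev/urandom | tr -d ' \n'
    echo
}
```

Calling `gen_hex_key` prints one line that can be pasted into the key field. Keep a copy somewhere safe.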

-

Problem: Encrypted Remotes

What should I configure as a key? Typing the characters didn't work.

Version: Xen Orchestra, commit 6277a

-

RE: Re-add a repaired master node to the pool

@Andrew, @bvitnik and @DustinB In my tests, I did the following. I went through this process twice and it worked both times. To simulate a hardware failure on the master node, I simply turned it off.

If the pool master is down or unresponsive due to a hardware failure, follow these steps to restore operations:

- Use an SSH client to log in to a slave host in the pool.

- Run the following command on the slave host to promote it to the new pool master:

  ```
  xe pool-emergency-transition-to-master
  ```

- Confirm the change of pool master and verify the hosts present in the pool:

  ```
  xe pool-list
  xe host-list
  ```

  Even if it is down, the old master node will appear in the listing.

- Re-add the pool in XCP-ng or XO using the IP of the new master node.

- After resolving the hardware issues on the old master node, start it up. When it finishes booting, it will be recognized as a slave node.

In testing, I did not need to run any other commands. However, if the node is not recognized, try running this on it after accessing it via SSH:

```
xe pool-recover-slaves
```

I didn't understand why it worked. It seemed like "magic"!
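The manual steps above can be collected into a small shell sketch. It uses only the `xe` commands quoted in this post. The function names are made up for illustration, and the file is meant to be sourced and run by hand on the surviving host, not used as an unattended failover script.

```shell
#!/bin/sh
# Sketch of the manual failover steps from this post.
# Function names are hypothetical; only the xe subcommands come from the post.

# Run on a surviving slave host: promote it, then list the pool and its hosts.
promote_and_verify() {
    xe pool-emergency-transition-to-master || return 1
    xe pool-list
    # The old master is still listed here even while it is powered off.
    xe host-list
}

# Only needed if the repaired host does not rejoin on its own after booting.
reconnect_members() {
    xe pool-recover-slaves
}
```

In the post, `xe pool-recover-slaves` was run on the repaired node. As far as I know, the XenServer documentation describes it as a command issued on the new master, so it may be worth trying there as well.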

-

RE: Re-add a repaired master node to the pool

@DustinB I use the open community version.

-

RE: Re-add a repaired master node to the pool

@DustinB I use a dedicated Dell SAN storage.

-

Re-add a repaired master node to the pool

I am doing a lot of testing before putting my environment into production.

Suppose the pool has two nodes, one master node and the other a slave node. In case the master node fails due to hardware issues, I saw that the slave node can be changed to master using the command "xe pool-emergency-transition-to-master".

But when the old master server is repaired, how can I add it back? Won't I have two masters at the same time? Will a conflict occur?

In the tests I performed, when shutting down the master node, the VMs running on it were also shut down and not migrated to the slave node.

Links consulted:

https://xcp-ng.org/forum/topic/8361/xo-new-pool-master

https://xcp-ng.org/forum/topic/4075/pool-master-down-what-steps-need-done-next

-

RE: "Virtual Disk Not On Preferred Path" error on Dell Storages when mapping volumes

@cairoti said in "Virtual Disk Not On Preferred Path" error on Dell Storages when mapping volumes:

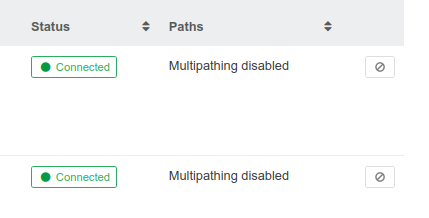

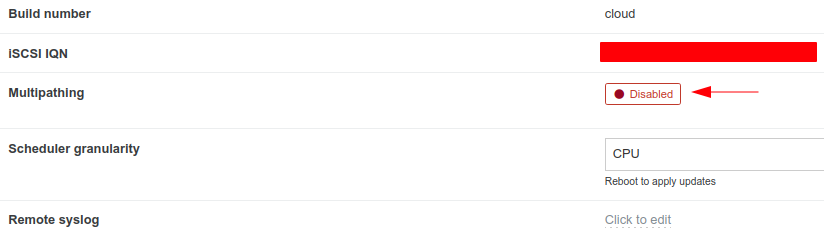

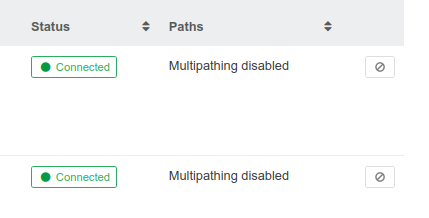

The description below appears in the mapped volume in XO:

I don't know if the image has any relation to the problem.

The "Multipathing" option was not enabled on the hosts in the pool. So even though there was more than one connection to the Dell Storage, the warning message was displayed. The controller to be used could be 0 or 1. But because "Multipathing" was disabled, the storage automatically switched from 1 to 0 for some mapped volumes because it believed there was only one possible path.

I consider this issue resolved!

-

RE: "Virtual Disk Not On Preferred Path" error on Dell Storages when mapping volumes

The description below appears in the mapped volume in XO:

I don't know if the image has any relation to the problem.

-

"Virtual Disk Not On Preferred Path" error on Dell Storages when mapping volumes

Hi, when I map Dell storage volumes in XCP-ng, I get a "Virtual Disk Not On Preferred Path" error in the Dell management software.

In the link below I posted in the Dell community, and from the answer given I understood that it could be some configuration that I didn't do in XCP-ng. But what would it be?

Volumes mapped to "RAID Controller Module in Slot 0" do not exhibit the issue. However, volumes mapped to "RAID Controller Module in Slot 1" are changed to "RAID Controller Module in Slot 0", which causes the issue.

Has anyone dealt with this issue?

-

RE: vm start delay - does it work yet?

@payback007 said in vm start delay - does it work yet?:

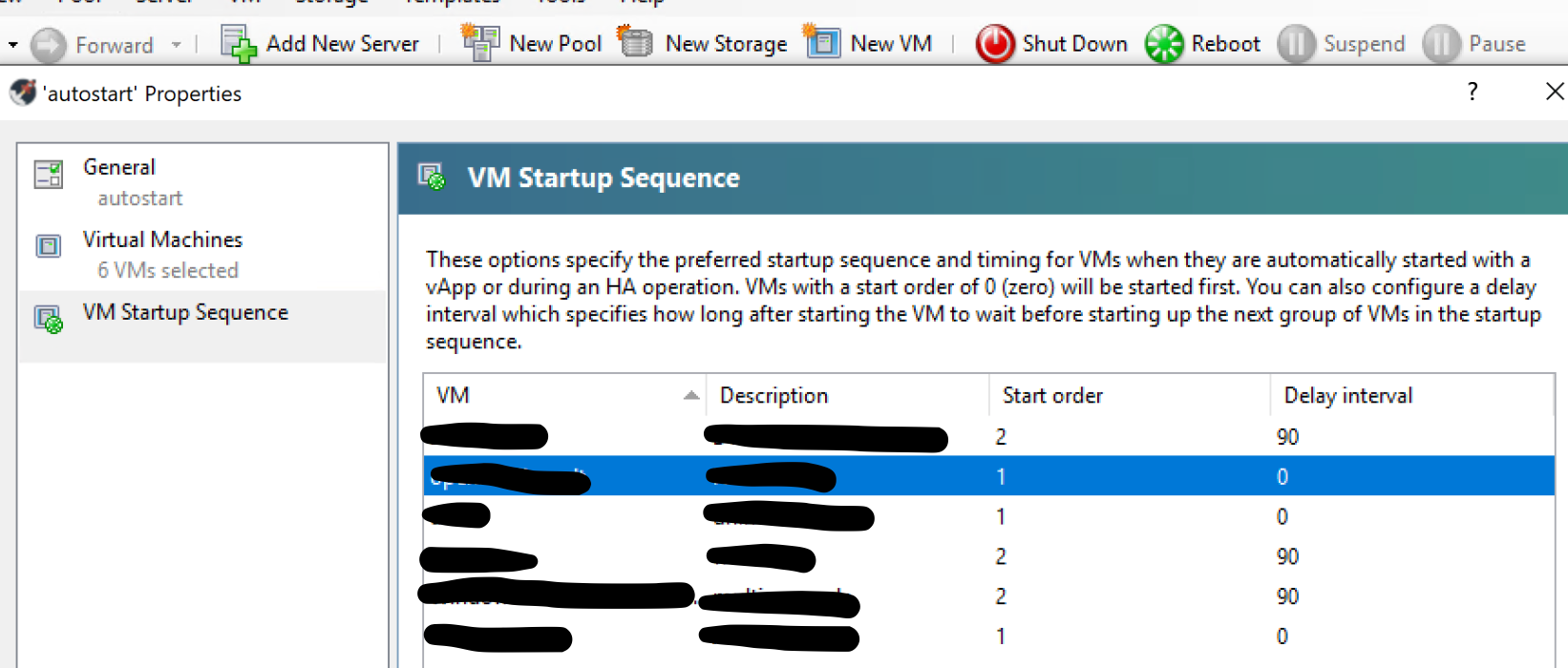

Unfortunately, "start delay" is not working as expected. The function you marked above changes the start delay of an existing "vApp". Here is an example of my setup:

The value would later change the "Delay interval" via XOA, nothing else. Otherwise, the vApp feature is also not working on my XCP-ng installation; I think it was never really tested.

If you want to implement start delays for your VMs, you can follow this guide:

- define a vApp for autostart in XCP-ng Center, including the start order

- find out the UUID of the vApp:

  ```
  xe appliance-list
  ```

- write an autostart script containing:

  ```
  #!/bin/sh
  xe appliance-start uuid=uuid-autostart-vApp
  ```

- implement a new systemd service in /etc/systemd/system/autostart.service:

  ```
  [Unit]
  Description=autostart script for boot VM
  After=graphical.target

  [Service]
  Type=simple
  ExecStart=/path/to/your/autostart-script.sh
  TimeoutStartSec=0

  [Install]
  WantedBy=default.target
  ```

- enable the service:

  ```
  systemctl enable autostart.service
  ```

Editing the boot delay time is then possible via XOA, which is already a nice feature for "fine tuning" or for adapting when new VMs are added to the autostart vApp.

@olivierlambert would it make sense to open an additional feature request? The vApp implementation was discussed several times with no "final statement", I think.

When I have a pool without HA, how could I use this script?

I thought about putting the script on the master server. However, during maintenance, when a second node becomes the master, will I have to recreate the script?
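One possible approach, which is an assumption on my part and not something confirmed in this thread, is to install the same script and service on every host and have the script act only when the host it runs on is the current pool master. The sketch below compares the host's own UUID from `/etc/xensource-inventory` with the master UUID reported by `xe pool-list`. The function names and the `INVENTORY` variable are made up for illustration, and `uuid-autostart-vApp` is the same placeholder as in the guide.

```shell
#!/bin/sh
# Hypothetical variant of the autostart script: same file on every host,
# but only the current pool master starts the vApp.
INVENTORY="${INVENTORY:-/etc/xensource-inventory}"

# UUID of the host this script runs on (INSTALLATION_UUID='...' in the file).
local_host_uuid() {
    sed -n "s/^INSTALLATION_UUID='\(.*\)'\$/\1/p" "$INVENTORY"
}

# UUID of the current pool master as reported by xapi.
pool_master_uuid() {
    xe pool-list params=master --minimal
}

# Start the vApp only if this host is the master; otherwise do nothing.
start_vapp_if_master() {
    if [ "$(local_host_uuid)" = "$(pool_master_uuid)" ]; then
        xe appliance-start uuid="$1"
    fi
}
```

The script would then end with a call such as `start_vapp_if_master uuid-autostart-vApp`, so nothing has to be recreated when the master role moves to another host.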

-

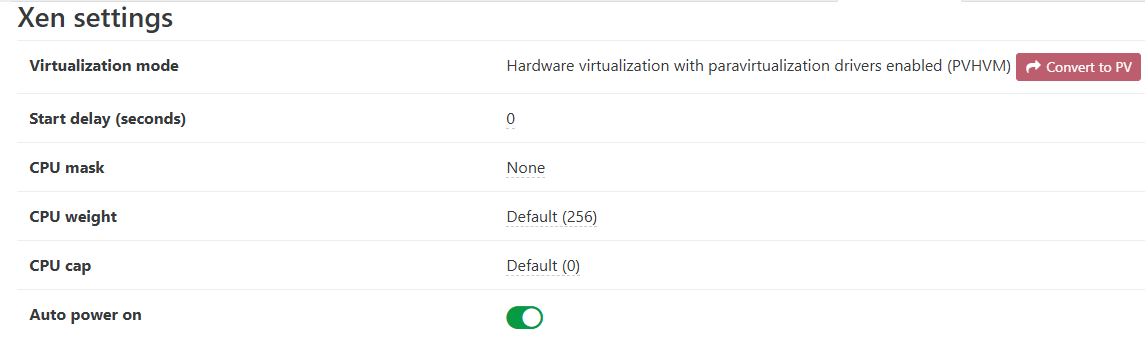

RE: Question about "auto power on"

@Davidj-0 From what I understand, the XCP-ng host must already be turned on. The VM will only start at the defined time if, for some reason, it has been turned off previously.

My plan was to have the servers power up when they received power. Then the "auto power on" XOCE VM would start and, via scheduling, power on other VMs in a predefined order.

Does the "Appliances" feature only exist in the XCP-ng command line or in the XCP-ng Center?

According to this link this feature does not exist on XO: https://xcp-ng.org/forum/topic/6311/managing-vapps-with-xoa

-

RE: Question about "auto power on"

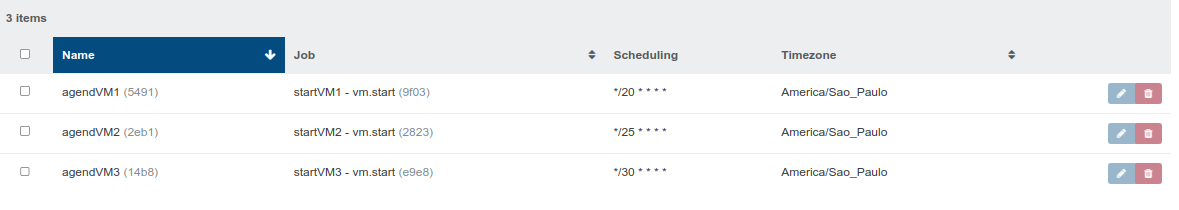

@karlisi To test, I created 3 schedules to start 3 VMs respectively. My goal was to test the startup order. Afterwards I restarted the XO VM. I noticed that the VMs start earlier than expected. How does XO time the VM starts?

I did not define a timeout in the job. Below are the schedules for each VM.

-

RE: Question about "auto power on"

@DustinB I would like the VMs to boot automatically and in order when the servers are powered on.

Do you know if there is any material about Jobs on the XO, for starting VMs?

-

RE: Question about "auto power on"

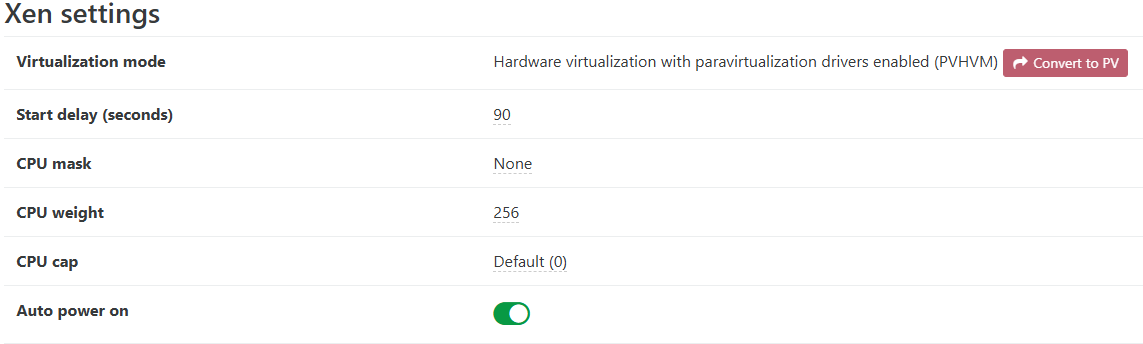

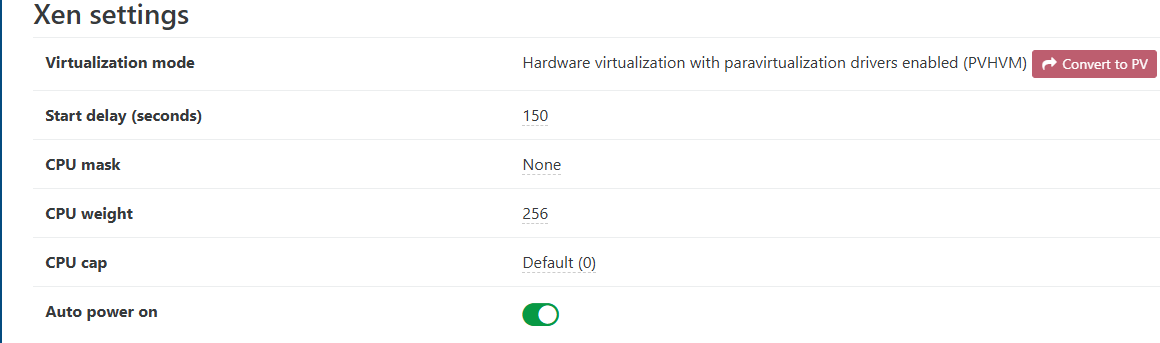

@DustinB I have it set up like this:

VM1: 0 seconds delay

VM2: 90 seconds delay

VM3: 150 seconds delay

However, in the test, VM1 started and then VM3. VM2 took longer to start.

I would like to set long delays because some VMs communicate with services on other VMs, so the order is important.

See the configuration:

-

RE: Question about "auto power on"

@DustinB Thanks for your reply. I will try to use the "delay" time.

-

Question about "auto power on"

Hello. I'm trying to configure VMs in a pool to start automatically in XCP-ng. Is it possible to define a boot order for VMs in XOA?

Link I consulted:

-

RE: VM migration time

@Greg_E At this time we do not have the financial resources to purchase new boards.

-

RE: VM migration time

@nikade @olivierlambert I created two Linux VMs and ran the bandwidth and disk tests below:

Testing using Dell Storage Network and local server volumes:

```
# iperf -c IPServer -r -t 600 -i 60
------------------------------------------------------------
Server listening on TCP port 5001
TCP window size: 128 KByte (default)
------------------------------------------------------------
------------------------------------------------------------
Client connecting to IPServer, TCP port 5001
TCP window size: 16.0 KByte (default)
------------------------------------------------------------
[ 1] local IPClient port 54762 connected with IPServer port 5001 (icwnd/mss/irtt=14/1448/1321)
[ ID] Interval Transfer Bandwidth
[ 1] 0.0000-60.0000 sec 51.6 GBytes 7.39 Gbits/sec
[ 1] 60.0000-120.0000 sec 50.9 GBytes 7.28 Gbits/sec
[ 1] 120.0000-180.0000 sec 51.2 GBytes 7.33 Gbits/sec
[ 1] 180.0000-240.0000 sec 49.1 GBytes 7.03 Gbits/sec
[ 1] 240.0000-300.0000 sec 50.8 GBytes 7.27 Gbits/sec
[ 1] 300.0000-360.0000 sec 48.8 GBytes 6.99 Gbits/sec
[ 1] 360.0000-420.0000 sec 51.7 GBytes 7.41 Gbits/sec
[ 1] 420.0000-480.0000 sec 49.2 GBytes 7.05 Gbits/sec
[ 1] 480.0000-540.0000 sec 50.1 GBytes 7.17 Gbits/sec
[ 1] 540.0000-600.0000 sec 50.0 GBytes 7.16 Gbits/sec
[ 1] 0.0000-600.0027 sec 503 GBytes 7.21 Gbits/sec
[ 2] local IPClient port 5001 connected with IPServer port 33924
[ ID] Interval Transfer Bandwidth
[ 2] 0.0000-60.0000 sec 50.8 GBytes 7.28 Gbits/sec
[ 2] 60.0000-120.0000 sec 51.4 GBytes 7.36 Gbits/sec
[ 2] 120.0000-180.0000 sec 52.4 GBytes 7.51 Gbits/sec
[ 2] 180.0000-240.0000 sec 50.3 GBytes 7.21 Gbits/sec
[ 2] 240.0000-300.0000 sec 50.4 GBytes 7.22 Gbits/sec
[ 2] 300.0000-360.0000 sec 51.0 GBytes 7.30 Gbits/sec
[ 2] 360.0000-420.0000 sec 50.6 GBytes 7.24 Gbits/sec
[ 2] 420.0000-480.0000 sec 50.4 GBytes 7.22 Gbits/sec
[ 2] 480.0000-540.0000 sec 50.1 GBytes 7.18 Gbits/sec
[ 2] 540.0000-600.0000 sec 50.9 GBytes 7.29 Gbits/sec
[ 2] 0.0000-600.0125 sec 508 GBytes 7.28 Gbits/sec
```

Test using Dell Storage volumes and networking:

```
# iperf -c IPServer -r -t 600 -i 60
------------------------------------------------------------
Server listening on TCP port 5001
TCP window size: 128 KByte (default)
------------------------------------------------------------
------------------------------------------------------------
Client connecting to IPServer, TCP port 5001
TCP window size: 16.0 KByte (default)
------------------------------------------------------------
[ 1] local IPClient port 50006 connected with IPServer port 5001 (icwnd/mss/irtt=14/1448/4104)
[ ID] Interval Transfer Bandwidth
[ 1] 0.0000-60.0000 sec 33.7 GBytes 4.82 Gbits/sec
[ 1] 60.0000-120.0000 sec 33.3 GBytes 4.77 Gbits/sec
[ 1] 120.0000-180.0000 sec 33.4 GBytes 4.78 Gbits/sec
[ 1] 180.0000-240.0000 sec 36.1 GBytes 5.16 Gbits/sec
[ 1] 240.0000-300.0000 sec 36.7 GBytes 5.25 Gbits/sec
[ 1] 300.0000-360.0000 sec 32.8 GBytes 4.69 Gbits/sec
[ 1] 360.0000-420.0000 sec 33.4 GBytes 4.78 Gbits/sec
[ 1] 420.0000-480.0000 sec 34.5 GBytes 4.93 Gbits/sec
[ 1] 480.0000-540.0000 sec 35.3 GBytes 5.05 Gbits/sec
[ 1] 540.0000-600.0000 sec 34.3 GBytes 4.91 Gbits/sec
[ 1] 0.0000-600.0239 sec 343 GBytes 4.92 Gbits/sec
[ 2] local IPClient port 5001 connected with IPServer port 52714
[ ID] Interval Transfer Bandwidth
[ 2] 0.0000-60.0000 sec 35.7 GBytes 5.12 Gbits/sec
[ 2] 60.0000-120.0000 sec 31.6 GBytes 4.53 Gbits/sec
[ 2] 120.0000-180.0000 sec 30.3 GBytes 4.34 Gbits/sec
[ 2] 180.0000-240.0000 sec 35.1 GBytes 5.02 Gbits/sec
[ 2] 240.0000-300.0000 sec 37.9 GBytes 5.42 Gbits/sec
[ 2] 300.0000-360.0000 sec 37.5 GBytes 5.37 Gbits/sec
[ 2] 360.0000-420.0000 sec 37.5 GBytes 5.37 Gbits/sec
[ 2] 420.0000-480.0000 sec 37.1 GBytes 5.31 Gbits/sec
[ 2] 480.0000-540.0000 sec 33.9 GBytes 4.86 Gbits/sec
[ 2] 540.0000-600.0000 sec 35.0 GBytes 5.00 Gbits/sec
[ 2] 0.0000-600.0036 sec 352 GBytes 5.03 Gbits/sec
```

Dell storage disk testing for each VM:

```
dd if=/dev/zero of=/tmp/teste1.img bs=1G count=1 oflag=dsync
1+0 records in
1+0 records out
1073741824 bytes (1,1 GB, 1,0 GiB) copied, 15,3566 s, 69,9 MB/s
```

```
dd if=/dev/zero of=/tmp/teste1.img bs=1G count=1 oflag=dsync
1+0 records in
1+0 records out
1073741824 bytes (1,1 GB, 1,0 GiB) copied, 19,0043 s, 56,5 MB/s
```

Server local disk test for each VM:

```
dd if=/dev/zero of=/tmp/teste1.img bs=1G count=1 oflag=dsync
1+0 records in
1+0 records out
1073741824 bytes (1,1 GB, 1,0 GiB) copied, 6,88148 s, 156 MB/s
```

```
dd if=/dev/zero of=/tmp/teste1.img bs=1G count=1 oflag=dsync
1+0 records in
1+0 records out
1073741824 bytes (1,1 GB, 1,0 GiB) copied, 5,83594 s, 184 MB/s
```
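As a cross-check on the dd figures, the rate dd reports is simply bytes written divided by elapsed seconds, expressed in decimal megabytes. The small helper below (a made-up name, `throughput_mbs`) redoes that arithmetic with awk so runs can be compared side by side. It expects a decimal point, while the dd output here uses a decimal comma because of the locale.

```shell
#!/bin/sh
# Hypothetical helper: MB/s (10^6 bytes per second) from bytes and seconds.
# Arguments: $1 = bytes copied, $2 = elapsed seconds (decimal point, not comma).
throughput_mbs() {
    # Force the C locale so awk parses and prints "." as the decimal separator.
    LC_ALL=C awk -v b="$1" -v s="$2" 'BEGIN { printf "%.1f\n", b / s / 1000000 }'
}
```

For example, `throughput_mbs 1073741824 15.3566` prints `69.9`, matching the first Dell storage run, and `throughput_mbs 1073741824 6.88148` prints `156.0` for the first local disk run.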